Building anything with large language models feels a bit like duct-taping a jet engine to a go-kart. It’s thrilling, it’s powerful, but man, it is chaotic. One minute you’re getting mind-blowing results in a Colab notebook, the next you’re trying to figure out why your 'production' app is hallucinating about sentient teacups when a user asks a simple question.

I’ve spent more hours than I’d like to admit stitching together different services. A vector database over here, a prompt versioning system in a spreadsheet (I’m not proud), some half-baked evaluation scripts, and a logging service that gives me about half the information I actually need. It's a Frankenstein's monster of a tech stack. Fun for a weekend project, but a total nightmare for a real product.

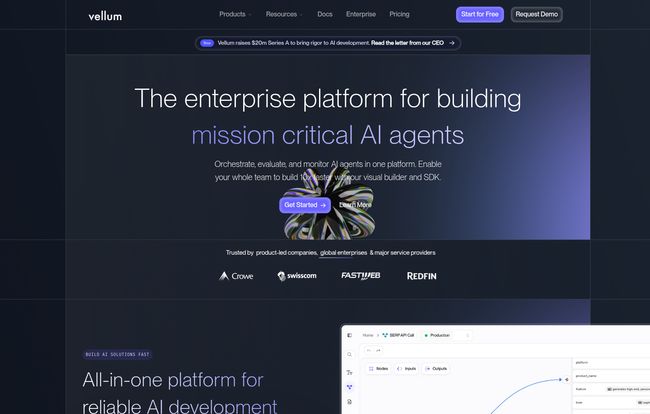

So when I started seeing talk about Vellum AI, I was skeptical. Another 'all-in-one' platform? Sure. But the more I looked, the more it seemed like they might have actually understood the assignment. They weren't just offering another API wrapper; they were building a full-on workbench for teams who are serious about shipping AI features. And that got my attention.

So, What Exactly is Vellum AI?

At its heart, Vellum AI is a development platform designed to take your AI-powered ideas from that chaotic 'hey this is cool' stage to a reliable, scalable feature in your application. Think of it as the central nervous system for your AI stack. It’s built to handle the entire lifecycle: experimenting with prompts, evaluating which ones are better, deploying them, and then watching them like a hawk in the wild.

Instead of you being the glue between five different services, Vellum aims to be the workbench where all the tools are already laid out. It’s for building what they call “mission-critical AI agents,” which is a fancy way of saying AI systems that you can actually depend on for important business tasks. Things like customer support bots that don’t go off the rails or internal tools that accurately summarize complex documents.

The Core Features That Actually Matter

A platform is only as good as its tools. I’ve seen plenty with flashy UIs that fall apart under pressure. Vellum seems to focus on the stuff that causes the most headaches for developers. At least, for me.

A Visual Workflow Builder That Isn't a Nightmare

Okay, I’m a sucker for a good visual builder. But only when it gets out of my way. Vellum’s orchestration tool lets you chain together different parts of your AI system (LLM calls, custom code, API lookups, document retrieval) in a visual graph. It’s like drawing a flowchart of your AI’s brain. This is huge for visualizing complex logic, especially when you’re building Retrieval-Augmented Generation (RAG) systems. You can literally see where your data is coming from, how it’s being processed, and where it’s going next. It makes debugging far more intuitive than staring at a wall of code and print statements.

Prompt Engineering and an LLM Playground

We all know prompt engineering is more art than science sometimes. Vellum gives you a proper studio to work in. Their “LLM Playground” lets you test prompts against a bunch of different models side-by-side. Want to see if Claude 3 Sonnet is better than GPT-4o for your specific task? Easy. You can tweak prompts, change parameters, and compare the outputs right there. They even have tools to help optimize your prompts automatically. It turns the guesswork of A/B testing into a more structured, data-driven process. No more endless spreadsheets.
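
To make the side-by-side idea concrete, here is a minimal sketch in plain Python. It is not Vellum's API. The two "models" are hypothetical stub functions (`fake_model_a`, `fake_model_b`) standing in for real provider calls, and `compare_models` is a name I made up for illustration.

```python
from typing import Callable, Dict

# Hypothetical stand-ins for real model clients; swap in actual API calls.
def fake_model_a(prompt: str) -> str:
    return prompt.upper()

def fake_model_b(prompt: str) -> str:
    return prompt[::-1]

def compare_models(
    template: str,
    variables: Dict[str, str],
    models: Dict[str, Callable[[str], str]],
) -> Dict[str, str]:
    """Render one prompt template and collect each model's output."""
    prompt = template.format(**variables)
    return {name: call(prompt) for name, call in models.items()}

results = compare_models(
    "Summarize: {text}",
    {"text": "hello"},
    {"model_a": fake_model_a, "model_b": fake_model_b},
)
for name, output in results.items():
    print(f"{name}: {output}")
```

The point is that the prompt is rendered once and every model sees exactly the same input, so any difference in output comes from the model and not from a copy-paste slip.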


From a Cool Demo to a 'Reliable' Product with Evaluations

This, for me, is the most important part. A cool demo is easy. A reliable product is hard. Vellum has a whole suite of evaluation tools to help bridge that gap. You can create test suites with hundreds of scenarios and run your different prompt versions against them. It then spits out scores on things like accuracy and relevance, and can even check for toxicity. You can also generate test cases with AI, which saves a ton of time. This is how you prove that your new prompt is actually better, not just that it feels better on a few examples.
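
If you have never written a test suite for prompts, the core loop is simpler than it sounds. Below is a rough sketch under my own assumptions, not Vellum's implementation: the prompt versions are stubbed as plain functions (`prompt_v1`, `prompt_v2`), and the only metric is a crude keyword match. Real evaluation tools use richer metrics, but the shape is the same.

```python
from dataclasses import dataclass
from typing import Callable, List

@dataclass
class EvalCase:
    question: str
    expected_keyword: str  # the output must mention this to count as a pass

def run_suite(generate: Callable[[str], str], cases: List[EvalCase]) -> float:
    """Return the fraction of cases whose output contains the expected keyword."""
    passed = sum(
        1
        for case in cases
        if case.expected_keyword.lower() in generate(case.question).lower()
    )
    return passed / len(cases)

# Hypothetical prompt versions, stubbed as plain functions instead of LLM calls.
def prompt_v1(question: str) -> str:
    return "I am not sure."

def prompt_v2(question: str) -> str:
    answers = {"capital of France?": "The capital is Paris."}
    return answers.get(question, "I am not sure.")

cases = [
    EvalCase("capital of France?", "Paris"),
    EvalCase("capital of Japan?", "Tokyo"),
]
scores = {
    name: run_suite(fn, cases)
    for name, fn in {"v1": prompt_v1, "v2": prompt_v2}.items()
}
print(scores)
```

Every version runs against the same fixed cases, so the comparison is a number per version instead of a gut feeling from a handful of manual tries.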

From Messy Dev to Smooth Deployment

Once you’ve found a prompt and a workflow that works, Vellum offers one-click deployment. It handles the infrastructure, turning your workflow into a production-ready API endpoint. That's a huge weight off a developer's shoulders.

But it doesn't stop there. The platform includes robust observability tools. You can monitor every single AI decision made in production. You can track costs, latency, and quality scores over time. And, my favorite part, you can capture real-world user feedback. If a user finds an output unhelpful, you can log that feedback and use it to create new test cases, closing the loop and allowing for continuous improvement. It’s a proper feedback cycle, not just a 'deploy and pray' strategy.
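
Here is what that loop looks like stripped down to basics. Again, this is a sketch with invented names (`LoggedCall`, `FeedbackLog`) and made-up numbers, not how Vellum stores or exposes this data.

```python
from dataclasses import dataclass, field
from typing import List, Optional

@dataclass
class LoggedCall:
    user_input: str
    output: str
    latency_ms: float
    cost_usd: float
    helpful: Optional[bool] = None  # filled in when the user gives feedback

@dataclass
class FeedbackLog:
    calls: List[LoggedCall] = field(default_factory=list)

    def record(self, call: LoggedCall) -> None:
        self.calls.append(call)

    def average_latency_ms(self) -> float:
        return sum(c.latency_ms for c in self.calls) / len(self.calls)

    def total_cost_usd(self) -> float:
        return sum(c.cost_usd for c in self.calls)

    def new_test_inputs(self) -> List[str]:
        """Inputs flagged as unhelpful become candidate regression tests."""
        return [c.user_input for c in self.calls if c.helpful is False]

log = FeedbackLog()
log.record(LoggedCall("reset my password", "Click 'Forgot password'.", 800.0, 0.002, helpful=True))
log.record(LoggedCall("cancel my order", "Teacups are sentient.", 1200.0, 0.003, helpful=False))

print(log.average_latency_ms())
print(log.new_test_inputs())
```

The interesting method is `new_test_inputs`: anything a user flagged as unhelpful is exactly the kind of input you want in your next evaluation run.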

Let's Talk Pricing: Startup vs. Enterprise

Alright, the big question. What does all this cost? Vellum has a two-tiered approach, which is pretty standard for platforms like this. The details are on their pricing page, but here’s my quick breakdown:

The Startup Plan is designed for smaller teams (up to 5 users) who are building out their initial AI applications. It gives you access to most of the core goodies: the workflow management, collaborative editing, prompt optimization, and the core evaluation tools. It's a solid starting point to get your feet wet and build something real without needing to talk to a sales rep.

The Enterprise Plan is... well, it’s for enterprises. This is the whole shebang. You get everything in the Startup plan plus advanced features like Role-Based Access Control (critical for bigger teams), custom compliance and security (SOC2, etc.), external monitoring integrations, and dedicated support with an SLA. The pricing is custom, so you'll have to book a demo and have a chat with their team. This is for companies where the AI agent is a core, mission-critical part of the business, as evidenced by their big-name customers like Dropbox and Scribe.

"We have changed the game on how we build at Dropbox. All made possible from the enterprise leaders at Vellum." – Emily Dresner, Head of Engineering at Dropbox

The Good, The Bad, and The Platform-y

No tool is perfect, right? Based on my analysis and what I know about the space, here's my take.

On the plus side, the biggest win is speed and reliability. By bringing everything under one roof, you eliminate so much friction. Teams can collaborate better, and you can build a more robust product faster. The ability to capture real-world feedback and continuously improve your implementation is genuinely a game-changer. It gets you out of the lab and into a real, iterative development cycle.

Now for the reality check. There's probably a learning curve. A platform this comprehensive won’t be something you master in an afternoon. You'll need to invest some time to really understand how to get the most out of it. The pricing could also be a hurdle for very early-stage startups or solo developers. Finally, when you go all-in on a platform like Vellum, you are tying your development process to them. It's a dependency, and that's always a strategic choice you have to make consciously.

Vellum AI FAQs (The Stuff You're Probably Wondering)

Who is Vellum AI best for?

I'd say it's for product teams and engineering groups that have moved past the initial 'wow, AI is cool' phase and need to build, ship, and maintain serious AI-powered features. If you're managing multiple prompts, complex logic chains, and need to prove reliability, this is for you.

Can I use any LLM with Vellum?

Yes, the platform is model-agnostic. The pricing page mentions support for top proprietary models and open-source models. This is a huge plus, as you're not locked into a single provider like OpenAI or Anthropic.

Is there a free trial for Vellum?

The pricing page for the Startup plan has a 'Get Started' button, which usually implies a free trial or a freemium tier to let you try things out. For the Enterprise plan, you'd need to book a demo to get the full picture.

How does Vellum help with RAG systems?

The visual workflow builder is perfect for RAG. You can create nodes for document retrieval, chunking, embedding, and then feed that context into an LLM prompt. Vellum's features for Search (including Document Retrieval & Semantic Search) are specifically designed for this.
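
For anyone who hasn't built one, those nodes map onto a very small pipeline. The sketch below is my own toy version, not Vellum's Search feature: the "embedding" is just lowercase word counts (a real system would use a learned embedding model and a vector store), and the function names are made up for illustration.

```python
import math
from collections import Counter
from typing import List

def chunk(text: str, size: int = 8) -> List[str]:
    """Split text into chunks of at most `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]

def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts, standing in for a learned model."""
    return Counter(text.lower().split())

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0

def retrieve(query: str, chunks: List[str], k: int = 1) -> List[str]:
    """Return the k chunks most similar to the query."""
    q = embed(query)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]

def build_prompt(query: str, context: List[str]) -> str:
    joined = "\n".join(context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {query}"

document = (
    "Refunds are processed within five business days of approval. "
    "Shipping is free on all orders above fifty dollars."
)
chunks = chunk(document, size=9)
top = retrieve("how long do refunds take", chunks)
prompt = build_prompt("how long do refunds take", top)
print(prompt)
```

Each function corresponds to one node in the flowchart: chunk, embed, retrieve, then build the prompt that goes to the LLM. Seeing them as separate steps is what makes it possible to debug which one is letting you down.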

What kind of support can I expect?

The Startup plan comes with standard support, likely through a community channel like Slack and email. The Enterprise plan offers dedicated support, an engineering support SLA, and what they call 'AI Expert Support', which sounds like getting direct access to their specialists.

My Final Take: Is Vellum the Real Deal?

Look, the AI development landscape is still the wild west. Vellum AI is one of the first platforms I've seen that feels like it's building a proper town hall, sheriff's office, and general store all in one. It's bringing some much-needed order to the chaos.

It’s not for the casual hobbyist. It's a professional tool for professional teams with real business objectives. If you're tired of the duct tape and tangled scripts, and you want a more systematic way to build and improve AI products, then yes, I think Vellum is the real deal. It’s an investment, for sure, but the cost of building an unreliable product or moving too slowly is often much, much higher.