Building anything with AI these days feels like assembling Frankenstein's monster. You've got your application database over here (probably good ol' Postgres). Then you need a vector database over there (hello, Pinecone or Weaviate subscription). Oh, and don't forget the API calls to OpenAI or a self-hosted LLM on yet another server. It's a mess of network calls, security concerns, and multiplying bills. My head hurts just thinking about it.

For years, the mantra has been to separate concerns. Specialized tools for specialized jobs. But I've been seeing a trend bubbling up, a sort of counter-reformation, that asks a simple question: what if we just... didn't? What if we brought the AI to the data?

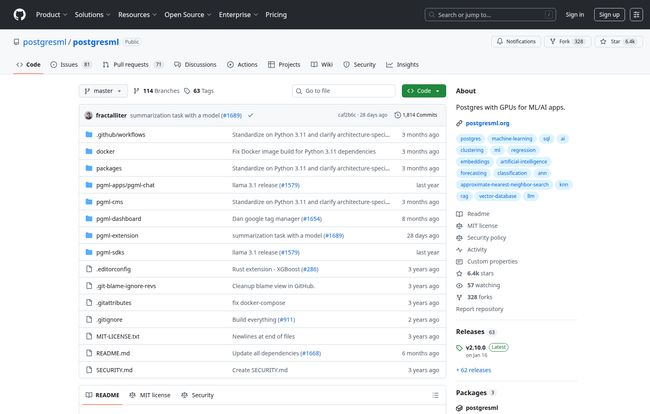

That's the promise of PostgresML. And I've got to say, it’s a pretty compelling one.

So What on Earth is PostgresML?

In the simplest terms, PostgresML is a super-powered extension for PostgreSQL. It's not a separate program. It's not another service you have to plumb into your system. You install it, and suddenly your trustworthy, slightly boring database can train models, generate vector embeddings, and run LLMs. It's like your reliable Toyota Camry suddenly has a GPU under the hood and can do 0-60 in three seconds.

The whole idea is to stop shuffling your data all over the internet. Instead of your app fetching data from Postgres, sending it to a model for processing, getting it back, then maybe sending it to a vector database for storage... you just do it all with a few SQL commands. Or with their Python and JavaScript SDKs, if that's more your speed.

It’s an MLOps platform that lives inside your database. A wild concept, I know.


The All-in-One Dream: Consolidating That Messy AI Stack

The biggest win here, the thing that made me sit up and pay attention, is the simplification. The modern AI stack is often a fragile house of cards. PostgresML proposes turning that house of cards into a single, solid brick.

Let’s look at a typical RAG (Retrieval-Augmented Generation) setup:

| Traditional Stack | PostgresML Stack |
|---|---|
| Application Server (Node.js/Python) | Application Server (Node.js/Python) |
| PostgreSQL Database (for user data) | PostgresML Database (for user data, vectors, and models) |
| Vector Database (e.g., Pinecone) | (Included) |
| Embedding Model (e.g., Hugging Face) | (Included) |
| LLM (e.g., OpenAI API or self-hosted) | (Included) |

See the difference? You’re collapsing at least three separate components into one. This isn’t just about making your architecture diagram look cleaner. It has real-world consequences. We're talking less network latency because your data doesn't have to travel. We're talking about a smaller attack surface for security. And, my favorite part, we're talking about fewer monthly bills to justify to the finance department. A single, manageable cost. What a concept.

Getting Your Hands Dirty With PostgresML's Functions

Okay, so it sounds great in theory. But how does it actually work? It all comes down to a few powerful functions they've added to SQL.

Imagine you’re building a chatbot that answers questions based on your company's internal documents. With PostgresML, the workflow might look something like this, right inside your database:

- First, you'd use the `pgml.embed()` function. You'd point it at your documents, choose a model like 'all-MiniLM-L6-v2', and it would generate vector embeddings that you store in a column right next to the source rows. No need to pipe gigs of data to an external service.
- Then, when a user asks a question, you'd embed the query the same way and run a vector similarity search to find the most relevant document chunks. The SDKs have exposed this step as `vector_recall`; in plain SQL it is typically a pgvector distance query. It's semantic search, built right in.
- Finally, you'd package that context up and feed it to an LLM using the `pgml.transform()` function. You can call open-source models like Mistral or Llama that you've loaded directly into the database. The model generates the answer, and you pass it back to the user.
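The three steps above can be sketched as parameterized SQL, wrapped here in small Python helpers so the statements can be handed to whatever driver you use. Treat this as a rough sketch under assumptions, not the project's canonical recipe: the `documents` table, its `body` and `embedding` columns, the LLM model id, and the prompt layout are all hypothetical, and the retrieval step assumes the pgvector extension (`<=>` is its cosine-distance operator) is installed alongside PostgresML. Nothing here talks to a database; the helpers only build the statements.

```python
import json

# All table, column, and model names below are illustrative assumptions.
EMBED_MODEL = "all-MiniLM-L6-v2"  # model named in the article; the full Hugging Face id may be required
LLM_MODEL = "mistralai/Mistral-7B-Instruct-v0.2"  # example Hugging Face model id


def embed_documents_statement():
    """Step 1: fill in embeddings for rows that do not have one yet."""
    sql = (
        "UPDATE documents "
        "SET embedding = pgml.embed(%s, body)::vector "
        "WHERE embedding IS NULL"
    )
    return sql, (EMBED_MODEL,)


def recall_statement(question, limit=5):
    """Step 2: embed the question and rank chunks by cosine distance (pgvector)."""
    sql = (
        "SELECT id, body FROM documents "
        "ORDER BY embedding <=> pgml.embed(%s, %s::text)::vector "
        "LIMIT %s"
    )
    return sql, (EMBED_MODEL, question, limit)


def generate_statement(question, chunks, max_new_tokens=256):
    """Step 3: hand the retrieved context plus the question to an LLM."""
    prompt = (
        "Context:\n" + "\n---\n".join(chunks)
        + f"\n\nQuestion: {question}\nAnswer:"
    )
    task = json.dumps({"task": "text-generation", "model": LLM_MODEL})
    args = json.dumps({"max_new_tokens": max_new_tokens})
    sql = (
        "SELECT pgml.transform("
        "task => %s::jsonb, inputs => ARRAY[%s::text], args => %s::jsonb)"
    )
    return sql, (task, prompt, args)
```

Each helper returns a `(sql, params)` pair, so with a driver such as psycopg the call would look roughly like `cur.execute(*recall_statement("How do I reset my password?"))`. Keeping the user's question as a bound parameter rather than splicing it into the SQL text avoids injection problems.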

It even supports classic machine learning. Need an XGBoost model to predict customer churn? You can use `pgml.train()` on your user data table and then `pgml.predict()` in real time. All without the data ever leaving the safety of your PostgreSQL instance.
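For the churn example, a minimal sketch of the two statements might look like the following, again built as parameterized SQL in Python. The project name, the `customer_features` table, and the `churned` label column are made up for illustration; the named arguments follow the `pgml.train()` and `pgml.predict()` calls as I understand them, so check them against the PostgresML docs for your version.

```python
def train_churn_statement(project="churn", table="customer_features",
                          target="churned"):
    """Train an XGBoost classifier on a table (names are hypothetical)."""
    sql = (
        "SELECT * FROM pgml.train("
        "project_name => %s, task => 'classification', "
        "relation_name => %s, y_column_name => %s, algorithm => 'xgboost')"
    )
    return sql, (project, table, target)


def predict_churn_statement(features, project="churn"):
    """Score one customer; `features` must follow the training column order."""
    sql = "SELECT pgml.predict(%s::text, %s::real[]) AS prediction"
    return sql, (project, [float(x) for x in features])
```

Training is something you would run occasionally, while the prediction statement is cheap enough to sit in a request path. The feature list is converted to floats up front so the driver sends a numeric array.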

The Good, The Bad, and The PostgreSQL-y

No tool is perfect, right? It's all about tradeoffs. I’ve spent enough time in this industry to know that there's no silver bullet. So, let’s get into the nitty-gritty.

The Good Stuff

I'm genuinely excited about the performance and security implications. By co-locating the data and the compute, you slash network latency. Their GitHub page claims it's significantly faster than the classic Hugging Face + Pinecone combo, and I believe it. Every millisecond counts, especially in interactive applications.

The data privacy angle is huge too. For anyone working with sensitive user information, medical records, or proprietary company data, the idea of not having to send that data to a third-party API is a massive relief. It simplifies compliance and just feels… safer.

And, of course, the cost. You're potentially eliminating your vector database subscription and reducing your model inference costs. That's real money back in your budget.

The Not-So-Good Stuff

Now for the reality check. The biggest hurdle is right in the name: PostgresML. If you and your team aren't already comfortable with PostgreSQL, there's going to be a learning curve. This is an extension, not a standalone GUI tool. You need to be willing to write some SQL.

I've also seen some chatter that loading new, non-cached models can take a moment. That's a physical limitation—you have to get the model weights onto the GPU. It’s not a deal-breaker for most, but something to be aware of if you're constantly swapping models in a production environment.
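If you want to see how much that first-load cost matters for your own setup, one rough way is to time the same call a few times in a row and compare the first run with the later ones. The helper below is a generic sketch: `run_query` is a hypothetical zero-argument callable that you would wire up to execute one model-backed statement, and nothing in it is specific to PostgresML.

```python
import time


def time_calls(run_query, repeats=3):
    """Return wall-clock seconds for each of `repeats` consecutive calls."""
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        run_query()
        timings.append(time.perf_counter() - start)
    return timings


def cold_start_penalty(timings):
    """Seconds the first call took beyond the fastest later call."""
    if len(timings) < 2:
        raise ValueError("need at least two timings")
    return timings[0] - min(timings[1:])
```

A large penalty suggests the model is being loaded on first use, in which case issuing one throwaway call when the application starts may hide the delay from real users.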

And let's be honest, while it simplifies the infrastructure, MLOps is still a complex field. PostgresML is a powerful tool, but it won’t magically teach you the principles of model validation or feature engineering. It makes the execution easier, but the strategic thinking is still on you.

So, What's This Going to Cost Me?

This is where it gets interesting. They have two main paths:

- PostgresML Cloud: This is their managed service. You sign up, get a database with GPU access, and you can start building immediately. They have a free tier, which is fantastic for experimenting and getting your feet wet. For production workloads, you'd move to a paid plan, but it's all consolidated.

- Self-Hosted: It's open source, baby! If you've got your own servers (and the skills to manage them), you can grab their Docker image and run the whole show yourself. This gives you maximum control and can be very cost-effective if you're already managing your own infrastructure.

My Final Take: Is This the Future?

I think for a huge number of developers and companies, the answer is a resounding yes. PostgresML isn't trying to be the be-all and end-all for every massive, hyperscale AI company. It's a pragmatic solution for the rest of us.

It’s for the startup that needs to ship an AI feature fast without hiring a dedicated MLOps team. It's for the established company that wants to add AI capabilities to its existing PostgreSQL-backed product without a massive architectural overhaul. It's for anyone who values speed, security, and simplicity over a sprawling, complex, and expensive stack of microservices.

It might not be the right fit for everyone, but it represents a powerful, logical, and frankly, refreshing direction for the world of applied AI. It’s definitely one to watch.

Frequently Asked Questions (FAQ)

- What is PostgresML in simple terms?

- It's an extension for the PostgreSQL database that lets you perform machine learning tasks—like training models, creating vector embeddings, and running LLMs—directly inside your database using SQL commands.

- Can I use popular models like Llama or Mistral with PostgresML?

- Yes! PostgresML integrates with Hugging Face, allowing you to download and run thousands of open-source models, including popular ones from the Llama and Mistral families, directly within the platform.

- Is PostgresML a replacement for Pinecone or other vector databases?

- For many use cases, yes. It has built-in vector search capabilities (the SDKs have exposed this as `vector_recall`; in plain SQL it is typically a pgvector distance query), which can eliminate the need for a separate, dedicated vector database, thereby reducing cost and complexity.

- Do I need to be a database expert to use it?

- While it helps to be familiar with PostgreSQL and SQL, you don't need to be a deep database administrator. If you know basic SQL and have some experience with Python or JavaScript (for the SDKs), you can be very productive.

- Is PostgresML free to use?

- It can be. The project itself is open-source, so you can host it yourself for free (minus your hardware costs). Their managed cloud service also offers a generous free tier to get started.

- How does it improve performance for AI applications?

- By keeping the data, vectors, and models all in one place (the database), it dramatically reduces network latency. You're not making slow, round-trip API calls between different services; you're executing functions within a single, GPU-accelerated system.