Let’s have a little chat. Just you and me. Have you ever felt that tiny jolt of fear right before checking your cloud services bill after a week of intense AI development? That little voice in your head that whispers, “Did I accidentally leave the meter running on a firehose of tokens?” I’ve been there. We've all been there.

We’re living in this incredible new age of Large Language Models. We can build things with GPT-4o, Claude 3 Opus, and Llama 3 that felt like science fiction just a couple of years ago. But this power comes with a new kind of anxiety. The pay-as-you-go pricing model is a beast. It’s fantastic for getting started, but it can turn on you in a heartbeat. One inefficient prompt, one runaway loop in your code, and suddenly your “fun little side project” has a bill that looks like a car payment.

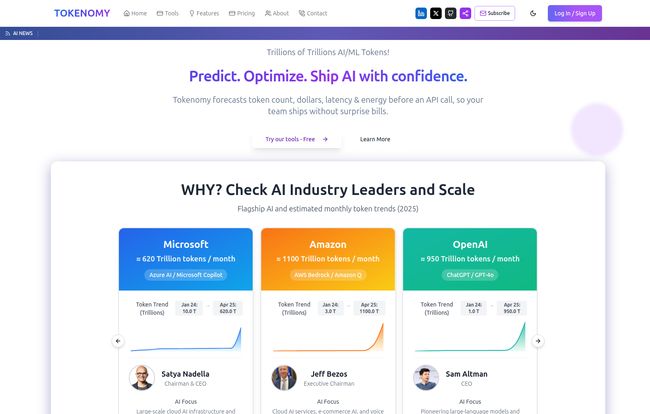

For years, I've been looking for something to put my mind at ease. A way to peek into the future, just for a second, to see the financial damage before I hit send on that API call. And folks, I think I’ve found it. It’s called Tokenomy, and it’s quickly become an indispensable part of my workflow.

So, What on Earth is Tokenomy?

At its heart, Tokenomy is an advanced AI token calculator and cost estimator. But calling it just a “calculator” is like calling a Swiss Army knife just a “knife.” It’s a whole suite of tools designed to give developers and content creators confidence when working with LLMs. Think of it as a financial crystal ball for your AI endeavors.

It lets you paste in your text or code and choose your model (or multiple models at once!). It then instantly tells you three critical things: how many tokens the input will consume, how much it will cost in actual dollars, and roughly how long it will take to process. All before you spend a single cent.
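To make the idea concrete, here is a minimal sketch of the arithmetic behind any token cost estimate. It is not Tokenomy's code. The four-characters-per-token rule is a rough heuristic for English text rather than a real tokenizer, and the two prices are hypothetical placeholders, so swap in your provider's current rates.

```python
import math

# Hypothetical prices in USD per one million tokens. Placeholders only,
# not real rates for any provider or model.
PRICE_PER_MILLION_INPUT_USD = 5.00
PRICE_PER_MILLION_OUTPUT_USD = 15.00


def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Rough token estimate from character count (a heuristic, not a tokenizer)."""
    return math.ceil(len(text) / chars_per_token)


def estimate_cost_usd(input_tokens: int, expected_output_tokens: int) -> float:
    """Input and output tokens are usually billed at different rates."""
    input_cost = input_tokens / 1_000_000 * PRICE_PER_MILLION_INPUT_USD
    output_cost = expected_output_tokens / 1_000_000 * PRICE_PER_MILLION_OUTPUT_USD
    return input_cost + output_cost


prompt = "Summarize the following support ticket in two sentences."
tokens = estimate_tokens(prompt)
# The output length is a guess you supply; it is unknown until the model replies.
cost = estimate_cost_usd(tokens, expected_output_tokens=60)
print(f"~{tokens} input tokens, ~${cost:.6f} estimated")
```

The expected output length is the soft spot in any estimate like this, because you only know the real number after the model has responded.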

The Terrifying Tale of the Runaway API Bill

I remember a project a while back. We were building a summarization feature. It worked great on our test documents, which were all neat and tidy. But when we pushed it to a staging environment with real-world, messy user data? Whoops. Some of those documents were gigantic. The token count, and the associated cost, went through the roof. It was a scramble to implement checks and balances we should have had from the start. A stressful, expensive lesson.

This is the exact problem Tokenomy aims to solve. It’s a pre-flight check for your prompts. By giving you a clear picture of token usage upfront, it helps you avoid those nasty surprises. You can actually see how a small change to your prompt can drastically alter the cost, letting you optimize for efficiency from day one, not as a panicked reaction to a shocking bill.
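Here is roughly the kind of check I wish we'd had on that summarization project. This is my own sketch, not anything from Tokenomy: `preflight_check` and `BudgetExceededError` are hypothetical names, the 8,000-token cap is arbitrary, and the token estimate is the same rough character-count heuristic rather than a real tokenizer.

```python
import math


class BudgetExceededError(Exception):
    """Raised when a document's estimated token count is over the allowed cap."""


def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Rough estimate from character count; an assumption, not a real tokenizer."""
    return math.ceil(len(text) / chars_per_token)


def preflight_check(document: str, max_input_tokens: int) -> int:
    """Return the estimated token count, or raise before any API call is made."""
    tokens = estimate_tokens(document)
    if tokens > max_input_tokens:
        raise BudgetExceededError(
            f"estimated {tokens} tokens exceeds the cap of {max_input_tokens}"
        )
    return tokens


tidy_test_doc = "A short, tidy test document. " * 10
messy_user_doc = "Real-world data is rarely this polite. " * 5000

for name, doc in [("tidy", tidy_test_doc), ("messy", messy_user_doc)]:
    try:
        print(name, "ok:", preflight_check(doc, max_input_tokens=8000))
    except BudgetExceededError as err:
        print(name, "blocked:", err)
```

Raising an exception instead of silently truncating keeps the decision visible: the caller has to choose whether to chunk the document, pick a cheaper model, or reject the request.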

A Closer Look at the Tokenomy Toolkit

This isn't just a one-trick pony. The platform is surprisingly deep, especially for something that, well, I’ll get to the price later. Let’s just say you'll be pleasantly shocked.

More Than Just a Token Counter

The core feature is the calculator, and it's brilliant. It supports all the major models you’d expect: OpenAI’s GPT family, Anthropic’s Claude models, and others. The killer feature here for me is the real-time model comparison. You can paste your text and see a side-by-side breakdown of the cost and token count for GPT-4o versus Claude 3 Sonnet, for example. This is huge for making informed decisions. Maybe Sonnet is 20% cheaper for your specific use case? Now you know, without running multiple real-world tests.
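If you want a feel for what a side-by-side comparison boils down to, here is a small sketch. The model names and prices are made up for illustration, and it assumes the same token count for every model. Real tokenizers differ between providers, so real counts will differ too.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelPrice:
    name: str
    input_usd_per_million: float
    output_usd_per_million: float


# Hypothetical models and prices, for illustration only.
MODELS = [
    ModelPrice("model-a", input_usd_per_million=5.00, output_usd_per_million=15.00),
    ModelPrice("model-b", input_usd_per_million=3.00, output_usd_per_million=15.00),
]


def compare_costs(input_tokens, output_tokens, models):
    """Return (model name, estimated USD) pairs, cheapest first."""
    rows = []
    for model in models:
        cost = (
            input_tokens * model.input_usd_per_million
            + output_tokens * model.output_usd_per_million
        ) / 1_000_000
        rows.append((model.name, cost))
    return sorted(rows, key=lambda row: row[1])


for name, cost in compare_costs(12_000, 800, MODELS):
    print(f"{name:<10} ${cost:.4f}")
```

Sorting cheapest first makes the trade-off obvious at a glance, though price alone says nothing about whether the cheaper model is good enough for the task.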

Simulating Speed and Memory Before You Commit

Cost is one thing, but performance is another. Tokenomy includes a Speed Simulator and a Memory Calculator. This moves beyond simple cost analysis into genuine performance engineering. You can get a feel for the latency of your calls and the memory footprint required, which is incredibly useful for building responsive, well-architected applications. No one wants an AI feature that makes users stare at a loading spinner for ten seconds.
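I don't know how Tokenomy's simulator works internally, but a common back-of-the-envelope latency model is time to first token plus generation time. The sketch below uses that model; the function name and the sample numbers are my own assumptions, so measure your provider's real figures before trusting the result.

```python
def estimate_latency_seconds(
    output_tokens: int,
    time_to_first_token_s: float,
    tokens_per_second: float,
) -> float:
    """Simple model: wait for the first token, then stream the rest at a fixed rate."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return time_to_first_token_s + output_tokens / tokens_per_second


# Assumed figures: 0.5 s to first token, 50 tokens generated per second.
latency = estimate_latency_seconds(
    output_tokens=500, time_to_first_token_s=0.5, tokens_per_second=50.0
)
print(f"~{latency:.1f} s for a 500-token response")
```

Because generation time scales with output length, capping the response length is often the simplest lever for keeping users away from that loading spinner.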

For the Serious Developer: APIs and Integrations

This is where Tokenomy really won me over. They get that developers live in their code editor. They offer a bunch of ways to integrate their tools directly into your workflow:

- A sleek VS Code sidebar integration means you don't even have to leave your editor to check a prompt.

- A full-featured CLI tool for those of us who love the command line.

- Cross-platform libraries for JavaScript, Python, and Ruby. You can programmatically estimate costs within your application logic.

- Even a LangChain callback for those building with that popular framework.

This shows a real understanding of the developer experience. It's not just a website; it's a utility designed to fit where you work.

Okay, So What's the Catch? The Pricing.

Alright, you've been patient. A tool this comprehensive, with API access, VS Code integration, and support for all the top models... it has to cost something, right? There's got to be a “Pro” tier for $49/month, or a credit-based system. That’s just how SaaS works.

I was hunting for the pricing page, fully expecting to see a comparison table with a free tier that was basically useless. But what I found was... well, this:

| Plan | Cost | Features |
|---|---|---|
| Free Forever | $0 /month | Unlimited calculations, model comparisons, speed/memory simulation, optimization suggestions, CSV/PDF export, all major model support. No credit card required. |

You read that right. It’s free. Completely, totally, 100% free. No hidden fees, no credit card required, no nonsense. I had to read it twice. In an industry where everything seems to have a tiered subscription, this is a massive breath of fresh air. It makes the decision to try it a complete no-brainer.

Who Is This For?

Honestly, if you're touching an LLM API, you should probably be using this. But to be more specific:

- Indie Developers & Bootstrappers: When every dollar counts, this tool is not just helpful, it's essential for budget management.

- Development Teams: It's a great way to standardize cost estimation and empower every dev on the team to be cost-conscious.

- AI Content Creators: If you're using APIs to generate content, you can now accurately forecast the cost of producing a blog post or a series of social media updates.

- Students and Learners: It’s a risk-free way to experiment and understand the real-world cost implications of different models and prompts.

Frequently Asked Questions about Tokenomy

How accurate is the Tokenomy calculator?

In my experience, it’s highly accurate for input tokens. It uses the same tokenization libraries as the models themselves (like tiktoken for OpenAI). Final billing still depends on the provider and on how long the model's response turns out to be, which no calculator can know in advance. For estimation and comparison, though, it has been close enough to prevent those billing surprises.

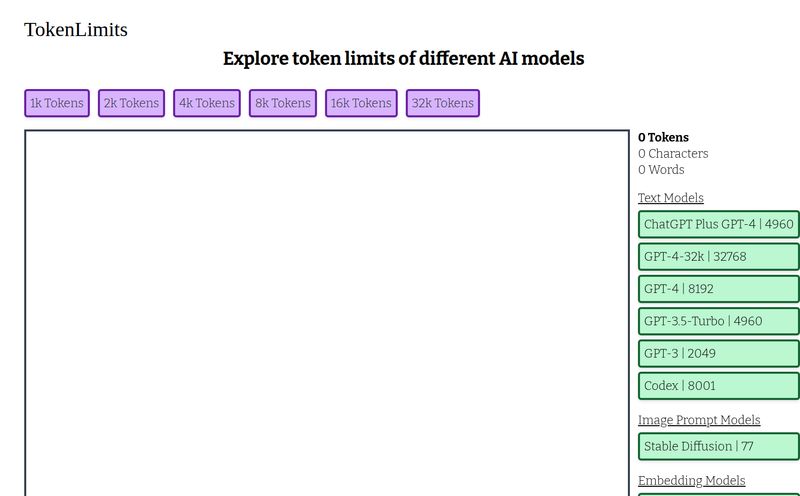

Which AI models does Tokenomy support?

It supports a wide range of the most popular models from providers like OpenAI (GPT-4, GPT-4o, GPT-3.5-Turbo), Anthropic (Claude 3 Opus, Sonnet, Haiku), Google, and more. The list is constantly updated as new models are released.

Is Tokenomy really free? How do they make money?

Yes, it's really free. The pricing page is very clear: "Free Forever" with no credit card required. As for how they make money, that's the million-dollar question. It could be a lead generation tool for a future enterprise product, or perhaps they have other business models. But for now, as users, we get to enjoy a powerful tool at zero cost.

Can I use Tokenomy directly in my code?

Absolutely. That's one of its strongest selling points. They provide a simple API and ready-to-use libraries for Python, JavaScript, and Ruby, so you can integrate cost estimation and analysis directly into your development and deployment pipelines.

What kind of optimization suggestions does it offer?

Beyond just showing you the numbers, Tokenomy provides actionable tips. For instance, it might highlight parts of your prompt that are unnecessarily verbose or suggest a different, more cost-effective model that would achieve a similar result for your specific task.
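As a tiny illustration of the "unnecessarily verbose" idea, here is one cheap trim you can do yourself: collapsing redundant whitespace before sending a prompt. This is my own example, not one of Tokenomy's suggestions, and `collapse_whitespace` is a hypothetical helper. Don't use it on prompts where whitespace is meaningful, such as indented code.

```python
import re


def collapse_whitespace(prompt: str) -> str:
    """Squeeze runs of spaces/tabs and repeated blank lines out of a prompt."""
    lines = [re.sub(r"[ \t]+", " ", line).strip() for line in prompt.splitlines()]
    kept = []
    for line in lines:
        # Keep at most one blank line in a row.
        if line == "" and kept and kept[-1] == "":
            continue
        kept.append(line)
    return "\n".join(kept).strip()


raw = "You are a helpful    assistant.   \n\n\n\nAnswer   briefly.\t\t"
trimmed = collapse_whitespace(raw)
print(f"{len(raw)} chars before, {len(trimmed)} chars after")
```

The savings per prompt are small, but they add up when the same template is sent thousands of times.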

How do I get started with Tokenomy?

Just head over to their website, tokenomy.ai. Since it's free and requires no signup for the basic tools, you can start pasting text and calculating tokens in seconds. It’s about as frictionless as it gets.

A Tool for Confident AI Development

Look, the AI space is moving at a breakneck pace. It’s exciting, but it can also be intimidating, especially from a financial perspective. Tools like Tokenomy are so important because they democratize access and reduce risk. They take away the fear of the unknown bill and replace it with the power of informed decision-making.

Giving developers a suite this powerful for free is a bold move, and one I really respect. It’s a financial seatbelt for your AI projects, a pre-flight check for your prompts, and a guide for navigating the complex world of LLM costs. If you're building with AI, do yourself a favor and give it a try. Your wallet will thank you.

Reference and Sources

- Tokenomy Official Website: https://tokenomy.ai/

- Tokenomy Pricing Information: https://tokenomy.ai/pricing

- OpenAI API Pricing: https://openai.com/pricing
