You know the feeling. You've spent an hour crafting the perfect, epic prompt. It’s a masterpiece of context, a novel of instruction, destined to make an AI sing. You paste it into the API playground or your custom app, hit enter, and... error. “Prompt exceeds maximum context length.”

Ugh. It’s the digital equivalent of stubbing your toe. All that creative momentum, gone. You’re left hacking away at your beautiful prose, trying to guess what you can cut without losing the magic. It’s a frustrating, clumsy dance I’ve done more times than I care to admit.

For a while, my solution was a clunky script and a lot of guesswork. But recently, I stumbled upon a little web tool that’s so simple, so single-minded, it's brilliant. It's called TokenLimits, and it has quickly become a permanent fixture in my browser tabs.

First Off, What in the World Are Tokens Anyway?

Before we go further, let's get this out of the way. If you’re new to the AI space, the word “token” gets thrown around a lot. Think of tokens as the building blocks of language for an AI. They aren’t quite words, and they aren’t quite characters. A good rule of thumb, especially for English, is that one token is roughly 4 characters or about ¾ of a word.

So, a word like “love” is one token. But a word like “lovingly” might be two tokens: “loving” and “ly”. And “SEO” might be three separate tokens: “S”, “E”, and “O”. It’s how the machine breaks down our language into pieces it can actually process. Every AI model, from GPT-4 to Stable Diffusion, has a hard limit on how many of these little pieces it can process at once. This is its “context window.” Go over that limit, and either the request fails with an error or the extra text gets silently cut off.
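To make the rules of thumb above concrete, here is a minimal Python sketch that estimates a token count from characters and words alone. It's an approximation for English text only, not a real tokenizer. The function name `estimate_tokens` is made up for illustration, and averaging the two heuristics is my own assumption, not something TokenLimits does.

```python
import math

def estimate_tokens(text: str) -> int:
    """Rough English-only estimate: ~4 characters per token, ~3/4 word per token."""
    if not text.strip():
        return 0
    by_chars = len(text) / 4             # about 4 characters per token
    by_words = len(text.split()) / 0.75  # about 3/4 of a word per token
    # Average the two heuristics and round up to stay on the cautious side.
    return math.ceil((by_chars + by_words) / 2)

print(estimate_tokens("Tokens are the building blocks of language for an AI."))
```

A real tokenizer will disagree with this on unusual words, code, and non-English text, which is exactly why a proper counter is handy.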

This is also super important for anyone using these models via an API, because companies like OpenAI charge per token, counting both the prompt you send and the response you get back. A bigger prompt costs more money. So knowing your token count isn’t just about avoiding errors; it’s about managing your budget.
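Since billing is per token, a back-of-the-envelope cost estimate is just multiplication. The sketch below assumes prices quoted per 1,000 tokens, with separate rates for input (prompt) and output (completion) tokens. The rates in the example are placeholders, not real OpenAI prices, so check your provider's pricing page before trusting the numbers.

```python
def estimate_cost(prompt_tokens: int, completion_tokens: int,
                  input_price_per_1k: float, output_price_per_1k: float) -> float:
    """Estimate the dollar cost of one API call.

    Prices are per 1,000 tokens and must be looked up for your model;
    prompt and completion tokens are often billed at different rates.
    """
    return ((prompt_tokens / 1000) * input_price_per_1k
            + (completion_tokens / 1000) * output_price_per_1k)

# Hypothetical prices, for illustration only.
cost = estimate_cost(prompt_tokens=3500, completion_tokens=500,
                     input_price_per_1k=0.01, output_price_per_1k=0.03)
print(f"${cost:.4f}")
```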

Introducing TokenLimits: Simplicity is its Superpower

So, what is TokenLimits? It's a website. That's it. It’s not an app you have to download or a service you need to sign up for. It’s a clean, simple webpage with one job: to count the tokens in your text.

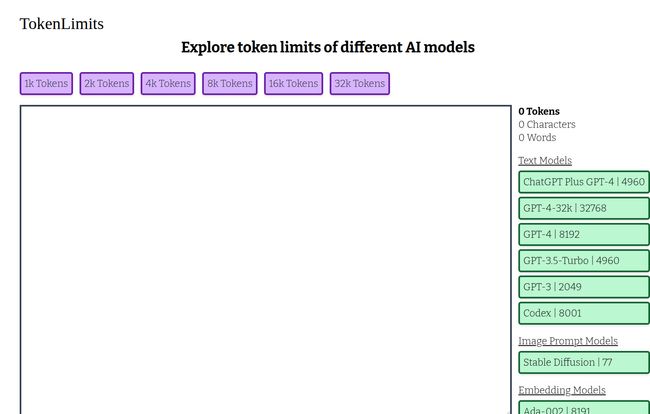

When you land on the page, you're greeted with a big, inviting text box. To the right, there’s a live counter for Tokens, Characters, and Words. Below that, a handy list of popular AI models and their corresponding maximum token limits. There's no fluff, no ads trying to sell you a crypto course, no annoying pop-ups. It’s just… simple.

You paste your text, and instantly, you know where you stand. It’s the digital measuring tape for your AI prompts.


Using the Tool is as Easy as It Sounds

I almost feel silly writing a “how-to” for this, because it’s so intuitive. You literally just copy your prompt from wherever you're writing it (a Google Doc, VS Code, your notes app) and paste it directly into the big box on the TokenLimits website. The counters on the right update in real time. You can then glance at the list of models and see if you’re in the green. For instance, if the counter says “3,500 Tokens,” your prompt fits GPT-3.5-Turbo (limit 4,096), though that leaves only about 600 tokens for the reply, and you’d definitely need to trim it for an older GPT-3 model (limit 2,049).
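If you want the same “am I in the green?” check inside a script, a small lookup table does the job. The limits below are the context windows these models launched with, and only a subset of the models TokenLimits lists is included. The dictionary keys are informal labels (not all are exact API model IDs), and `headroom` is a hypothetical helper. Treat it as a sketch and verify the numbers against current documentation.

```python
# Context-window sizes in tokens, as originally published for each model.
# Limits change as models are updated, so verify against current docs.
MODEL_LIMITS = {
    "gpt-4": 8192,
    "gpt-4-32k": 32768,
    "gpt-3.5-turbo": 4096,
    "davinci (GPT-3)": 2049,
    "text-embedding-ada-002": 8191,
    "stable-diffusion (CLIP)": 77,
}

def headroom(prompt_tokens: int, reserve_for_reply: int = 0) -> dict[str, int]:
    """Tokens left per model after the prompt and a reserved reply budget.

    A negative value means the request would not fit.
    """
    return {name: limit - prompt_tokens - reserve_for_reply
            for name, limit in MODEL_LIMITS.items()}

for name, left in headroom(3500).items():
    status = "OK" if left >= 0 else "too long"
    print(f"{name:<26}{left:>7}  {status}")
```

Reserving room for the reply, for example `headroom(3500, reserve_for_reply=1000)`, shows why a 3,500-token prompt that technically fits GPT-3.5-Turbo can still fail once you ask for a long answer.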

Which AI Models Does it Cover?

This is where the tool really shines for me. It’s not just for one specific model. The developers have included a pretty decent list of the AIs we’re all using day-to-day. As of my last check, it covers:

- Text Models: This is the main event, with support for giants like ChatGPT Plus (GPT-4), the beastly GPT-4-32k, the standard GPT-4, GPT-3.5-Turbo, and the older GPT-3. It even has Codex for you programmers out there.

- Image Prompt Models: This one was a nice surprise. It includes Stable Diffusion, which has a notoriously small token limit (just 77!). This is incredibly helpful for crafting detailed image prompts without getting them truncated.

- Embedding Models: For the more technical crowd, it also lists OpenAI’s Ada-002 embedding model.

Having them all listed right there next to your count saves you the mental gymnastics of remembering each specific limit. It’s a small detail, but it makes the workflow so much smoother.

The Good, The Bad, and The... Well, It's Free

Like any tool, it’s not perfect, but its flaws are tied directly to its greatest strength: its simplicity.

What I Absolutely Love

Honestly, I'm a sucker for tools that do one thing and do it well. TokenLimits is the embodiment of that philosophy. It's incredibly easy to use, it's fast, and it gives you the three key metrics you need: tokens, characters, and words. The multi-model support is the cherry on top. And did I mention it's completely free? There's no account, no paywall, no nonsense. In an industry full of complex, subscription-based platforms, a tool like this feels like a breath of fresh air.

Potential Room for Improvement?

Okay, let’s be real. This is a one-trick pony. It checks token limits. That’s it. It doesn’t offer suggestions for shortening your text or provide deep analytics. Also, the world of AI moves at a breakneck pace. A new model from Anthropic or Google could drop tomorrow, and TokenLimits might not add it to its list immediately. So, you might still need to look up the limits for more obscure or brand-new models. But for the mainstream workhorses like the GPT family, it's spot on.

Who is TokenLimits Really For?

I see a few groups getting a ton of value out of this:

- Content Creators & SEOs: When you're feeding huge amounts of text into an AI for summarization, rephrasing, or article generation, this is a lifesaver.

- Developers & Prompt Engineers: A must-have for anyone building apps on top of AI APIs. It's perfect for debugging and for teaching clients or junior devs about context window constraints.

- Students & Researchers: Great for interacting with AI for research papers or assignments without constantly hitting walls.

- Curious Hobbyists: If you're just playing with AI, it’s a great way to understand how these models “think” and why sometimes a shorter prompt is a better prompt.

FAQs about TokenLimits and AI Tokens

What exactly is an AI token again?

Think of it as a piece of a word. The AI breaks down your text into these tokens to understand it. On average, 100 tokens is about 75 words in English. This is the fundamental unit for both processing your request and, for paid services, calculating your bill.

Is TokenLimits really free to use?

Yes, as of this writing, it is 100% free. There's no sign-up or payment required. Just a straightforward, useful tool for the community.

Why is checking my token count so important?

Two main reasons. First, every AI model has a maximum token limit. If your prompt and the expected response exceed this limit, you'll get an error. Second, if you're using a paid API like OpenAI's, you are charged per token. Managing your token count directly manages your costs.

How accurate is the token count on TokenLimits?

It should be very accurate for the models listed, as long as it applies the same tokenization rules the model itself uses (OpenAI’s GPT models and Stable Diffusion, for example, use different tokenizers). Still, treat it as a very strong estimate. The final, official count is always what the API itself reports when your request runs, but checking here first will catch most errors before they happen.
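If you'd rather double-check a count locally, OpenAI publishes its tokenizer as the open-source `tiktoken` Python library. The sketch below uses it when it's installed and falls back to the rough 4-characters-per-token rule otherwise. The `count_tokens` wrapper and the fallback are my own additions for illustration, not part of TokenLimits.

```python
import math

def count_tokens(text: str, model: str = "gpt-4") -> int:
    """Count tokens with tiktoken when available.

    Falls back to the rough ~4-characters-per-token rule if tiktoken is not
    installed or its encoding files cannot be loaded (they are downloaded
    and cached on first use).
    """
    try:
        import tiktoken
        enc = tiktoken.encoding_for_model(model)
        return len(enc.encode(text))
    except Exception:
        # Any failure above: use the heuristic instead of crashing.
        return math.ceil(len(text) / 4)

print(count_tokens("How accurate is the token count?"))
```

Even an exact count of your text can differ slightly from what the API bills for chat models, because each message is wrapped in a few extra formatting tokens.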

Does TokenLimits work for non-English languages?

Yes, but the relationship between words and tokens changes. A single character in some languages, like Chinese, might be one or more tokens. The tool still counts the tokens correctly, but the “1 word ≈ 1.3 tokens” rule of thumb for English won't apply.
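One way to see why non-English text behaves differently: GPT-style tokenizers work on UTF-8 bytes rather than characters, and many non-Latin characters take several bytes each. This small Python sketch only compares character and byte counts. It doesn't tokenize anything; it just shows the raw material the tokenizer starts from.

```python
def char_and_byte_lengths(text: str) -> tuple[int, int]:
    """Return (characters, UTF-8 bytes) for a piece of text."""
    return len(text), len(text.encode("utf-8"))

for sample in ["hello", "你好"]:
    chars, nbytes = char_and_byte_lengths(sample)
    print(f"{sample!r}: {chars} characters, {nbytes} UTF-8 bytes")
```

Here "hello" is 5 characters and 5 bytes, while "你好" is 2 characters but 6 bytes, so character-based rules of thumb stop being reliable.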

Can I use this for my Stable Diffusion prompts?

Absolutely! And you should. Stable Diffusion has a very small context window of 77 tokens, two of which are reserved for start and end markers. It's easy to go over that with a descriptive prompt, and anything past the limit is simply cut off. Pasting your prompt into TokenLimits first is a fantastic way to ensure the model actually “sees” your entire idea.
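Here's a minimal sketch of what happens to an over-long prompt. It assumes the standard CLIP setup of a 77-token window that includes start and end markers. For simplicity it splits on whitespace, so the “tokens” are stand-in words rather than real CLIP tokens (the real tokenizer often produces more tokens than words, since punctuation gets its own tokens). `split_prompt` is a hypothetical helper.

```python
# Assumption: Stable Diffusion's CLIP text encoder reads a fixed 77-token
# window, two slots of which go to start-of-text and end-of-text markers.
CONTEXT_LENGTH = 77
SPECIAL_TOKENS = 2

def split_prompt(tokens: list[str]) -> tuple[list[str], list[str]]:
    """Split a tokenized prompt into the part the model sees and the part
    that would be truncated."""
    usable = CONTEXT_LENGTH - SPECIAL_TOKENS
    return tokens[:usable], tokens[usable:]

# Whitespace "tokens" stand in for real CLIP tokens in this illustration.
prompt = "a photo of a cat " * 20
kept, dropped = split_prompt(prompt.split())
print(f"kept {len(kept)} tokens, dropped {len(dropped)}")
print("lost:", " ".join(dropped[:10]), "...")
```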

A Simple Tool for a Complex World

In the end, we don't always need another complex platform or an all-in-one suite. Sometimes, we just need a good measuring tape. TokenLimits is exactly that. It's a sharp, simple, and incredibly practical utility that solves a common and annoying problem for anyone working with modern AI.

It won't write your prompts for you, and it won't revolutionize your workflow. What it will do is save you time, frustration, and maybe even a little bit of money. And in my book, that makes it a winner. I highly recommend bookmarking it.

References and Sources

- TokenLimits Official Website: tokenlimits.com

- OpenAI's Explanation of Tokens: What are tokens and how to count them?