Building with large language models (LLMs) feels a bit like the Wild West right now. It's exciting, chaotic, and everyone’s rushing to build the next big thing. But behind the flashy demos and mind-blowing results, there’s a grittier reality: managing the actual development process can be a downright mess.

I’ve lost count of the number of times I've had a 'genius' prompt chain working perfectly in a Jupyter notebook, only to lose it in a sea of `prompt_final_v3_really_final.txt` files. It feels like we're all writing incredible software but using Notepad as our version control. The plumbing, the orchestration, the simple act of keeping things tidy—it's a genuine headache.

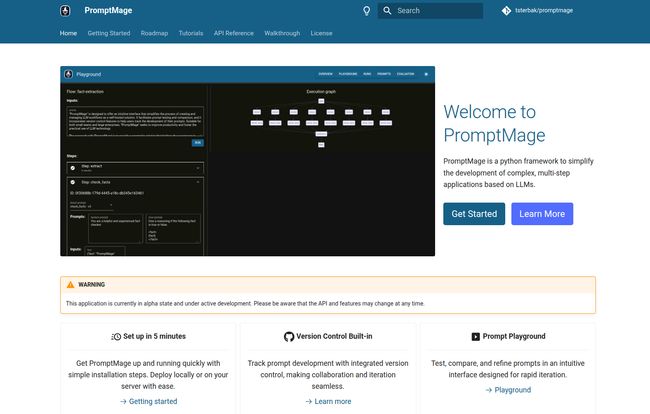

Every so often, a tool pops up that seems to whisper, “I get it. I feel your pain.” Today, that tool for me is PromptMage. It's a Python framework that isn't trying to be another LLM, but rather a sane way to manage the workflows you build around them. And the best part? It's an open-source, self-hosted solution. Let's dig in.

So, What on Earth is PromptMage?

Think of PromptMage as the director's chair for your AI project. You’ve got your cast of talented actors (the LLMs), but you need someone to orchestrate the scenes, manage the script changes, and make sure everything flows into a coherent story. PromptMage is that director.

In more technical terms, it’s a Python framework designed to simplify building complex, multi-step applications that rely on LLMs. Instead of duct-taping Python scripts and API calls together, it gives you a structured environment to create, test, and deploy your workflows. Because it’s built with Python, it feels immediately familiar to anyone already in the AI/ML space, which is a huge plus. No need to learn a whole new esoteric language. It just fits.
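To make "multi-step workflow" concrete, here is a minimal plain-Python sketch of the kind of thing you'd otherwise duct-tape together yourself: a chain of prompt steps where each step's output feeds the next one. This is not PromptMage's API. The `Step` and `Workflow` classes and the `fake_llm` stub are hypothetical stand-ins so the example runs without an API key.

```python
from dataclasses import dataclass, field
from typing import Callable


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real LLM call, returning canned answers."""
    if prompt.startswith("Extract"):
        return "fact A; fact B"
    # Echo whatever follows the first colon, prefixed with "Summary of:".
    return "Summary of: " + prompt.split(":", 1)[1].strip()


@dataclass
class Step:
    name: str
    template: str  # prompt template with a single {input} placeholder


@dataclass
class Workflow:
    steps: list[Step]
    llm: Callable[[str], str] = fake_llm
    trace: list[tuple[str, str]] = field(default_factory=list)

    def run(self, text: str) -> str:
        current = text
        for step in self.steps:
            # Each step's output becomes the next step's input.
            prompt = step.template.format(input=current)
            current = self.llm(prompt)
            self.trace.append((step.name, current))  # keep a record for debugging
        return current


wf = Workflow(steps=[
    Step("extract", "Extract the key facts from: {input}"),
    Step("summarize", "Summarize these facts: {input}"),
])
print(wf.run("Some long article text"))  # Summary of: fact A; fact B
```

Swap `fake_llm` for a real client call and you have the bare bones. Everything around this loop (tracking which prompt produced which output, comparing versions, exposing it to other code) is the part a framework like PromptMage aims to take off your plate.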


The Features That Actually Matter

A feature list is just a list. What I care about is whether those features solve real problems. After poking around PromptMage, a few things really stood out to me as being built by people who have actually been in the trenches.

A Prompt Playground That Doesn't Feel Like Work

My current prompt testing process is... let's call it artisanal. It involves a lot of copying and pasting. PromptMage offers a clean, intuitive interface they call the 'Prompt Playground'. This is where you can rapidly test and refine your prompts. It’s designed for quick iteration—tweak a word, see the result, compare it to the last version. This tight feedback loop is exactly what you need when you're trying to coax the right response out of a model. It turns a chore into a creative process.

Finally, Sanity with Version Control

This. This is the big one for me. PromptMage has version control for prompts built right in. If you're a developer, you understand why Git is non-negotiable. Building a serious AI application without prompt version control is, frankly, irresponsible. How do you roll back to a version that worked better? How do you collaborate with a teammate without overwriting their changes? PromptMage treats your prompts like the critical code they are, giving you the ability to track changes and collaborate effectively. It’s a simple concept, but its impact is massive.
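Stripped down, "version control for prompts" comes down to a few operations: commit a new version, fetch an old one, diff two versions, and roll back. Here's a hypothetical, in-memory sketch of those operations using only the standard library's `difflib`. The `PromptHistory` class is made up for illustration and makes no claim about how PromptMage stores versions internally.

```python
import difflib
from dataclasses import dataclass, field


@dataclass
class PromptHistory:
    """Hypothetical in-memory version history for one named prompt."""
    name: str
    versions: list[str] = field(default_factory=list)

    def commit(self, text: str) -> int:
        """Store a new version and return its 1-based version number."""
        self.versions.append(text)
        return len(self.versions)

    def get(self, version: int) -> str:
        if not 1 <= version <= len(self.versions):
            raise IndexError(f"{self.name} has no version {version}")
        return self.versions[version - 1]

    def rollback(self, version: int) -> int:
        """Re-commit an old version as the newest one; history is never erased."""
        return self.commit(self.get(version))

    def diff(self, a: int, b: int) -> str:
        """Unified diff between two versions, like `git diff` for a prompt."""
        return "\n".join(difflib.unified_diff(
            self.get(a).splitlines(),
            self.get(b).splitlines(),
            fromfile=f"{self.name}@v{a}",
            tofile=f"{self.name}@v{b}",
            lineterm="",
        ))


history = PromptHistory("summarize")
history.commit("Summarize the text.")
history.commit("Summarize the text in three bullet points.")
print(history.diff(1, 2))
latest = history.rollback(1)  # v2 turned out worse, so bring v1 back as v3
```

Rolling back by re-committing, rather than deleting newer versions, mirrors `git revert`: you can always see that v2 existed and that you abandoned it.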

Your Own Auto-Magical API

Okay, so you've built a brilliant multi-step LLM workflow. Now what? You need to wrap it in an API to actually use it in your application. This usually means firing up FastAPI or Flask and writing a bunch of boilerplate code. PromptMage just… does it for you. It automatically generates a FastAPI-powered API for your workflow. That’s a huge time-saver and lowers the barrier to getting your project off the ground and integrated into a real product.
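FastAPI builds request validation and interactive docs from ordinary Python type hints, which is what makes generating an API straight from a workflow function feasible. The stdlib-only sketch below shows the general idea with `inspect.signature`: read a function's parameters, derive a simple schema, and wrap the function in a JSON-in, JSON-out handler. The `describe_endpoint` and `make_handler` helpers and the toy `summarize` workflow are hypothetical; this is not PromptMage's implementation.

```python
import inspect
import json
from typing import Any, Callable


def describe_endpoint(func: Callable[..., Any]) -> dict[str, str]:
    """Derive a simple request schema (param name -> type name) from a signature."""
    schema = {}
    for name, param in inspect.signature(func).parameters.items():
        ann = param.annotation
        schema[name] = ann.__name__ if ann is not inspect.Parameter.empty else "any"
    return schema


def make_handler(func: Callable[..., Any]) -> Callable[[str], str]:
    """Wrap func so it accepts a JSON request body and returns a JSON response."""
    sig = inspect.signature(func)

    def handler(body: str) -> str:
        payload = json.loads(body)
        bound = sig.bind(**payload)  # raises TypeError on missing or unknown fields
        bound.apply_defaults()       # fill in optional parameters the client omitted
        return json.dumps({"result": func(*bound.args, **bound.kwargs)})

    return handler


def summarize(text: str, max_words: int = 5) -> str:
    """Toy stand-in for an LLM workflow: keep the first max_words words."""
    return " ".join(text.split()[:max_words])


schema = describe_endpoint(summarize)
handler = make_handler(summarize)
response = handler('{"text": "one two three four five six seven", "max_words": 3}')
print(schema)    # {'text': 'str', 'max_words': 'int'}
print(response)  # {"result": "one two three"}
```

Unlike FastAPI, which validates field types through Pydantic, this sketch only checks that the right field names are present. That gap is a good illustration of how much boilerplate a generated API saves you.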

Evaluating Prompts Like a Pro

How do you know if your new prompt is actually better, or just different? Guessing isn't a strategy. PromptMage includes an evaluation mode that lets you assess prompt performance through both manual and automatic testing. This moves you from “I think this sounds better” to “I have data showing this version performs 15% better on my test cases.” It’s the kind of discipline that separates hobby projects from professional products.
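Here's what the automatic half of that can look like in its simplest form: run each prompt version over a fixed set of labelled test cases and score the outputs. The test cases, the two prompt versions, and the `fake_llm` stub are all made up for illustration, and exact-match accuracy is just one possible metric. Treat it as a generic sketch, not PromptMage's evaluation mode.

```python
from typing import Callable

# Made-up labelled test cases: (review text, expected label).
TEST_CASES = [
    ("I love this product", "positive"),
    ("This is terrible", "negative"),
    ("Absolutely fantastic service", "positive"),
    ("Not worth the money", "negative"),
]


def fake_llm(prompt: str) -> str:
    """Hypothetical model stub: right label, but chatty unless asked for one word."""
    review = prompt.rsplit(":", 1)[1].lower()
    negative = "terrible" in review or "not worth" in review
    label = "negative" if negative else "positive"
    return label if "one word" in prompt else f"I think it's {label}."


def evaluate(template: str, llm: Callable[[str], str]) -> float:
    """Fraction of test cases where the normalized output exactly matches the label."""
    hits = 0
    for text, expected in TEST_CASES:
        output = llm(template.format(input=text)).strip().lower()
        if output == expected:
            hits += 1
    return hits / len(TEST_CASES)


v1 = "Classify the sentiment of this review: {input}"
v2 = "Classify the sentiment in one word (positive or negative): {input}"
for name, template in (("v1", v1), ("v2", v2)):
    print(name, evaluate(template, fake_llm))
```

The stub gets the sentiment right for both versions; v1 scores zero only because its answers aren't in the expected format. That's exactly the kind of regression a quick eyeball test can miss and a scripted check catches every time.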

The Good, The Bad, and The Self-Hosted

No tool is perfect, especially not a new one. I've always felt that an honest review needs to balance the excitement with a healthy dose of reality.

The good stuff is obvious. It simplifies complex workflows, the version control is a lifesaver, the auto-API is brilliant, and it's all wrapped in a clean UI. It feels like a tool built for builders.

However, the project's homepage has a big yellow warning sign: this is an alpha-state project, and we need to take that seriously. It means the API and features might change. I wouldn't bet my company's flagship product on it tomorrow. It's for the pioneers, the experimenters. It also requires Python knowledge, which is a given for the target audience but worth mentioning.

The other big point is that it's a self-hosted solution. This is a classic double-edged sword. On one hand, you have total control. Your data, your prompts, your workflow: it all lives on your server. Awesome. On the other hand, you are now responsible for setting up that server, maintaining it, securing it, and keeping the lights on. It’s not a plug-and-play SaaS, and that's a critical distinction.

So, How Much Does This Cost?

This is often the million-dollar question. I went looking for a pricing page to see what the damage would be. And I found a '404 - Not found' error. Honestly? That's the best kind of pricing page. It's a strong signal that PromptMage is free and open-source, which is confirmed by its GitHub repository. The project is supported by Media Tech Lab, and the focus seems to be on community and development, not monetization. At least for now.

Just remember my earlier point: 'free software' doesn’t mean 'free to run'. You’ll still need to cover the costs of whatever server you decide to host it on.

Who Should Give PromptMage a Spin?

So, is this tool for you? In my experience, it really depends on who you are.

- Indie Hackers & Small Teams: Absolutely. This is a fantastic tool for getting a sophisticated LLM-powered project off the ground quickly without getting bogged down in workflow management tools that cost a fortune.

- Researchers & Academics: The version control and evaluation features are killer for creating reproducible experiments. This could be a huge help in formalizing LLM research.

- Developers Learning LLM-ops: This is a perfect learning tool. It’s a hands-on way to understand the challenges of prompt engineering and workflow orchestration in a contained, manageable environment.

Who should probably wait? Large enterprises that need 24/7 support contracts and rock-solid API stability guarantees. The alpha status is a real consideration there. But for the rest of us on the frontier, it's very compelling.

A Promising Tool for a Real Problem

I see a lot of tools. Most are just slight variations of something else. PromptMage feels different. It’s not trying to do everything; it’s trying to do a few very important things very well. It brings much-needed structure to the messy, creative process of building with LLMs.

Yes, it's young and still in alpha. But the foundation is solid, and it addresses a pain point that nearly every single developer in this space is feeling right now. It's a pragmatic, open-source solution in a field that’s getting increasingly crowded and expensive. For that reason alone, I'm rooting for it. It's definitely a project I'll be keeping my eye on.

Frequently Asked Questions

- Is PromptMage free to use?

- Yes, PromptMage is an open-source project and is free to use. However, since it is a self-hosted solution, you will be responsible for the costs of the server and infrastructure required to run it.

- What skills do I need to use PromptMage?

- You'll need a good understanding of Python, as PromptMage is a Python framework. Familiarity with LLMs and API concepts is also very helpful. If you plan to self-host, some basic server administration or DevOps knowledge will be necessary.

- Can I use PromptMage in a production environment?

- The developers state that the project is currently in an 'alpha state.' This means APIs and features could change without warning. While you technically can use it in production, it's best suited for personal projects, research, and prototyping until it reaches a more stable release.

- What is the main benefit of PromptMage over just writing Python scripts?

- The core benefits are structure and efficiency. PromptMage provides a unified interface for testing, version control for your prompts (like Git for code), automatic API generation, and built-in evaluation tools. This saves you from building all that management infrastructure from scratch.

- How can I contribute to PromptMage?

- The project welcomes community contributions. You can contribute by reporting bugs, fixing existing issues, or even suggesting new features. The best place to start is by visiting their GitHub repository and checking out the contribution guidelines.

References and Sources

PromptMage Official Website: https://promptmage.tsterbak.com/

PromptMage GitHub Repository: https://github.com/tsterbak/promptmage