If you're in the trenches with AI, whether you're a developer burning through API credits, an SEO crafting meta descriptions with AI assistance, or a prompt engineer trying to build the next big thing, you live and die by the token. We’ve all been there. You craft the perfect prompt, a beautiful tapestry of instructions and examples, only to have it brutally truncated by an unforgiving context window. Or worse, you get that gut-sinking feeling when you see your monthly API bill and realize your “small tests” were more like a small country’s GDP.

For years, I've juggled a messy combination of half-baked Python scripts, official (and often clunky) tokenizers, and just plain guesswork. It’s a pain. A real pain. So when I stumbled upon this browser-based LLM Token Counter, I was skeptical. Another browser tool? Great. But then I used it. And I haven't closed the tab since.

So, What Exactly Is This Thing?

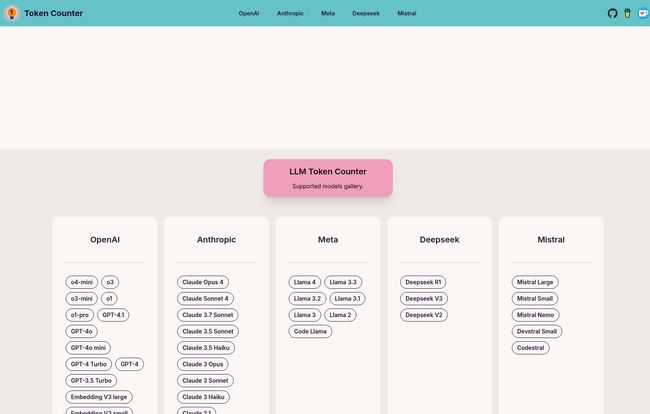

In short, it’s a beautifully simple calculator. But instead of crunching numbers, it crunches tokens for a whole smorgasbord of Large Language Models. You paste your text, select the model you’re targeting — whether it's OpenAI's latest GPT-4o, Anthropic's powerful Claude 3 Opus, or Meta's Llama 3 — and boom. Instant, accurate token count. No frills, no fuss.

It's one of those tools that does one thing, but does it so well it becomes completely indispensable. Think of it as the digital caliper for your words, ensuring every prompt fits perfectly before you send it off into the wild.

Why I’m Genuinely Obsessed With This Tool

Okay, a token counter is a token counter, right? Not quite. The magic here is in the details, the things that show the creators actually understand the workflow and worries of people who use these models daily.

The Privacy-First Approach Is a Game Changer

This is the big one for me. The absolute headline. The LLM Token Counter performs all its calculations directly in your browser. Your text, your prompts, your super-secret proprietary data… it never leaves your computer. It’s all handled client-side. In an age where we’re constantly being told to be careful about what we paste into random online tools, this is a massive sigh of relief. It's like having a private accountant for your prompts who tallies everything up without ever actually looking at your confidential documents. I can test sensitive client information or proprietary code snippets without a single worry about where that data is going. This alone puts it head and shoulders above many other tools out there.

It’s Blazing Fast and Ridiculously Accurate

Speed matters when you're in the flow, and this tool feels instantaneous. According to its FAQ, it runs on `Transformers.js`, the JavaScript port of Hugging Face's Transformers library, and credits a fast Rust implementation of the tokenizer for the speed. It also isn't a character-count approximation; it runs an actual tokenizer for the model you select, giving you a count you can trust when you’re managing tight token budgets.
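For contrast, here's roughly what the guesswork I used to rely on looks like. This is a minimal Python sketch of the common rule of thumb that English text averages about four characters per token. The function name `estimate_tokens` and the `chars_per_token` default are my own illustration, not anything taken from the tool, and the result is only a ballpark: real tokenizers split text differently for each model, and code or non-English text can drift a long way from this ratio.

```python
import math


def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Ballpark token estimate from character count.

    This is a heuristic, NOT a tokenizer. The 4-characters-per-token
    default is a rule of thumb for English prose and is an assumption.
    """
    if chars_per_token <= 0:
        raise ValueError("chars_per_token must be positive")
    # Round up so a short trailing fragment still counts as one token.
    return math.ceil(len(text) / chars_per_token)


if __name__ == "__main__":
    prompt = "Summarize the following article in three bullet points."
    print(f"{len(prompt)} characters, roughly {estimate_tokens(prompt)} tokens")
```

It's fine for a quick sanity check, but once a prompt gets anywhere near a limit, a real tokenizer count is the number to rely on.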


It Speaks Fluent AI: Comprehensive Model Support

The list of supported models is just… chef’s kiss. It’s not just the big players. Of course, you have your GPT-3.5 and GPT-4 variants, the full Claude 3 family (Opus, Sonnet, and Haiku), and even the various embedding models. But it also includes models from Deepseek, Mistral (including the new Codestral), and Meta's Llama family. I did a double-take when I saw Llama 3.1 and even Llama 4 listed. Either these guys have a crystal ball, or they're just incredibly prepared for the future of AI. Either way, I'm impressed.

Having them all in one dropdown menu saves me from having to hunt down a different specific tool every time I switch projects or want to compare prompt lengths across different model families. It’s a simple convenience that adds up to a lot of saved time and frustration.

So Simple, It's Almost Foolproof

The user interface is clean. It's minimalist in the best way possible. There are no ads cluttering the screen (a miracle!), no confusing options, no mandatory sign-ups. It's just a text box, a dropdown menu, and your result. That’s it. This design philosophy clearly respects the user's time and intelligence. You're there to do a job, and the tool gets out of your way to let you do it.

Is It Really Free? What's the Catch?

From everything I can see, yes, it's completely free to use. There’s no pricing page, no subscription model, no “5 free counts per day” nonsense. And that often leads to the question, “what’s the catch?”

Honestly, I don't think there is one. My best guess? The tool is a fantastic piece of utility marketing. It's part of a suite of other helpful AI tools (I saw an AI Math Solver and an OCR tool on there too), and it builds goodwill and trust. By providing a genuinely useful, private, and free tool, they're demonstrating their expertise and likely hoping you'll remember them when you need other solutions. It’s a strategy I can get behind—providing real value first. The only “limitation,” if you can even call it that, is that it requires JavaScript to be enabled in your browser, which is standard for pretty much any modern web tool.

Your Burning Questions Answered

I had a few questions myself, so I figured I’d put together a quick FAQ to cover what you're probably wondering.

Are my data and prompts safe when using this tool?

Absolutely. This is its standout feature. All the token counting happens locally in your web browser. Your text is never sent to any external server, making it one of the most private options available.

Which LLMs does it actually support?

A whole bunch! It covers the major families from OpenAI (GPT-4o, GPT-3.5-Turbo), Anthropic (Claude 3 Opus, Sonnet, Haiku), Meta (Llama 2, Llama 3), Mistral (Mistral Large, Codestral), and Deepseek. The list is constantly being updated too.

Why is caring about tokens so important anyway?

Two main reasons: cost and context. Most API providers bill per token, so knowing your count helps you manage your API budget. Secondly, every model has a maximum “context window,” the total number of tokens it can process in a single request, typically the prompt and the response combined. Exceeding this limit can lead to errors or to truncated input, which in turn produces incomplete or nonsensical output.
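To make the cost and context points concrete, here's a small Python sketch of the arithmetic. Everything in it is an assumption for illustration: the `ModelBudget` class, the model name, the 8,000-token window, and the $2 per million input tokens are made-up numbers, not real pricing or limits for any provider. It also assumes the context window is shared between the prompt and the response, which is how it commonly works.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelBudget:
    """Hypothetical per-model limits; the values are illustrative only."""
    name: str
    context_window: int           # max tokens per request (prompt + response)
    usd_per_million_input: float  # made-up input price, not a real quote


def fits_context(prompt_tokens: int, max_output_tokens: int, budget: ModelBudget) -> bool:
    """True if the prompt plus the reserved output space fits in the window."""
    if prompt_tokens < 0 or max_output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    return prompt_tokens + max_output_tokens <= budget.context_window


def input_cost_usd(prompt_tokens: int, budget: ModelBudget) -> float:
    """Estimated cost of sending the prompt, in US dollars."""
    if prompt_tokens < 0:
        raise ValueError("token counts must be non-negative")
    return prompt_tokens / 1_000_000 * budget.usd_per_million_input


if __name__ == "__main__":
    demo = ModelBudget(name="example-model", context_window=8_000,
                       usd_per_million_input=2.0)
    # 7,500 prompt tokens + 1,000 reserved for the reply = 8,500 > 8,000
    print(fits_context(7_500, 1_000, demo))   # False
    # 500,000 tokens at $2 per million
    print(input_cost_usd(500_000, demo))      # 1.0
```

Plug in the token count from the counter and the real limits and prices from your provider's documentation, and you know before you hit send whether the prompt fits and what it will cost.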

Does the LLM Token Counter cost anything?

Nope. It appears to be 100% free to use, with no hidden fees or subscriptions.

How is it so fast?

It's built with modern web technologies, specifically `Transformers.js`, which can be backed by a highly optimized Rust/WASM (WebAssembly) implementation. This allows it to tokenize your text at near-native speeds, right inside your browser tab.

Do I need to install any software or browser extensions?

Not at all. It's a pure browser-based tool. Just navigate to the web page, and you're ready to go. Bookmark it, and you're golden.

My Final Verdict: A Must-Have in Your Toolkit

In the fast-moving world of AI and SEO, the tools that stick around are the ones that are simple, reliable, and solve a real problem without creating new ones. The LLM Token Counter is exactly that.

It’s a no-brainer to bookmark this thing. It has saved me time, it has probably saved me a non-trivial amount of money on API calls, and most importantly, it gives me peace of mind with its privacy-first design. It's a small, sharp, and perfect utility that has earned a permanent place in my digital toolbox. Give it a try; I have a feeling it’ll earn a place in yours, too.