If you've been building anything with LLMs lately, you've felt the pain. You spend days, maybe weeks, perfecting a prompt chain. You tweak the temperature, wrestle with the system message, and finally, you think you've cracked it. But then comes the dreaded question:

Is it actually better?

And how do we usually answer that? We throw it at another LLM. The whole "LLM-as-a-judge" approach. It feels modern, it feels automated, but half the time it's like asking a moody artist to grade a physics exam. You get a different answer depending on the day, the weather, or the phase of the moon. It's slow, it's expensive, and worst of all, it's not consistent. I've personally wasted more hours (and API credits) on this than I'd like to admit.

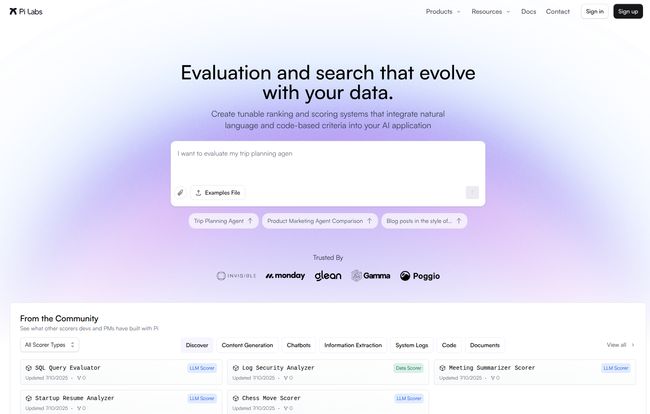

So when I heard about a new platform called Pi Labs, started by a team with serious chops from Google Search, my ears perked up. They're not just building another tool; they're trying to fundamentally fix the broken evaluation process. And after digging in, I think they might actually be onto something big.

The Big Problem with Letting LLMs Judge Each Other

Before we get into what Pi Labs does, let's just vent for a moment about the status quo. The LLM-as-a-judge method is popular because, well, what's the alternative? Manually reviewing thousands of outputs? Nobody has time for that. But the trade-offs are brutal.

You run the same evaluation twice on GPT-4 and get wildly different scores. Why? Because you're at the mercy of a probabilistic model that wasn't designed for deterministic scoring. It’s a creative writer, not a stern accountant. This makes true, reliable A/B testing a complete fantasy. You can't confidently say your new prompt is 10% better if your measuring stick changes size every time you use it.
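
To put a rough number on that shifting measuring stick, here is a minimal Python sketch. The scores are invented for illustration (they are not real GPT-4 output), and the rule in `improvement_is_distinguishable` is a deliberately crude assumption of mine, not a proper significance test.

```python
from statistics import mean, stdev


def improvement_is_distinguishable(baseline_runs, candidate_runs):
    """Crude check: is the gap between mean scores larger than the
    combined run-to-run noise (sum of sample standard deviations)?"""
    gap = abs(mean(candidate_runs) - mean(baseline_runs))
    noise = stdev(baseline_runs) + stdev(candidate_runs)
    return gap > noise


# Hypothetical scores from re-running the SAME eval five times per prompt.
noisy_baseline = [6.0, 8.0, 5.0, 7.5, 6.5]   # mean 6.6
noisy_candidate = [7.0, 6.0, 8.5, 7.5, 6.0]  # mean 7.0

# A deterministic scorer returns the same value on every run.
stable_baseline = [6.5] * 5
stable_candidate = [7.0] * 5

print(improvement_is_distinguishable(noisy_baseline, noisy_candidate))    # False
print(improvement_is_distinguishable(stable_baseline, stable_candidate))  # True
```

With the noisy judge, a 0.4-point gap is buried under more than a point of run-to-run wobble. With a scorer that repeats itself exactly, even a small gap is a real difference in what was measured.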

So, What Is Pi Labs, Exactly?

Okay, enough complaining. What's the solution? Pi Labs isn't just another off-the-shelf evaluator. Instead, it’s a platform that automatically builds a custom evaluation system just for you.

You feed it examples of what you consider a 'good' or 'bad' output, based on your own unique needs, labels, and preferences. Pi Labs then creates a fine-tuned, lightweight scoring model that is meant to mirror your criteria. It's not about asking a generic AI if your output is good; it's about building a specialized referee that knows the exact rules of your game. Think of it as creating a custom-calibrated instrument for measuring quality, instead of just eyeballing it with a general-purpose model.
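
Whatever builds the scorer, you'll want to check how closely it tracks your own labels. The sketch below is a generic way to do that in Python, not Pi Labs' API: `toy_scorer`, the example texts, and the 0.5 threshold are all hypothetical stand-ins.

```python
def agreement_rate(scorer, labeled_examples, threshold=0.5):
    """Fraction of examples where (score >= threshold) matches the human label."""
    if not labeled_examples:
        raise ValueError("need at least one labeled example")
    matches = sum(
        (scorer(text) >= threshold) == is_good
        for text, is_good in labeled_examples
    )
    return matches / len(labeled_examples)


def toy_scorer(text):
    """Stand-in scorer (NOT Pi Labs): rewards answers that cite a source."""
    return 1.0 if "source:" in text.lower() else 0.0


# Hypothetical (output, is_good) pairs labeled by a human reviewer.
labeled_examples = [
    ("Paris is the capital of France. Source: CIA World Factbook", True),
    ("Probably Paris, I guess?", False),
    ("The capital is Paris.", True),   # good by our label, but no citation
    ("Source: trust me", False),       # bad by our label, but has 'source:'
]

print(agreement_rate(toy_scorer, labeled_examples))  # 0.5
```

A scorer that agrees with you only half the time, like this toy one, is not measuring your criteria yet. The same check works unchanged on any callable that maps text to a number.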

This approach transforms evaluation from a vague, subjective art into a repeatable science. And that's a pretty big deal.

The Pi Scorer: A GPT-4 Killer for Evals?

At the heart of all this is their foundation model, the Pi Scorer. And the claims they're making are, frankly, wild. They state it outperforms leading models like GPT-4.1 and DeepSeek specifically on scoring accuracy. That's a bold claim, but it makes sense when you think about it. It’s a specialized model, designed for one thing: understanding and applying scoring logic with high fidelity.

Here are the specs that caught my eye:

- Insane Speed: It can score over 20 custom dimensions in less than 100 milliseconds. Compare that to the seconds you might wait for a response from a massive LLM. This speed makes real-time observability practical.

- Accuracy and Consistency: Because it’s a purpose-built model, it provides deterministic scores. Run the same eval a hundred times, and you’ll get the same result a hundred times. Finally!

- Cost-Effective: They claim it's up to 5x cheaper than using traditional LLM judges. Faster, more accurate, and cheaper? That's the trifecta.

How Pi Labs Actually Improves Your Workflow

This all sounds great in theory, but how does it work in practice? I see it slotting in a few different ways that could genuinely change how teams build AI products.

Escaping the Manual 'Tweak-and-Pray' Cycle

Instead of endless prompt refinement based on gut feelings, you can build a scorer that represents your 'ideal user feedback'. Now, every change you make can be measured against a stable benchmark. It turns a frustrating creative process into a data-driven engineering one.
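
Here's roughly what "measured against a stable benchmark" could look like in Python. This is a sketch under assumptions: `brevity_scorer` is a hypothetical deterministic stand-in for whatever scorer you end up with, and the outputs are made up.

```python
from statistics import mean


def compare_variants(outputs_a, outputs_b, scorer):
    """Score paired outputs (same inputs, two prompt versions) and summarize."""
    if len(outputs_a) != len(outputs_b):
        raise ValueError("outputs must be paired one-to-one")
    scores_a = [scorer(o) for o in outputs_a]
    scores_b = [scorer(o) for o in outputs_b]
    return {
        "mean_a": mean(scores_a),
        "mean_b": mean(scores_b),
        "b_wins": sum(b > a for a, b in zip(scores_a, scores_b)),
        "ties": sum(b == a for a, b in zip(scores_a, scores_b)),
    }


def brevity_scorer(text):
    """Hypothetical deterministic scorer: 1.0 if the answer is at most 12 words."""
    return 1.0 if len(text.split()) <= 12 else 0.0


# Made-up outputs from prompt version A and version B on the same two inputs.
outputs_a = [
    "Our refund policy allows returns within thirty days of purchase, "
    "provided the item is unused and in its original packaging.",
    "Yes.",
]
outputs_b = ["Returns are accepted within thirty days.", "Yes."]

summary = compare_variants(outputs_a, outputs_b, brevity_scorer)
print(summary)
```

Because the scorer returns the same number for the same text every time, re-running this comparison gives an identical summary, so any change in the numbers comes from the prompt change.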

Integrations That Make Sense

A tool is only as good as how well it fits into your existing stack. Pi Labs seems to get this. They already integrate with common tools like Google Sheets (a classic for a reason!), PromptFoo, Griptape, and CrewAI. This isn't some walled garden you have to migrate your entire life into. It's designed to plug into the places where you're already working, which lowers the barrier to entry significantly.

One Scorer to Rule Them All

This is probably the most powerful concept. The custom scorer you build isn't just for one-off offline evaluations. Because it's so fast and cheap, you can deploy the exact same model across your entire AI lifecycle:

- Offline Evals: A/B test prompts and models before they ever see the light of day.

- Online Observability: Monitor the quality of your AI's responses in production, in real-time.

- Training Data Curation: Automatically score and filter massive datasets to find high-quality examples for fine-tuning.

- Agent Control: Use the scorer as a real-time check within an agent's logic to steer its behavior.

This creates a unified standard of quality across your whole operation. The same definition of 'good' is used from development to production.
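
To make the "same definition of 'good' everywhere" idea concrete, here is a small Python sketch that reuses one scorer for data curation and for an agent guardrail. Everything in it is an assumption for illustration: `politeness_scorer`, the thresholds, and the helper names are hypothetical and not part of any Pi Labs API.

```python
def keep_high_quality(examples, scorer, threshold):
    """Training data curation: keep only examples scoring at or above threshold."""
    return [ex for ex in examples if scorer(ex) >= threshold]


def guarded_reply(draft, scorer, threshold, fallback):
    """Agent control: return the draft only if it clears the quality bar."""
    return draft if scorer(draft) >= threshold else fallback


def politeness_scorer(text):
    """Hypothetical stand-in scorer: fraction of polite markers present (0.0 to 1.0)."""
    markers = ("please", "thank")
    lowered = text.lower()
    return sum(m in lowered for m in markers) / len(markers)


candidates = ["Thank you, please hold.", "No.", "Please wait."]

# Same scorer, two different stages of the lifecycle.
curated = keep_high_quality(candidates, politeness_scorer, threshold=0.5)
reply = guarded_reply("No.", politeness_scorer, 0.5, "Sorry, let me rephrase that.")

print(curated)  # ['Thank you, please hold.', 'Please wait.']
print(reply)    # Sorry, let me rephrase that.
```

Passing the scorer in as a plain callable is the design choice that matters here: offline evals, monitoring, filtering, and runtime checks can all share it without knowing how it works inside.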

Let's Talk About the Pricing

Alright, the money question. The pricing structure is refreshingly simple, which I appreciate. There are basically two tiers:

| Tier | Cost | Details |
|---|---|---|
| Free | $0 | Includes $10 in free credits, which they say covers about 25 million tokens. More than enough to kick the tires. |
| Pay as you go | $0.40 / million tokens | Covers unlimited use. Simple, transparent, and very competitive. |
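
The two numbers in the table line up: $10 at $0.40 per million tokens is 25 million tokens. Here's a quick Python sketch of that arithmetic for budgeting your own workloads. It assumes flat per-token pricing with no minimums or rounding rules (my assumption, since pricing is still in flux), and works in integer cents to avoid floating-point surprises.

```python
PRICE_CENTS_PER_MILLION_TOKENS = 40   # $0.40 per million tokens (from the table)
FREE_CREDIT_CENTS = 1_000             # $10 in free credits (from the table)


def tokens_for_budget(budget_cents, price_cents_per_million=PRICE_CENTS_PER_MILLION_TOKENS):
    """Whole tokens a budget buys at a flat per-million-token price."""
    return budget_cents * 1_000_000 // price_cents_per_million


def cost_in_cents(tokens, price_cents_per_million=PRICE_CENTS_PER_MILLION_TOKENS):
    """Cost of scoring `tokens` tokens, in cents (may be fractional)."""
    return tokens * price_cents_per_million / 1_000_000


print(tokens_for_budget(FREE_CREDIT_CENTS))  # 25000000
print(cost_in_cents(2_000_000))              # 80.0
```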

The free tier is generous enough for any serious developer or small team to validate if it works for them without pulling out a credit card. And the pay-as-you-go pricing is straightforward. I also have to mention this little note on their pricing page:

"We're still figuring out our pricing and would love to hear your feedback."

I love that. It shows they're building in public and listening to the community, not just handing down pricing from a boardroom.

The Rough Edges (For Now)

No tool is perfect, especially a new one. There are a couple of things to be aware of. First, it's currently text-only. If your main focus is on image generation or audio, this isn't for you... yet. They're clear that other modalities are on the roadmap, which is promising.

The other point is the pricing being in flux, as mentioned. While I see it as a positive, some might see it as a lack of stability. For early adopters, though, it often means a chance to get in on the ground floor and potentially influence the direction of the product.

My Final Take: Is Pi Labs Worth Your Time?

In a word, yes. I think Pi Labs is one of the most interesting and genuinely useful platforms to emerge in the MLOps space this year. They are tackling a real, universal, and incredibly frustrating problem with a smart and pragmatic solution. The move away from inconsistent, expensive LLM judges to fast, cheap, and custom-built scorers feels like a natural and necessary evolution.

For any team that is serious about moving their AI projects from 'cool experiments' to 'reliable products', a robust evaluation framework isn't a nice-to-have, it's a necessity. Pi Labs seems to provide just that. With a strong founding team and a generous free tier, there's very little reason not to give it a shot.

FAQs About Pi Labs

How is Pi Labs different from just using GPT-4 as a judge?

The main differences are consistency, speed, and cost. GPT-4 can give different scores for the same input, making it unreliable for testing. Pi Labs builds a deterministic scorer that is consistent, much faster (under 100ms), and up to 5x cheaper than using a large general-purpose LLM.

Is Pi Labs difficult to set up?

It's designed for easy integration. With support for tools like Google Sheets, PromptFoo, and CrewAI, you can plug it into your existing workflows rather than rebuilding everything. The process involves providing examples of good/bad outputs to train your custom scorer.

What do I get with the Pi Labs free tier?

The free tier gives you $10 in credits, which is enough to process around 25 million tokens. This is a substantial amount that lets you fully test the platform's capabilities for your specific use case before committing to a paid plan.

Can I use Pi Labs to evaluate images or audio?

Currently, Pi Labs is focused on text-only evaluation. However, they have stated that support for other modalities (like images and audio) is on their development roadmap.

Who are the people behind Pi Labs?

The founding team comes from Google, with deep expertise from working on Google Search. This background gives them a strong foundation in understanding complex systems, relevance, and data at a massive scale, which adds a lot of credibility to their approach.

Conclusion

The world of AI tooling is noisy, but every now and then a tool comes along that just... makes sense. Pi Labs is one of those tools. It addresses a core pain point with an elegant solution. If you're tired of the eval grind, I'd definitely recommend signing up for their free tier and seeing if it can bring some sanity back to your development process.