I’ve been in the data trenches for years, wrestling with pipelines, optimizing queries, and staring at cloud billing dashboards with a rising sense of dread. It's a familiar story. And at the center of many of those stories is Apache Spark. It's powerful, it's the industry standard... and let's be honest, it can be a bit of a beast. The JVM tuning, the memory hogs, the garbage collection pauses that hit at the worst possible moment—my relationship with it is, well, complicated.

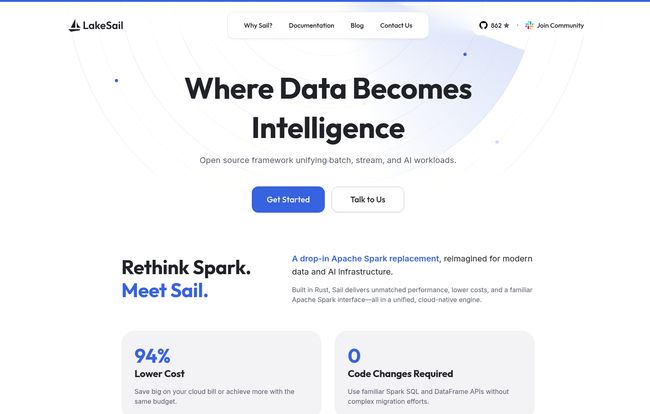

So, whenever a new challenger enters the ring, my ears perk up. Especially when that challenger claims to be a drop-in replacement that's 4x faster and can slash your cloud bill by a jaw-dropping 94%. That’s not just a challenger; that’s a revolution. Meet LakeSail and its open-source engine, Sail: a framework built in Rust that's making some seriously bold promises.

So, What on Earth is LakeSail?

In a nutshell, LakeSail is an open-source framework designed to unify your data workloads. Think stream processing, batch processing, and even those heavy AI jobs, all under one roof. The core idea is to create one engine that handles everything you throw at it, from your laptop to a massive cloud cluster. But here’s the kicker, and the part that really got my attention: it’s engineered to be a drop-in replacement for Apache Spark.

It’s built from the ground up in Rust, a language famous for its performance and memory safety. That means no JVM. Let me say that again for the folks in the back: zero JVMs. Because Rust reclaims memory deterministically through its ownership model instead of a garbage collector, there are no unpredictable GC pauses messing with your query latency. It’s a completely different architecture, built for the modern, cloud-native world.

The Big Promise: Why Even Bother Leaving Spark?

Migrating from a tool as entrenched as Spark is a massive decision. It’s the data equivalent of moving your entire house. You don’t do it unless the new place is a mansion with a pool and a self-stocking fridge. So, what’s LakeSail’s mansion-level offering?

Speed, Glorious Speed

LakeSail's homepage hits you with a "4x Faster Execution" stat, and their performance comparisons claim some queries run up to 8x faster. How? It all comes back to Rust. By running as a native binary, it sidesteps the overhead of the Java Virtual Machine. Think of it this way: Spark is like a powerful, reliable freight train. It can haul an incredible amount of cargo, but it takes time to get up to speed and has a lot of heavy machinery to maintain. LakeSail is positioning itself as a high-speed bullet train: sleek, efficient, and built for pure velocity from the get-go.
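To put those multipliers in wall-clock terms, here is a tiny helper. It is plain arithmetic, not anything taken from LakeSail's benchmarks: an N-times speedup means the job takes 1/N of its original time. The 60-minute job is a hypothetical example.

```python
def runtime_after_speedup(baseline_seconds: float, speedup: float) -> float:
    """Wall-clock time of a job that runs `speedup` times faster than baseline."""
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    return baseline_seconds / speedup


def fraction_of_time_saved(speedup: float) -> float:
    """Share of the original runtime that disappears at a given speedup."""
    return 1.0 - 1.0 / speedup


# A hypothetical 60-minute Spark job at the claimed 4x and 8x speedups.
for factor in (4, 8):
    minutes = runtime_after_speedup(60, factor)
    saved = fraction_of_time_saved(factor)
    print(f"{factor}x faster: {minutes:.1f} min ({saved:.1%} less time)")
```

Note the diminishing returns: 4x already removes 75% of the runtime, and doubling to 8x removes only another 12.5 points.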

Your CFO Will Actually High-Five You

Okay, maybe not a high-five, but they’ll definitely be happier. The claim of a 94% lower cost is staggering. I’ve seen optimization projects that celebrate a 20% reduction; 94% sounds like a typo. But it starts to make sense when you look at the mechanics. Their comparison shows Spark using ~54 GB of memory and spilling over 110 GB to disk on the workload they tested, while LakeSail uses ~22 GB of memory with zero disk spill. Less memory and less disk I/O mean you can run on smaller, cheaper instances, and a job that also finishes faster pays for those instances for fewer hours. Over thousands of jobs, that's not pocket change; that's a rewritten budget.
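One rough way to see how a figure like 94% could add up: if a job's cost is approximately runtime times the hourly price of its hardware, then a speedup and a cheaper instance multiply together. The sketch below is an illustrative decomposition under that simplifying assumption, with hypothetical price ratios; it is not how LakeSail derived its number. It also computes the memory reduction implied by the ~54 GB vs ~22 GB figures.

```python
def relative_cost(speedup: float, price_ratio: float) -> float:
    """New job cost as a fraction of the baseline job cost.

    Assumes cost = runtime x hourly instance price, which ignores storage,
    data transfer, and cluster startup overhead.

    speedup:     how many times faster the new engine finishes the job
    price_ratio: new instance hourly price / baseline instance hourly price
    """
    return price_ratio / speedup


def savings(speedup: float, price_ratio: float) -> float:
    """Fractional cost reduction versus the baseline."""
    return 1.0 - relative_cost(speedup, price_ratio)


# Memory reduction implied by ~54 GB (Spark) vs ~22 GB (LakeSail).
memory_reduction = 1.0 - 22 / 54
print(f"memory reduction: {memory_reduction:.0%}")  # about 59%

# Same hardware, 4x faster: you pay for a quarter of the hours.
print(f"4x, same instance: {savings(4, 1.0):.0%} saved")
# Hypothetical: 4x faster AND an instance costing ~24% as much per hour.
print(f"4x, instance at 24% of the price: {savings(4, 0.24):.0%} saved")
```

The takeaway is that speed alone gets you to about 75% in this model; reaching the mid-90s also requires that the smaller memory footprint lets you drop to much cheaper hardware.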


The "Zero Code Changes" Dream

This is the part that always makes an engineer’s eyebrow twitch. "Zero code changes" is a promise we've heard before, and it rarely pans out perfectly. However, LakeSail’s approach is very pragmatic. They've focused on parity with the most common Spark interfaces: Spark SQL and the DataFrame API. LakeSail's engine, Sail, implements the Spark Connect protocol, so the goal is that for a huge chunk of existing Spark jobs, you simply point the client session at a Sail server instead of a Spark cluster and the jobs... just work. No rewrites, no weekend-long migration headaches. If they've pulled this off, it removes the single biggest barrier to adoption.
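Here is roughly what "point it at a different engine" looks like from PySpark. Treat it as a sketch under assumptions: it relies on PySpark's Spark Connect client (`SparkSession.builder.remote`, available since PySpark 3.4) and on a Sail server already running (the project's README describes installing `pysail` and starting `sail spark server --port 50051`, but check the current docs for exact commands). The helper names, the port, and the `sales.orders` table with its columns are hypothetical.

```python
import os
from typing import Optional


def spark_connect_url(host: str = "localhost", port: int = 50051) -> str:
    """Build a Spark Connect URL of the form sc://host:port."""
    return f"sc://{host}:{port}"


def get_session(remote: Optional[str] = None):
    """Return a SparkSession that talks to a server over Spark Connect.

    Whether that server is Spark or Sail is decided only by the URL;
    the job code below does not change. Requires pyspark with the
    Spark Connect client dependencies installed.
    """
    # Imported lazily so this module loads even where pyspark is absent.
    from pyspark.sql import SparkSession

    # SPARK_REMOTE is the environment variable PySpark itself uses for Connect.
    url = remote or os.environ.get("SPARK_REMOTE") or spark_connect_url()
    return SparkSession.builder.remote(url).getOrCreate()


def daily_revenue(spark, table: str):
    """A plain DataFrame job with nothing engine-specific in it.

    The table and its `order_date` / `amount` columns are hypothetical.
    """
    from pyspark.sql import functions as F

    return (
        spark.table(table)
        .groupBy("order_date")
        .agg(F.sum("amount").alias("revenue"))
        .orderBy("order_date")
    )
```

With a server listening, `daily_revenue(get_session(), "sales.orders").show()` runs the job; moving it between a Spark Connect server and Sail is only a change of URL.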

The Spark vs. Sail Showdown

Talk is cheap, so let's look at the numbers they're putting out there. I've put their comparison data into a quick table. It paints a pretty compelling picture.

| Metric | Apache Spark (The Old Guard) | LakeSail (The New Kid) |
|---|---|---|
| Query Time | Baseline | Up to 8x faster |
| Memory Usage | ~54 GB average | ~22 GB peak (less than half!) |
| Disk Spill | > 110 GB | 0 GB |
| Engine | JVM-based | Rust-native |
| Cluster Startup Time | Several minutes | A few seconds |

That zero disk spill, at least on the workload they measured, is a huge quality-of-life improvement. Anyone who has spent an afternoon trying to figure out why their Spark job is suddenly spilling massive amounts of data to slow local disk knows the pain. Eliminating that is a big, big deal.

Hold On, It Can’t Be Perfect, Right?

Okay, let's pump the brakes a little. No tool is a silver bullet. LakeSail is still the new kid on the block, and that comes with some caveats. Based on what I've gathered, here are a few things to keep in mind:

- A Growing Community: Spark has a massive, mature community. You can find a solution to almost any problem on Stack Overflow or in a decade-old blog post. LakeSail's community is new and growing. That’s not necessarily a bad thing—it’s a chance to get in on the ground floor and help shape the tool—but it means you might be more of a pioneer than a settler.

- Free Tier Focus: The open-source, free version seems to focus on the SQL and DataFrame API replacement. If you need more complex, custom integrations, you're likely looking at their commercial enterprise support. This is a pretty standard open-core model, but it's important to know where that line is drawn.

- It's Still Evolving: While the core is there, the ecosystem around it will take time to build out. The library of connectors and integrations that Spark has built over many years won't appear overnight.

Who Is LakeSail Actually For?

So, who should be dropping everything to check this out? In my opinion, there are a few key groups:

First, teams drowning in Spark-related cloud costs. If your AWS or GCP bill for data processing is making your eyes water, the potential for cost savings alone makes LakeSail worth a proof-of-concept.

Second, developers who value performance and a modern tech stack. If you're building a new data platform from scratch in 2025, starting with a Rust-native, cloud-native engine just feels... right. It's future-proofing your stack.

And third, any data engineer who's just plain tired of fighting the JVM. If the idea of a high-performance engine without garbage collection gives you a sense of profound relief, you've found your people.

Final Thoughts: Is it Time to Set Sail?

I’m genuinely excited about LakeSail. It’s ambitious, smart, and it’s targeting real, tangible pain points that data professionals face every day. It’s not just another incremental improvement; it's a fundamental rethinking of the engine that powers modern data platforms.

Is it going to replace every single Spark installation tomorrow? No, of course not. But for the vast number of workloads that rely on Spark SQL and DataFrames, it presents a ridiculously compelling alternative. The combination of performance gains, cost reduction, and minimal migration friction is a potent one.

My advice? Keep a very close eye on this project. Join their community on Slack or GitHub. Since it's open-source, spin it up and run a few of your existing queries against it. See if the performance claims hold up for your use case. This might just be the tide that lifts all boats in the data world.
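If you do take it for a spin, a small harness like this keeps the comparison honest: same query, warm-up runs discarded, median of several runs. It is engine-agnostic; `baseline` and `candidate` are any zero-argument callables you supply, such as one running a query through your current Spark session and one running it through a session pointed at Sail. The function names are illustrative, not part of any LakeSail tooling.

```python
import statistics
import time
from typing import Callable, Dict, List


def time_query(run: Callable[[], object], repeats: int = 5, warmup: int = 1) -> List[float]:
    """Run `run` several times and return wall-clock durations in seconds.

    Warm-up runs are discarded so caches and connection setup
    do not skew the comparison.
    """
    for _ in range(warmup):
        run()
    durations = []
    for _ in range(repeats):
        start = time.perf_counter()
        run()
        durations.append(time.perf_counter() - start)
    return durations


def compare(
    baseline: Callable[[], object],
    candidate: Callable[[], object],
    repeats: int = 5,
) -> Dict[str, float]:
    """Median runtimes for two engines and the resulting speedup."""
    base = statistics.median(time_query(baseline, repeats))
    cand = statistics.median(time_query(candidate, repeats))
    return {"baseline_s": base, "candidate_s": cand, "speedup": base / cand}
```

Because Spark DataFrames are lazy, make each callable force execution, for example `lambda: spark.sql(query).collect()`; otherwise you are mostly timing query planning.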

Frequently Asked Questions about LakeSail

- Is LakeSail completely free to use?

- Yes, LakeSail is an open-source project, so its core framework is free. They operate on an open-core model, which means they offer commercial support and enterprise-level features, like custom integrations, for paying customers.

- Do I need to be a Rust programmer to use LakeSail?

- Not at all. For its primary use case—as a Spark replacement—you'll be interacting with it through the familiar Spark SQL and DataFrame APIs in languages like Python or Scala. You don't need to write any Rust unless you want to contribute to the project itself or build highly advanced user-defined functions (UDFs).

- How difficult is the migration from Apache Spark?

- It's designed to be as painless as possible. For jobs using Spark SQL or the DataFrame API, the goal is near-zero code changes: you'd essentially change the engine endpoint your Spark session connects to rather than rewrite the job. The difficulty increases if your job relies heavily on Spark features LakeSail doesn't support yet.

- What is the single biggest advantage of LakeSail over Spark?

- It’s a tie between speed and cost. Both benefits stem from its core architecture: being built in Rust and having no JVM. This leads to much more efficient use of memory and CPU, which translates directly to faster queries and the ability to run on smaller, cheaper hardware.

- Where can I get help or learn more?

- The best places to start are their official website, their GitHub repository for the code, and their community channels like Slack or Discord. Joining the community is a great way to ask questions and see how others are using the tool.