User research can be a total slog. I’ve been in this game for years, and the cycle is always the same: you have a brilliant idea, a new feature you're dying to test, but then reality hits. The scheduling back-and-forth, the no-shows, the hours spent transcribing interviews, and then the monumental task of trying to find the actual, usable insights buried in a mountain of notes. It's enough to make you want to just ship the feature and pray.

So, when I see a platform like Wondering pop up, claiming to use AI to make the whole process 42x faster... my ears perk up. But so does my inner skeptic. Is this just another tool slapping an 'AI' label on something old, or is it genuinely a game-changer? I decided to take a proper look.

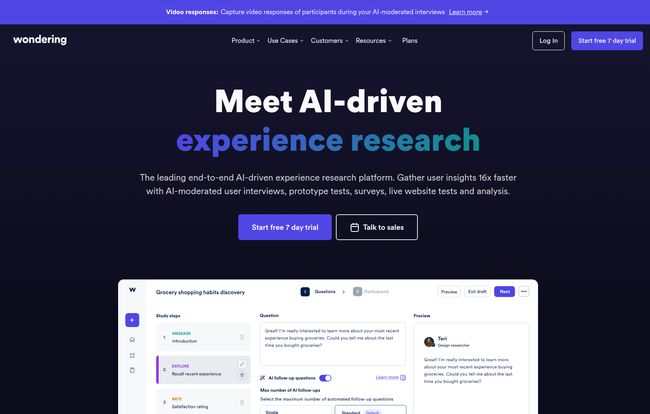


So, What Exactly is Wondering?

At its heart, Wondering is an experience research platform. Think of it as a central hub for figuring out what your users actually want. It's built for product teams, UX researchers, and even marketers who need to get inside their customers' heads without spending a whole quarter doing it. The big hook is its heavy integration of artificial intelligence to speed up the tedious parts of research.

Instead of you manually running every single interview, Wondering uses an AI moderator. Instead of you spending days coding qualitative data, Wondering’s AI pulls out themes and answers. It’s designed to help you conduct and analyze hundreds of user interviews, prototype tests, and surveys at the same time. It's an ambitious promise, for sure.

The AI Features That Genuinely Caught My Eye

Look, 'AI-powered' is the marketing phrase of the decade. But some of Wondering's features seem to have real substance behind the buzzwords.

AI-Moderated Interviews: Your 24/7 Research Assistant

This is the big one. Scaling moderated interviews used to be a fantasy; you'd need an army of researchers. Wondering offers AI-moderated interviews that can run simultaneously, gathering feedback from users across the globe in multiple languages. I was initially imagining a clunky, robotic experience, but the goal here is a guided, conversational flow that feels natural to the user. It won't replace the deep, empathetic connection of a human-led interview, but for getting directional feedback at scale, this is huge. It's the difference between talking to 5 users in a week and getting feedback from 50 overnight.

AI Study Builder and AI Answers: Cutting Out the Busywork

Ever stared at a blank Google Doc, trying to figure out the right questions for a study? The AI Study Builder is meant to solve that. You give it your goal, and it helps generate relevant questions and structure your study. It’s a great starting point to get you off the ground faster.

Then, after the data is collected, the AI Answers feature steps in. This is like a search engine for your research findings. Instead of re-reading every transcript, you can just ask questions like, “What were the main frustrations with the checkout process?” and the AI synthesizes the answers from all your user sessions. That’s not just a time-saver; because every session gets read the same way, it may also help counter one reviewer's bias and surface patterns a single human might miss. It's like having a junior researcher who's had way too much coffee and can instantly sift through all the noise for you.

It's a Full Research Platform, Not Just an AI Toy

While the AI is the shiny object, Wondering is a pretty complete platform underneath. It’s not just for interviews. You can run a whole bunch of different studies:

- Prototype Tests: Get feedback on your Figma or Adobe XD designs before you write a single line of code.

- Live Website Tests: Send users to your actual website to see how they complete tasks in the wild.

- Surveys & Concept Tests: Quickly validate an idea or a new design concept with quantitative and qualitative feedback.

It also handles the recruitment side of things. You can bring your own users or tap into their global Participant Panel. This end-to-end approach is smart, because sourcing good participants is often half the battle.

Let's Talk Money: Wondering's Pricing

Alright, the all-important question: what's it going to cost? The pricing structure seems aimed at a couple of different audiences.

| Plan | Price | Best For |
|---|---|---|
| Explore | $149 per month | Startups, small teams, or those just getting started with regular user research. |
| Scale | Custom (Let's talk) | Larger organizations needing more studies, unlimited responses, and advanced features like SSO. |
| Participant Panel | $5 per response | Teams that need to recruit participants for their studies and don't have their own user pool. |

The Explore plan at $149/month feels like the sweet spot for a lot of businesses. You get one study a month with up to 50 responses, which is a solid amount of feedback to guide your next sprint, and it includes most of the core AI features. The Scale plan is for the big players, with annual billing and all the enterprise bells and whistles you'd expect. The $5 per response fee for their panel is also pretty competitive in the market.
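To put rough numbers on that, here is a back-of-the-envelope sketch in Python. It uses only the figures from the table above. The function names are mine, and it assumes panel fees are simply added on top of the Explore subscription, which is my reading of the pricing rather than something confirmed by Wondering.

```python
# Figures taken from the pricing table in this review.
EXPLORE_MONTHLY_USD = 149
EXPLORE_MAX_RESPONSES = 50
PANEL_FEE_PER_RESPONSE_USD = 5


def monthly_cost(panel_responses: int) -> int:
    """Explore subscription plus panel fees for one month.

    Assumption: panel fees are billed on top of the subscription.
    """
    if not 0 <= panel_responses <= EXPLORE_MAX_RESPONSES:
        raise ValueError("Explore covers up to 50 responses per study")
    return EXPLORE_MONTHLY_USD + panel_responses * PANEL_FEE_PER_RESPONSE_USD


def cost_per_response(total_responses: int, panel_responses: int) -> float:
    """Effective cost of each response in a month with one study."""
    if not 0 < total_responses <= EXPLORE_MAX_RESPONSES:
        raise ValueError("total_responses must be between 1 and 50")
    if panel_responses > total_responses:
        raise ValueError("panel_responses cannot exceed total_responses")
    return monthly_cost(panel_responses) / total_responses


# A full 50-response study using only your own customers.
print(f"{cost_per_response(50, 0):.2f}")   # 2.98
# The same study recruited entirely from the Participant Panel.
print(f"{cost_per_response(50, 50):.2f}")  # 7.98
```

Under those assumptions, a fully panel-recruited study comes to $399 for the month, so where your participants come from moves the real price more than the headline subscription does.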

My Two Cents: The Good and The... Cautious

No tool is perfect, right? After digging in, here's my honest breakdown.

What I love is the sheer speed. The potential to go from an idea to actionable insights in a matter of days, not weeks, is incredibly powerful. For agile teams, that's everything. The ability to run research in multiple languages without hiring a global team is another massive plus. It democratizes access to international users.

"Before using Wondering it would take a long time to organize and analyze user studies. We can now test with more participants and go from prototype tests to our development sprint in a matter of days. It has helped us to run user research studies more regularly." - Senior Product Designer (from their site)

On the other hand, my main hesitation is the natural reliance on AI. AI is amazing at spotting patterns, but it can miss nuance, sarcasm, or the subtle hesitation in a user's voice that a human researcher would pick up on. I wouldn't use this to replace all deep, foundational research, but rather to augment it and handle the high-volume, directional testing. The price for the Explore plan is reasonable, but for very small startups or freelancers, $149 a month might still be a bit of a stretch. But then again, one bad feature launch could cost way more than that.

Frequently Asked Questions about Wondering

1. Is Wondering meant to completely replace human researchers?

I don't think so. It's a tool to make researchers more efficient. It's best for scaling research and getting quick feedback, but for deep, empathetic, strategic research, you'll still want a human at the helm. Think of it as a force multiplier, not a replacement.

2. How does the AI moderator actually work?

It guides users through a set of questions and tasks you define in the Study Builder. It can ask follow-up questions and prompt users to elaborate, creating a more dynamic experience than a simple unmoderated test. It captures video, audio, and screen recordings for you to review.

3. Can I use my own customers for studies?

Yes. Wondering allows you to bring your own participants by sharing a study link. You can also use their Participant Panel for an additional fee if you need to reach a new audience.

4. What integrations are available?

The site mentions prototype testing integrations, which typically means tools like Figma, Adobe XD, and Sketch. For specific details on other integrations, you'd likely need to check their documentation or talk to their sales team for the Scale plan.

5. Is the Explore plan at $149/month worth it?

In my opinion, if you're a team that needs user feedback but is constantly blocked by time and resources, then yes. The cost of building the wrong thing is far greater than $149. It allows you to build a continuous feedback loop into your development process, which is invaluable.

The Final Verdict on Wondering

So, is Wondering the future of user research? It's definitely a huge leap in the right direction. It's not magic, and it won't solve all your product problems for you. But it tackles one of the biggest bottlenecks in product development: the speed of learning. It transforms user research from a slow, periodic event into a fast, continuous habit.

For any team that feels they're flying blind too often, a tool like Wondering could be the superpower they need to build better products, faster. It's an exciting time to be in this field, and tools like this are a big reason why.