Let’s have a little chat. You and me. If you’ve spent any time in the AI or machine learning space lately, you know the feeling. It’s that little pang of dread you get when you look at your monthly cloud bill. AWS, GCP, Azure... they're fantastic, powerful platforms. But running GPUs on them? It can feel like you’re feeding hundred-dollar bills into a woodchipper just to keep the lights on.

It's the silent killer of so many brilliant AI projects. A slow, expensive bleed that drains your runway before you've even hit your stride. So, whenever a new player steps into the ring promising to slay this dragon, my ears perk up. And lately, the name I keep hearing is GPUX.

They swagger in with a truly wild claim: cutting your GPU costs by 50-90%. Yeah, you read that right. My first thought? Sure, and I've got a bridge to sell you. But I've been in this game long enough to know that sometimes, the wildest claims are the ones you have to investigate. So I did.

So, What Exactly is GPUX Anyway?

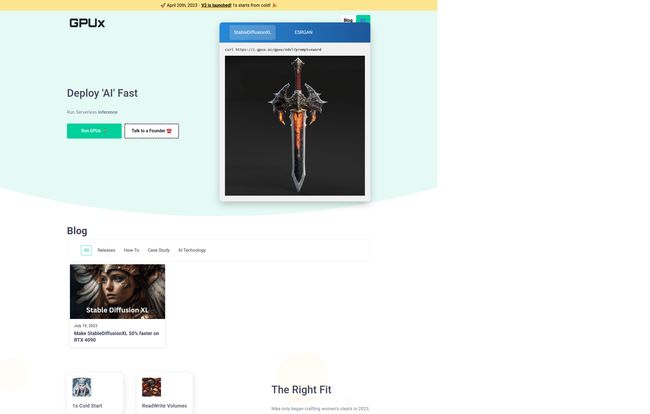

Strip away the buzzwords, and GPUX is a platform designed to do one thing really well: run your Dockerized applications on powerful GPUs, but without the cost of an always-on server. Think of it like Vercel or Netlify, but instead of deploying a simple website, you're deploying a heavy-duty, GPU-hungry AI model or application.

The core magic here is a concept called “serverless inference.”

The Serverless GPU Dream

For years, if you needed a GPU, you rented a whole machine and paid for every hour it was up. Didn't matter if it was doing real work for 30 seconds or 30 minutes of that hour, you paid for all of it. It's like renting an entire movie theater just to watch the trailers. Inefficient. Expensive.

Serverless flips that script. With a platform like GPUX, you package your code (say, a Stable Diffusion model) into a Docker container, upload it, and they handle the rest. When a request comes in to generate an image, they spin up the necessary hardware, run your code, and then spin it back down. You only pay for the actual compute time you use, down to the second. That idle time? It costs you nothing. This is the entire basis for their audacious cost-saving claim, and honestly, it’s a compelling one.
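To put some rough numbers on that idea, here's a back-of-the-napkin sketch in Python. Remember, GPUX doesn't publish pricing, so every rate and traffic figure below is a made-up assumption for illustration, not their actual pricing. The point is the shape of the math: always-on billing charges for uptime, per-second billing charges for busy time.

```python
SECONDS_PER_HOUR = 3600


def always_on_cost(hourly_rate, hours_up):
    """Cost of a GPU server billed for every hour it is running, busy or idle."""
    return hourly_rate * hours_up


def per_second_cost(per_second_rate, requests, seconds_per_request):
    """Cost when you are billed only for the seconds spent serving requests."""
    return per_second_rate * requests * seconds_per_request


def break_even_utilization(hourly_rate, per_second_rate):
    """Fraction of the time the GPU must be busy before always-on becomes cheaper."""
    return (hourly_rate / SECONDS_PER_HOUR) / per_second_rate


if __name__ == "__main__":
    # All numbers below are hypothetical, chosen only to illustrate the comparison.
    hourly_rate = 1.00        # $/hour for an always-on GPU instance (assumed)
    per_second_rate = 0.0005  # $/second of serverless GPU time (assumed)
    hours_in_month = 730      # roughly one month of uptime
    requests = 50_000         # inference requests per month (assumed)
    seconds_per_request = 4   # GPU seconds per request (assumed)

    fixed = always_on_cost(hourly_rate, hours_in_month)
    metered = per_second_cost(per_second_rate, requests, seconds_per_request)
    savings = 1 - metered / fixed
    threshold = break_even_utilization(hourly_rate, per_second_rate)

    print(f"Always-on:  ${fixed:.2f}/month")
    print(f"Per-second: ${metered:.2f}/month")
    print(f"Savings:    {savings:.0%}")
    print(f"Break-even utilization: {threshold:.0%}")
```

With these invented inputs, the always-on box comes to $730 a month and the metered version to about $100, a saving of roughly 86%. Notice the catch, though: the per-second rate in this sketch is higher than the hourly rate divided by 3,600, so once your GPU is busy more than about 56% of the time, the always-on server wins. Serverless pays off when traffic is spiky or sparse, which is exactly the situation most early-stage projects are in.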

Running Your Favorite AI Toys and Tools

Looking at their site, it’s clear they know their audience. They prominently feature support for popular models that developers and creators are actually using right now. We're talking about things like:

- Stable Diffusion XL: The go-to for high-quality image generation.

- Whisper: OpenAI’s incredible speech-to-text model.

- ESRGAN: For upscaling images with frankly shocking clarity.

- Alpaca-LM: A popular open-source language model.

This isn't just some generic compute platform; it's being positioned for the modern AI stack. And the way they enable this is through the most beautiful, frustrating, and powerful tool in a developer's arsenal: Docker.

The Double-Edged Sword of Docker

GPUX's entire philosophy seems to be, “If you can Dockerize it, you can run it.” In my book, this is a massive plus. It gives you incredible flexibility. You're not locked into some proprietary framework or a limited set of pre-approved models. You control your environment. You manage your dependencies. You can bring your own weird, wonderful, custom-trained model to the party as long as it’s wrapped in a container. This is how you get private model deployment, a feature that should make businesses' ears perk up.

But—and it’s a big but—you have to be comfortable with Docker. If wrestling with Dockerfiles and container registries gives you hives, there’s going to be a learning curve. It’s a prerequisite, not a suggestion.
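If you're wondering what "Dockerize it" looks like in practice, the thing inside the container is usually just a small web service that accepts a request, runs the model, and returns the result. Here's a minimal sketch using only the Python standard library. To be clear about the assumptions: I don't know what port, route, or payload format GPUX expects, so port 8080, the JSON `prompt` field, and the placeholder `run_model` function are all hypothetical stand-ins, not their documented contract.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Tuple


def run_model(prompt: str) -> dict:
    """Placeholder for real inference; swap in your own model call here."""
    return {"prompt": prompt, "output": prompt.upper()}


def handle_payload(raw: bytes) -> Tuple[int, dict]:
    """Validate a raw request body and return (HTTP status, JSON-able response)."""
    try:
        payload = json.loads(raw or b"{}")
    except ValueError:  # covers malformed JSON and undecodable bytes
        return 400, {"error": "body must be valid JSON"}
    if not isinstance(payload, dict) or "prompt" not in payload:
        return 400, {"error": "expected a JSON object with a 'prompt' field"}
    return 200, run_model(str(payload["prompt"]))


class InferenceHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        # Read exactly the number of bytes the client said it sent.
        length = int(self.headers.get("Content-Length", 0))
        status, result = handle_payload(self.rfile.read(length))
        body = json.dumps(result).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port: int = 8080) -> None:
    """Start the server; the container's start command would call this (port is assumed)."""
    HTTPServer(("0.0.0.0", port), InferenceHandler).serve_forever()
```

Your Dockerfile would copy this file in, install whatever your real model needs, and call `serve()` as the start command. The nice part of this design is that the request handling stays the same whether `run_model` wraps Whisper, Stable Diffusion, or something you trained yourself.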

What I Really Like About This Platform

Okay, let's break it down. The main draw is obvious: the potential for drastic cost savings. For any startup or indie hacker, this isn't just a nice-to-have, it’s a lifeline. The ability to offer an AI-powered feature without taking out a second mortgage is a pretty big deal.

I'm also a huge fan of the private deployment angle. A lot of companies are hesitant to upload their proprietary models and data to a third-party service. By letting you run your own private Docker containers, GPUX offers a middle ground between building your own expensive infrastructure and handing your secret sauce over to a giant corporation.

The focus on real-world models like Stable Diffusion and Whisper shows they have their finger on the pulse of the AI community. They're not stuck in academic theory; they're building for what people are creating right now.

The Things That Give Me Pause

Now, it can't all be sunshine and cheap GPUs. There are a few things that make the seasoned skeptic in me raise an eyebrow. First and foremost...

Where is the pricing page?

I looked. I looked again. I even found a 404 page, which was... an experience. But a clear, public pricing table? Nowhere to be found. This is a classic early-stage startup move. It usually means pricing is either still in flux or they want you to get on a sales call. I get it, but in 2024, I want transparency. I want to see the numbers without having to talk to a human. This is probably my biggest gripe.

There's also a bit of a black box feel to the underlying tech. They mention P2P and other concepts, but I’d love to see a more detailed whitepaper or some technical blog posts. How are they achieving this? What kind of GPUs are they running? What are the cold-start times really like? The promise is huge, but the technical details are a bit sparse for my liking.
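Until someone publishes real numbers, the cold-start question is one you can answer for yourself on any serverless platform. Here's a small timing sketch: it treats the first call as the (probably) cold one and compares it to the median of the calls that follow. The `make_fake_endpoint` helper is purely a stand-in I made up so the script runs on its own; it simulates delays and says nothing about GPUX's actual performance.

```python
import statistics
import time
from typing import Callable, Dict


def measure_cold_vs_warm(call: Callable[[], object], warm_calls: int = 5) -> Dict[str, float]:
    """Time the first call (likely cold) and the median of the following calls (likely warm)."""
    if warm_calls < 1:
        raise ValueError("warm_calls must be at least 1")

    start = time.perf_counter()
    call()
    cold = time.perf_counter() - start

    warm = []
    for _ in range(warm_calls):
        start = time.perf_counter()
        call()
        warm.append(time.perf_counter() - start)

    warm_median = statistics.median(warm)
    return {
        "cold_s": cold,
        "warm_median_s": warm_median,
        "cold_start_penalty_s": cold - warm_median,
    }


def make_fake_endpoint(cold_delay: float = 0.2, warm_delay: float = 0.01) -> Callable[[], None]:
    """Hypothetical stand-in for a deployed endpoint: slow on the first call, fast afterwards."""
    state = {"warm": False}

    def call() -> None:
        time.sleep(warm_delay if state["warm"] else cold_delay)
        state["warm"] = True

    return call


if __name__ == "__main__":
    result = measure_cold_vs_warm(make_fake_endpoint())
    for name, seconds in result.items():
        print(f"{name}: {seconds:.3f}")
```

To use it for real, replace the fake endpoint with a function that sends a request to your own deployment, and run it after the service has sat idle long enough to scale back down. If the cold-start penalty is several seconds on a request that only needs a second of GPU time, that matters for both user experience and your bill.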

So, Who is This Really For?

After digging in, a clear picture of the ideal GPUX user emerges. It's not for the absolute beginner who's never touched a command line. It’s for:

- Indie Hackers & Startups: Anyone building an AI-powered app on a tight budget. The serverless model is a perfect fit.

- Developers & ML Engineers: People who are comfortable with Docker and want to deploy models without becoming full-time infrastructure engineers.

- Businesses with Custom Models: Companies that have developed their own AI models and need a secure, scalable, and cost-effective way to serve them for inference.

It's an intriguing alternative for anyone who's looked at the complexity and cost of something like AWS SageMaker and thought, “There has to be a simpler way.”

Frequently Asked Questions about GPUX

How much does GPUX actually cost?

That's the million-dollar question! As of my review, GPUX doesn't have a public pricing page. You'll likely need to contact their team directly to get details. This is a major point of consideration.

What is serverless GPU inference in simple terms?

It means you only pay for the time your code is actually running on a GPU. Instead of renting a GPU by the hour and letting it sit idle, the platform activates the GPU for your task and then shuts it off. You pay per second of use, not per hour of availability.

Can I run my own custom-trained AI models on GPUX?

Yes. This is one of its biggest strengths. Because it's built around Docker, you can package any model or application into a container and run it on their platform, which is ideal for private or proprietary models.

Is GPUX a direct replacement for AWS, GCP, or Azure?

Not for everything, no. The big cloud providers offer a massive suite of services. GPUX is a specialized tool focused on being a highly cost-effective platform for GPU-based inference. It competes with a slice of what they offer, not the whole pie.

What skills do I need to use GPUX effectively?

A solid understanding of Docker is non-negotiable. You'll need to be comfortable creating and managing Docker containers to package your applications for their platform.

My Final Verdict on GPUX

So, is GPUX the revolutionary cost-slayer it claims to be? The potential is absolutely there. The concept of serverless GPU for Dockerized apps is not just smart; it's exactly what a huge chunk of the market needs right now. It's a powerful proposition.

However, it's still a bit of an enigma. The lack of transparent pricing and deep technical documentation holds it back from being an unqualified recommendation. It feels like a platform with immense promise that's still finding its footing.

My advice? Keep GPUX on your radar. If you're feeling the burn of high GPU costs and you're comfortable with Docker, reach out to their team. It might just be the solution you've been waiting for. It’s one I’ll be watching very, very closely.
