The world of Large Language Models (LLMs) is getting a little… crowded. It feels like every other week there’s a new groundbreaking model from Google, Anthropic, a stealth startup, or OpenAI itself. And as a developer or a product manager, trying to keep up is one thing. Trying to integrate them all is a whole other beast.

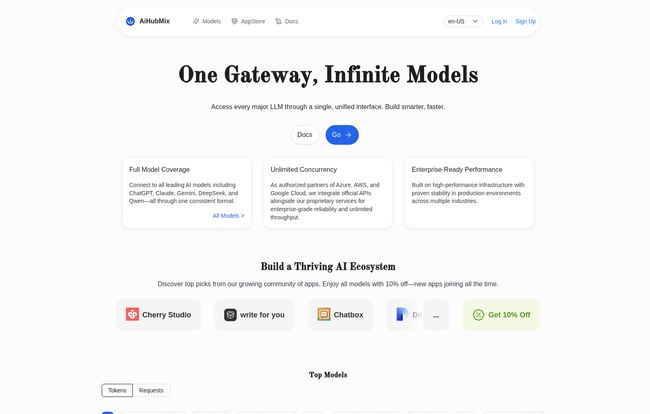

I’ve spent more nights than I’d like to admit wrestling with different SDKs, authentication methods, and documentation styles. One model wants a Bearer token, another needs a specific header, and a third has a response format that just breaks everything. It's a mess. A beautiful, innovative, chaotic mess. So when I stumbled upon a tool called AiHubMix, my curiosity was definitely piqued. The tagline on their site is “One Gateway, Infinite Models.” A bold claim. But could it actually be the universal translator for LLMs we’ve all been dreaming of?

So, What Exactly is AiHubMix?

Think of AiHubMix as the ultimate travel adapter for your AI projects. You know, that one little cube you have that lets you plug your US-based laptop into a socket in London or Tokyo? AiHubMix is that, but for code. It’s an LLM API router. In plainer English, it takes a whole bunch of different AI models—we’re talking the big guns like OpenAI’s GPT series, Google’s Gemini, Anthropic’s Claude, and even others like Llama and DeepSeek—and lets you talk to all of them using a single, unified API. And which API did they choose as the standard? The one most of us are already familiar with: the OpenAI API.

This is a pretty smart move. It means you can write your code once as if you're calling GPT-4, and then with a simple change of a parameter, you can swap it out for Claude 3 Sonnet or Gemini 1.5 Pro. No re-writing authentication. No learning a new SDK. It just… works. That’s the promise, anyway.
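To make the "change one parameter" idea concrete, here is a minimal sketch of an OpenAI-style chat completion payload in which only the `model` field changes. The helper name `build_chat_request` and the model identifier strings are assumptions for illustration; check the gateway's model list for the exact names it accepts.

```python
import json


def build_chat_request(model: str, user_message: str) -> dict:
    """Build an OpenAI-style chat completion payload.

    Only `model` changes when switching providers behind the gateway.
    """
    return {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
    }


# Hypothetical model identifiers, used here for illustration only.
for model in ("gpt-4o", "claude-3-sonnet", "gemini-1.5-pro"):
    payload = build_chat_request(model, "Summarize this ticket in one sentence.")
    print(json.dumps(payload))
```

Everything except the `model` string stays identical across the three payloads, which is the whole appeal of a unified API.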

Why a Unified API is Such a Big Deal

I remember a project last year where we wanted to A/B test a new feature using a model from Anthropic against our existing OpenAI implementation. The idea was simple, but the execution was a headache. It took our team the better part of a week to build out the second integration, handle the new error codes, and get everything running in parallel. It was a chore. A tool like AiHubMix would have turned that week-long task into an afternoon’s work.

The real power here is agility. You're no longer locked into one ecosystem. Did a new, cheaper, faster model just drop that you want to test? No problem. Want to route simple queries to a less powerful model to save costs and complex ones to a powerhouse like GPT-4o? This architecture makes that kind of intelligent routing possible. It transforms your AI stack from a rigid structure into something fluid and adaptable.
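The cost-based routing described above can be sketched in a few lines. This is a deliberately crude heuristic, not anything AiHubMix provides: the function `pick_model`, the keyword list, the length threshold, and the model names are all assumptions you would replace with your own rules.

```python
def pick_model(prompt: str, long_prompt_chars: int = 500) -> str:
    """Pick a model name from a crude complexity heuristic.

    Hypothetical rule: long prompts, or prompts containing "hard task"
    keywords, go to the stronger model; everything else goes to the
    cheaper one.
    """
    hard_keywords = ("analyze", "prove", "refactor", "step by step")
    text = prompt.lower()
    is_complex = len(prompt) > long_prompt_chars or any(
        keyword in text for keyword in hard_keywords
    )
    # Model names are placeholders; substitute whatever the gateway lists.
    return "gpt-4o" if is_complex else "gpt-4o-mini"


print(pick_model("What is the capital of France?"))
print(pick_model("Refactor this module and explain the changes step by step."))
```

Because every model sits behind the same API shape, the router only has to return a string. The rest of the request code does not need to know which provider ends up serving it.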

Diving into the Features That Matter

A unified API is great, but the devil is in the details. Let's look at what AiHubMix is really bringing to the table.

The All-Star Roster of Models

Looking at their dashboard, I was genuinely impressed with the model list. It wasn't just a few greatest hits. They have a ton of variants, including the very latest models like claude-3.5-sonnet-20240620 and gpt-4o. This isn't some dusty library of old tech; it's a constantly updated roster. Having access to models from DeepSeek, Llama, and even Chinese providers like Alibaba's Qwen and Moonshot AI (月之暗面) all in one place is a massive advantage for anyone looking to find the absolute best model for a specific task, not just the most popular one.

Unlimited Concurrency: The Unsung Hero

This one might fly under the radar, but it's huge. Unlimited concurrency. Most API providers cap usage with rate limits (a maximum number of requests or tokens per minute) and often restrict how many requests you can have in flight at once, so that no single customer overwhelms their systems. AiHubMix claims to remove that bottleneck. It’s like going from a single-lane country road to an eight-lane superhighway. For applications with spiky traffic or enterprise-level needs, this could be the single most important feature, helping your service stay responsive when a lot of users hit it at once. Treat it as a claim to verify under your own load, though, since the upstream model providers still have finite capacity.
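Even if the gateway does not throttle you, it is usually worth controlling how many requests your own client fires at once. Here is a minimal sketch of that pattern using `asyncio.Semaphore`. The function `fake_llm_call` is a stand-in that only sleeps, so no real request is made, and the batch sizes are arbitrary assumptions.

```python
import asyncio


async def fake_llm_call(i: int) -> str:
    """Stand-in for a network call to the gateway (no real request is made)."""
    await asyncio.sleep(0.01)
    return f"response-{i}"


async def run_batch(n_requests: int, max_in_flight: int) -> list[str]:
    """Run n_requests calls with at most max_in_flight running at once."""
    semaphore = asyncio.Semaphore(max_in_flight)

    async def guarded(i: int) -> str:
        async with semaphore:
            return await fake_llm_call(i)

    # gather returns results in the same order as the inputs.
    return await asyncio.gather(*(guarded(i) for i in range(n_requests)))


results = asyncio.run(run_batch(n_requests=20, max_in_flight=5))
print(len(results), results[0], results[-1])
```

With a gateway that really has no concurrency ceiling, you could raise `max_in_flight` and watch where latency or errors start to climb, which is a cheap way to test the claim yourself.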

The OpenAI API Proxy Functionality

I touched on this before, but it's worth repeating. By acting as an OpenAI API proxy, AiHubMix makes integration a breeze. So much of the existing open-source software, from frameworks like LangChain to countless GitHub projects, is built to speak “OpenAI.” AiHubMix leverages this, lowering the barrier to entry to almost zero for anyone with existing code. You basically just change the base URL, and you’re off to the races.
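To show how small the "change the base URL" step is, here is a sketch that prepares the same OpenAI-style request against two hosts using only the standard library. Nothing is actually sent. The gateway URL in `GATEWAY_BASE_URL` is an assumption, as are the helper name `make_request` and the placeholder API key, so confirm the real endpoint in AiHubMix's documentation.

```python
import json
import urllib.request

OPENAI_BASE_URL = "https://api.openai.com/v1"
# Assumed gateway base URL; check AiHubMix's documentation for the real value.
GATEWAY_BASE_URL = "https://aihubmix.com/v1"


def make_request(base_url: str, api_key: str, payload: dict) -> urllib.request.Request:
    """Prepare (but do not send) an OpenAI-style chat completion request."""
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


payload = {"model": "gpt-4o", "messages": [{"role": "user", "content": "Hello"}]}
direct = make_request(OPENAI_BASE_URL, "sk-placeholder", payload)
via_gateway = make_request(GATEWAY_BASE_URL, "sk-placeholder", payload)

# Same body and headers; only the host differs.
print(direct.full_url)
print(via_gateway.full_url)
```

If you use the official OpenAI Python SDK instead, the equivalent change is passing `base_url` (and the gateway's key as `api_key`) when constructing the client.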

The Not-So-Shiny Parts: A Realistic Look

Alright, no tool is perfect. As an SEO and tech guy, I’m naturally skeptical. It's important to look at the trade-offs. The main advantage of AiHubMix—that it sits in the middle of your requests—is also its main potential weakness. You're introducing a middleman, and that can mean a few things.

First, there's the potential for a tiny bit of extra latency. Your API call has to go from your server to AiHubMix, and then to Google or OpenAI. While this is likely measured in milliseconds, for real-time applications, every millisecond counts. Second, you’re placing your trust in another service. If AiHubMix has an outage, your AI features go down with it. That’s a risk you have to weigh, just like with any third-party dependency.
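One common way to soften the outage risk is a fallback path, and timing each call gives you real latency numbers instead of guesses. The sketch below is a generic pattern, not an AiHubMix feature: `call_with_fallback`, `gateway_call`, and `direct_call` are hypothetical names, and the two client functions are stubs that simulate an outage rather than making real requests.

```python
import time
from typing import Callable


def call_with_fallback(
    primary: Callable[[str], str],
    fallback: Callable[[str], str],
    prompt: str,
) -> tuple[str, str, float]:
    """Try the primary route; on any exception, use the fallback.

    Returns (route_used, response_text, elapsed_seconds).
    """
    start = time.perf_counter()
    try:
        text, route = primary(prompt), "primary"
    except Exception:
        text, route = fallback(prompt), "fallback"
    return route, text, time.perf_counter() - start


# Stand-ins for real clients: the gateway is simulated as being down.
def gateway_call(prompt: str) -> str:
    raise ConnectionError("simulated gateway outage")


def direct_call(prompt: str) -> str:
    return f"echo: {prompt}"


route, text, elapsed = call_with_fallback(gateway_call, direct_call, "ping")
print(route, text)
```

The catch is that a direct fallback means keeping at least one provider account and key around, which gives back some of the single-key convenience the gateway offers.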

What About the Price Tag?

This is where things get a bit weird. When I navigated to find the pricing details... I hit a “page not exist” error. Whoops. This could mean a few things. They might be a very new service still figuring out their pricing tiers, or they might be focused on enterprise clients with a “contact us for a quote” model. It’s a bit of a letdown, as transparent pricing is always a plus. I'd imagine the model would be pay-as-you-go, where you pay the base cost of whatever model you use plus a small premium for the routing service AiHubMix provides. But for now, the cost remains a question mark.
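If the guess about pay-as-you-go pricing is right, the arithmetic would look something like the sketch below. To be clear, this is only an illustration of the "base cost plus premium" idea: the function `estimate_cost` is hypothetical, and every number is a made-up placeholder, not a price from AiHubMix or any model provider.

```python
def estimate_cost(tokens: int, price_per_million: float, markup: float) -> float:
    """Cost = provider price for the tokens, plus a fractional gateway markup."""
    base = tokens / 1_000_000 * price_per_million
    return base * (1 + markup)


# All numbers are made-up placeholders, not AiHubMix or provider prices.
print(round(estimate_cost(tokens=2_000_000, price_per_million=5.0, markup=0.10), 2))
```

Once real pricing is published, plugging the actual per-token rates and any service fee into a helper like this makes it easy to compare the gateway against going direct.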

Who is AiHubMix Actually For?

So, who should be jumping on this? In my opinion, the sweet spot is for startups and dev teams focused on rapid prototyping. The ability to swap models on the fly to find the perfect fit for a new feature is invaluable when you're trying to move fast. It's also perfect for developers adding AI features to existing applications who don't want the headache of multiple, complex integrations.

Who might want to hesitate? Perhaps a massive, hyper-scale company where direct-to-source API calls are mandated for security or latency reasons. But for the 90% of us in between, the convenience factor is incredibly compelling.

My Final Thoughts on AiHubMix

Despite the mysterious pricing page, I’m genuinely excited about AiHubMix. It solves a real, tangible problem that I and many other developers face every day. The fragmentation of the AI model market is only going to continue, and we need tools like this to act as the connective tissue. It’s not just a router; it’s an enabler of choice and flexibility.

It turns the daunting task of model selection and integration into a simple, elegant process. For that alone, it's a tool I'll be keeping a very close eye on. The promise of “One Gateway, Infinite Models” might not be just marketing fluff after all.

Frequently Asked Questions

- What is AiHubMix in simple terms?

  It's a universal adapter for AI models. It lets you use one single API (the OpenAI standard) to access dozens of different LLMs from providers like Google, Anthropic, and others, simplifying code and making it easy to switch between models.

- Do I still need accounts with OpenAI, Google, etc.?

  No, that's the beauty of it. AiHubMix handles the connections to the upstream models. You only need an account and API key from AiHubMix itself, which simplifies key management significantly.

- Is AiHubMix free to use?

  The pricing information is not currently available on their website. It's likely a usage-based model where you pay for the AI models you consume, possibly with a small service fee. You'll have to contact them for specifics.

- What happens if a model I'm using is updated?

  AiHubMix appears to stay on top of the latest model releases. When a new version like GPT-4o or a new Claude model is released, they add it to their platform. You can then switch to the new model by simply changing the model identifier string in your API call.

- Is there a risk of increased latency?

  Yes, there is a potential for a small amount of added latency because your request has to pass through AiHubMix's servers before reaching the final model's API. For most applications, this will be negligible, but for highly time-sensitive tasks, it's a factor to consider.

Conclusion

In a rapidly expanding AI universe, tools that provide simplicity and interoperability are worth their weight in gold. AiHubMix steps into this role beautifully, tackling the very real pain of API fragmentation. While some questions around pricing and reliance remain, its core value proposition is undeniable. If you're a developer who wants to experiment, iterate, and build with the best AI has to offer without getting tangled in a web of APIs, AiHubMix is absolutely worth a look.
