We've all been there. You're deep in a fascinating conversation with an AI chatbot. You're brainstorming, solving problems, maybe even getting some half-decent life advice. You feel like you're getting somewhere. Then you close the tab, come back an hour later to pick up where you left off, and... poof. Nothing. The AI stares back at you with a blank digital slate, asking, "How can I help you today?" as if it's never seen you before in its life.

It's frustrating, right? It's like having a brilliant assistant who has a severe case of short-term memory loss. This "stateless" nature is one of the biggest hurdles holding AI back from being truly, consistently useful. We're building these incredibly powerful language models, but we're forcing them to start from scratch with every single interaction. It’s a huge waste of potential.

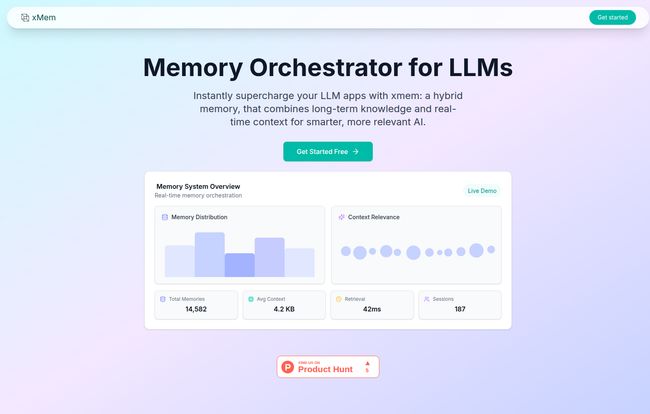

For years, developers have been cobbling together solutions with complex context windows and messy databases. But what if there was a cleaner way? What if you could just… give your AI a proper memory? That's the promise of a tool I've been looking at recently called xmem, and frankly, it's got me pretty excited.

So, What Exactly is this xmem Thing?

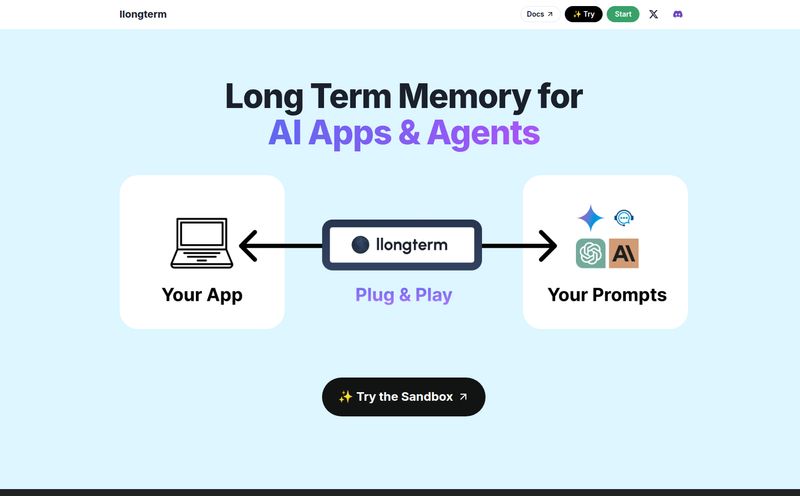

Think of xmem as a central nervous system for your AI application. It’s a dedicated Memory Orchestrator for LLMs. That sounds a bit jargony, I know, but the concept is simple. It sits between your user, your application, and the Large Language Model (like GPT-4, Claude, etc.) and its sole job is to remember things. All the things.

It acts like a digital hippocampus, creating and retrieving memories to make every conversation smarter and more personal. It's not just about remembering the last thing a user said; it's about building a rich, long-term understanding of context, documents, and user history. This is the stuff that separates a clunky bot from a genuinely helpful AI partner.

The Core Problem xmem Solves So Elegantly

The headline on their site says it all:

"LLMs forget. Your users notice."

Oof. That hits home. This isn't just a minor inconvenience; it's a fundamental flaw in the user experience of many AI products today. Imagine a customer support bot that asks for your order number five times in one conversation. Or a personal coding assistant that forgets the programming language you’re working in. It completely breaks the flow.

xmem is built to be the single source of truth for your AI's knowledge. It ensures the AI is always relevant, accurate, and personal because it has access to a persistent memory. No more starting over. No more lost context. Just continuous, intelligent conversation.

How xmem Actually Builds an AI Brain

This is where it gets really interesting for us tech folks. It’s not just one big memory bucket. xmem intelligently separates memory into a few key types, which is what makes it so powerful.

Long-Term Knowledge and Session Context

First, you've got Long-Term Memory. This is the big stuff: your company’s entire knowledge base, all your product docs, past user conversations, everything. xmem uses vector search to store this library and quickly retrieve the pieces relevant to a given query.

Then you have Session Memory, which is the short-term, in-the-moment context of the current chat. It tracks recent messages to maintain conversational flow and relevance. By combining the two, the AI can draw on both the accumulated history and what you're talking about right now.
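To make the two memory types concrete, here is a minimal sketch in plain Python. It is not xmem's SDK: the `LongTermMemory` and `SessionMemory` classes are hypothetical names, and the `embed` function is a toy bag-of-words stand-in for a real embedding model. It only illustrates the idea of ranking stored documents by similarity while keeping a small rolling window of recent messages.

```python
import math
from collections import Counter, deque


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class LongTermMemory:
    """Hypothetical long-term store: keeps every document and ranks by similarity."""

    def __init__(self) -> None:
        self._items: list[tuple[str, Counter]] = []

    def add(self, text: str) -> None:
        self._items.append((text, embed(text)))

    def search(self, query: str, k: int = 2) -> list[str]:
        q = embed(query)
        ranked = sorted(self._items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


class SessionMemory:
    """Hypothetical short-term store: only the most recent messages survive."""

    def __init__(self, max_messages: int = 4) -> None:
        self._messages: deque[str] = deque(maxlen=max_messages)

    def add(self, message: str) -> None:
        self._messages.append(message)

    def recent(self) -> list[str]:
        return list(self._messages)


long_term = LongTermMemory()
long_term.add("Refunds are processed within five business days.")
long_term.add("The Pro plan includes priority support.")
long_term.add("Our office is closed on public holidays.")

session = SessionMemory(max_messages=2)
session.add("user: Hi, I bought the Pro plan last week.")
session.add("user: How long do refunds take?")

# Combine one retrieved fact with the rolling window of recent messages.
facts = long_term.search("How long do refunds take?", k=1)
context = facts + session.recent()
```

A real setup would swap the toy `embed` for an actual embedding model and the in-memory list for a vector database, but the split between "search everything" and "remember the last few turns" stays the same.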

The Magic of RAG Orchestration

If you're in the AI space, you've heard of RAG, or Retrieval-Augmented Generation. It's the technique of fetching relevant information before you ask the LLM to generate a response, so the answer is grounded in retrieved facts rather than only in what the model memorized during training. The problem is, tuning RAG can be a real pain. You have to manually figure out what context to pull and when.

xmem automates this entirely with what they call RAG Orchestration. It automatically figures out what context is needed for any given LLM call and fetches it. This means developers can spend less time on tedious data plumbing and more time building cool features. This is a huge win for productivity.
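For a feel of the plumbing that kind of orchestration takes off your plate, here is a rough sketch of a hand-rolled version. Everything in it is an assumption made for illustration, not xmem's implementation: `keyword_retriever` is a toy word-overlap ranker, `orchestrate` is a hypothetical helper, and the character budget is a crude stand-in for a token limit.

```python
from typing import Callable

Retriever = Callable[[str], list[str]]


def keyword_retriever(docs: list[str]) -> Retriever:
    """Build a toy retriever that ranks docs by shared words with the query."""

    def retrieve(query: str) -> list[str]:
        words = set(query.lower().split())
        scored = [(len(words & set(d.lower().split())), d) for d in docs]
        # Keep only docs that share at least one word, best match first.
        return [d for score, d in sorted(scored, key=lambda s: s[0], reverse=True) if score > 0]

    return retrieve


def orchestrate(question: str, retrieve: Retriever, history: list[str], max_chars: int = 500) -> str:
    """Fetch context for the question and assemble one prompt under a size budget."""
    parts = ["Context:"]
    used = 0
    for snippet in retrieve(question):
        if used + len(snippet) > max_chars:
            break  # stop once the context budget is spent
        parts.append(f"- {snippet}")
        used += len(snippet)
    parts.append("Conversation so far:")
    parts.extend(history)
    parts.append(f"Question: {question}")
    return "\n".join(parts)


docs = [
    "Python 3.12 is the supported runtime.",
    "Deployments run on Tuesdays.",
]
prompt = orchestrate(
    "which python runtime is supported",
    keyword_retriever(docs),
    history=["user: I am setting up a new service."],
)
print(prompt)
```

Even in this toy form you can see the decisions that pile up: how to rank, how much to include, and where to put it in the prompt. Those are the knobs an orchestration layer is meant to handle for you.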

My Favorite Things About xmem

Look, new tools pop up every day, but a few things about xmem really stand out to me from a developer's perspective.

First and foremost, it's Open Source First. This is massive. It means you aren't locked into a proprietary ecosystem. You can use xmem with any LLM, any vector database (like Pinecone or Chroma), and any framework you prefer. This flexibility is gold in today's fast-moving AI world.
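One common way to get that kind of flexibility is to code against small interfaces rather than a specific vendor. The sketch below shows the pattern with Python's `typing.Protocol`. The `LLM` and `VectorStore` interfaces and the two stand-in classes are hypothetical and are not taken from xmem's codebase.

```python
from typing import Protocol


class LLM(Protocol):
    """Anything that can turn a prompt into text (hypothetical interface)."""

    def complete(self, prompt: str) -> str: ...


class VectorStore(Protocol):
    """Anything that can return the k best matches for a query (hypothetical interface)."""

    def search(self, query: str, k: int) -> list[str]: ...


class EchoLLM:
    """Stand-in model: reports the prompt size instead of calling a real API."""

    def complete(self, prompt: str) -> str:
        return f"(answer based on {len(prompt)} prompt characters)"


class ListStore:
    """Stand-in store: returns the first k items and ignores the query."""

    def __init__(self, items: list[str]) -> None:
        self._items = items

    def search(self, query: str, k: int) -> list[str]:
        return self._items[:k]


def answer(question: str, llm: LLM, store: VectorStore) -> str:
    """Depends only on the two interfaces, so either backend can be swapped."""
    context = "\n".join(store.search(question, k=2))
    return llm.complete(f"{context}\n\n{question}")


reply = answer("hi", EchoLLM(), ListStore(["a", "b", "c"]))
```

Swapping in a different model or database then means writing one small adapter class, with no changes to `answer` itself.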

Second, the promise of Effortless Integration seems legit. The site shows a simple Python SDK, and the code snippet to get started looks incredibly straightforward. This isn't some behemoth enterprise software that requires a team of consultants to install. It feels like something a small startup or even a solo dev could get running over a weekend.

And of course, there's security. Having Role-Based Access Control built-in is critical. When you're centralizing all your company knowledge, you need granular control over who can see what. It’s a non-negotiable feature for any serious business application.
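The core idea behind role-based filtering fits in a few lines. This is a simplified sketch under my own assumptions, not xmem's access-control model: the `Memory` dataclass and `visible_memories` function are hypothetical, and a real system would enforce permissions inside the retrieval layer rather than in application code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Memory:
    text: str
    allowed_roles: frozenset[str]  # roles permitted to read this memory


def visible_memories(memories: list[Memory], role: str) -> list[str]:
    """Return only the memory texts the given role is permitted to read."""
    return [m.text for m in memories if role in m.allowed_roles]


store = [
    Memory("Public FAQ: password resets take five minutes.", frozenset({"support", "finance"})),
    Memory("Q3 revenue forecast draft.", frozenset({"finance"})),
]

support_view = visible_memories(store, "support")  # sees only the FAQ entry
finance_view = visible_memories(store, "finance")  # sees both entries
```

The important design point is that filtering happens before anything reaches the LLM, so a restricted memory never ends up in a prompt for a user who shouldn't see it.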

What about the Price Tag?

This is the million-dollar question, isn't it? As of writing this, there isn't a public pricing page on the xmem website. This usually means one of a few things: they might be focused on custom enterprise plans, they could have a generous free tier for the open-source version, or they're still in an early launch phase. My advice? Head over to their site and get in touch. Given its open-source nature, I'm optimistic there will be accessible options for projects of all sizes.

| The Good Stuff | Things to Consider |
|---|---|
| Creates a central, single source of truth for your AI's knowledge. | Like any new tool, there will likely be a bit of a learning curve to get started. |
| Open-source philosophy means amazing flexibility with different LLMs and databases. | Requires some initial setup and potentially migrating existing data. |
| Automated RAG orchestration saves a ton of development time. | Relies on API integrations, so you'll need to manage those connections. |
| Boosts collaboration and productivity by ensuring everyone (and every bot) works from the same information. | It's a newer platform, so the community support system is still growing. |

My Final Take on Giving Your AI a Memory

I’ve always felt that memory is the missing link for AI. We've cracked the language part, but without memory, it's just a clever parrot. Tools like xmem are building that missing link. They're turning our forgetful digital assistants into true, stateful partners that can learn and grow with us.

Is it a silver bullet that will instantly solve all your AI development woes? Probably not. You'll still need to put in the work to integrate it properly. But it feels like a genuinely foundational piece of the modern AI stack. If you’re building any kind of application that involves back-and-forth conversation with an AI, you should absolutely have xmem on your radar. I, for one, am seriously impressed and can't wait to see what people build with it.

Frequently Asked Questions

What is xmem in simple terms?

xmem is like a brain or a long-term memory for your AI. It helps your AI application remember past conversations, documents, and user details so it can have smarter, more personalized, and more helpful interactions.

Does xmem work with any LLM, like GPT-4 or Claude?

Yes. A major advantage of xmem is that it's designed to be model-agnostic. Because it's open-source, you can integrate it with pretty much any Large Language Model (LLM) or vector database you choose.

Is xmem difficult to integrate into an existing project?

It's designed for easy integration. It provides a simple Python SDK, which should make it relatively straightforward for developers to add to new or existing applications without a massive overhaul.

What is RAG Orchestration and why is it important?

RAG (Retrieval-Augmented Generation) is a technique where the AI retrieves relevant facts from a knowledge base before answering a question. xmem's RAG Orchestration automates this process, saving developers a lot of time and making the AI's answers much more accurate and context-aware.

Is xmem just for big companies?

While it has enterprise-grade features like role-based access control, its open-source nature and easy integration make it suitable for projects of all sizes—from solo developers and startups to large corporations.

How does xmem handle data security?

xmem includes Role-Based Access Control (RBAC), which is a critical security feature. This allows you to set granular permissions, ensuring that users and AI agents can only access the information they are authorized to see.