If you've ever tried to take a brilliant AI model from your Jupyter notebook and push it into a live, scalable production environment, you know the pain. It’s a journey, and not the fun, road-trip-with-friends kind. It's more like a trek through a jungle of Dockerfiles, YAML configurations, and that dreaded Kubernetes cluster that just won't behave.

I've been in the SEO and traffic game for years, and I’ve watched the shift. It's not just about keywords anymore; it's about leveraging data, about building smart systems. And the bottleneck is almost always the same: deployment. The gap between a data scientist saying "it works on my machine!" and an engineer saying "it's live and stable" is a chasm filled with sleepless nights and way too much coffee.

So when a tool like UbiOps comes along, promising to be a bridge over that chasm, my professional curiosity gets piqued. They talk a big game about simplifying AI production and letting you deploy models instantly. But as we all know, in the world of tech, talk is cheap. So, I dug in. What is this thing, really?


So, What Exactly is UbiOps?

At its core, UbiOps is an MLOps platform built for model serving and orchestration. Think of it like this: you've built a fantastic custom car engine (your AI model), but you don't want to build the entire car, the factory, and the road network it runs on. UbiOps aims to be that factory and road network, all wrapped up in one neat package.

It's designed to take your AI and ML code (whether it's for some heavy-duty computer vision, a fancy new Generative AI model, or classic data science) and deploy it without you having to become a certified Kubernetes guru. It gives you a single, unified interface to manage all your AI workloads. And the really interesting part? It doesn’t care where you want to run it. Your local machine for testing, a private on-premise server, or a glorious multi-cloud setup spanning AWS, Azure and GCP. It promises to handle it all.

The MLOps Nightmare You Can Finally Wake Up From

Let's be honest for a second. The term 'MLOps' was born out of necessity, because deploying machine learning is a fundamentally different beast from deploying a standard web app. You have to version not just code, but models and data too. You need specialized hardware like GPUs. You need to monitor for things like model drift, not just server uptime.

Most teams either throw a bunch of DevOps engineers at the problem, who then have to learn the quirks of ML, or they ask data scientists to manage infrastructure, which… well, that’s usually a recipe for disaster. I’ve seen it happen. Projects stall, costs spiral, and brilliant models die a slow death in a Git repository.

"With UbiOps we have found a way to deliver computer vision results reliably in real-time and cope with changing workloads by scaling on-demand across GPUs rapidly."

- Alexander Roth, Data Scientist at Bayer Digital Crop Science

This is the pain point UbiOps is built to solve. It bundles all that messy-but-critical MLOps stuff into the platform. We're talking about automatic API endpoint creation, model versioning, auto-scaling algorithms, security, and monitoring dashboards. It’s the boring grunt work that no one wants to do, but everyone desperately needs.
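For a sense of what "you bring the code, the platform brings the API" looks like in practice, here's a minimal sketch of a Python deployment in the shape UbiOps' documentation describes, as I understand it: a `deployment.py` file containing a `Deployment` class whose `__init__(self, base_directory, context)` runs once when an instance starts (load your model here) and whose `request(self, data)` runs on every API call. Exact signatures may differ between UbiOps versions, so check their docs. The tiny linear "model" and the `features`/`score`/`label` field names are hypothetical placeholders.

```python
# deployment.py: minimal sketch of a UbiOps-style Python deployment.
# The Deployment class shape (__init__(base_directory, context) and
# request(data)) follows UbiOps' documented convention as I understand it;
# the "model" below is a hypothetical stand-in, not a trained model.


class Deployment:
    def __init__(self, base_directory, context):
        # Runs once per instance start-up. A real deployment would load
        # model weights here, e.g. from files shipped in base_directory.
        # Here we hard-code a toy linear model instead.
        self.weights = [0.5, -1.0, 2.0]
        self.bias = 0.1

    def request(self, data):
        # Runs once per API call. `data` carries the input fields defined
        # for the deployment; the returned dict holds the output fields.
        features = data["features"]
        score = sum(w * x for w, x in zip(self.weights, features)) + self.bias
        return {"score": score, "label": int(score > 0)}


if __name__ == "__main__":
    # Quick local check before uploading anything.
    model = Deployment(base_directory=".", context={})
    print(model.request({"features": [1.0, 2.0, 3.0]}))
```

Notice that nothing in this file is web-server code. There's no Flask route or FastAPI app; turning `request()` into a live endpoint and running it on scalable instances is the platform's job.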

Key Features That Actually Seem to Matter

A feature list is just a list until you see how it solves a real problem. Here’s my take on what stands out.

One Platform For Every Cloud

This is the big one for me. The idea of running your AI workloads anywhere from a single interface is incredibly powerful. It helps you avoid the dreaded vendor lock-in. Started on AWS but now Azure is offering a better deal on GPU instances? In theory, a platform like UbiOps makes that switch less of a monumental undertaking. This hybrid and multi-cloud capability isn't just a buzzword; it's a strategic advantage that gives companies flexibility and control over their infrastructure and costs. It's a concept many are still warming up to, but as thought leaders have noted, planning for this kind of flexibility is just smart business.

The MLOps Swiss Army Knife

It’s not just about deployment; it's about the entire lifecycle. UbiOps comes with a lot of tools in the box:

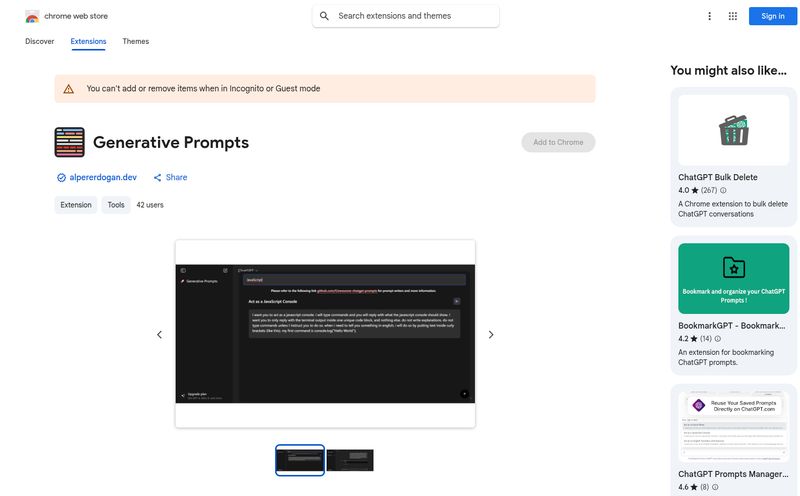

- Instant API Endpoints: You deploy a model, you get a live API. Simple as that. No more messing with Flask or FastAPI boilerplate just to get a proof of concept running.

- Smart Scaling: It can automatically scale your models up or down based on demand, even scaling down to zero so you're not paying for idle resources. This is huge for managing cloud costs.

- Versioning & Auditing: It keeps track of different versions of your models and who did what, when. This is a lifesaver for compliance and debugging when a new model version suddenly starts giving weird results.
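The "scale down to zero" idea is easier to see in code. The function below is a toy, generic illustration of demand-based autoscaling, not UbiOps' actual algorithm (which their materials don't spell out): it asks for just enough instances to cover the queued requests, clamped between a minimum and a maximum. The names `desired_instances`, `per_instance_capacity`, `min_instances` and `max_instances` are hypothetical.

```python
import math


def desired_instances(queued_requests: int, per_instance_capacity: int,
                      min_instances: int = 0, max_instances: int = 5) -> int:
    """Toy demand-based autoscaler (hypothetical, not UbiOps' algorithm).

    Returns enough instances to cover the queue, clamped to
    [min_instances, max_instances]. With min_instances=0 and an empty
    queue, the result is 0: the deployment scales to zero.
    """
    if per_instance_capacity <= 0:
        raise ValueError("per_instance_capacity must be positive")
    needed = math.ceil(queued_requests / per_instance_capacity)
    return max(min_instances, min(max_instances, needed))


if __name__ == "__main__":
    print(desired_instances(0, 10))    # idle: scales to zero
    print(desired_instances(25, 10))   # 25 queued, 10 per instance: 3
    print(desired_instances(500, 10))  # demand spike, capped at max_instances: 5
```

With `min_instances=0`, an idle deployment runs nothing and costs nothing. The usual trade-off is a cold start: the first request after a quiet period waits while an instance spins up, which is why autoscaling setups commonly let you keep a minimum number of instances warm.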

The Good, The Bad, and The "Book a Call"

No tool is perfect, right? From what I can see, UbiOps has a lot going for it, but there are some things to keep in mind.

| The Good Stuff | Things to Consider |
|---|---|
| Drastically simplifies the deployment process. A huge time-saver. | There's probably a learning curve. It's a new abstraction layer to understand. |
| Reduces the need for dedicated, and expensive, DevOps/infra teams. | You're reliant on their platform. If UbiOps goes down, your models go down. |
| Freedom from vendor lock-in with its hybrid/multi-cloud approach. | The pricing isn't public. You have to talk to sales, which can be a hurdle for smaller teams who just want to try things out. |

That last point about pricing is a big one. It's a classic enterprise software move. On one hand, it signals that they're targeting serious, large-scale deployments where custom packages make sense. On the other, it can feel a bit like a black box. You have to invest time in a sales call just to find out if it's even in your budget. I get why they do it, but I always prefer transparent pricing.

Who Is This Really For?

After looking through their materials and the testimonial from a giant like Bayer, it’s pretty clear UbiOps is aiming at medium-to-large enterprises. These are the companies with dedicated data science teams that feel the pain of deployment at scale. They have the budget and the critical need to solve the MLOps problem reliably.

Could a startup use it? Absolutely. Especially a well-funded one that wants to focus its engineering talent on the core product, not on infrastructure. The 'UbiOps Cloud' plan, with its pay-per-use model, seems designed for this exact scenario: start small, and scale as you grow.

The two main packages they offer tell the story:

- UbiOps Cloud: This is their hosted solution. You just sign up and go. It's on-demand, pay-per-use, and comes with their standard support. Perfect for getting started quickly.

- UbiOps Private: This is the big kahuna. They install and manage the platform in your private environment, whether that's your own data center or your private cloud. This comes with a dedicated success manager and custom support, and is clearly for organizations with strict data governance, security, or performance requirements.

So, What's The Verdict?

Look, I get excited about tools that genuinely solve a frustrating problem. And the problem of AI deployment is one of the most frustrating out there. UbiOps appears to be a very strong contender in the MLOps space, taking a practical, all-in-one approach that could save teams an incredible amount of time and headaches.

Is it the right tool for everyone? No. If you're a hobbyist or a team with deep Kubernetes expertise and a love for building everything from scratch, this might feel like overkill. But if you're a business that sees AI as a critical function and you want your expensive data scientists to focus on building models instead of wrangling servers, then UbiOps is definitely worth a look. The lack of clear pricing is a small annoyance, but the potential upside (faster deployments, lower overhead, and happier teams) is massive. If you're drowning in YAML files, booking that call might just be the best thing you do all quarter.

Frequently Asked Questions about UbiOps

What is UbiOps in simple terms?

UbiOps is a platform that helps you run your AI and Machine Learning models in a live environment without having to manage complex server infrastructure like Kubernetes yourself. It packages your model with everything it needs to run and scale automatically.

What main problem does UbiOps solve?

It solves the 'last mile' problem in AI development: the difficult, time-consuming process of deploying, managing, and scaling models in production. It automates much of the MLOps (Machine Learning Operations) work so teams can release AI applications faster.

Can I use UbiOps with different cloud providers like AWS or Google Cloud?

Yes. One of its key features is its hybrid and multi-cloud capability. You can use their hosted cloud solution or deploy UbiOps in your own private cloud environment on AWS, Azure, GCP, or even on-premise servers, all managed from a single interface.

Is UbiOps suitable for small projects or only large enterprises?

It's built for both. The 'UbiOps Cloud' plan is a pay-per-use, on-demand service that's great for smaller teams, startups, or individual projects. The 'UbiOps Private' plan is geared towards larger enterprises with more complex security and infrastructure needs.

How is UbiOps priced?

UbiOps does not list its prices publicly. The 'UbiOps Cloud' plan is described as pay-per-use and on-demand, while the 'UbiOps Private' plan has custom pricing. To get specific numbers, you need to contact their sales team and book a call.

Does UbiOps lock me into its platform?

Not to any single cloud provider; avoiding that kind of lock-in is central to its design. Because it can run on any cloud or on-premise, it gives you the flexibility to move your workloads without being stuck in a single cloud provider's ecosystem. However, you are, of course, reliant on the UbiOps platform itself while you use it.

References and Sources

- UbiOps Official Website: The primary source for product information and features.

- Martin Fowler: On the nuances of software lock-in.