We've all been there. You're knee-deep in a new machine learning project, the code is finally starting to make sense, and you decide to spin up a beefy GPU instance on one of the 'big three' cloud providers. "It'll just be for a few hours," you tell yourself. Famous last words. You get distracted, a weekend happens, and when you finally check your billing dashboard, you're greeted by a number so horrifying it makes you question all your life choices.

The cost of cloud GPU access has been a thorn in the side of developers, researchers, and startups for years. It's the gatekeeper, the tollbooth on the highway to AI innovation. So when a company like Thunder Compute pops up with claims of "on-demand GPUs for up to 80% less than AWS," my inner skeptic and my inner cheapskate both sit up and pay attention. Is this for real, or is it another case of 'too good to be true'?

I decided to take a look under the hood, kick the tires, and see if Thunder Compute is the answer to our cloud-bill prayers or just another flash in the pan.

So, What Exactly is Thunder Compute?

At its heart, Thunder Compute is a stripped-down, no-nonsense cloud provider focused on one thing: giving you raw GPU power without the sticker shock. Think of it less like an all-inclusive, five-star resort (like AWS or GCP, with their labyrinthine menus of a thousand different services) and more like a perfectly located, clean, and efficient Airbnb. It doesn't have a water park or a 24-hour buffet, but it has the one thing you came for: a powerful GPU ready to go at a moment's notice.

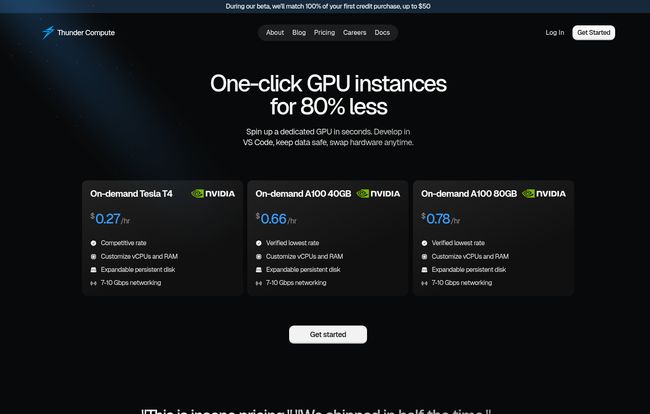

They offer on-demand instances with some of the most sought-after NVIDIA GPUs for AI and ML workloads. The whole platform is designed for developers who want to get in, do their work, and get out without needing a PhD in cloud architecture. No complex VPCs to configure, no confusing IAM roles to assign just to get a server running. It's a breath of fresh air, frankly.

The Main Event: Let's Talk About Pricing

This is the headline act, the reason we're all here. The pricing. Oh, the pricing. It’s the kind of pricing that makes you do a double-take, refresh the page, and then check to make sure you haven't accidentally time-traveled back to 2015.

Here’s a quick breakdown based on their pricing page as of this writing (always check their site for the latest, as things in this space move fast!):

| GPU Instance | Hourly Rate (USD) | Notes |
|---|---|---|
| On-demand Tesla T4 | $0.27/hr | Great for inference and smaller model training. |
| On-demand A100 80GB | $0.78/hr | The workhorse for serious deep learning. |
| On-demand H100 | $1.47/hr | Top-tier performance for the most demanding jobs. |

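To put that in perspective, one of the comparison charts on their site shows an A100 instance at roughly $0.68/hr versus an AWS equivalent at $3.40/hr. That works out to exactly 80% less, which matches their headline claim. (The chart's figure is a bit below the $0.78/hr list price in the table above, so your actual savings will depend on the configuration you pick.) That's not just a little cheaper; that's a different galaxy of affordability. They also offer add-ons like persistent disk storage at $0.15/GB per month and additional vCPUs, so you can spec up your machine as needed.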
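The savings figure is simple arithmetic, and it's worth running the numbers for your own workload. Here's a minimal Python sketch that assumes the A100 and AWS rates from the comparison chart and the $0.15/GB-month storage price quoted above. The function names are purely illustrative and not part of any Thunder Compute tool.

```python
def percent_savings(cheap_rate: float, reference_rate: float) -> float:
    """Return how much cheaper cheap_rate is than reference_rate, as a percentage."""
    return (reference_rate - cheap_rate) / reference_rate * 100


def monthly_storage_cost(gigabytes: float, rate_per_gb_month: float = 0.15) -> float:
    """Persistent disk cost per month, assuming the quoted $0.15/GB-month rate."""
    return gigabytes * rate_per_gb_month


# A100 figures from the comparison chart mentioned above (USD per hour).
thunder_a100 = 0.68
aws_a100 = 3.40

print(f"A100 savings vs AWS: {percent_savings(thunder_a100, aws_a100):.0f}%")
print(f"100 GB persistent disk: ${monthly_storage_cost(100):.2f}/month")
```

Swap in the $0.78/hr list price or your own storage needs to see how the picture changes for your setup.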

This pay-as-you-go model is fantastic for prototyping, running bursty training jobs, or for indie developers who can't commit to long-term contracts. The flip side? You must remember to turn your instances off. I can't stress this enough. An hourly rate of $0.78 is amazing, but left running around the clock it still adds up to nearly $19 a day. Set a reminder. Use their CLI to shut it down. Don't be that person.
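To see how quickly a forgotten instance adds up, here's a small Python sketch using the on-demand rates from the pricing table. The rate dictionary and the `idle_cost` helper are hypothetical illustrations, and the prices are a snapshot that may have changed since this was written.

```python
# On-demand hourly rates from the pricing table above (USD).
# A snapshot only; check the provider's site for current prices.
HOURLY_RATES = {
    "T4": 0.27,
    "A100 80GB": 0.78,
    "H100": 1.47,
}


def idle_cost(gpu: str, hours: float) -> float:
    """Cost of leaving an instance running for `hours`, whether used or not."""
    return HOURLY_RATES[gpu] * hours


# A forgotten weekend: Friday 6 pm to Monday 9 am is 63 hours.
weekend_hours = 63

for gpu in HOURLY_RATES:
    per_day = idle_cost(gpu, 24)
    per_weekend = idle_cost(gpu, weekend_hours)
    print(f"{gpu:>10}: ${per_day:6.2f}/day, ${per_weekend:6.2f} per forgotten weekend")
```

At these rates, a forgotten A100 costs about $18.72 a day, and an H100 left running over a weekend lands at roughly $93.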

More Than Just Cheap Hardware: The Developer Experience

Low prices are great, but if the platform is a nightmare to use, what's the point? This is where I was pleasantly surprised. Thunder Compute clearly has developers in mind.

A CLI That Doesn't Make You Want to Cry

Their command-line interface (CLI) is simple and to the point. Spinning up a new GPU instance is a single command. Changing specs, taking a snapshot, or shutting it down is just as easy. If you've ever wrestled with the hundreds of commands and sub-commands in the AWS or gcloud CLIs, this simplicity feels like a gift from the heavens.

Instance Templates to Get You Started Fast

This is a killer feature. Instead of starting with a bare-bones Linux install and spending your first hour installing CUDA, Python, and all the usual libraries, you can use their instance templates. They have pre-configured setups for popular tools like Ollama (for self-hosting LLMs) and ComfyUI (for Stable Diffusion). This means you go from zero to productive work in minutes, not hours.


Stay in Your Editor, Not Your Terminal

For me, this is a game-changer. Thunder Compute offers a VS Code/Cursor extension that provides real-time file syncing between your local machine and your cloud instance. No more clunky `scp` commands or setting up `rsync` scripts. You just edit your code locally in your favorite editor, and it's instantly there on the powerful cloud machine, ready to run. It's a seamless workflow that genuinely makes remote development feel local.

Alright, What's the Catch? The Not-So-Shiny Parts

No service is perfect, especially not one that's undercutting the market so aggressively. It's important to go in with eyes wide open. After digging around, I found a couple of things you should be aware of.

Location, Location, Location... or Lack Thereof

As of now, it seems their servers are located exclusively in U.S. Central. For developers in North America, this is probably fine. But if you're in Europe or Asia, that latency could be a real pain, especially for interactive work. It also means there's no geographic redundancy. If their data center has a problem, your instances are down. It's a tradeoff for the low cost.

The Learning Curve and the Ticking Clock

While the CLI is simple, the platform assumes a certain level of comfort with the command line. It's not designed for people who need a GUI for every single action. And as I mentioned before—and will mention again because it's that important—the hourly billing model is a double-edged sword. It's your responsibility to manage your resources. There's no AI assistant that's going to pop up and ask, "Hey, did you mean to leave this $1.47/hr H100 instance running while you went on vacation?"

Who Should Use Thunder Compute? (And Who Should Pass?)

So who is the ideal customer for this platform?

In my opinion, Thunder Compute is a perfect fit for:

- Indie Hackers & Startups: When every dollar counts, getting access to A100s and H100s at these prices is a massive competitive advantage.

- Students & Researchers: If you're working on a paper or a project and have a limited budget, this is a fantastic way to access serious compute power.

- ML Prototypers: Anyone who needs to quickly test an idea, train a proof-of-concept model, or run a bunch of experiments without taking out a second mortgage.

However, you might want to stick with the big guys if you are:

- A Large Enterprise: If you need global server locations, complex networking, dedicated support contracts, and a whole slew of compliance certifications (like SOC 2 or HIPAA), a bigger, more established provider is probably a safer bet.

- Someone Who is Allergic to the Terminal: If you live and die by graphical user interfaces, the developer-centric, CLI-first approach here might feel a bit intimidating.

My Final Verdict: A Resounding 'Yes, with an Asterisk'

After spending time with it, I'm genuinely impressed with what Thunder Compute is doing. It's a lean, mean, GPU-serving machine. They've focused on the things that matter to their target audience: raw performance, low prices, and a streamlined developer workflow. They've cut out the cruft and passed the savings on to the user.

The asterisk is simply this: know what you're getting into. This is a specialized tool for people who know what a GPU is and are comfortable managing their own cloud resources. It's not a full-service cloud ecosystem. But for its intended purpose? It's brilliant.

And with a $20 free credit for new users, there’s literally no risk in giving it a spin. Go try it out. Run a model. See how it feels. The worst that can happen is you get a taste of what affordable AI development feels like.

Frequently Asked Questions

- How does Thunder Compute pricing compare to AWS or GCP?

- It's significantly cheaper. On their own site, they show comparisons where an equivalent GPU instance can be up to 80% less expensive than on AWS. The pay-as-you-go hourly rates are some of the lowest I've seen for A100 and H100 GPUs.

- What kind of GPUs can I get on Thunder Compute?

- They focus on high-demand NVIDIA GPUs for AI/ML tasks, including the Tesla T4, the A100 (80GB), and the powerful H100. Availability can change, so check their site for the current lineup.

- Is there a free trial for Thunder Compute?

- Yes, they offer $20 in free credits for new users. At the list prices above, that covers roughly 25 hours on an A100, about 13 hours on an H100, or more than 70 hours on a T4, which is plenty to get a real feel for the platform's performance and workflow.

- Can I use Thunder Compute for something other than AI?

- Absolutely. While it's optimized for AI/ML, a GPU instance is still a powerful virtual machine. You could use it for video rendering, scientific simulations, or any task that can benefit from massive parallel processing power. Just remember it's a Linux environment.

- Is it hard to get started with Thunder Compute?

- If you are comfortable with the command line, it's very easy. Their simple CLI and instance templates make deployment much faster than on more complex platforms. If you've never used a terminal before, there might be a small learning curve.

- What happens if I forget to turn off my instance?

- The meter keeps running! You are billed for the time the instance is active, whether you are using it or not. It's crucial to get in the habit of shutting down your instances using the `thunder stop` command when you're done working.
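The free-credit math from the FAQ is easy to check for yourself. This sketch assumes the $20 new-user credit and the list prices from the pricing table, and it ignores add-ons like persistent storage or extra vCPUs, which would shorten the runway. `hours_of_runway` is just an illustrative helper.

```python
import math

FREE_CREDIT = 20.00  # new-user credit mentioned in the FAQ, in USD

# On-demand list prices from the pricing table (USD per hour); a snapshot only.
HOURLY_RATES = {"T4": 0.27, "A100 80GB": 0.78, "H100": 1.47}


def hours_of_runway(credit: float, hourly_rate: float) -> float:
    """How many billed hours a given credit covers at a given hourly rate."""
    return credit / hourly_rate


for gpu, rate in HOURLY_RATES.items():
    # Round down: a partial hour at the end isn't fully covered.
    hours = math.floor(hours_of_runway(FREE_CREDIT, rate))
    print(f"{gpu}: about {hours} hours on the free credit")
```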

Conclusion

In a world where AI capabilities are exploding, the cost of entry has become a major barrier. We need more services that democratize access to high-performance computing. Thunder Compute is a fantastic step in that direction. It's not trying to be everything to everyone. It's a focused, powerful tool that does its job exceptionally well and at a price that feels fair. For the growing army of developers building the future of AI, a service like this isn't just welcome—it's necessary.