If you're in the AI space, you know the feeling. You're staring at a blinking cursor in a GPT-4 playground or your company's custom LLM interface, trying to summon magic. You tweak a word here, rephrase a sentence there, add some XML tags for good measure. Sometimes it works brilliantly. Other times, the output is... well, garbage. Prompt engineering often feels less like a science and more like a dark art, a weird mix of technical skill, linguistics, and just plain old luck.

So, when I first heard about a tool called Rompt.ai, my ears perked up. The premise was exactly what the industry needs: a platform to bring data-driven rigor to the chaos of prompt creation. No more guessing. No more 'I feel like this prompt is better'. Just cold, hard data through massive A/B testing. It sounded like the holy grail for anyone serious about building reliable AI products.

I was genuinely excited. This was the kind of thing that could separate the professional AI developers from the hobbyists.

What Was Rompt.ai Supposed to Be?

The core idea behind Rompt was simple but powerful. It was designed to help developers and companies fine-tune their AI applications by running extensive A/B testing experiments on their prompts. Instead of manually testing two or three variations, Rompt promised to leverage open-source infrastructure to generate and evaluate tons of prompt variations automatically. This would, in theory, help you uncover the highest-performing prompts with an unbiased rating system.

Think about that for a second. The ability to methodically test hundreds of prompts to find the one that gives you the most accurate, consistent, and cost-effective results. That's not just an improvement; it's a paradigm shift in how we build with LLMs.

The Core Idea: Why A/B Testing for Prompts is a Game-Changer

For years, in the world of web design and CPC campaigns, we've lived and died by A/B testing. Does the red button get more clicks than the blue one? Does this headline have a better conversion rate than that one? We never just guess. We test, measure, and iterate. It’s how we turn marketing from a guessing game into a science.

The Promise of Data-Driven Prompting

Rompt was bringing this same philosophy to the world of generative AI. The quality of an AI's output depends heavily on the quality of the prompt. A tiny change can be the difference between a perfect response and a nonsensical one. By A/B testing prompts, you're not just improving one interaction; you're improving the reliability and quality of your entire application. This means happier users, lower operational costs (fewer retries and wasted API calls), and a better product overall.
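To make the idea concrete, here's a minimal sketch of what a prompt A/B test could look like in plain Python. To be clear, this is not Rompt's implementation (I never got to see one). The `call_model` and `is_correct` callables are hypothetical stand-ins for your LLM client and your grading logic, and the two-proportion z-test is simply a standard statistics choice I'm assuming is reasonable for pass/fail grading.

```python
import math


def count_passes(prompt_template, cases, call_model, is_correct):
    """Run one prompt variant over every test case and count acceptable outputs.

    call_model and is_correct are hypothetical callables supplied by you:
    call_model(prompt) -> str, is_correct(output, expected) -> bool.
    """
    passes = 0
    for case in cases:
        prompt = prompt_template.format(**case["vars"])
        output = call_model(prompt)
        if is_correct(output, case["expected"]):
            passes += 1
    return passes


def two_proportion_z_test(pass_a, n_a, pass_b, n_b):
    """Compare two pass rates; return (rate_a, rate_b, z, two_sided_p_value)."""
    rate_a = pass_a / n_a
    rate_b = pass_b / n_b
    pooled = (pass_a + pass_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        # Both variants passed (or failed) every case: no measurable difference.
        return rate_a, rate_b, 0.0, 1.0
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value under the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return rate_a, rate_b, z, p_value
```

As a usage example, if variant A passes 60 of 100 test cases and variant B passes 75 of 100, `two_proportion_z_test(60, 100, 75, 100)` gives a p-value of roughly 0.02, which suggests B's lead is probably not just luck. With only a handful of test cases the same gap would not be convincing, and that's the whole argument for testing at scale.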

Structure in the Wild West of Prompting

Another thing that got my attention was the promise of version-controlled prompt collections. Right now, how do most teams manage their prompts? A messy Google Doc? A stray text file on a developer's machine? It's chaos. The idea of having a structured, version-controlled repository for prompts—like Git for your AI's brain—is just fantastic. It brings a much-needed layer of professionalism and collaboration to the process.
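What might "Git for your prompts" look like at its simplest? Here's a rough sketch, assuming an in-memory store where each version is identified by a hash of the prompt text. The `PromptStore` class and its method names are my own invention for illustration, not anything Rompt published. In practice, many teams would get the same effect by keeping prompts as files in an ordinary Git repository.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class PromptStore:
    """Hypothetical in-memory prompt collection with a linear version history."""

    # Maps prompt name -> list of (version_id, text), oldest first.
    _versions: dict = field(default_factory=dict)

    def commit(self, name: str, text: str) -> str:
        """Save a new version and return its content-derived id."""
        history = self._versions.setdefault(name, [])
        version_id = hashlib.sha256(text.encode("utf-8")).hexdigest()[:12]
        # Skip the write if nothing changed since the latest version.
        if not history or history[-1][0] != version_id:
            history.append((version_id, text))
        return version_id

    def get(self, name: str, version_id=None) -> str:
        """Return the latest text, or the text of a specific version."""
        history = self._versions[name]
        if version_id is None:
            return history[-1][1]
        for vid, text in history:
            if vid == version_id:
                return text
        raise KeyError(f"{name} has no version {version_id}")

    def history(self, name: str) -> list:
        """Return version ids for a prompt, oldest first."""
        return [vid for vid, _ in self._versions[name]]

    def revert(self, name: str, version_id: str) -> str:
        """Make an old version current again by re-committing its text."""
        return self.commit(name, self.get(name, version_id))
```

Reverting appends the old text as a new entry instead of deleting anything, so the history still shows that a change was tried and rolled back.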

So, What Happened? The Elephant in the Room

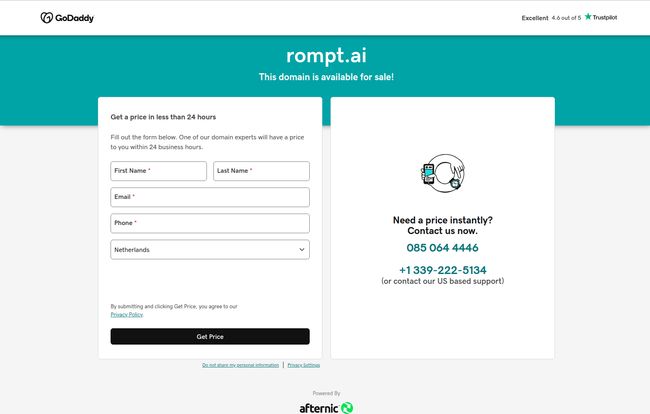

Alright, I've hyped it up enough. I was ready to dive in, maybe even write a glowing review. I typed `rompt.ai` into my browser, credit card metaphorically in hand, and was greeted with... this.

Yep. The domain is for sale. Hosted on GoDaddy, powered by Afternic. It's the digital equivalent of showing up to a grand opening and finding a 'For Lease' sign on the door. Rompt.ai appears to be a digital ghost. A great idea that, for whatever reason, never made it or didn't last.

And honestly, while I'm disappointed, I'm not entirely surprised. It’s a stark reminder of the brutal reality of the current AI gold rush. For every success story, there are dozens of projects that burn bright and fade fast. A `.ai` domain doesn't guarantee a sustainable business.

A Look Under the Hood at Rompt's Promised Features

Even though the tool is gone, the idea is still worth examining. Let's look at the features it was supposed to have, as they form a blueprint for what a great prompt optimization tool should do.

| Feature | What It Means for a Developer |
|---|---|
| A/B testing for prompts | The core engine. The ability to systematically compare different phrasings, structures, and examples to see what works best. |
| Open-source infrastructure | This suggests it was built for scalability and customization. Instead of being a closed black box, developers could potentially tweak and extend it. |
| Version-controlled prompt collections | Treating your prompts like code. Track changes, revert to previous versions, and collaborate without overwriting each other's work. A godsend for teams. |
| Native templating language | A way to declare variables and create dynamic prompts easily. Essential for creating reusable prompt templates for different scenarios. |
| Output database for scoring | This is critical. It’s not enough to generate outputs; you need to store them, rate them (either by humans or other AIs), and analyze the results. |
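The last two rows of that table are easy to picture with a small sketch. I don't know what Rompt's templating syntax or storage layer actually looked like, so this uses Python's built-in `string.Template` as a stand-in for the templating language and an SQLite table as the output database. The table name, column names, and helper functions are all assumptions made up for illustration.

```python
import sqlite3
from string import Template


def render(template_text, **variables):
    """Fill $placeholders in a prompt template; raises KeyError if one is missing."""
    return Template(template_text).substitute(**variables)


def open_db(path=":memory:"):
    """Open (or create) a database with a hypothetical 'outputs' table."""
    conn = sqlite3.connect(path)
    conn.execute(
        """CREATE TABLE IF NOT EXISTS outputs (
               id INTEGER PRIMARY KEY,
               variant TEXT NOT NULL,
               prompt TEXT NOT NULL,
               output TEXT NOT NULL,
               score REAL
           )"""
    )
    return conn


def record_output(conn, variant, prompt, output, score=None):
    """Store one model output; score can be left empty and rated later."""
    cur = conn.execute(
        "INSERT INTO outputs (variant, prompt, output, score) VALUES (?, ?, ?, ?)",
        (variant, prompt, output, score),
    )
    conn.commit()
    return cur.lastrowid


def mean_scores(conn):
    """Return {variant: average score}, ignoring outputs that are not rated yet."""
    rows = conn.execute(
        "SELECT variant, AVG(score) FROM outputs "
        "WHERE score IS NOT NULL GROUP BY variant ORDER BY variant"
    ).fetchall()
    return dict(rows)
```

For example, `render("Summarize this $doc_type in $max_words words.", doc_type="email", max_words=20)` produces a ready-to-send prompt, and once a few outputs per variant have been recorded and rated, `mean_scores(conn)` gives you a per-variant leaderboard. Keeping the raw outputs next to the scores matters because you can re-rate them later with a different rubric without paying for the API calls again.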

The Hurdles: Why Building a Tool Like This Is Hard

Seeing that 'For Sale' page makes you wonder about the 'why'. The likely drawbacks of a tool like this give us some clues. Building something like Rompt is not a walk in the park.

The Technical Lift

First off, it requires some serious technical chops. This isn't just a simple SaaS wrapper around an API. You're building a complex testing framework, a version control system, and a data analysis platform all in one. The onboarding alone could be a challenge, as users would need a certain level of technical expertise to integrate it into their workflows.

The Resource Drain

More importantly, think of the computational resources. Running A/B tests on this scale means making a lot of LLM API calls, one for every combination of prompt variation and test input. That can get expensive, fast. A single test run with hundreds of variations could cost a significant amount of money in API credits. The business model for such a tool is tricky; you have to provide more value than the cost of the API calls you're generating.
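A quick back-of-the-envelope calculation shows how fast this adds up. The sketch below is just multiplication, and the per-token prices in the example are hypothetical placeholders, not any provider's real rates, so plug in current pricing for the model you actually use.

```python
def estimate_test_cost(
    n_variants,
    n_test_inputs,
    prompt_tokens,
    completion_tokens,
    usd_per_1k_prompt,
    usd_per_1k_completion,
    samples_per_pair=1,
):
    """Estimate API calls and dollar cost for one prompt A/B test run.

    Token counts are per-call averages; prices are per 1,000 tokens.
    """
    calls = n_variants * n_test_inputs * samples_per_pair
    prompt_cost = calls * prompt_tokens / 1000 * usd_per_1k_prompt
    completion_cost = calls * completion_tokens / 1000 * usd_per_1k_completion
    return calls, prompt_cost + completion_cost


# Hypothetical example: 200 variations x 50 test inputs, with made-up prices
# of $0.01 per 1k prompt tokens and $0.03 per 1k completion tokens.
calls, cost = estimate_test_cost(200, 50, 500, 300, 0.01, 0.03)
print(f"{calls} calls, about ${cost:.2f}")
```

With those assumed numbers, a single run is 10,000 API calls and about $140, and that's before you re-run the test after tweaking the variations or sample each prompt more than once to average out randomness.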

"The effectiveness of any prompt testing tool ultimately depends on the quality of the initial prompts and variations."

This is also a key weakness. The tool can't create genius prompts from scratch. It relies on the user to provide good starting points. If your initial ideas are flawed, you're just optimizing for the 'least bad' option, not finding the best one.

Are There Alternatives to Rompt.ai?

So, Rompt.ai is a ghost, but the problem it tried to solve is very real. What are developers supposed to do now? Luckily, the space isn't empty. There are other options, though they might require a bit more manual setup:

- Frameworks like LangChain and LlamaIndex: These powerful open-source libraries have modules for evaluating and testing prompts. It's more of a DIY approach, but it gives you immense flexibility. You can build your own Rompt-like system using their building blocks.

- PromptPerfect: This is a commercial tool that focuses on optimizing your prompts. It's less about A/B testing and more about automatically refining a single prompt to make it better, but it tackles a similar problem.

- Vercel AI Playground: Vercel's playground allows you to compare the outputs of different models and prompts side-by-side. It's a manual process but great for quick, qualitative comparisons.

Lessons from a Digital Ghost

The story of Rompt.ai, even in its absence, teaches us a few things. It validates that prompt optimization and management are a massive pain point for developers. The features it promised are a perfect roadmap for what a mature MLOps/LLMOps toolchain should include.

It also serves as a cautionary tale. Having a great idea in the AI space is one thing; executing it, finding a sustainable business model, and overcoming the sheer technical and financial hurdles is another entirely. I genuinely hope the team behind the idea pops up somewhere else. The industry needs their thinking.

For now, we're still in the wild west of prompt engineering. But tools and ideas like Rompt.ai are the signposts pointing toward a more civilized, data-driven future. I'll just be checking if the domain is for sale before I get my hopes up next time.

Frequently Asked Questions about Prompt A/B Testing

- What is prompt A/B testing?

- Prompt A/B testing is the process of comparing two or more versions of a prompt to see which one performs better for a given task. By sending each version to the AI model with the same inputs and evaluating the outputs, you can determine which prompt is more effective at generating the desired response.

- Why is version control for prompts important?

- Version control (like Git for code) allows teams to track changes to prompts over time, collaborate without conflicts, and revert to previous versions if a change proves to be ineffective. It brings order and accountability to the prompt management process, which is often chaotic.

- Was Rompt.ai an open-source tool?

- Based on the available information, Rompt.ai intended to use open-source infrastructure for scalability and customization, but it's unclear if the entire platform itself was going to be open-source or a commercial product built on open-source components. Given it was a .ai domain for a company, it was likely a commercial SaaS product.

- How much does prompt A/B testing cost?

- The cost is directly tied to the number of API calls you make to the language model. A large-scale test with hundreds of variations could cost anywhere from a few dollars to hundreds of dollars, depending on the model used (e.g., GPT-4 is more expensive than GPT-3.5-Turbo) and the length of the prompts and completions.

- Do I need a special tool for prompt testing?

- Not necessarily. You can start by manually testing variations and logging results in a spreadsheet. For more systematic testing, you can write scripts or use frameworks like LangChain. Dedicated tools like the one Rompt.ai aimed to be are designed to streamline and scale this process, saving you time and providing better analytics.

Reference and Sources

- Afternic Domain Marketplace - Where the rompt.ai domain is currently listed.

- LangChain Evaluation Docs - For a DIY approach to prompt testing and evaluation.

- PromptPerfect - An alternative tool for prompt optimization.