I'm going to be straight with you. For the past year, I’ve had a low-grade, persistent anxiety every time I upload a document to a cloud-based AI service. As an SEO guy, I deal with client strategies, proprietary data, and content plans that are, well, not for public consumption. The idea of feeding that into a mysterious, massive AI model owned by a tech giant? It gives me the heebie-jeebies.

We've all been there, right? You want the power of a large language model to summarize, analyze, or answer questions about your own stuff, but you don't want to hand over the keys to your digital kingdom. For ages, the answer was just… don’t. Or risk it.

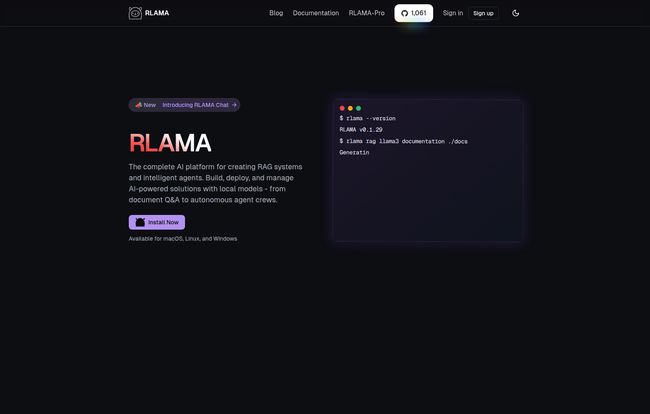

But the ground is shifting. The rise of powerful local AI models, run entirely on our own machines, has been a game-changer. And that’s where I stumbled upon a tool that genuinely got me excited: RLAMA. It’s a bit of a mouthful—Retrieval-Augmented Local Assistant Model Agent—but what it does is beautifully simple and incredibly powerful.

What Exactly is RLAMA, Anyway?

Okay, let's cut through the jargon. You’ve probably heard of RAG, or Retrieval-Augmented Generation. It’s a fancy term for a simple concept. Instead of asking an AI a question and letting it pull an answer from its vast, general training data (the entire internet, basically), you first give it a specific set of documents to read. A curated library. When you ask a question, the system retrieves the passages most relevant to it and hands them to the model, which then answers from that material rather than from memory.

Think of it like this: RAG is the difference between asking a random person on the street for your company's Q3 sales figures versus asking your head of finance who has the P&L report right in front of them.

RLAMA is the tool that builds that curated library and connects it to a local AI model running on your computer, like one you’d run with Ollama. It acts as the bridge, turning your collection of PDFs, text files, and even entire websites into a smart, private, searchable knowledge base. The best part? As long as you pair it with a local model, it’s all happening on your own hardware. Nothing gets sent to an external server. Your data stays your data.


This, for me, is the whole point. It’s about taking back control.
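If the RAG idea still feels abstract, here is a minimal sketch of the retrieve-then-ask loop in Python. To be clear, this is my own toy illustration, not RLAMA's code: real tools rank passages with embeddings, while this version uses plain word overlap as a stand-in, and the function names (`retrieve`, `build_prompt`) are made up for the example.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def score(question: str, passage: str) -> int:
    """Count how many question words also appear in the passage.

    Assumption: word overlap stands in for the vector similarity
    a real RAG tool would compute from embeddings.
    """
    q = Counter(tokenize(question))
    p = Counter(tokenize(passage))
    return sum(min(q[word], p[word]) for word in q)


def retrieve(question: str, passages: list[str], top_k: int = 2) -> list[str]:
    """Return the top_k passages with the highest overlap score."""
    ranked = sorted(passages, key=lambda p: score(question, p), reverse=True)
    return ranked[:top_k]


def build_prompt(question: str, context: list[str]) -> str:
    """Put the retrieved passages in front of the question."""
    joined = "\n".join(f"- {passage}" for passage in context)
    return (
        "Answer using only the context below.\n"
        f"Context:\n{joined}\n"
        f"Question: {question}"
    )
```

The prompt that comes out of `build_prompt` is what gets sent to the model. The model never sees the rest of your library, only the handful of passages that were retrieved for that question.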

My First Impressions and Getting Started

I’m comfortable in a command line, so I went straight for the free, open-source CLI tool. Getting it set up was pretty straightforward for anyone who has used a terminal before. It’s available for macOS, Linux, and Windows, which is a huge plus.

The first thing I did was feed it a folder of my old blog posts. I ran a simple command, watched it process the files, and then asked it a question: “What were my main points about the 2022 Google Helpful Content Update?”

Seconds later, it spat out a perfectly coherent summary, citing specifics from articles I’d completely forgotten I’d written. And it did it all without an internet connection. It’s a little bit of magic, honestly. A private brain for my own content.

The Features That Genuinely Impressed Me

Look, a lot of tools can do basic RAG. But RLAMA has a few tricks up its sleeve that show its developers really get the practical applications.

Beyond Simple PDFs: Web Crawling and Directory Watching

This is where my SEO brain started buzzing. RLAMA has a built-in web crawler. You can literally point it at a URL—your company's support documentation, a competitor's blog, a technical resource—and it will scrape the content to build a RAG system from it. The potential for competitive analysis and content research is… immense.
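I haven't read RLAMA's crawler source, so take this as a rough sketch of what any crawler has to do with a page before it can feed a RAG: strip out scripts and styling, keep the visible text, and collect the links to follow next. It uses only Python's standard library, and the `TextExtractor` class and `extract` helper are names I invented for the illustration.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collect visible text and link targets from one HTML page."""

    SKIP = {"script", "style"}  # content that is never useful to index

    def __init__(self) -> None:
        super().__init__()
        self.parts: list[str] = []
        self.links: list[str] = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1
        elif tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Keep text only when we are outside <script> and <style>.
        if self._skip_depth == 0 and data.strip():
            self.parts.append(data.strip())


def extract(html: str) -> tuple[str, list[str]]:
    """Return (visible text, links found) for an HTML string."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.parts), parser.links
```

A real crawler would fetch each page, run something like `extract` on it, and queue the returned links that stay on the same site.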

Then there's directory watching. You can tell RLAMA to watch a specific folder on your computer. Anytime you add or change a file in that folder, it automatically updates the knowledge base. This is perfect for creating an evergreen internal wiki or a knowledge base for a project with constantly changing documents. Set it and forget it. I love that.
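For the curious, folder watching can be as simple as comparing two snapshots of file modification times. The sketch below shows that polling approach in Python. It is my assumption of one reasonable way to do it, not a description of how RLAMA implements the feature, and `snapshot` and `diff` are hypothetical helper names.

```python
import os


def snapshot(folder: str) -> dict[str, float]:
    """Map every file path under folder to its last-modified time."""
    state: dict[str, float] = {}
    for root, _dirs, files in os.walk(folder):
        for name in files:
            path = os.path.join(root, name)
            state[path] = os.path.getmtime(path)
    return state


def diff(
    old: dict[str, float], new: dict[str, float]
) -> tuple[list[str], list[str], list[str]]:
    """Compare two snapshots and return (added, changed, removed) paths."""
    added = sorted(p for p in new if p not in old)
    removed = sorted(p for p in old if p not in new)
    changed = sorted(p for p in new if p in old and new[p] != old[p])
    return added, changed, removed
```

A watcher would take a snapshot, sleep for an interval, take another, and re-index whatever `diff` reports as added or changed.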

The Power of Local Models and Open Source Freedom

RLAMA plays beautifully with Ollama, which means you have access to the thousands of open-source models on Hugging Face. Want a model that’s better at creative writing? There’s one for that. Need one that excels at code? You got it. You’re not locked into a single ecosystem like you are with the big cloud providers. It also supports OpenAI models if you need that raw power, but the local-first approach is clearly its heart and soul.
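To show what "local" means in practice, here is a small Python sketch that talks to Ollama directly over its HTTP API on `localhost`. This is not how you use RLAMA itself, just an illustration of the layer underneath. It assumes Ollama is running on its default port and that the model you name has already been pulled; `llama3` in the usage note is only an example.

```python
import json
import urllib.request

# Ollama's default local endpoint. The request never leaves your machine.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming generate request for a local Ollama server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(model: str, prompt: str) -> str:
    """Send the prompt to the local model and return its reply text."""
    with urllib.request.urlopen(build_request(model, prompt)) as resp:
        return json.loads(resp.read())["response"]
```

Swapping models is just a matter of changing the `model` string, for example `ask("llama3", "Summarize my notes")`, which is exactly the kind of freedom I mean.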

AI Agents and Crews are Not Just Buzzwords Here

This is a more advanced feature, but it shows the project's ambition. You can set up “AI Agents” with specific roles and have them work together in a “Crew” to solve complex tasks. Imagine having a “Research Agent” that pulls data from your documents and a “Writer Agent” that drafts a report based on the findings. We're moving beyond simple Q&A into automated workflows, which is seriously cool.
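Here is a stripped-down Python sketch of the crew idea: each agent has a role and a job, and the output of one becomes the input of the next. Everything in it is hypothetical. The `Agent` class, `run_crew`, and the two stub functions are mine, and plain functions stand in for the model calls a real agent would make, so don't read it as RLAMA's actual agent API.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Agent:
    role: str
    work: Callable[[str], str]  # stand-in for a call to a language model


def run_crew(agents: list[Agent], task: str) -> str:
    """Run the agents in order, feeding each one the previous result."""
    result = task
    for agent in agents:
        result = agent.work(result)
    return result


# Toy "documents" for the research step (made up for this example).
NOTES = [
    "Q3 traffic rose after the site migration.",
    "Bounce rate fell on mobile.",
]


def research(task: str) -> str:
    """Return the notes that share at least one word with the task."""
    words = set(task.lower().split())
    hits = [note for note in NOTES if words & set(note.lower().split())]
    return "\n".join(hits)


def write(findings: str) -> str:
    """Format the findings as a short bulleted report."""
    return "Report:\n" + "\n".join(f"- {line}" for line in findings.splitlines())
```

Calling `run_crew([Agent("Research Agent", research), Agent("Writer Agent", write)], "traffic")` passes the task through both agents in turn and returns the finished report.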

The Two Flavors of RLAMA: CLI vs. The Visual Builder

This is where the project gets really smart. The developers understand that not everyone wants to live in a terminal. So, they offer two paths.

The free, open-source CLI tool is the core. It’s powerful, flexible, and perfect for developers or tech-savvy users. It’s the heart of RLAMA.

But then there's RLAMA Unlimited. This is a paid subscription that gives you access to a visual, no-code interface. You can do all the cool stuff—create RAGs, manage documents, ask questions—through a clean web UI. It lowers the barrier to entry to almost zero. I tinkered with it, and it makes the whole process ridiculously simple. For a team or an individual who just wants the benefit without the technical setup, this is the way to go.

And the pricing? It's more than fair. It feels like they're trying to build a sustainable open-source project, not get rich quick.

| Plan | Price | Best For |
|---|---|---|
| RLAMA CLI | Free & Open Source | Developers and users comfortable with the command line. |
| RLAMA Unlimited (Monthly) | $4.49 / month | Individuals or teams who want a visual interface and ongoing use. |
| RLAMA Unlimited (One-time RAG) | $0.50 single payment | Trying out the visual builder for a single project. |

Let's Be Honest: The Not-So-Perfect Parts

No tool is perfect, especially not in the fast-moving world of AI. Blindly praising something helps no one. Based on my experience and what I've seen in the community, there are a few bumps in the road.

The most obvious one is that the free tool requires command-line knowledge. That's not a flaw; it's a feature, but it will gatekeep some users. For them, the subscription is the only real option.

I've also seen reports of occasional hiccups. Sometimes Ollama can be a bit finicky to get connected, or the text extraction from a complex PDF might not be perfect. And like any RAG system, sometimes it struggles to find the most relevant snippet of information if your documents are poorly structured. These feel less like fundamental flaws in RLAMA and more like the general challenges of the entire RAG space right now. It's a young technology, and things are improving constantly.

Who Should Be Using RLAMA Right Now?

So, is RLAMA for you? I think it’s a fantastic fit for a few types of people:

- The Privacy-Focused Professional: If you handle sensitive information—legal documents, medical records, client data—and want to use AI without privacy nightmares, this is your tool.

- The AI Developer & Hobbyist: If you love tinkering with local models and want a solid framework to build custom AI applications, the free CLI is a dream.

- The Small Business Owner: Need a quick, cheap, and effective internal knowledge base for your team? The RLAMA Unlimited subscription is probably one of the most cost-effective solutions you'll find.

Tools like RLAMA aren't just another piece of software. They represent a shift towards a more democratic, private, and user-controlled AI future. It’s about owning your data and your tools. It’s not perfect, but it’s a massive step in the right direction, and for that, it has my full support.

Your RLAMA Questions Answered

- Is RLAMA completely free?

- The core command-line (CLI) tool is 100% free and open source. If you want the user-friendly visual interface, you'll need the paid RLAMA Unlimited plan: $4.49 per month, or a one-time $0.50 for a single RAG.

- Does my data stay on my computer when using RLAMA?

- Yes, absolutely. When using RLAMA with a local model provider like Ollama, all processing and data storage happens on your machine. Nothing is sent to external servers. This is its biggest selling point.

- What kind of AI models can I use with RLAMA?

- It integrates directly with local models through Ollama, giving you access to thousands of open-source models from places like Hugging Face. It also has support for connecting to OpenAI's models if you need that option.

- Do I need to be a programmer to use RLAMA?

- To use the free CLI tool, some familiarity with the command line is necessary. However, the paid 'RLAMA Unlimited' plan provides a no-code visual builder that anyone can use, no programming required.

- What if my RAG isn't giving good answers?

- This can happen with any RAG system. It might be due to issues with how text is extracted from your documents (especially complex PDFs) or the structure of the information itself. Cleaning up your source documents or trying different chunking strategies within RLAMA can often improve results.
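Since "chunking strategy" is doing a lot of work in that last answer, here is a minimal Python sketch of one common approach: fixed-size chunks that overlap, so a sentence cut at a boundary still appears whole in the neighboring chunk. The `chunk_words` function and its default sizes are my own illustration, not RLAMA's settings.

```python
def chunk_words(text: str, size: int = 200, overlap: int = 40) -> list[str]:
    """Split text into chunks of `size` words, sharing `overlap` words
    between consecutive chunks."""
    if size <= 0 or not 0 <= overlap < size:
        raise ValueError("need size > 0 and 0 <= overlap < size")
    words = text.split()
    step = size - overlap
    chunks: list[str] = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks
```

Smaller chunks tend to make retrieval more precise but give the model less surrounding context, so it is worth experimenting with both numbers on your own documents.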