Every now and then in the SEO and tech world, you stumble upon a ghost. A whisper of a tool, a fragment of a service, a brilliant idea that seems to exist only in cached descriptions and forum mentions. That was my week. I was digging around for new developer tools in the AI space—specifically, solutions for managing data persistence with Large Language Models—when I came across a name: ReLLM.

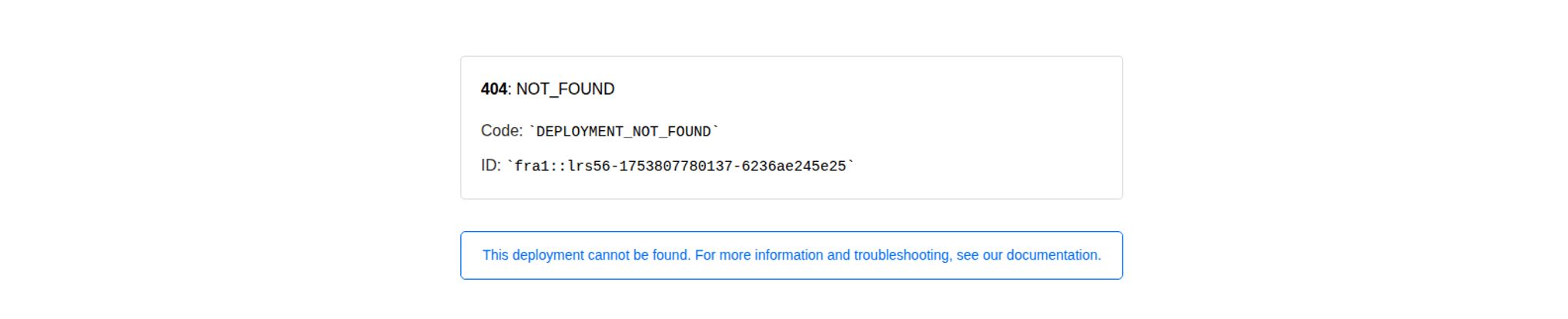

The promise was immediately compelling: secure, permission-gated, long-term context for models like ChatGPT. My brain started buzzing with the possibilities. But when I tried to find the homepage, I was greeted by this:


A cold, sterile `404 NOT_FOUND` error. A digital dead end. But the idea... the idea was too good to let go. So, I did what any self-respecting digital detective would do: I started digging into the breadcrumbs it left behind. What was ReLLM supposed to be, and why is a tool like this so desperately needed right now?

What Was ReLLM Supposed to Be?

Piecing together the information, ReLLM (presumably short for Relational LLM or Reliable LLM) wasn't another AI chatbot. It was infrastructure. It was the plumbing. Specifically, it was designed to solve one of the biggest headaches in building real-world AI applications: memory.

Think of most LLMs like a person with severe short-term memory loss. You can have a great conversation, but if you walk away and come back five minutes later, they might not remember who you are or what you discussed. Two things cause this: the model keeps no state between sessions, and within a session it can only work with what fits in its 'context window', the limited amount of text it can hold in working memory at any one time. For building a simple one-off tool, that's fine. For building a sophisticated, user-aware application, it's a disaster.
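
To see why the context window bites, here's a minimal Python sketch of what many apps do when a conversation gets too long: keep the newest messages and silently drop the rest. The names `fit_to_window` and `rough_token_count` are my own, and counting words is only a crude stand-in for a real tokenizer.

```python
from typing import Dict, List

Message = Dict[str, str]  # {"role": ..., "content": ...}


def rough_token_count(text: str) -> int:
    """Crude stand-in for a tokenizer: one 'token' per whitespace-separated word."""
    return len(text.split())


def fit_to_window(history: List[Message], max_tokens: int) -> List[Message]:
    """Keep the most recent messages whose combined size fits the budget."""
    kept: List[Message] = []
    used = 0
    for message in reversed(history):  # walk from newest to oldest
        cost = rough_token_count(message["content"])
        if used + cost > max_tokens:
            break  # this message and everything older is dropped
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


history = [
    {"role": "user", "content": "My name is Dana and I run the billing team"},
    {"role": "assistant", "content": "Nice to meet you Dana"},
    {"role": "user", "content": "What is my name"},
]
window = fit_to_window(history, max_tokens=10)
for message in window:
    print(message["role"], ":", message["content"])
```

With a budget of 10 "tokens", the opening message where the user introduces herself no longer fits, so the model is asked a question it has lost the answer to. That is the goldfish problem in miniature.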

ReLLM's pitch was simple but profound: they would give your LLM a secure, long-term memory. They'd store the context of your user interactions, encrypt it, and—this is the crucial part—manage permissions. The LLM would only ever be given the context that the specific user it was talking to was allowed to see. Simple, right? Genius, actually.

Why Secure, Permission-Based Context Is A Game-Changer

If you're a developer or a product manager working with AI, you've probably had a few sleepless nights over this already. The problem ReLLM aimed to solve isn't just a technical inconvenience; it's a foundational issue of security and user trust.

The Goldfish Memory Problem in LLMs

Without a tool for long-term context, every user interaction starts from scratch. Imagine a customer support bot that doesn't remember your previous three support tickets. Or an internal knowledge base AI that needs your department's function re-explained every single time you ask a question. It's inefficient and frustrating. Developers usually end up building their own memory layer from a vector database and retrieval-augmented generation (RAG): past content is stored as embeddings, and the most relevant pieces are fetched and added to the prompt on each request. It works, but it's a lot of moving parts. ReLLM promised to simplify this integration, acting as a secure middleman.
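
For a feel of what that do-it-yourself retrieval step involves, here's a toy sketch in plain Python. It is an illustration under heavy assumptions: `embed` just counts words where a real system would call an embedding model, the in-memory list stands in for a vector database, and the support tickets are invented.

```python
import math
from collections import Counter
from typing import List, Tuple


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (a real system would use an embedding model)."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[word] * b[word] for word in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query: str, documents: List[str], top_k: int = 1) -> List[Tuple[float, str]]:
    """Score every document against the query and return the best matches."""
    query_vec = embed(query)
    scored = [(cosine(query_vec, embed(doc)), doc) for doc in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:top_k]


def build_prompt(query: str, documents: List[str], top_k: int = 1) -> str:
    """Prepend the best-matching documents to the question before it goes to the model."""
    hits = retrieve(query, documents, top_k)
    context = "\n".join(doc for _, doc in hits)
    return f"Context:\n{context}\n\nQuestion: {query}"


tickets = [
    "ticket 101 printer offline in the finance office",
    "ticket 102 password reset for the sales portal",
    "ticket 103 invoice export fails with a timeout",
]
prompt = build_prompt("why does invoice export keep failing", tickets)
print(prompt)
```

Even this toy version needs an embedding step, a similarity measure, a ranking step, and prompt assembly. Notice what it doesn't have: any notion of who is asking.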

The Security Nightmare of Shared Context

This is the big one. Let's say you build an AI assistant for a team. User A uploads a sensitive financial document for analysis. A little later, User B asks a general question about company finances. If your context management is sloppy, the AI could inadvertently pull information from User A's document to answer User B's query. It’s a data breach waiting to happen.

"We store context encrypted, and with user permissions baked in. The LLM is only provided context that the user talking to the LLM is allowed to see."

That quote, pulled from one of the few descriptions of ReLLM I could find, is the holy grail. It means you could build multi-tenant applications without the constant fear of data cross-contamination. It’s the difference between a secure, professional-grade tool and a ticking time bomb.
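
To make the idea concrete, here's a minimal sketch of permission-gated context in Python. This is my own illustration, not ReLLM's actual API (which I never got to see): `ContextStore`, `ContextItem`, and `visible_to` are hypothetical names, the documents are made up, and a real system would also encrypt what it stores.

```python
from dataclasses import dataclass, field
from typing import FrozenSet, Iterable, List


@dataclass(frozen=True)
class ContextItem:
    text: str
    allowed_users: FrozenSet[str]  # who may have this text shown to the model


@dataclass
class ContextStore:
    items: List[ContextItem] = field(default_factory=list)

    def add(self, text: str, allowed_users: Iterable[str]) -> None:
        self.items.append(ContextItem(text, frozenset(allowed_users)))

    def visible_to(self, user_id: str) -> List[str]:
        """Return only the items this user may see; unknown users get nothing."""
        return [item.text for item in self.items if user_id in item.allowed_users]


store = ContextStore()
# User A's sensitive upload is visible to User A only (invented example data).
store.add("Q3 forecast: revenue down 12% (confidential)", allowed_users={"user_a"})
# General guidance is shared with both users.
store.add("Expense reports are due on the 5th of each month", allowed_users={"user_a", "user_b"})

context_for_b = store.visible_to("user_b")
print(context_for_b)
```

The design choice that matters is where the filter sits: the check happens before anything reaches the prompt, so User B's question about company finances can never be answered from User A's document, no matter how relevant a retrieval step thinks it is.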

The Promised Features of ReLLM

Based on the digital scraps, the platform was built on a few core pillars. It wasn't just about storing data; it was about doing it intelligently and securely. The system was designed to handle all the tricky ChatGPT communication, essentially acting as a secure proxy. It would also manage the entire chat history, taking that burden off the developer. But two features were clearly its main selling points. The first was secure context storage using proper encryption, which is non-negotiable in a post-GDPR world: your users' data needs to be treated like gold. The second, and this is the really clever bit, was granular user permission management. This feature alone would have set it apart, allowing developers to define exactly who sees what, turning the AI from a shared brain into a collection of private, secure minds.
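
Here's roughly what the "proxy that also manages chat history" part could look like, sketched in Python. Everything in it is an assumption on my part: `ChatProxy`, `send`, and `history_for` are invented names, and `fake_model` stands in for a real call to a model API so the example runs on its own.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]
LLMCall = Callable[[List[Message]], str]


class ChatProxy:
    """Hypothetical middle layer: keeps per-user history and forwards it to a model."""

    def __init__(self, call_model: LLMCall) -> None:
        self._call_model = call_model
        self._histories: Dict[str, List[Message]] = {}

    def send(self, user_id: str, text: str) -> str:
        history = self._histories.setdefault(user_id, [])
        history.append({"role": "user", "content": text})
        # Pass a copy so the model callable cannot mutate the stored history.
        reply = self._call_model(list(history))
        history.append({"role": "assistant", "content": reply})
        return reply

    def history_for(self, user_id: str) -> List[Message]:
        """Return a copy of one user's history; other users' histories stay separate."""
        return list(self._histories.get(user_id, []))


def fake_model(messages: List[Message]) -> str:
    """Stand-in for a real model call: reports how many user turns it was shown."""
    user_turns = [m for m in messages if m["role"] == "user"]
    return f"I have seen {len(user_turns)} message(s) from you"


proxy = ChatProxy(fake_model)
proxy.send("alice", "Hello")
second_reply = proxy.send("alice", "Do you remember me?")
bob_reply = proxy.send("bob", "Hi")
print(second_reply)
print(bob_reply)
```

The application only ever calls `send`; the proxy decides what history the model sees. Alice's second message arrives with her first one attached, while Bob starts with a clean slate.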

So, Where Did ReLLM Go?

This brings us back to the 404 page, which carried the error code `DEPLOYMENT_NOT_FOUND`. This isn't just a missing page; it often means the entire application the domain points to has been taken down, or was never deployed correctly, on its hosting service (in this case, the ID suggests it might have been on a platform like Vercel).

What does this mean? It could be anything.

- An Abandoned Project: Maybe it was a side project that a developer or small team couldn't get off the ground. It happens.

- An Acqui-hire: It's possible a larger company saw the genius of the idea and bought the company/team, absorbing the technology into their own platform and shutting down the original branding. This happens all the time in tech.

- A Pivot: The team might have shifted their focus to a different problem, leaving the original concept behind.

Whatever the reason, its disappearance leaves a hole in the market for a simple, elegant solution to a very complex problem.

The Good, The Bad, and The Missing

Even as a ghost, ReLLM gives us a good blueprint for what to look for in an AI infrastructure tool. Here's my take on its theoretical pros and cons.

| What We Liked (In Theory) | Potential Sticking Points |
|---|---|
| Iron-clad context security. Encryption and permissions are everything. | No public pricing. The lack of transparency on cost is always a red flag. |
| Simplified LLM integration. Taking the pain out of managing chat history and context is a huge win for developers. | It's not a standalone product. It requires integration into your own application, meaning it's a developer-focused tool. |
| Solves a real, painful problem. This wasn't a solution in search of a problem; it addressed a core need. | It, uh, doesn't seem to exist. The biggest con of all! |

Frequently Asked Questions about Secure LLM Context

What exactly is long-term context for an LLM?

Long-term context, or memory, is an AI's ability to retain information across multiple conversations or sessions. Instead of starting fresh every time, it can recall past interactions, user preferences, and previously provided documents to give more relevant and personalized responses. This is key for creating truly useful AI assistants.

Why is encrypting LLM context so important?

Users are constantly feeding AI models sensitive information—personal details, business strategies, financial data, you name it. If that context isn't encrypted both in transit and at rest, it becomes a prime target for hackers. A breach could expose vast amounts of private user data, leading to massive privacy violations and loss of trust.

Are there alternatives to a tool like ReLLM?

Yes, but they often require more manual work. Developers can build their own systems using a combination of technologies. This typically involves using a vector database (like Pinecone or Chroma) to store information and a framework like LangChain or LlamaIndex to manage the retrieval process (this is the RAG approach). ReLLM's appeal was that it offered to manage this entire complex process as a single, streamlined service.

Is ReLLM open source?

Based on the information available and the apparent business model, it's highly unlikely that ReLLM was an open-source project. It seemed to be positioned as a commercial Platform-as-a-Service (PaaS) tool for developers.

What does the 'DEPLOYMENT_NOT_FOUND' error typically mean?

This error, especially on modern hosting platforms, usually means that the specific application or website that the domain name was pointing to has been removed, or the link between the domain and the deployment has been broken. It's a step beyond a simple 404 error; it suggests the underlying code/application itself is gone.

A Great Idea in Search of a Home

In the end, the story of ReLLM is a bit of a frustrating mystery. It’s a perfect example of a tool that the industry genuinely needs. As more and more companies rush to integrate AI, the demand for secure, reliable, and easy-to-implement context management is only going to skyrocket. We're talking about the foundational layer for the next generation of personalized AI.

While ReLLM itself may be a ghost in the machine for now, the idea behind it is very much alive. I'll be keeping my eyes peeled for whoever picks up this torch next. Because the first company to truly nail this and make it accessible won't just have a great product, they'll be providing a fundamental building block for a more secure and intelligent AI future. And that's something worth getting excited about.
