If you've spent any time in the trenches building products with Large Language Models (LLMs), you know the dirty little secret. It's not the model selection or the fine-tuning that drives you crazy. It's the prompts. They're everywhere. They're in Slack DMs, Google Docs with horrendous naming conventions like prompt_v4_final_for_real_Johns_edit.txt, and sometimes just rattling around in your head.

Managing prompts has felt like the wild, wild west of software development: a chaotic mess of creativity and desperation with zero version control. For years, we developers have had Git to keep our code sane. It's our safety net, our single source of truth. So where the heck has that been for prompt engineering? It's a question I've asked myself on more than one occasion, usually late at night staring at a JSON response that makes absolutely no sense.

Then I stumbled across a tool making a pretty bold claim. A tool called Prompteams.

"Prompt Engineering isn't easy, and that's why we're here. Building a LLM Prompt Management System with version control, an end-to-end testing suite and prompt are your ultimate solution for iterating LLM prompts. The new Git of AI."

– The Prompteams Team

"The new Git of AI." That's a gutsy statement. As a professional skeptic (and SEO blogger), I had to see if it was just clever marketing or if they'd actually cracked the code. So, I took a look.

So, What Exactly Is Prompteams?

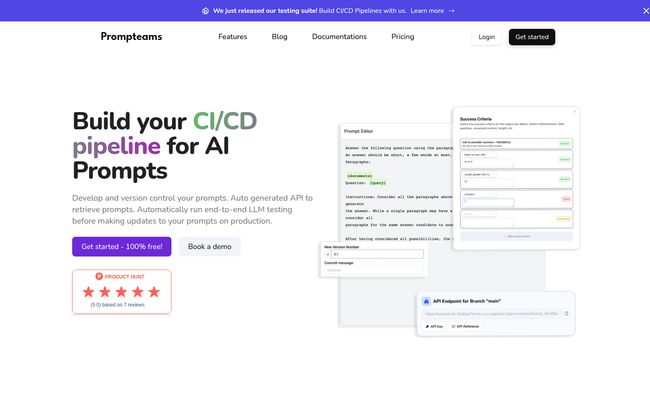

At its heart, Prompteams is an AI Prompt Management System. But that’s a bit of a mouthful. Think of it as a central hub for your entire team's prompt workflow. It’s designed to help you manage, test, and deploy your prompts in a structured, repeatable way. The big idea is to create a CI/CD pipeline, but for your prompts instead of your application code.

But the real magic, in my opinion, is who it's for. It’s not just for us engineers. It’s built to bridge the gap between the technical folks (the developers implementing the AI) and the industry specialists (the marketing gurus, the legal experts, the product managers who actually know what the prompt should say). It gets everyone out of their siloed documents and onto the same page. Finally.


The Features That Actually Matter

A pretty landing page is one thing, but the proof is in the pudding. I poked around the features, and a few things really stood out as solving real-world headaches.

Version Control That Feels Familiar (Like, Git Familiar)

This is the core of their "Git for AI" claim, and honestly, they nailed the analogy. Prompteams uses concepts that any developer will instantly recognize: repositories, branches, and commits. You can create a repository for each project. Need to experiment with a new prompt idea without breaking the production version? Just create a new branch. Test it, tweak it, and when it's ready, merge it into your main branch.

This is a complete game-changer. Gone are the days of trying to decipher which version of a prompt is the actual one being used in production. It introduces a level of sanity and accountability that has been sorely missing from prompt engineering. I remember one project where a last-minute tweak to a prompt in a shared doc by a well-meaning stakeholder completely broke a feature, and it took us hours to figure out why. A system like this would have prevented that entire fiasco.
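To make the branch-and-merge idea concrete, here is a toy in-memory model of a prompt repository. To be clear, this is my own sketch and not how Prompteams is implemented: the `PromptRepo` and `Commit` names, the fast-forward-only merge rule, and the example prompts are all assumptions made for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass(frozen=True)
class Commit:
    """One saved version of a prompt, with a human-readable message."""
    text: str
    message: str


@dataclass
class PromptRepo:
    """Toy repository (hypothetical): each branch is an ordered list of commits."""
    branches: dict[str, list[Commit]] = field(default_factory=lambda: {"main": []})

    def branch(self, name: str, source: str = "main") -> None:
        # A new branch starts as a copy of the source branch's history.
        self.branches[name] = list(self.branches[source])

    def commit(self, branch: str, text: str, message: str) -> None:
        self.branches[branch].append(Commit(text, message))

    def merge(self, source: str, target: str = "main") -> None:
        # Fast-forward only: the target must not have moved since branching.
        src, dst = self.branches[source], self.branches[target]
        if src[: len(dst)] != dst:
            raise ValueError("target has diverged; resolve manually")
        self.branches[target] = list(src)

    def head(self, branch: str = "main") -> str:
        # The latest commit on a branch is the version "in use" for that branch.
        return self.branches[branch][-1].text


repo = PromptRepo()
repo.commit("main", "Summarize the ticket in two sentences.", "initial prompt")
repo.branch("friendlier-tone")
repo.commit("friendlier-tone", "Summarize the ticket in two friendly sentences.", "soften tone")

production_before_merge = repo.head("main")  # experiment has not touched main yet
repo.merge("friendlier-tone")
production_after_merge = repo.head("main")   # main now serves the new wording
```

The point of the sketch is the guarantee it illustrates: work on `friendlier-tone` cannot change what `main` returns until someone deliberately merges, and every change carries a message explaining why it happened.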

An End-to-End Testing Suite for Your Prompts

Writing a prompt is just step one. How do you know it works consistently? How does it handle edge cases? What about when you want to check for things like hallucinations, tone, or specific formatting in the output? Prompteams has a built-in testing suite that lets you create unlimited test cases and criteria for every single prompt.

You can set up tests to ensure the LLM's response doesn't contain certain toxic words, or that it always includes a JSON object, or that it's under a certain character count. This moves prompt creation from a creative art to a repeatable engineering discipline. You build a library of tests that ensures any future changes don't cause unexpected regressions. For any team putting AI into a production application, this isn't a luxury; it's a necessity.
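The three kinds of checks just mentioned are easy to picture as small pass/fail functions. The sketch below is my own illustration in plain Python, not Prompteams' test format: the function names, the banned-word list, and the 200-character limit are all made up for the example.

```python
import json
from typing import Callable

# A check takes the model's response and returns True when it passes.
Check = Callable[[str], bool]


def excludes_words(banned: set[str]) -> Check:
    """Pass when none of the banned words appear (case-insensitive)."""
    def check(response: str) -> bool:
        words = {w.strip(".,!?;:\"'()").lower() for w in response.split()}
        return words.isdisjoint(banned)
    return check


def contains_json_object(response: str) -> bool:
    """Pass when some substring starting at '{' parses as a JSON object."""
    decoder = json.JSONDecoder()
    for start, char in enumerate(response):
        if char != "{":
            continue
        try:
            value, _ = decoder.raw_decode(response, start)
        except json.JSONDecodeError:
            continue
        if isinstance(value, dict):
            return True
    return False


def max_chars(limit: int) -> Check:
    """Pass when the response is at most `limit` characters long."""
    return lambda response: len(response) <= limit


def run_checks(response: str, checks: dict[str, Check]) -> dict[str, bool]:
    """Run every named check and report pass/fail per check."""
    return {name: check(response) for name, check in checks.items()}


checks = {
    "no_banned_words": excludes_words({"idiot", "stupid"}),
    "has_json_object": contains_json_object,
    "under_200_chars": max_chars(200),
}
sample = 'Here is the result: {"sentiment": "positive", "score": 0.9}'
results = run_checks(sample, checks)
```

Run against a saved set of responses every time a prompt changes, a handful of checks like these is what turns "it looked fine when I tried it" into a regression suite.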

Auto-Generated APIs and Real-Time Updates

Okay, this part is just plain cool. Once you have a prompt you're happy with in your main branch, Prompteams automatically generates an API endpoint for it. Your application code just calls this API to get the prompt. Simple.

But here’s the kicker: the API is updated in real-time. Let's say your marketing team needs to update the promotional language in a prompt. They can go into Prompteams, make the change on a branch, get it approved, and merge it to main. The moment it's merged, the API endpoint starts serving the new prompt. No code deployment. No asking an engineer to 'quickly push a change'. That's a workflow that saves an incredible amount of time and friction.
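On the application side, the pattern looks roughly like the sketch below. I have not seen the actual endpoint format, so treat everything specific here as an assumption: the URL is a placeholder, the `{"prompt": "..."}` response shape is a guess, and a real endpoint would presumably need an API key as well.

```python
import json
import urllib.request
from typing import Callable

# Hypothetical URL: the real endpoint format is not described in this article.
PROMPT_URL = "https://example.invalid/prompts/welcome-email/main"


def http_get(url: str) -> str:
    """Default transport: a plain HTTPS GET using the standard library."""
    with urllib.request.urlopen(url, timeout=10) as resp:
        return resp.read().decode("utf-8")


def fetch_prompt(url: str = PROMPT_URL, get: Callable[[str], str] = http_get) -> str:
    """Return the current prompt text.

    Assumes (hypothetically) a JSON body shaped like {"prompt": "..."}.
    """
    payload = json.loads(get(url))
    return payload["prompt"]


# A stand-in transport so the example runs without a network connection.
def fake_get(url: str) -> str:
    return json.dumps({"prompt": "Write a welcome email for {name}."})


prompt = fetch_prompt(get=fake_get)
```

Because the prompt is fetched at request time rather than baked into the build, a merged change shows up on the next call with no redeploy. In a real service I would still keep the last successfully fetched prompt around as a fallback in case the call fails.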

Bridging the Great Divide Between Experts and Engineers

I've touched on this already, but it's worth its own section. The typical AI workflow is so broken. The subject matter expert who knows the business logic writes a prompt in a Word doc. They email it to an engineer. The engineer has to copy, paste, format, and integrate it, often spotting issues the expert missed. They email back. The expert replies. It's a slow, painful game of telephone.

Prompteams acts as a shared workbench. The domain expert can write and iterate on the prompt in a user-friendly interface. The engineer can see those changes, review the test results, and grab the API endpoint, all from the same platform. It’s like giving the restaurant's chef direct access to update the digital menu board instead of having them scribble changes on a napkin for a waiter to run to the IT guy. It just makes sense.

Let's Talk About the Money: Prompteams Pricing

This is often the point where my enthusiasm for a new tool hits a brick wall. So many great platforms are locked behind expensive enterprise plans. But Prompteams surprised me.

Their pricing model is refreshingly simple. There are two tiers: Starter and Enterprise. The Starter plan is 100% Free. And I don't mean 'free trial' or 'free with major limitations'. I mean actually free. It includes unlimited test cases, unlimited team members, unlimited repositories, and access to the real-time API. That is an incredibly generous offering that lets any team, regardless of size or budget, get started with proper prompt versioning.

The Enterprise plan is a custom offering for larger organizations that need more control. This is where you get features like a Dockerized solution to run on your own infrastructure, a dedicated custom server, priority support, and the ability to request new features. It's a standard and sensible way to cater to big companies without crippling the tool for everyone else.

The Not-So-Perfect Parts (Because Nothing Is)

I wouldn’t be doing my job if I didn’t point out the potential downsides. No tool is perfect, especially a newer one.

First, there's a potential learning curve. While the Git analogy is a huge plus for developers, it might be slightly intimidating for non-technical team members at first. They'll need to understand the basic concepts of branches and merging. It's not rocket science, but it’s a hurdle.

Second, some features are still in the oven. The website mentions Knowledge Base and Live Chat interfaces, which sound amazing for building internal tools, but they appear to be in development. This isn't a dealbreaker for me (I'd rather a company focus on getting the core right), but it's something to be aware of.

Finally, if you're a team that absolutely must self-host everything, you'll need the Enterprise plan for the Dockerized solution. That's a pretty standard business model, but it's a consideration for teams with strict data residency or security requirements.

My Final Verdict: Is Prompteams Worth Your Time?

Yes. Unreservedly, yes.

For my money, the chaos of prompt management has been a low-key source of anxiety on every AI project I’ve consulted on. A tool like Prompteams feels less like a 'nice-to-have' and more like a 'where-have-you-been-all-my-life' solution. It brings a desperately needed layer of professionalism and process to the art of prompt engineering.

So, does it live up to the "Git of AI" moniker? In spirit and in function, I think it does. It's the closest thing I've seen to a true, purpose-built version control and collaboration system for prompts. If your team is building anything serious with LLMs, you owe it to your own sanity to give the free Starter plan a try. It might just save you from the next prompt_v5_final_revised_FINAL.txt disaster.

Frequently Asked Questions About Prompteams

- How does the prompt versioning in Prompteams work?

- It works much like Git, a tool developers use for code. You have a central 'repository' for your prompts. You can create 'branches' to work on new ideas or fixes without affecting the live version. Once you're happy, you 'commit' your changes and 'merge' them back into the main branch, creating a full history of every change.

- Can non-technical team members actually use Prompteams?

- Absolutely. It's designed for them. While there's a small learning curve to understand the branching concept, the user interface is clean and focused on writing and testing prompts, not on complex code. It's far easier than trying to collaborate across multiple documents and email chains.

- Is the Prompteams Starter plan really free forever?

- According to their pricing page, yes. The Starter plan is listed as 100% Free and is remarkably generous, offering unlimited members, repositories, and test cases. It's designed to get you started with their core workflow without a credit card.

- How does the API integration work for developers?

- It’s very straightforward. Prompteams automatically generates a stable API endpoint for your prompts. Your application simply makes a standard API call to this endpoint to retrieve the current, production-ready prompt. This decouples the prompt content from your app's code.

- How is this better than just using a normal Git repository to store prompts?

- A standard Git repo can store and version the text of your prompts, but that's about it. Prompteams is a purpose-built platform. On top of the version control, it adds an integrated testing suite, a user-friendly UI for non-coders, real-time collaboration features, and the instant, auto-generated API.

- What LLMs does Prompteams support?

- Prompteams itself is model-agnostic. It manages the prompt text, not the connection to the LLM. You fetch the prompt from the Prompteams API and then send it to whichever LLM you're using (like OpenAI's GPT-4, Anthropic's Claude, etc.) in your own application code. This gives you the flexibility to switch models without changing your prompt management workflow.
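
That last answer is easier to see in code. Here is a minimal sketch of keeping the prompt source and the model call separate; the `answer` helper, the fake model functions, and the `{text}` placeholder style are all my own assumptions, standing in for whatever prompt-fetching call and provider SDK you actually use.

```python
from typing import Callable

# Any function that takes a prompt string and returns the model's text.
Complete = Callable[[str], str]


def answer(fetch_prompt: Callable[[], str], complete: Complete, **variables: str) -> str:
    """Fetch the managed prompt template, fill it in, and send it to the chosen model."""
    template = fetch_prompt()
    # Assumes str.format-style placeholders such as {text} in the template.
    return complete(template.format(**variables))


# Stand-ins for real provider SDK calls (hypothetical; swap in your own client code).
def fake_model_a(prompt: str) -> str:
    return f"[model-a] {prompt}"


def fake_model_b(prompt: str) -> str:
    return f"[model-b] {prompt}"


# Stand-in for the call that retrieves the current prompt from your prompt store.
def stored_prompt() -> str:
    return "Translate to French: {text}"


reply_a = answer(stored_prompt, fake_model_a, text="good morning")
reply_b = answer(stored_prompt, fake_model_b, text="good morning")
```

Switching models is a one-argument change, and the prompt itself never has to move.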

Conclusion

The move from hobbyist tinkering to professional software development has always been marked by the adoption of better tools and processes. Prompteams feels like a significant step in that direction for the world of AI. It addresses a fundamental, messy problem with an elegant and powerful solution. By bringing version control, testing, and true collaboration to prompt engineering, it allows teams to build more reliable and scalable AI features. It's a tool that understands the problem space deeply, and I, for one, am excited to see where it goes from here.

References and Sources

- Prompteams Official Website

- Prompteams Pricing Page

- Emerging Architectures for LLM Applications - Andreessen Horowitz (a16z) - A good read on the evolving stack for AI development, which highlights the need for tools like this.