If you’ve spent any time building with LLMs over the past couple of years, you know the feeling. One minute you’re giddy with the magic of what you’ve created. The next, you’re staring at an API bill that could fund a small space program, or trying to figure out why your brilliantly crafted prompt suddenly started speaking in pirate slang. It’s the wild west out here, and we've all been riding bareback.

I’ve been in the SEO and traffic game for years, and I've watched trends come and go. But the AI wave is different. It’s less of a wave and more of a tsunami of pure, unadulterated potential mixed with utter chaos. We're building complex, non-deterministic systems on top of other complex, non-deterministic systems. It’s a miracle anything works at all, honestly.

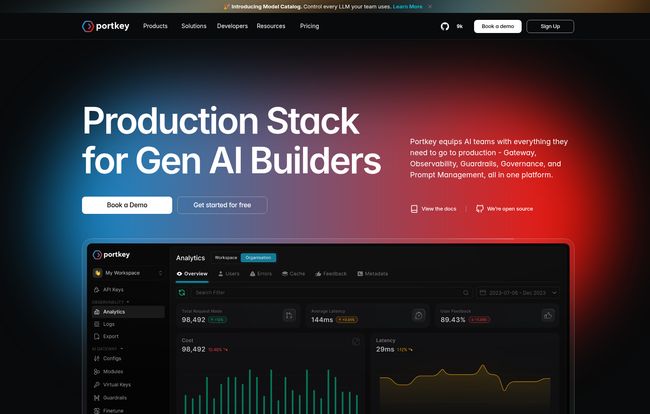

That's the exact headache that a tool like Portkey seems designed to solve. I’ve been keeping an eye on them, and they’re not just another wrapper library. They're positioning themselves as an actual control panel, an air traffic control system for your AI applications. A grown-up tool for a field that’s rapidly having to grow up. But does it live up to the hype? Let’s get into it.

So, What is Portkey, Really?

Think of it this way. When you build a standard web app, you have tools like Datadog, New Relic, or Sentry. You have dashboards, logs, and alerts. You know when things break and why they break. In the AI world... not so much. Debugging often feels like shouting into the void. Portkey aims to be that mission control for your AI stack.

The promise is deceptively simple: with just a few lines of code, you can route all your LLM calls through their gateway. Once you do that, you suddenly get a full suite of tools to observe, govern, and optimize what’s happening. It’s not hosting the models for you; it’s managing the requests to and from the models you already use (like OpenAI, Anthropic, Google, Cohere... you name it).

It integrates with the frameworks a lot of us are already using, such as LangChain, CrewAI, and AutoGen. This is a big deal: it means you don't have to rip out your existing plumbing to get the benefits.

The AI Gateway: Your Smart Front Door for LLMs

This, for me, is the crown jewel. The AI Gateway is where the magic starts. It’s more than just a proxy; it’s an intelligent router for all your API calls.

Reliability Through Smarter Routing and Fallbacks

We’ve all been there. You’re running a demo for a big client, and suddenly GPT-4 slows to a crawl or the API throws a 503 error. Awkward. Portkey's gateway can be configured with automatic retries and, more importantly, fallbacks. If your primary model is down, the gateway can automatically switch to a secondary one. For instance, if `gpt-4-turbo` is having a bad day, it can fail over to a `Claude 3` variant, or even to `gpt-3.5-turbo` for less critical tasks. That alone can be the difference between a minor hiccup and a complete service outage.
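To make the retry-then-fallback idea concrete, here is a minimal plain-Python sketch of the logic. It is not Portkey's SDK or config format (Portkey expresses this declaratively in its gateway config); `ProviderError`, `call_with_fallback`, and `fake_call` are hypothetical names, and the model strings are just labels.

```python
from __future__ import annotations

import time
from typing import Callable, Sequence


class ProviderError(Exception):
    """Hypothetical stand-in for a transient provider failure (503, timeout)."""


def call_with_fallback(
    prompt: str,
    models: Sequence[str],
    call_model: Callable[[str, str], str],
    retries_per_model: int = 2,
    backoff_s: float = 0.5,
) -> tuple[str, str]:
    """Try each model in order, retrying transient errors before falling back.

    Returns (model_used, response); raises if every model in the chain fails.
    """
    last_error: Exception | None = None
    for model in models:
        for attempt in range(retries_per_model + 1):
            try:
                return model, call_model(model, prompt)
            except ProviderError as err:
                last_error = err
                if attempt < retries_per_model:
                    # Exponential backoff between retries of the same model.
                    time.sleep(backoff_s * 2**attempt)
    raise RuntimeError("all models in the fallback chain failed") from last_error


# Usage with a fake provider call whose primary model is "down".
attempts: list[str] = []


def fake_call(model: str, prompt: str) -> str:
    attempts.append(model)
    if model == "gpt-4-turbo":
        raise ProviderError("503 Service Unavailable")
    return f"[{model}] {prompt}"


print(call_with_fallback("Hello", ["gpt-4-turbo", "claude-3-sonnet"], fake_call, backoff_s=0.0))
```

The primary model gets three attempts (one call plus two retries) before the request falls through to the secondary, so a brief blip is absorbed by retries while a real outage triggers the fallback.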

Finally, Sensible Cost Optimization

Let’s talk about money. LLM costs are no joke. A complex task might require a powerhouse model like Claude 3 Opus, but a simple summarization task? Using Opus for that is like using a sledgehammer to crack a nut. It’s expensive and wasteful.

The gateway lets you set up a cache that stores the responses to previous requests. If the same query comes in again, Portkey serves the cached response instead: no API call, no cost. In semantic mode, the cache can also match queries that are worded differently but mean the same thing. On top of that, you can build a routing layer that sends simple, low-stakes queries to cheaper, faster models (hello, Claude 3 Haiku) while saving the expensive, top-tier models for the heavy lifting. This isn't just a nice-to-have; for any app at scale, it's a financial necessity.
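Here's a rough sketch of how a cache check and a cost-based router fit together. Assumptions to flag loudly: the bag-of-words `embed` function is a toy stand-in for a real embedding model, the word-count rule in `pick_model` is a hypothetical heuristic, and none of this is Portkey's actual implementation.

```python
from __future__ import annotations

import math
import re
from collections import Counter
from typing import Callable


def embed(text: str) -> Counter[str]:
    # Toy bag-of-words vector; a real semantic cache would call an embedding model.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter[str], b: Counter[str]) -> float:
    dot = sum(count * b[token] for token, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class SemanticCache:
    """Return a stored response when a new prompt is similar enough to an old one."""

    def __init__(self, threshold: float = 0.9) -> None:
        self.threshold = threshold
        self.entries: list[tuple[Counter[str], str]] = []

    def get(self, prompt: str) -> str | None:
        vec = embed(prompt)
        scored = [(cosine(vec, stored), response) for stored, response in self.entries]
        best = max(scored, key=lambda s: s[0], default=None)
        if best is not None and best[0] >= self.threshold:
            return best[1]
        return None

    def put(self, prompt: str, response: str) -> None:
        self.entries.append((embed(prompt), response))


def pick_model(prompt: str) -> str:
    # Hypothetical routing rule: short prompts go to the cheap tier.
    return "claude-3-haiku" if len(prompt.split()) < 50 else "claude-3-opus"


def complete(prompt: str, cache: SemanticCache, call_model: Callable[[str, str], str]) -> str:
    cached = cache.get(prompt)
    if cached is not None:
        return cached  # cache hit: no provider call, no token cost
    response = call_model(pick_model(prompt), prompt)
    cache.put(prompt, response)
    return response


calls: list[str] = []


def fake_call(model: str, prompt: str) -> str:
    calls.append(model)
    return f"{model} answer"


cache = SemanticCache(threshold=0.8)
first = complete("Summarize this article about LLM gateways", cache, fake_call)
second = complete("summarize this article about LLM gateways!", cache, fake_call)
print(first, second, calls)
```

The second prompt differs only in casing and punctuation, so it is served from the cache and the provider is called once. The threshold is the key design knob: set it too low and users start getting answers to questions they didn't ask.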


Observability: Seeing Inside the Black Box

If the gateway is the front door, the Observability Suite is the security camera system, the accounting department, and the performance analyst all rolled into one. Before tools like this, tracking costs per user or per request was a nightmare of log parsing and spreadsheet gymnastics.

Portkey gives you a dashboard that breaks everything down. You can see:

- Costs: Track spending in real time. You can even tag requests to see which feature, or which customer, is costing you the most. No more end-of-month bill shock.

- Latency: See exactly how long your calls are taking, identify bottlenecks, and figure out if it's your code or the model provider that's being slow.

- Quality: This is a cool one. You can attach user feedback (thumbs up/down) to responses or set up automated checks to score the quality of the LLM's output. You get an actual feedback loop to improve your prompts.

It turns the black box into a glass box. Not completely transparent, maybe, but you can at least see the moving parts.
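To show what "tag requests and break down costs" boils down to, here's a small sketch that aggregates per-request logs by a metadata tag and averages latency per model. The prices are illustrative placeholders (not any provider's current rates), and `RequestLog`, `cost_by_tag`, and `mean_latency_by_model` are hypothetical names, not Portkey's log schema.

```python
from __future__ import annotations

from collections import defaultdict
from dataclasses import dataclass, field
from statistics import mean

# Illustrative USD prices per 1M tokens; substitute your provider's current rates.
PRICES_PER_M = {
    "small-model": {"input": 0.25, "output": 1.25},
    "large-model": {"input": 15.00, "output": 75.00},
}


@dataclass
class RequestLog:
    model: str
    input_tokens: int
    output_tokens: int
    latency_ms: float
    tags: dict[str, str] = field(default_factory=dict)


def cost_usd(log: RequestLog) -> float:
    price = PRICES_PER_M[log.model]
    return (log.input_tokens * price["input"] + log.output_tokens * price["output"]) / 1_000_000


def cost_by_tag(logs: list[RequestLog], tag: str) -> dict[str, float]:
    """Sum cost per value of one metadata tag, e.g. per feature or per customer."""
    totals: dict[str, float] = defaultdict(float)
    for log in logs:
        totals[log.tags.get(tag, "untagged")] += cost_usd(log)
    return dict(totals)


def mean_latency_by_model(logs: list[RequestLog]) -> dict[str, float]:
    by_model: dict[str, list[float]] = defaultdict(list)
    for log in logs:
        by_model[log.model].append(log.latency_ms)
    return {model: mean(values) for model, values in by_model.items()}


logs = [
    RequestLog("large-model", 2_000, 500, 1800.0, {"feature": "report", "customer": "acme"}),
    RequestLog("small-model", 1_000, 200, 300.0, {"feature": "summary", "customer": "acme"}),
    RequestLog("small-model", 4_000, 800, 450.0, {"feature": "summary", "customer": "globex"}),
]
print(cost_by_tag(logs, "feature"))
print(mean_latency_by_model(logs))
```

Even in this toy data, one large-model "report" call costs more than twenty times both "summary" calls combined, which is exactly the kind of thing tagging surfaces before the invoice does.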

Wrangling Prompts and Setting Boundaries

As AI apps mature, we're realizing that prompts are more than just text strings; they are valuable intellectual property. And leaving them scattered across a codebase is a recipe for disaster.

A Central Home for Your Prompts

Portkey provides a central repository for your prompts. Think of it as Git for prompts: your team can collaborate on them, version them, and update them without a code deployment. You can also A/B test different versions of a prompt to see which performs better. This is how you move from prompt crafting to actual prompt engineering.
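The two ideas here, versioned prompts and A/B testing, are easy to sketch locally. This in-memory `PromptRegistry` and the hash-based `ab_variant` helper are hypothetical illustrations, not Portkey's prompt API; the hosted version adds the collaboration UI and lets the app fetch the latest version at runtime instead of at deploy time.

```python
from __future__ import annotations

import hashlib
from dataclasses import dataclass, field


@dataclass
class PromptRegistry:
    """In-memory stand-in for a hosted prompt library: name -> list of versions."""

    versions: dict[str, list[str]] = field(default_factory=dict)

    def publish(self, name: str, template: str) -> int:
        history = self.versions.setdefault(name, [])
        history.append(template)
        return len(history)  # 1-based version number

    def get(self, name: str, version: int | None = None) -> str:
        history = self.versions[name]
        return history[-1] if version is None else history[version - 1]


def ab_variant(user_id: str, share_a: float = 0.5) -> str:
    """Deterministically bucket a user so they always see the same variant."""
    bucket = int(hashlib.sha256(user_id.encode("utf-8")).hexdigest(), 16) % 100
    return "A" if bucket < share_a * 100 else "B"


registry = PromptRegistry()
v1 = registry.publish("summarize", "Summarize the following text:\n{text}")
v2 = registry.publish("summarize", "Summarize the following text in three bullet points:\n{text}")

variant = ab_variant("user-42")
template = registry.get("summarize", v1 if variant == "A" else v2)
print(variant, template.format(text="Portkey routes LLM calls through a gateway."))
```

Hashing the user ID (rather than picking randomly per request) keeps each user on one variant, so their feedback can be attributed cleanly to a single prompt version.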

Guardrails: The Rules of the Road

This is huge for any business that cares about brand safety and compliance. Guardrails are programmable rules that your requests and responses must follow. You can use them to automatically scrub personally identifiable information (PII), block toxic or inappropriate language, or even enforce a specific brand voice or format. It's a safety net that helps keep your AI from going rogue and doing something that could land you in hot water.
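For a feel of what an input guardrail and an output guardrail look like, here's a deliberately simple sketch: regex-based PII redaction on the way in, and a "reply must be a JSON object" check on the way out. These functions and patterns are hypothetical illustrations, not Portkey's guardrail definitions, and regexes alone are nowhere near sufficient for real PII detection.

```python
from __future__ import annotations

import json
import re

# Deliberately simple patterns for illustration; real PII detection needs much more.
# Order matters: SSNs are matched before the looser phone pattern can swallow them.
PII_PATTERNS = {
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def redact_pii(text: str) -> tuple[str, list[str]]:
    """Input guardrail: replace PII with placeholders and report what was found."""
    found: list[str] = []
    for label, pattern in PII_PATTERNS.items():
        text, count = pattern.subn(f"[{label}]", text)
        if count:
            found.append(label)
    return text, found


def is_json_object(text: str) -> bool:
    """Output guardrail: require the model's reply to be a JSON object."""
    try:
        return isinstance(json.loads(text), dict)
    except json.JSONDecodeError:
        return False


clean, hits = redact_pii("Email jane.doe@example.com or call +1 (555) 123-4567. SSN 123-45-6789.")
print(clean, hits)
print(is_json_object('{"sentiment": "positive"}'), is_json_object("Sure! Here you go."))
```

In a gateway setup, a failed output check would typically trigger a retry, a fallback, or a blocked response, depending on how you configure the rule.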

The All-Important Question: Portkey Pricing

Okay, great features, but what’s it going to cost me? Their pricing model is pretty straightforward and seems well-aligned with the journey of a growing company. I appreciate that.

| Plan | Price | Best For | Key Features |
|---|---|---|---|
| Free Forever | $0 | Solo devs, hobbyists, and early-stage testing. | Up to 50k logs/month, basic AI gateway, observability, 2 prompt templates. |
| Startup | $49/month | Startups and small teams putting apps in production. | Up to 1M logs/month, full AI Gateway features, unlimited prompts & guardrails, longer data retention. |
| Custom | Custom pricing | Enterprises needing advanced security, support, and scale. | Everything in Startup plus SOC 2/HIPAA, SSO, dedicated support, and private cloud options. |

The free tier is genuinely useful for getting your feet wet. The $49/month Startup plan feels like the sweet spot for any serious project, unlocking the most important scaling features. And the enterprise option is there for when you hit the big leagues.

The Good, The Bad, and The Codey

No tool is perfect, so let’s get real. In my experience, even the best platforms have their trade-offs.

What I really like is the focus on the whole problem. It's a comprehensive platform that understands the lifecycle of a production AI app. The cost optimization and reliability features aren't just add-ons; they are core to the product. For any team lead or product manager, that’s music to your ears.

Now, for the caveats. Integrating any middleware, even one as easy to integrate as Portkey, means adding another network hop. They do a lot with caching and edge compute to minimize this, but the extra hop is a physical reality that can add a small amount of latency. You also have to modify your code for the initial integration. It's not a huge lift (you're mostly just changing the base URL of your API calls), but it's not zero work. Also, if you want a fully managed version on a private cloud, you’ll need to be on the Enterprise plan, which is pretty standard practice but something to be aware of.

So Who Is This For?

If you're a student just tinkering with the OpenAI API for the first time, Portkey is probably overkill. But if you are part of a team trying to ship a real, reliable, and cost-effective AI product? This is for you.

It's for the startup that just raised a seed round and needs to prove its AI features can scale without bankrupting the company. It's for the enterprise team trying to bring governance and predictability to their AI initiatives. It’s for anyone who has moved past the “wow, it works!” phase and is now in the “oh no, how do we run this thing?” phase.

Bringing Some Order to the AI Frontier

Look, the AI space is still a bit of a chaotic gold rush. Tools like Portkey represent the next stage of maturation. They're the picks and shovels: the control panels and the guardrails. They're the infrastructure that turns a cool tech demo into a sustainable business.

I’m genuinely optimistic about platforms like this. They address the real, often-unglamorous problems that developers and businesses face daily. By providing better visibility, control, and efficiency, Portkey gives teams a fighting chance to not just build innovative AI apps, but to run them successfully in the wild. And that, for me, is pretty exciting.

Frequently Asked Questions

What LLM providers does Portkey support?

Portkey supports a wide range of providers, including OpenAI, Anthropic, Google (Vertex AI & AI Platform), Cohere, Mistral, and many others. Their gateway is designed to be provider-agnostic, so you can mix and match models as needed.

Is it difficult to integrate Portkey into an existing project?

Not really. The basic integration typically involves changing the base URL for your LLM API calls to point to Portkey's gateway and adding your Portkey API key. For most SDKs, this is a one- or two-line change. They claim it can be done in minutes, and that seems about right for a simple setup.
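To illustrate what "change the base URL and add a key" means at the HTTP level, here's a sketch that builds (but does not send) an OpenAI-style chat request aimed at the gateway, using only the standard library. Assumptions: the gateway URL `https://api.portkey.ai/v1` and the `x-portkey-api-key` / `x-portkey-provider` header names reflect our reading of Portkey's docs and should be checked against the current documentation; `build_chat_request` is a hypothetical helper, and the keys and model name are placeholders.

```python
from __future__ import annotations

import json
import urllib.request

# Assumption: gateway base URL as documented by Portkey at the time of writing; verify before use.
PORTKEY_GATEWAY_URL = "https://api.portkey.ai/v1"


def build_chat_request(
    portkey_api_key: str, provider: str, provider_api_key: str, model: str, message: str
) -> urllib.request.Request:
    """Build an OpenAI-style chat request routed through the gateway (not sent)."""
    body = {"model": model, "messages": [{"role": "user", "content": message}]}
    return urllib.request.Request(
        url=f"{PORTKEY_GATEWAY_URL}/chat/completions",  # same path as OpenAI's API, different host
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {provider_api_key}",  # your own provider key
            "x-portkey-api-key": portkey_api_key,  # assumed header name
            "x-portkey-provider": provider,  # assumed header name
        },
        method="POST",
    )


req = build_chat_request("PORTKEY_KEY", "openai", "OPENAI_KEY", "gpt-4o-mini", "Hello")
print(req.get_method(), req.full_url)
# Sending it would be urllib.request.urlopen(req); omitted here to avoid a live network call.
```

The request body is unchanged from a direct provider call; only the host and a couple of headers differ, which is why the swap is usually so small in SDKs that accept a custom base URL.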

Can I use Portkey with frameworks like LangChain or CrewAI?

Yes, absolutely. Portkey is built to work alongside these popular agent and orchestration frameworks. It acts as the observability and management layer for the LLM calls that these frameworks make, making your agent-based workflows much more production-ready.

Is my data secure with Portkey?

Security is a major focus. They are SOC 2 Type 2 certified and offer features for GDPR and HIPAA compliance, especially on their Enterprise plan. The platform includes tools like PII redaction to help you manage sensitive data passing through the gateway.

What happens if I go over my plan's log limit?

Typically, SaaS platforms like this either prompt you to upgrade or charge for overages. Based on their pricing page, Portkey seems designed to nudge you to the next tier as your usage grows. The jump from the free plan's 50k logs to the Startup plan's 1 million logs is quite generous.

Does Portkey host the LLMs themselves?

No, and this is an important distinction. Portkey is not an LLM provider. It's a control panel that sits between your application and the LLM providers you choose to use (like OpenAI). You still need your own API keys for the underlying models.