I’ve been in the data and traffic game for a long time. I’ve seen trends come and go, I’ve seen “game-changers” that were anything but, and I’ve seen simple ideas completely reshape how we work. So when a company comes along with a claim as bold as “100x faster machine learning,” my internal skeptic, who’s been hardened by years of marketing fluff, immediately sits up and says, “Oh, really?”

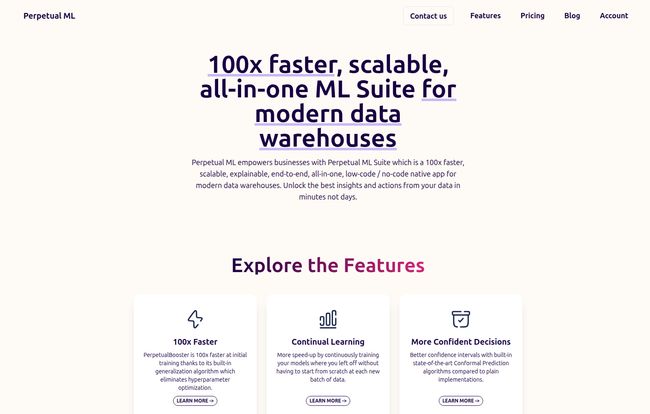

That company is Perpetual ML. And their claim isn't just a whisper; it's plastered right on their homepage. They’re offering an ML suite for modern data warehouses that promises to be not just faster, but scalable, explainable, and all-in-one. It sounds like the holy grail for anyone who's ever waited... and waited... for a model to train.

So, let's cut through the noise. Is this just another tool with big promises, or is there something genuinely different happening here? I decided to take a closer look.

What Exactly Is Perpetual ML?

At its core, Perpetual ML is a native app that lives directly inside your data warehouse. Right now, its home is Snowflake, but they've got their sights set on Databricks and other major platforms down the line. Think of it less like an external tool you have to pipe data to and more like a powerful upgrade you install directly onto your data engine.

The whole idea is to bring the computation to the data, not the other way around. This alone cuts down on a ton of the usual data engineering headaches. No more managing complex data pipelines just to get your information into a separate ML environment. It’s already there, ready to go. Simple. Elegant, even.

But the real magic, the secret to their audacious 100x speed claim, is how they handle one of the most tedious parts of machine learning.

The End of an Agonizing Ritual: Hyperparameter Tuning

If you've ever worked on an ML project, you know the pain. You build a great model, but to make it actually perform well, you have to tune its hyperparameters. This process feels less like science and more like a dark art. It involves endless cycles of guessing, testing, and tweaking dozens of settings in a process called hyperparameter optimization. It's a resource-hogging, time-sucking vortex that can bring projects to a grinding halt.

Perpetual ML’s big move is to just… eliminate it. They’ve developed a built-in generalization algorithm that basically does this work for you. By removing this massive, iterative bottleneck, they’re able to slash model training time from hours or days down to minutes. It’s a bold move, and if it works as advertised, it’s a genuine paradigm shift. Sorry, I know 'paradigm' is a bit of a buzzword, but I'm struggling to find a better word for it here.
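To put a rough number on that bottleneck, here's a back-of-the-envelope sketch of how many training runs a plain exhaustive grid search needs. The search space below is hypothetical (the parameter names and values are my own, not anything from Perpetual ML), and it says nothing about how their algorithm works internally. It only shows why skipping the tuning loop can plausibly save so much time.

```python
from itertools import product

# Hypothetical search space for a gradient-boosted model; the parameter
# names and values are illustrative, not taken from Perpetual ML.
search_space = {
    "learning_rate": [0.01, 0.03, 0.1, 0.3],
    "max_depth": [3, 5, 7, 9],
    "n_estimators": [100, 300, 1000],
    "subsample": [0.6, 0.8, 1.0],
}
cv_folds = 5  # each candidate is trained once per cross-validation fold


def count_grid_search_fits(space, folds):
    """Return the number of model fits an exhaustive grid search performs."""
    candidates = list(product(*space.values()))
    return len(candidates) * folds


total_fits = count_grid_search_fits(search_space, cv_folds)
print(f"{total_fits} fits with tuning vs. 1 fit without")
# prints "720 fits with tuning vs. 1 fit without"
```

Not every fit costs the same, and smarter search strategies try fewer candidates, so treat this as an upper-end illustration rather than a benchmark.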

Breaking Down The Perpetual ML Toolkit

Okay, so it's fast. But what can you actually do with it? A fast car is useless if it can't steer. Let's look at the features they're packing into this suite.

The PerpetualBooster and Continual Learning

The PerpetualBooster is the star of the show, delivering that initial 100x faster training. But what I find even more interesting is the Continual Learning feature. In traditional ML, when new data comes in, you often have to retrain your entire model from scratch. It's wildly inefficient. Perpetual ML allows your models to learn from new data without hitting the reset button every time. It’s like teaching an old dog new tricks without it forgetting all the ones it already knows. This is huge for dynamic environments where data is constantly changing—think fraud detection or inventory forecasting.
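To make the "no reset button" idea concrete, here's a deliberately tiny sketch. It is not Perpetual ML's method (a gradient booster would do this very differently); it's a one-feature least-squares line that keeps running totals, so a new batch of data updates the model without touching the old rows. The class name and the data are made up for illustration.

```python
class IncrementalLinearFit:
    """One-feature least-squares line (y = slope * x + intercept) that
    absorbs new batches without revisiting earlier data."""

    def __init__(self):
        # Running totals are all the "memory" the model needs.
        self.n = 0
        self.sum_x = 0.0
        self.sum_y = 0.0
        self.sum_xx = 0.0
        self.sum_xy = 0.0

    def update(self, xs, ys):
        """Fold a new batch of (x, y) pairs into the running totals."""
        for x, y in zip(xs, ys):
            self.n += 1
            self.sum_x += x
            self.sum_y += y
            self.sum_xx += x * x
            self.sum_xy += x * y

    def coefficients(self):
        """Return (slope, intercept) of the current least-squares line."""
        denom = self.n * self.sum_xx - self.sum_x ** 2
        slope = (self.n * self.sum_xy - self.sum_x * self.sum_y) / denom
        intercept = (self.sum_y - slope * self.sum_x) / self.n
        return slope, intercept


# Illustrative data: an initial batch, then a later batch that shifts the trend.
old_x, old_y = [0.0, 1.0, 2.0], [1.0, 3.0, 5.0]
new_x, new_y = [3.0, 4.0], [8.0, 10.0]

# Continual update: train once, then fold in only the new rows.
incremental = IncrementalLinearFit()
incremental.update(old_x, old_y)
incremental.update(new_x, new_y)

# Full retrain: start over and reprocess everything.
scratch = IncrementalLinearFit()
scratch.update(old_x + new_x, old_y + new_y)

print(incremental.coefficients(), scratch.coefficients())
```

Both models end up with the same line, but the incremental one only had to look at the two new rows. That's the appeal, in miniature.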

More Than a Guess: Better Confidence with Conformal Prediction

Here’s where things get a bit nerdy, but stick with me because this is important. A standard ML model gives you a prediction. A great ML system tells you how confident it is in that prediction. Perpetual ML uses a technique called Conformal Prediction to provide statistically valid prediction intervals. In business terms, this means you don’t just get a single number or label; you get a range (or a set of likely labels) that is calibrated to contain the true outcome a chosen share of the time, say 95%. That kind of calibrated uncertainty is something you can build a real business strategy on.
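For the curious, the simplest flavor of this idea (often called split conformal prediction) fits in a few lines. This is a generic textbook sketch, not Perpetual ML's implementation, and the calibration numbers are invented. The recipe: measure how wrong the model was on held-out data, take a high quantile of those errors, and use it as the half-width of every new interval.

```python
import math


def conformal_quantile(residuals, alpha):
    """Finite-sample-corrected (1 - alpha) quantile of absolute residuals."""
    n = len(residuals)
    rank = math.ceil((n + 1) * (1 - alpha))  # 1-based rank in the sorted list
    if rank > n:
        return math.inf  # too few calibration points for this alpha
    return sorted(residuals)[rank - 1]


def predict_interval(point_prediction, q):
    """Symmetric interval around a point prediction."""
    return point_prediction - q, point_prediction + q


# Hypothetical held-out calibration data: model predictions vs. actual values.
cal_predictions = [10.0, 12.0, 9.0, 15.0, 11.0, 14.0, 13.0, 8.0, 16.0]
cal_actuals = [11.0, 11.5, 9.5, 13.0, 11.25, 15.5, 12.0, 8.25, 16.75]

residuals = [abs(a - p) for a, p in zip(cal_actuals, cal_predictions)]

# alpha = 0.2 targets 80% coverage; nine calibration points are too few for 95%.
q = conformal_quantile(residuals, alpha=0.2)
low, high = predict_interval(12.5, q)
print(f"80% interval: [{low}, {high}]")
# prints "80% interval: [11.0, 14.0]"
```

The guarantee is about coverage on average across new cases, assuming they look like the calibration data. It is not a per-customer probability, which is exactly why the wording matters.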

A Full Toolbox for the Modern Data Stack

Beyond the headline features, there's a whole host of other capabilities. It handles geographic data natively, which is a specialized skill. It has built-in model monitoring, which means you don't need another third-party tool to tell you when your model's performance is drifting. It’s also versatile, supporting everything from tabular classification and regression to time series and even text classification.

The Strategic Wins: Portability and Hardware Freedom

Let's talk about the bigger picture. Two things stood out to me that any CTO or tech lead should care about.

First, it's portable. They're starting with Snowflake, but it's designed to run on different data warehouses. This is a subtle but massive point. It means you’re not locking yourself into a single vendor’s ecosystem for your ML needs. You can pick the right data warehouse for you, and your ML capabilities can come along for the ride.

Second, and this one is a budget-saver: there's no specialized hardware requirement. The race for GPUs is insane right now. Companies are spending fortunes on NVIDIA cards and TPUs just to keep their ML teams running. Perpetual ML is designed to run efficiently on standard CPUs. Let me repeat that: no GPU or TPU needed. This doesn't just cut costs; it democratizes access to powerful machine learning. Smaller companies that can't afford a fleet of A100s can suddenly play in the same league. That’s a pretty big deal.

The Catch: Pricing and Platform Availability

Alright, it can’t all be sunshine and roses. There are a couple of things to consider. The most obvious one is the pricing model. If you go to their pricing page, you’ll be greeted with the four words every person hates to see: “Contact us for pricing.”

Look, I get it. For enterprise software, pricing is often complex and tailored to the customer. But it’s also a barrier. I can’t give you a number, but I’d wager it’s a subscription model based on usage or compute. It’s a bit of a black box, and you’ll have to go through the sales process to find out what it’ll cost you.

The other current limitation is the focus on Snowflake. It's a smart go-to-market strategy—nail one platform before expanding—but if your company is a Databricks shop, you'll have to wait. They say it's on the roadmap, so it's one to watch.

Who Is This Really For?

After digging in, I see a few key profiles who should be paying attention:

- Overburdened Data Science Teams: For teams drowning in data and spending more time waiting than innovating, the speed here could be transformative. It lets you iterate, experiment, and deploy models at a pace that's just not possible with traditional methods.

- Ambitious Business and Data Analysts: The low-code/no-code interface is a genuine bridge. It empowers people who understand the business data but don't have a Ph.D. in computer science to build and deploy their own predictive models.

- Cost-Conscious Tech Leaders: For any CTO or VP of Engineering looking at their cloud bill, the idea of getting high-performance ML without a massive GPU expenditure has to be incredibly attractive.

Final Thoughts: Is Perpetual ML Worth the Hype?

Here’s the thing. The claims are big. And I’m still a healthy skeptic. But I’m also an optimist. Perpetual ML is targeting a very real, very expensive pain point in the machine learning workflow. By eliminating hyperparameter tuning and running natively in the data warehouse, they are offering a genuinely different approach.

Even if the speed-up is 50x or just 20x in some real-world scenarios, that's still a massive improvement. The combination of speed, accessibility through a low-code interface, and significant cost savings from ditching specialized hardware makes for a compelling argument. It feels less like another incremental tool and more like a foundational shift. I'll be watching this one closely, especially as they expand to other platforms. The future of MLOps might be getting a whole lot simpler.

Frequently Asked Questions (FAQ)

- What is Perpetual ML?

- Perpetual ML is a low-code/no-code machine learning suite that runs directly inside modern data warehouses like Snowflake. The company claims it trains models up to 100x faster by eliminating the need for hyperparameter optimization.

- How does Perpetual ML actually speed up model training?

- The primary speed boost comes from its proprietary generalization algorithm, which removes the time-consuming and computationally expensive step of hyperparameter tuning that is required for most traditional machine learning models.

- Do I need a GPU or other specialized hardware to use Perpetual ML?

- No. One of its main benefits is that it's designed to run efficiently on standard CPUs, eliminating the need for expensive and often hard-to-acquire hardware like GPUs or TPUs.

- What platforms does Perpetual ML currently support?

- Currently, Perpetual ML is available as a native app for Snowflake. The company has stated that support for Databricks and other modern data warehouses is on their future roadmap.

- How much does Perpetual ML cost?

- Perpetual ML does not publicly list its prices. To get pricing details, you need to contact their sales team directly through their website for a custom quote based on your organization's needs.

- Is Perpetual ML a true no-code solution for anyone to use?

- It's a low-code/no-code platform, which significantly lowers the barrier to entry. While a business analyst could certainly build models with it, a foundational understanding of data and what you're trying to predict is still beneficial for getting the best results.