We've all been there. You're trying to use a voice assistant on a website or in an app, and it's… painful. The awkward pause after you speak, the stilted, robotic reply that completely misses the point. It’s clunky. It feels less like talking to a futuristic AI and more like talking to a very confused, very slow toaster.

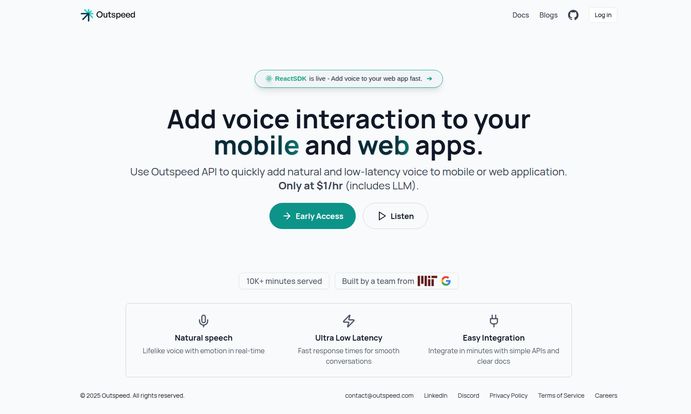

For years, as someone obsessed with user experience and traffic, I've seen how bad voice integration can absolutely kill engagement. It's a feature that should be magical but often ends up being a gimmick. So, when I stumbled upon a platform called Outspeed, my professional skepticism was on high alert. Their landing page hits you with a bold promise: "Add voice interaction to your mobile and web apps." And not just any voice interaction, but natural, low-latency conversations.

My first thought? Yeah, right. But then I saw it was built by a team from MIT and Google, and my interest was officially piqued. So I rolled up my sleeves and went down the rabbit hole. Is Outspeed just another drop in the AI ocean, or is it the tool that finally makes voice AI feel... human?

So, What is Outspeed, Really?

At first glance, Outspeed is about adding a voice to your app. Simple enough. But that's like saying a Ferrari is just about getting from A to B. The magic is in the how. Outspeed isn't just a text-to-speech API; it's an entire infrastructure for building AI applications on top of streaming data. Think live audio, video feeds, or even sensor data. It’s the plumbing, the wiring, and the brainstem all in one package.

The main attraction, the thing they're rightly shouting about, is the ability to create and deploy AI voice companions. These aren't your run-of-the-mill chatbots with a voice tacked on. We're talking about companions with emotion, memory, and most importantly, speed. It's the difference between asking a pre-programmed machine for the weather and having a genuine back-and-forth conversation.

The Low Latency Obsession

If there's one hill I'll die on in the world of UX, it's latency. Delay is the silent killer of good user experience. That fractional second of waiting is where immersion breaks and frustration begins. According to the usability gurus at Nielsen Norman Group, response times of about 0.1 seconds feel instantaneous to a user. Past roughly one second, users clearly notice the wait and their flow of thought starts to break. In a spoken exchange, that is exactly where the illusion of a direct conversation shatters.

This is where Outspeed seems to be focusing its energy. Their promise of "Ultra Low Latency" is, in my opinion, their biggest selling point. A fast response time means the conversation flows. It means your users aren't left wondering if the app crashed or if the AI is just taking a nap. It’s the foundational element that makes everything else—the emotion, the memory—actually work. Without it, you just have a well-written robot that takes too long to think.
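To make the latency argument concrete, here is a minimal Python sketch of a latency budget for a hypothetical cascaded voice pipeline (detect the end of speech, transcribe, generate, synthesize). The stage names and timings are illustrative assumptions, not measurements of Outspeed. The thresholds follow the Nielsen Norman Group limits of roughly 0.1, 1, and 10 seconds.

```python
from dataclasses import dataclass

# Approximate response-time limits from Nielsen Norman Group (seconds).
INSTANT_S = 0.1      # feels like the system reacted instantaneously
FLOW_S = 1.0         # delay is noticed, but the user's flow of thought survives
ATTENTION_S = 10.0   # beyond this, users tend to lose focus on the task

@dataclass
class Stage:
    name: str
    seconds: float

def total_latency(stages: list[Stage]) -> float:
    """Time from end of user speech to first audible reply, assuming stages run one after another."""
    return sum(s.seconds for s in stages)

def classify(latency_s: float) -> str:
    """Map a latency to the perceptual band it falls into."""
    if latency_s <= INSTANT_S:
        return "instantaneous"
    if latency_s <= FLOW_S:
        return "noticeable, flow preserved"
    if latency_s <= ATTENTION_S:
        return "flow broken"
    return "attention lost"

# Hypothetical per-stage timings (illustrative only, not Outspeed benchmarks).
pipeline = [
    Stage("end-of-speech detection", 0.30),
    Stage("speech-to-text", 0.20),
    Stage("LLM first token", 0.40),
    Stage("text-to-speech first audio", 0.15),
]

latency = total_latency(pipeline)
print(f"{latency:.2f}s -> {classify(latency)}")  # prints: 1.05s -> flow broken
```

Even when every stage looks quick on its own, the sequential total lands just over a second. That is why cutting latency across the whole stack, rather than in a single component, matters so much for voice.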

Building AI That Actually Feels… Alive

This is where things get interesting. Outspeed isn't just about fast responses; it's about making those responses feel genuine. They do this in two pretty clever ways.

It's Not Just What You Say, But How You Say It

The platform boasts "lifelike voice with emotion in real-time." This is a subtle but massive differentiator. Think about how you talk to a friend. Your tone changes, your pitch varies, you convey sarcasm, excitement, or concern. Most AI voices are monotone, delivering every line with the same flat affect. Outspeed aims to bake that emotional context right into the speech, making the interaction feel significantly less artificial. It's the difference between an AI that reads you a fact and one that shares an idea with you.

The Power of Stateful Memory

Have you ever had to re-explain yourself to a chatbot three times in one conversation? It's maddening. This is because most systems are stateless—every interaction is a blank slate. Outspeed incorporates stateful memory, which is a complete game-changer. This means the AI voice companion remembers previous parts of the conversation. You can refer back to something you said earlier, and it gets it. This continuity is the bedrock of natural conversation. It's how humans talk. It's what makes you feel heard and understood, instead of just being processed.
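The difference between stateless and stateful handling is easy to see in code. Below is a minimal Python sketch that assumes a simple sliding window of recent turns sent along with each new request. The class and function names are hypothetical, and nothing here describes how Outspeed actually stores or retrieves memory.

```python
from dataclasses import dataclass, field

# Hypothetical sketch of sliding-window conversation memory,
# not Outspeed's actual implementation.

@dataclass
class Turn:
    role: str  # "user" or "assistant"
    text: str

@dataclass
class ConversationMemory:
    """Keeps the most recent turns so each new request carries earlier context."""
    max_turns: int = 20
    turns: list[Turn] = field(default_factory=list)

    def add(self, role: str, text: str) -> None:
        self.turns.append(Turn(role, text))
        # Once the window is full, drop the oldest turns.
        if len(self.turns) > self.max_turns:
            self.turns = self.turns[-self.max_turns:]

    def context(self) -> list[dict[str, str]]:
        """Chat-style message list that could be handed to an LLM."""
        return [{"role": t.role, "content": t.text} for t in self.turns]

def stateless_context(user_text: str) -> list[dict[str, str]]:
    """A stateless system sends only the latest utterance: a blank slate every turn."""
    return [{"role": "user", "content": user_text}]

memory = ConversationMemory(max_turns=4)
memory.add("user", "My flight to Lisbon leaves Friday.")
memory.add("assistant", "Got it: Lisbon, this Friday.")
memory.add("user", "What should I pack for it?")

# Stateless: the model sees only "What should I pack for it?", so "it" is ambiguous.
print(len(stateless_context("What should I pack for it?")))  # 1
# Stateful: all three turns go along, so "it" can be resolved to the Lisbon trip.
print(len(memory.context()))  # 3
```

A fixed window is the simplest possible design. Production systems often summarize or retrieve older turns instead of simply dropping them, so treat the window size here as an illustrative choice.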

Getting Under the Hood for the Devs

Okay, so it sounds cool, but is it a nightmare to implement? As someone who has wrestled with my fair share of APIs, this was my next big question. The claim of "Easy Integration" is a bold one.

From what I've seen, they seem to be backing it up. They provide simple APIs and what they claim are clear docs, which is a godsend for any developer. They even have a ReactSDK ready to go, which is a huge green flag. It shows they're thinking about the developer workflow and trying to make adding voice to a web app as quick and frictionless as possible. No need to reinvent the wheel.

Another powerful feature is the unrestricted LLM access. This is huge. It means you're not locked into one specific Large Language Model. You have the freedom to choose the brain that powers your voice companion, giving you a ton of flexibility and control over the personality, cost, and capabilities of your final product.
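Model freedom usually comes down to coding against an interface rather than a specific provider. Here is a minimal Python sketch of that idea using `typing.Protocol`. `LLM`, `VoiceCompanion`, and the two toy backends are hypothetical names for illustration, not Outspeed's API.

```python
from typing import Protocol

Message = dict[str, str]

class LLM(Protocol):
    """Anything with a matching `complete` method can power the companion."""
    def complete(self, messages: list[Message]) -> str: ...

class EchoModel:
    """Toy backend standing in for one provider's model."""
    def complete(self, messages: list[Message]) -> str:
        return "echo: " + messages[-1]["content"]

class ShoutModel:
    """Toy backend standing in for a different provider's model."""
    def complete(self, messages: list[Message]) -> str:
        return messages[-1]["content"].upper()

class VoiceCompanion:
    """Hypothetical companion that depends only on the LLM protocol."""
    def __init__(self, llm: LLM, persona: str) -> None:
        self.llm = llm
        self.persona = persona

    def reply(self, user_text: str) -> str:
        messages: list[Message] = [
            {"role": "system", "content": self.persona},
            {"role": "user", "content": user_text},
        ]
        return self.llm.complete(messages)

# Swapping the "brain" is a one-line change; the companion code stays the same.
companion = VoiceCompanion(EchoModel(), persona="You are upbeat and concise.")
print(companion.reply("hello"))  # echo: hello

companion.llm = ShoutModel()
print(companion.reply("hello"))  # HELLO
```

Because `VoiceCompanion` never names a concrete model, personality, cost, and capability trade-offs become a matter of which backend you pass in.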

The $1/hr Question: Let's Talk Pricing

Alright, let's address the elephant in the room. The price. Outspeed is currently in Early Access at $1 per hour. My initial reaction was a slight wince. For a hobbyist, that can add up. But then I looked closer.

That $1/hr includes the LLM. Let me repeat that: it includes the LLM.


When you start to factor in the costs of speech-to-speech model inference, low-latency infrastructure, and the LLM API calls themselves, that $1/hr starts to look… surprisingly reasonable. Building and maintaining a similar stack on your own would be a significant engineering challenge and likely cost a whole lot more. For a startup or a company serious about implementing next-gen voice, this pricing model could actually be a bargain. It turns a massive capital and time expenditure into a predictable operational cost.
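If you want to sanity-check the budget for your own app, the arithmetic is simple. This Python sketch assumes the $1/hr Early Access rate is charged per hour of conversation time and prorated by the minute. The billing granularity is an assumption, and the traffic numbers in the example are made up.

```python
RATE_PER_HOUR = 1.00  # Early Access price quoted above (USD), LLM included

def session_cost(minutes: float, rate_per_hour: float = RATE_PER_HOUR) -> float:
    """Cost of one conversation, assuming per-minute proration (an assumption)."""
    return minutes / 60 * rate_per_hour

def monthly_cost(sessions_per_day: int, avg_minutes: float,
                 days: int = 30, rate_per_hour: float = RATE_PER_HOUR) -> float:
    """Total monthly spend for a given traffic level."""
    hours = sessions_per_day * avg_minutes * days / 60
    return hours * rate_per_hour

# Hypothetical traffic: 200 conversations a day, averaging 6 minutes each.
print(f"per conversation: ${session_cost(6):.2f}")  # per conversation: $0.10
print(f"per month: ${monthly_cost(200, 6):,.2f}")   # per month: $600.00
```

Under those assumptions, 600 hours of conversation a month comes to about ten cents per conversation, with inference and the LLM already folded in.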

A Few Things to Keep in Mind

No tool is perfect, especially one in its early stages. It’s important to go in with eyes open. The platform is in Early Access, which is a double-edged sword. On one hand, you get to be on the cutting edge and potentially influence the product's direction. On the other, you might encounter some bugs or limitations. That's just the nature of the beast.

Also, this isn't exactly a plug-and-play tool for beginners. The documentation mentions that it helps to have some understanding of AI and streaming data concepts. This isn't a knock against Outspeed; it's just a reality of the powerful technology it provides. It's a professional tool for builders who know what they're doing, not a drag-and-drop website builder.

So, Who Should Be Using Outspeed?

After digging in, I have a pretty clear picture of the ideal Outspeed user. This is for the ambitious developer, the forward-thinking startup, or the established company that wants to leapfrog the competition in user interaction. It's for anyone who looks at the current state of voice AI and thinks, "We can do better."

If you're building an interactive educational tool, a next-gen customer support agent, an in-car assistant, or even a literal AI companion app, Outspeed should be on your radar. If you're just looking to add a simple "read this text aloud" button to your blog, this might be overkill. This is for building the starship, not the canoe.

My Final Verdict: Is Outspeed a Game-Changer?

I came in skeptical, and I'm walking away genuinely excited. That doesn't happen often. Outspeed is tackling the hardest parts of creating believable voice AI: latency and memory. They're not just offering a piece of the puzzle; they're offering a cohesive toolkit to build the whole thing.

The fact that it’s backed by a team with credentials from MIT and Google adds a thick layer of confidence. These aren't just some guys in a garage; they're people who have likely lived and breathed these technical challenges at the highest level.

Is it the final form of AI interaction? Probably not. But it feels like a significant step in the right direction. It's a tool that could finally allow developers to build the kind of fluid, responsive, and memorable voice experiences that sci-fi has been promising us for decades. And for me, that’s something worth keeping a very close eye on.

Frequently Asked Questions

- What exactly is Outspeed?

- Outspeed is a platform that provides the tools and infrastructure for developers to build low-latency AI applications, with a particular focus on AI voice companions for web and mobile apps. It handles speech-to-speech inference and stateful memory, and offers easy integration through APIs and an SDK.

- How much does Outspeed cost?

- During its Early Access period, Outspeed is priced at a flat rate of $1 per hour. This price is inclusive of the Large Language Model (LLM) usage, which is a significant part of the value.

- What makes Outspeed's voice AI different from others?

- The two main differentiators are its focus on ultra-low latency for smooth, natural conversations, and its use of stateful memory. This allows the AI to remember context from earlier in the conversation, making the interaction feel much more human and less robotic.

- Is Outspeed difficult for developers to use?

- While it's a powerful tool, Outspeed aims for easy integration with simple APIs, clear documentation, and a ready-to-use ReactSDK. However, having a foundational understanding of AI and streaming data concepts is beneficial to get the most out of the platform.

- Who is the team behind Outspeed?

- The platform was built by a team with professional backgrounds at MIT and Google, which suggests a high level of expertise in AI and software engineering.

- Can I use my own LLM with Outspeed?

- Yes, one of the key features is unrestricted LLM access. This gives developers the flexibility to choose and integrate the Large Language Model that best fits their application's needs and personality.