Building apps with Large Language Models (LLMs) right now feels a bit like the Wild West. It's exciting, chaotic, and frankly, a complete mess half the time. Your dev team is over in one corner cooking up prompts with the latest OpenAI model, your product manager has a Google Doc full of different ideas, and your compliance officer just heard the words "data privacy" and is about to have a full-blown meltdown.

I’ve seen it happen on multiple projects. Everyone’s running in different directions, prompt versions get lost in Slack threads, and nobody really knows how much that fancy new AI feature is costing until the bill from Azure or AWS arrives. It's a recipe for slow progress and spectacular budget overruns.

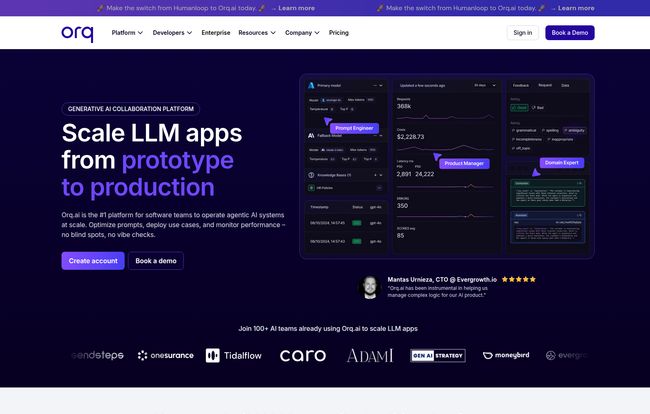

For a while, I’ve been on the lookout for something to bring a little... sanity to the situation. A platform that doesn't just give you more tools, but actually creates a central hub for the whole chaotic process. And I think I might have found a serious contender in Orq.ai.

So, What Exactly is Orq.ai?

On the surface, Orq.ai calls itself a "GenAI collaboration platform." But that's a bit of a corporate way of putting it. In my view, it’s more like a central command center for your entire LLM-powered application. Think of it as the shared kitchen where your developers, product managers, and even business folks can all come together to build, test, and monitor AI features without stepping on each other's toes.

It’s designed to manage the entire lifecycle of an LLM app, from that first crazy prompt idea to deploying it at scale and watching how it performs with real users. This end-to-end approach is what caught my eye. It’s not just another prompt playground or a simple API wrapper; it’s trying to solve the whole messy problem from start to finish.

The Core Features That Actually Matter

A platform is only as good as its features, right? Orq.ai has a bunch, but a few of them really stand out as solutions to problems I've personally faced.

A Single Source of Truth for Prompts

If you've ever tried to manage prompt versions in a spreadsheet, you know the special kind of pain I'm talking about. It’s impossible. Orq.ai gives you a centralized Prompt Management system. This means every prompt for every use case lives in one place, version-controlled and ready for collaboration. No more guessing which one is the “latest” or “the one that actually works.” A simple concept, but an absolute game-changer for team sanity.
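To make the "single source of truth" idea concrete, here is a minimal sketch of a versioned prompt store. This is not Orq.ai's API; the `PromptRegistry` class, its method names, and the prompt text are all hypothetical, and a real system would persist versions and track authors rather than keep them in memory.

```python
from typing import Dict, List, Optional


class PromptRegistry:
    """Toy in-memory store that keeps every version of every named prompt."""

    def __init__(self) -> None:
        self._versions: Dict[str, List[str]] = {}

    def publish(self, name: str, template: str) -> int:
        """Save a new version and return its 1-based version number."""
        history = self._versions.setdefault(name, [])
        history.append(template)
        return len(history)

    def get(self, name: str, version: Optional[int] = None) -> str:
        """Return the requested version, or the latest if none is given."""
        history = self._versions[name]
        if version is None:
            return history[-1]
        if not 1 <= version <= len(history):
            raise KeyError(f"{name!r} has no version {version}")
        return history[version - 1]


registry = PromptRegistry()
registry.publish("support_reply", "Answer the customer: {question}")
registry.publish("support_reply", "Answer politely and briefly: {question}")

# Everyone reads the same "latest", and old versions stay retrievable.
print(registry.get("support_reply").format(question="Where is my order?"))
print(registry.get("support_reply", version=1))
```

The point is less the code than the contract: one name, an append-only history, and an unambiguous answer to "which one is the latest?"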

The Ultimate LLM Playground for Experimentation

Should you use GPT-4 Turbo, or is Claude 3 Sonnet cheaper and good enough? How does Google's Gemini Pro stack up for your specific task? Answering these questions usually involves a lot of tedious code changes and testing. Orq.ai has a built-in experimentation layer where you can test the same prompt across multiple LLMs, side-by-side. You can compare outputs for quality, latency, and—most importantly—cost. This makes it so much easier to make data-driven decisions instead of just going with the model that has the most hype.
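If you wanted to do this kind of side-by-side comparison by hand, it might look roughly like the sketch below. Everything here is an assumption for illustration: the two "models" are stand-in functions rather than real SDK calls, and the per-token prices are made up, not real vendor pricing.

```python
import time
from typing import Callable, Dict, List, Tuple


# Hypothetical stand-ins for real model clients.
# Each takes a prompt and returns (output_text, tokens_used).
def fake_large_model(prompt: str) -> Tuple[str, int]:
    return f"[large] {prompt}", 120


def fake_small_model(prompt: str) -> Tuple[str, int]:
    return f"[small] {prompt}", 80


# Model name -> (callable, illustrative price per 1,000 tokens).
MODELS: Dict[str, Tuple[Callable[[str], Tuple[str, int]], float]] = {
    "large": (fake_large_model, 0.03),
    "small": (fake_small_model, 0.002),
}


def compare(prompt: str) -> List[dict]:
    """Run one prompt through every model and record output, latency, cost."""
    rows = []
    for name, (call, price_per_1k) in MODELS.items():
        start = time.perf_counter()
        output, tokens = call(prompt)
        latency_s = time.perf_counter() - start
        rows.append({
            "model": name,
            "output": output,
            "latency_s": latency_s,
            "cost": tokens / 1000 * price_per_1k,
        })
    return rows


results = compare("Summarise this ticket in one sentence.")
for row in sorted(results, key=lambda r: r["cost"]):
    print(f'{row["model"]:>6}  {row["latency_s"]:.6f}s  {row["cost"]:.5f}  {row["output"]}')
```

Quality still needs a human (or an evaluator) to judge the outputs, but latency and cost fall out of the loop for free once every model sits behind the same function signature.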

From Messy Code to Smooth Deployment

Juggling different API keys and model-specific request formats is a headache. Orq.ai’s AI Gateway acts as a universal translator. You code to their single API, and they handle the routing to whichever model you’ve chosen. This decouples your application logic from the specific LLM you’re using, making it a breeze to swap models later without rewriting half your app. It's the kind of smart abstraction that developers dream about.
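The decoupling described here is essentially the adapter pattern. The sketch below shows the general shape under my own assumptions: the `Gateway` class, the vendor adapters, and the use-case names are hypothetical and do not reflect Orq.ai's actual interface.

```python
from typing import Callable, Dict


# Hypothetical adapters. In real life each would translate the common
# request into one vendor's specific API call.
def call_vendor_a(prompt: str) -> str:
    return f"vendor-a: {prompt}"


def call_vendor_b(prompt: str) -> str:
    return f"vendor-b: {prompt}"


class Gateway:
    """Single entry point that routes each use case to a chosen provider."""

    def __init__(self) -> None:
        self._adapters: Dict[str, Callable[[str], str]] = {}
        self._routes: Dict[str, str] = {}

    def register(self, provider: str, adapter: Callable[[str], str]) -> None:
        self._adapters[provider] = adapter

    def route(self, use_case: str, provider: str) -> None:
        if provider not in self._adapters:
            raise ValueError(f"unknown provider: {provider}")
        self._routes[use_case] = provider

    def complete(self, use_case: str, prompt: str) -> str:
        # Application code only ever calls this method.
        return self._adapters[self._routes[use_case]](prompt)


gateway = Gateway()
gateway.register("vendor_a", call_vendor_a)
gateway.register("vendor_b", call_vendor_b)

gateway.route("summarise", "vendor_a")
before = gateway.complete("summarise", "hello")

# Swapping models is a routing change, not an application rewrite.
gateway.route("summarise", "vendor_b")
after = gateway.complete("summarise", "hello")
print(before)
print(after)
```

Notice that the two `complete` calls are identical. Only the routing table changed, which is exactly the property you want when a cheaper or better model shows up next quarter.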

Keeping an Eye on Everything with Observability

Once your feature is live, how do you know if it's working? Orq.ai provides detailed LLM Observability. This isn't just about server uptime; it's about tracking every request and response, monitoring costs per user or per call, and even collecting user feedback on the quality of the AI’s output. When an AI agent goes off the rails, you have detailed logs and traces to figure out why. This is how you move from a cool demo to a reliable, production-grade product.
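As a rough picture of what "tracking every request" and "costs per user" mean in practice, here is a small sketch. The `RequestLog` and `LogEntry` names, the users, and the cost figures are all invented for illustration; a real observability layer would also capture traces, timings, and model metadata.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class LogEntry:
    user_id: str
    prompt: str
    response: str
    cost: float
    feedback: Optional[int] = None  # e.g. +1 for thumbs up, -1 for thumbs down


class RequestLog:
    """Keeps one entry per LLM call and answers simple cost questions."""

    def __init__(self) -> None:
        self.entries: List[LogEntry] = []

    def record(self, user_id: str, prompt: str, response: str, cost: float) -> int:
        """Store a call and return its id so feedback can be attached later."""
        self.entries.append(LogEntry(user_id, prompt, response, cost))
        return len(self.entries) - 1

    def add_feedback(self, entry_id: int, score: int) -> None:
        self.entries[entry_id].feedback = score

    def cost_per_user(self) -> Dict[str, float]:
        totals: Dict[str, float] = defaultdict(float)
        for entry in self.entries:
            totals[entry.user_id] += entry.cost
        return dict(totals)


log = RequestLog()
first = log.record("alice", "Summarise my invoice", "Your invoice totals...", 0.25)
log.record("alice", "Translate to Dutch", "Uw factuur...", 0.5)
log.record("bob", "Draft a reply", "Hi there...", 0.125)
log.add_feedback(first, -1)  # the user flagged the first answer as unhelpful

print(log.cost_per_user())
```

Once calls, costs, and feedback live in the same record, questions like "which user is expensive?" or "which prompt gets the most thumbs down?" become simple queries instead of detective work.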

RAG-as-a-Service, Simplified

Retrieval-Augmented Generation (RAG) is a powerful technique for letting LLMs access your own private documents and data. But setting it up can be... a lot. Orq.ai offers RAG-as-a-Service, which aims to simplify the whole process of ingesting your knowledge bases and making them available to your prompts. This is huge for building internal knowledge bots or customer support agents that can give specific, context-aware answers.
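For anyone new to RAG, the core loop is: find the most relevant document, then paste it into the prompt as context. The sketch below shows that loop using plain word-count cosine similarity instead of real embeddings, which is my simplification. The documents, function names, and prompt wording are made up, and none of this represents how Orq.ai implements its service.

```python
import math
import re
from collections import Counter
from typing import List

# A tiny made-up knowledge base.
DOCS = [
    "Refunds are issued within 14 days of a return request.",
    "Our office is closed on public holidays.",
    "Shipping to EU countries takes 3 to 5 business days.",
]


def tokenize(text: str) -> List[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question: str, docs: List[str], k: int = 1) -> List[str]:
    """Return the k documents most similar to the question."""
    q = Counter(tokenize(question))
    ranked = sorted(docs, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def build_prompt(question: str, docs: List[str]) -> str:
    """Augment the question with the retrieved context."""
    context = "\n".join(retrieve(question, docs))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


print(build_prompt("How long does shipping take?", DOCS))
```

A production setup swaps the word counts for embeddings and a vector store, and adds chunking and ingestion pipelines. That plumbing is the "a lot" that a managed service aims to take off your plate.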

Who is Orq.ai Really Built For?

Let's be clear: this probably isn't the tool for a solo developer hacking on a weekend project. While their free plan is generous, the real power of Orq.ai unlocks when you have a team of developers and non-technical stakeholders all trying to build something meaningful with AI.

It’s for the scale-up that’s moving its AI features from prototype to production. It’s for the enterprise that needs iron-clad security and wants to give its teams a safe, compliant sandbox to innovate in. The cross-functional collaboration is the secret sauce here. It bridges the gap between the people writing the code and the people defining the product.

Now, does it require some know-how? Yes. To really max this thing out, you'll want to be familiar with concepts like APIs, LLMs, and maybe a bit of MLOps. But it does a fantastic job of lowering the barrier to entry for things like model evaluation and observability.

Let's Talk Money: Orq.ai Pricing Breakdown

Pricing is always the elephant in the room. Orq.ai has a pretty standard tiered model, which I appreciate for its clarity. No hidden fees, just straightforward plans.

| Plan | Price | Best For |
|---|---|---|
| Free | Free | Small teams and individuals just starting out. It's capped at 1K logs and 1 user, so think of it as a very generous trial. |
| Pro | €250 / month | Growing teams ready to ship and monitor real AI systems. You get way more logs (25K), 5 users, and a higher rate limit. |
| Enterprise | Custom | Large organizations with serious scale and security needs. This is where you get features like on-premise deployment, role-based access control, and custom everything. |

In my opinion, the €250/month for the Pro plan is pretty reasonable when you consider the cost of the problems it's solving. A single bad prompt that runs up a huge API bill could cost you more than that in a day. It's an investment in efficiency and risk management.
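To put a rough number on that claim, here is a back-of-the-envelope calculation. Every input is an assumption I picked for illustration (traffic, prompt size, and token price are not real figures from any vendor); only the €250 comes from the pricing table.

```python
# All figures below are illustrative assumptions, not real prices or traffic.
CALLS_PER_DAY = 50_000
TOKENS_PER_CALL = 4_000        # a bloated prompt that stuffs in too much context
PRICE_PER_1K_TOKENS = 0.01     # assumed price in euros
PRO_PLAN_PER_MONTH = 250       # Pro plan price from the table above

daily_cost = CALLS_PER_DAY * TOKENS_PER_CALL / 1000 * PRICE_PER_1K_TOKENS
print(f"Daily API spend: {daily_cost:.2f} euros")
print(f"More than a month of Pro: {daily_cost > PRO_PLAN_PER_MONTH}")
```

Under these assumptions, one wasteful prompt burns through several months of subscription fees in a single day. Your numbers will differ, but the shape of the math is the same.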

My Honest Take: The Good and The Not-So-Good

No tool is perfect, and it’s important to see both sides. Here's my unfiltered take.

What I really like is the holistic, end-to-end vision. Having prompt management, experimentation, deployment, and observability all under one roof is a massive win. It drastically reduces the number of tools you have to stitch together. The emphasis on compliance and data protection, with options for self-hosted or hybrid deployment, is also a critical feature that many other platforms overlook. For any company handling sensitive data, this is non-negotiable.

On the flip side, that Pro pricing tier, while fair, could be a barrier for early-stage startups or small teams on a tight budget. There's also a potential learning curve. This isn't a plug-and-play solution that will magically build your app for you. To get the most out of it, your team will need to invest some time to understand its workflow and capabilities. It's a professional tool, and it requires some professional effort.

Frequently Asked Questions about Orq.ai

What is Orq.ai in simple terms?

Think of it as a central workbench for teams building with AI. It helps you organize your prompts, test different AI models easily, deploy your features, and monitor them to make sure they're working well and not costing too much.

Can I use my own fine-tuned models with Orq.ai?

Yes. According to their documentation and plan comparisons, the platform supports custom models. This is particularly relevant for the Enterprise plan, which is designed for more bespoke AI workloads.

How does Orq.ai handle data privacy and security?

This is one of their strong points. They offer deployment options like in-VPC or on-premise for Enterprise customers, which means your sensitive data never has to leave your own infrastructure. They also say the platform is aligned with EU AI Act principles, which is a big deal for companies operating in Europe.

Is Orq.ai difficult to learn for non-developers?

While developers will get the most out of the technical features, the platform is designed for collaboration. A product manager can easily use the experimentation and observability dashboards to understand performance and provide feedback without writing code. There's a learning curve, but it's not exclusively for engineers.

What's the main difference between the Free and Pro plans?

The main differences are scale and collaboration. The Free plan is great for a single developer to try things out, but the limits on logs, users, and API calls are quite restrictive. The Pro plan lifts these restrictions significantly, making it suitable for a proper team shipping a real product.

Does Orq.ai replace tools like LangChain?

Not necessarily. It's more of a complement. You might use a framework like LangChain to structure your AI agent's logic, and then use Orq.ai to manage the prompts for that agent, test it against different models, deploy it, and monitor its performance. Orq.ai provides the MLOps and management layer that frameworks like LangChain don't focus on.

My Final Verdict

So, is Orq.ai the answer to all your GenAI chaos? It just might be. If you're a serious team trying to build, ship, and scale real products with LLMs, this platform brings a desperately needed layer of structure, collaboration, and control. It turns the Wild West of AI development into a well-organized, observable, and scalable operation.

It’s not a magic wand, but it's a powerful control tower. And in the current AI climate, a clear view from above is invaluable.

References and Sources

- Orq.ai Official Website

- Orq.ai Pricing Page

- Martin Fowler's article on Continuous Delivery for Machine Learning (CD4ML) - For context on the importance of MLOps.