If you've spent any time building with Generative AI or LLMs, you know the feeling. It’s a mix of pure magic and absolute, hair-pulling frustration. One minute you're marveling at the creative text your app is generating, the next you're staring at a surprisingly high bill from OpenAI and wondering where, exactly, all that money went. It’s like trying to debug a conversation – you know something went wrong, but you can’t rewind the tape to see the exact moment your AI went off the rails.

For years in the web dev world, we've had Application Performance Monitoring (APM) tools. They’re our safety nets. But the GenAI space? It’s still a bit of a wild west. That's why when a tool like OpenLIT pops up on my radar, I pay attention. Especially when it’s waving a big, beautiful “open-source” flag.

So, What's the Big Deal with OpenLIT?

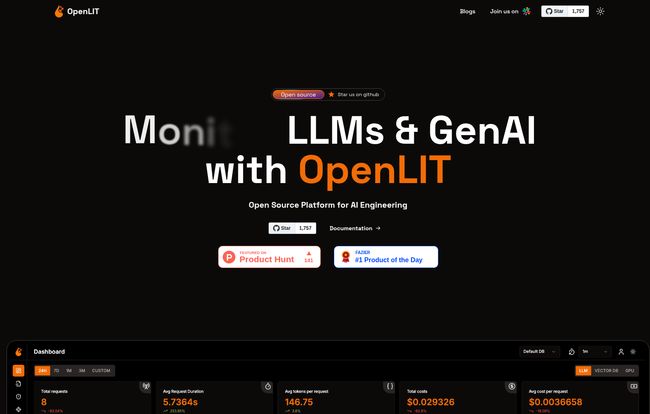

At its heart, OpenLIT is an observability platform built specifically for GenAI and LLM applications. Think of it as a mission control center for your AI. It’s OpenTelemetry-native, which is a nerdy detail that's actually a huge deal. It means it’s not some proprietary black box, but a tool that speaks the universal language of modern observability. No vendor lock-in. Music to my ears.

Instead of just telling you “your app is running,” it aims to show you how it’s running. Which prompts are costing you a fortune? Where are the bottlenecks in your RAG pipeline? Why did that one specific user query return gibberish? It unifies all those messy signals—traces, metrics, costs—into one dashboard. And you can host it yourself. For free.

The Features That Actually Move the Needle

A feature list is just a list until you see how it solves a real problem. I’ve seen enough flashy dashboards to know what’s fluff and what’s functional. Here’s my take on where OpenLIT shines.

Getting a Handle on Your Runaway AI Costs

The costs. Oh, the costs. This is the big one, isn't it? LLM APIs are priced by the token, and it's shockingly easy to burn through a budget. OpenLIT comes with built-in Cost Tracking that gives you a granular view of what you're spending, right down to the individual request. You can finally answer questions like, “Is our fancy new summarization feature costing more than it's worth?” For any startup or even an enterprise team experimenting with AI, this isn't just a nice-to-have; it's essential for survival.
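
To make the per-request idea concrete, here's a rough sketch of the arithmetic behind token-based cost tracking. To be clear, this is not OpenLIT's code or API. The model names, the prices, and the `Usage`, `request_cost`, and `cost_by_feature` names are all made up for illustration; real prices vary by provider and change over time.

```python
from dataclasses import dataclass

# Hypothetical prices in USD per 1,000 tokens (assumption, not real provider pricing).
PRICES_PER_1K = {
    "big-model": {"input": 0.03, "output": 0.06},
    "small-model": {"input": 0.0005, "output": 0.0015},
}


@dataclass
class Usage:
    """Token counts for one LLM request, tagged with the feature that made it."""
    model: str
    feature: str
    input_tokens: int
    output_tokens: int


def request_cost(usage: Usage) -> float:
    """Cost of a single request: tokens / 1000 * price, summed over input and output."""
    price = PRICES_PER_1K[usage.model]
    return (
        usage.input_tokens / 1000 * price["input"]
        + usage.output_tokens / 1000 * price["output"]
    )


def cost_by_feature(usages) -> dict:
    """Roll individual request costs up into a per-feature total."""
    totals = {}
    for usage in usages:
        totals[usage.feature] = totals.get(usage.feature, 0.0) + request_cost(usage)
    return totals
```

Feed `cost_by_feature` a list of `Usage` records and you get a spend figure per feature, which is exactly the kind of number you need to decide whether that summarization feature is earning its keep.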

I remember a project where our staging environment accidentally ran a script with a recursive loop calling a GPT-4 endpoint. The bill was… educational. A tool like this would have turned a four-figure mistake into a five-minute fix.

Peeking Inside the Black Box with Tracing

OpenLIT provides detailed Application and Request Tracing. This is your X-ray vision. It breaks down every single request into its component parts, or ‘spans’. You can see how long the LLM took to think, how long the database query took, how long the API call to your vector DB took. When a user complains about slowness, you can pinpoint the exact stage that’s causing the delay. It also includes Exception Monitoring, which is table stakes for any serious APM, but crucial nonetheless. It’s about finding the smoke before the fire starts.
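
If spans are new to you, here's a tiny standard-library sketch of the concept: time each stage of a request, then ask which one was slowest. It's a toy, not OpenLIT or the OpenTelemetry SDK. The `Trace` class, the stage names, and the `time.sleep` calls standing in for real work are all assumptions for illustration.

```python
import time
from contextlib import contextmanager


class Trace:
    """Collects (name, duration_in_seconds) pairs for one request."""

    def __init__(self):
        self.spans = []

    @contextmanager
    def span(self, name):
        start = time.perf_counter()
        try:
            yield
        finally:
            # Record the span even if the wrapped stage raised an exception.
            self.spans.append((name, time.perf_counter() - start))

    def slowest(self):
        """Return the (name, duration) pair with the longest duration."""
        return max(self.spans, key=lambda item: item[1])


def handle_request(trace):
    """Hypothetical request handler; each sleep stands in for a real stage."""
    with trace.span("vector_db_lookup"):
        time.sleep(0.01)
    with trace.span("llm_call"):
        time.sleep(0.05)
    with trace.span("db_write"):
        time.sleep(0.005)


trace = Trace()
handle_request(trace)
name, seconds = trace.slowest()
print(f"slowest stage: {name} ({seconds * 1000:.1f} ms)")
```

A real tracing setup adds parent/child relationships, attributes, and export to a backend, but the core idea is the same: wrap each stage, record how long it took, and look at the breakdown instead of guessing.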


Taming the Prompt Engineering Beast

And don't even get me started on managing prompts across a team. It quickly devolves into a mess of text files, Slack messages, and Google Docs. OpenLIT’s Centralized Prompt Repository is a godsend. It’s a single source of truth where you can store, version, and manage your prompts. There’s even a “Playground” to test out different models and settings side-by-side. This helps standardize what you’re sending to the LLM, which leads to more consistent—and less chaotic—results.

Stop Putting API Keys in Plain Text (Please!)

Security is often an afterthought in the rush to build something cool. OpenLIT’s Vault Hub provides a secure place to store and manage your secrets, like API keys. It’s a simple concept, but one that prevents a whole class of catastrophic security breaches. If you're still pasting API keys directly into your code or into .env files that get committed or passed around, this feature alone is worth the setup time.

The Good, The Not-So-Good, and The Free

So, what’s the catch? Nothing is ever perfect. What I really like about OpenLIT is its philosophy. Being open-source and OpenTelemetry-native makes it a team player in your tech stack. It’s not trying to lock you into its ecosystem. The cost tracking, prompt management, and detailed tracing are exactly the tools developers are crying out for right now.

However, it’s not a magic wand. The main drawback is that it requires setup and configuration. This isn’t a slick, one-click SaaS product you just sign up for. You’ll need to get your hands a little dirty. If you’re not familiar with OpenTelemetry, there might be a bit of a learning curve. I also noticed that while the core functionality is there, the documentation could use a bit more fleshing out, which is pretty typical for a growing open-source project. It’s a small price to pay for the power and freedom you get, in my opinion.

And the pricing? Well, I went to find their pricing page, and it gave me a 404 error. Which is actually the most honest pricing page I've seen in a while. It's open-source. It's free. You host it yourself, so your only costs are the server resources you give it. For teams on a budget, this is a massive win.

So Who Is This For?

I see OpenLIT as a perfect fit for a few groups. Startups and small-to-medium-sized teams building GenAI products will find the cost tracking and observability invaluable. Developers and teams who are already invested in the OpenTelemetry standard will be able to adopt this ridiculously fast. It's for the builders who want control and transparency and aren't afraid to run their own infrastructure. It's probably not for the huge enterprise that wants a fully managed service with 24/7 white-glove support, at least not yet. But for the rest of us in the trenches? It's a very compelling option.

Frequently Asked Questions

- Is OpenLIT really free to use?

- Yes, it is. OpenLIT is an open-source project distributed under the Apache 2.0 license. You can download it, deploy it, and use it without paying any licensing fees. Your only costs will be for the infrastructure you host it on.

- What is OpenTelemetry and why does it matter?

- OpenTelemetry (or OTel) is an open-source observability framework: a vendor-neutral collection of tools, APIs, and SDKs for generating, collecting, and exporting telemetry such as traces, metrics, and logs. By being OTel-native, OpenLIT adheres to an industry standard, ensuring it can integrate smoothly with a wide array of other tools and platforms without locking you into a proprietary system.

- How difficult is it to set up OpenLIT?

- It's more involved than a SaaS signup. You'll need some familiarity with deploying applications, possibly using Docker. However, for a developer comfortable with modern DevOps practices, the process should be straightforward. The project's documentation and community are your best resources here.

- Can OpenLIT actively reduce my LLM API costs?

- Indirectly, yes. It won't negotiate a better rate with OpenAI for you, but by showing you exactly which requests and prompts are the most expensive, it gives you the data you need to optimize them. You can identify inefficiencies, cache common requests, or switch to cheaper models for certain tasks, all based on the insights from OpenLIT.

- Does OpenLIT only work with OpenAI models?

- No, it's designed to be model-agnostic. It integrates with a variety of LLM providers and frameworks, including not just OpenAI but also platforms like Hugging Face, Anthropic, and more. Its foundation in OpenTelemetry makes it adaptable.
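
As an example of acting on that cost data, here's a minimal sketch of the "cache common requests" idea from the cost question above. It's my own illustration, not an OpenLIT feature: `ResponseCache` is a hypothetical name, and `call_model` stands in for whatever function actually calls your LLM provider. It also assumes identical prompts should get identical answers, which is not true for every use case.

```python
import hashlib
import json


class ResponseCache:
    """Serves repeated (model, prompt) pairs from memory instead of re-calling the LLM."""

    def __init__(self, call_model):
        self._call_model = call_model  # function(model, prompt) -> response text
        self._store = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(model, prompt):
        # Hash the model and prompt together so different models never share entries.
        payload = json.dumps({"model": model, "prompt": prompt}, sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def complete(self, model, prompt):
        key = self._key(model, prompt)
        if key in self._store:
            self.hits += 1
            return self._store[key]
        self.misses += 1
        response = self._call_model(model, prompt)
        self._store[key] = response
        return response
```

Every cache hit is a request you didn't pay for, and the `hits` and `misses` counters tell you whether the cache is worth keeping. A production version would also want expiry and a size limit.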

Final Thoughts

The GenAI space is moving at a breakneck pace, and our tooling is racing to keep up. OpenLIT feels like a significant step in the right direction. It’s a tool built by developers, for developers, addressing the real-world pains of building with large language models. It's not polished to a mirror shine just yet, but the foundation is solid, the core features are spot-on, and the price is unbeatable.

I’ve got it starred on GitHub, and I'll definitely be watching its development. If you're building with LLMs and feeling a bit lost in the dark, OpenLIT might just be the light you’re looking for.