Alright, let’s have a chat. As someone who’s been neck-deep in the SEO and digital marketing world for years, I’ve seen AI tools come and go. They all promise to revolutionize our workflow, generate mind-blowing traffic, and basically do our jobs for us. And some of them are pretty great! But they all share one tiny, nagging detail: they live in the cloud.

Your prompts, your data, your brilliant, half-formed ideas… they all get sent off to a server owned by a massive corporation. And call me old-fashioned, but that’s always given me the heebie-jeebies. Every time I hit 'Enter' on a prompt, a little voice in my head wonders, "Who else is reading this?"

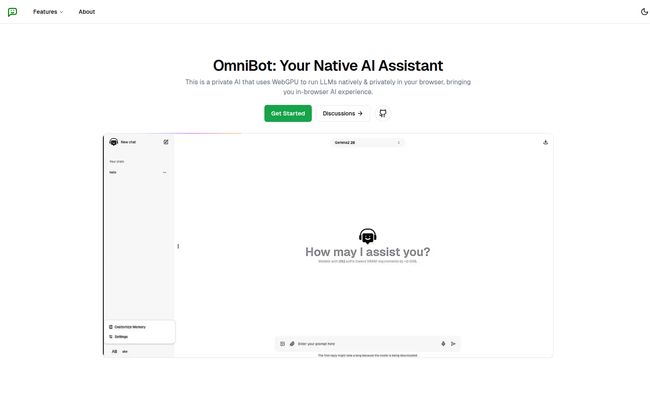

So, when I stumbled across a tool called Omnibot, my curiosity was definitely piqued. The promise? An AI that runs natively in your browser. Locally. On your machine. No servers, no data-snooping, no internet connection required after the initial setup. Could this be real? Let's get into it.

What Exactly is Omnibot? (And Why Should You Care?)

So what's the big deal? In the simplest terms, Omnibot is an AI chat platform that doesn't use the cloud. Think of it like this: most AI tools like ChatGPT or Claude are like ordering takeout. You send your order (your prompt) to a huge restaurant (their servers), they cook it up, and send it back. It’s convenient, but the restaurant knows what you ordered, when you ordered it, and they're probably using that data to sell more food.

Omnibot is like having a personal chef who lives and cooks exclusively in your own kitchen. Nothing leaves the house. Your secret family recipes (your data) stay secret. It runs Large Language Models (LLMs)—the brains behind the AI—directly inside your web browser using a neat piece of tech called WebGPU. This means all the processing happens on your hardware. It’s a fundamental shift in how we interact with AI.

Your conversations are yours. Period. That alone is a massive win for anyone concerned about privacy in this day and age.

The Two Pillars: Absolute Privacy and Offline Freedom

Let's break down the two things that I think make Omnibot a really interesting proposition.

Your Data Stays YOURS

I can’t stress this enough. In an era of data breaches and constant surveillance capitalism, the idea of a truly private AI is a breath of fresh air. All the AI models Omnibot uses run locally on your PC. Your inputs are processed on your machine and never sent over the internet. This is a game-changer for developers working on proprietary code, writers drafting sensitive material, or just anyone who prefers their digital life to remain private. Honestly, it's how I feel these tools should have worked from the beginning.

Cutting the Cord with an Offline AI

Have you ever been on a flight, hit a great creative stride, and wanted to bounce ideas off an AI, only to remember… no Wi-Fi? Or maybe your home internet decided to take an unscheduled nap right on deadline day. It's infuriating. Omnibot sidesteps this whole problem. Once you download a model you want to use, it works completely offline. It's a self-contained powerhouse, ready to go whenever and wherever you are. For a digital nomad or frequent traveler, that kind of reliability is golden.

A Peek Under the Hood

As a tech enthusiast, I had to look into how this whole thing works. The secret sauce is WebGPU, a new-ish web standard that gives web pages more direct access to your computer’s graphics card (GPU). Think of it as the superhighway that finally lets heavy-duty applications run smoothly inside a browser tab.
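If you're curious whether your own browser can do this, here's a minimal sketch of a WebGPU check in TypeScript. The `navigator.gpu` entry point and its `requestAdapter()` method are part of the WebGPU API. The helper names (`hasWebGPU`, `canUseGpu`) and the small stand-in interfaces are my own assumptions for illustration, not anything Omnibot documents.

```typescript
// Minimal structural types so the sketch compiles without extra WebGPU type packages.
interface GpuLike {
  requestAdapter(): Promise<object | null>;
}
interface NavigatorLike {
  gpu?: GpuLike;
}

// Hypothetical helper: true when the browser exposes the WebGPU entry point.
function hasWebGPU(nav: NavigatorLike | undefined): boolean {
  return nav !== undefined && nav.gpu !== undefined;
}

// Hypothetical helper: resolves to true only if a GPU adapter is actually returned.
async function canUseGpu(nav: NavigatorLike | undefined): Promise<boolean> {
  if (nav === undefined || nav.gpu === undefined) return false;
  const adapter = await nav.gpu.requestAdapter();
  return adapter !== null;
}

// In a browser this is the real navigator; outside one it may be missing or lack `gpu`.
const browserNav = (globalThis as unknown as { navigator?: NavigatorLike }).navigator;

console.log(hasWebGPU(browserNav) ? "WebGPU entry point found" : "WebGPU entry point not found");
canUseGpu(browserNav).then((ok) => {
  console.log(ok ? "A GPU adapter is available" : "No usable GPU adapter");
});
```

The two-step check matters because a browser can expose `navigator.gpu` and still return no adapter, for example when the graphics hardware or driver is unsupported.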

Omnibot is built on what I'd call the modern AI developer's 'dream team' stack. It leverages the WebLLM project, which is the core library making this in-browser magic possible. It pulls models from places like Hugging Face and uses tech from giants like Meta and Microsoft (think Llama and Phi models). It also integrates LangChain, a framework for building complex applications with LLMs. This isn't some weekend project; it's built on a solid, forward-thinking foundation.

Making It Personal with Custom Memory

Another feature that caught my eye is 'Custom Memory / Instructions'. This is where you can give the AI a bit of a personality or prime it with information about you and your needs. You can tell it, "I'm an SEO expert. When I ask for content ideas, focus on long-tail keywords and user intent." Or, "I'm a sarcastic writer from Texas, so match my tone."

Over time, this allows the AI to give you much more personalized and genuinely helpful responses, without you having to re-explain the context in every single chat. It's a simple feature, but one that makes the tool feel less like a generic robot and more like a real assistant.
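I don't know how Omnibot wires this up internally, but a common way to implement saved instructions is to prepend them as a "system" message ahead of every conversation. Here's a minimal sketch under that assumption. The `buildMessages` helper and the `ChatMessage` type are hypothetical names of mine, and the role-based message shape is the widely used chat format rather than anything confirmed by the site.

```typescript
type Role = "system" | "user" | "assistant";

interface ChatMessage {
  role: Role;
  content: string;
}

// Hypothetical helper: put the saved instructions first, then prior turns, then the new prompt.
function buildMessages(memory: string, history: ChatMessage[], prompt: string): ChatMessage[] {
  const trimmed = memory.trim();
  // Skip the system message entirely when no instructions have been saved.
  const system: ChatMessage[] = trimmed.length > 0 ? [{ role: "system", content: trimmed }] : [];
  return [...system, ...history, { role: "user", content: prompt }];
}

const messages = buildMessages(
  "I'm an SEO expert. When I ask for content ideas, focus on long-tail keywords and user intent.",
  [],
  "Give me five blog post ideas about local-first software.",
);

console.log(messages.map((m) => m.role).join(" > ")); // prints: system > user
```

Because the instructions ride along with each request, you set them once and every new chat starts with the same context.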

Let's Be Real: The Requirements and Trade-Offs

Okay, it's not all sunshine and rainbows. Running a powerful AI on your own machine comes with some strings attached. This isn't for your grandma's 10-year-old laptop (unless your grandma is a hardcore gamer).

- GPU Power Needed: You need a decent graphics card. The site says you need a GPU with at least 6GB of VRAM for the 7B (7-billion-parameter) models and 3GB for the smaller 3B models. Most modern gaming laptops or desktops will handle this just fine, but a standard work ultrabook might struggle.

- The Initial Download: You have to download the models before you can use them offline. These aren't small files; they can be several gigabytes. So you'll need a good internet connection for the initial setup and some patience.

- Mobile Experience: Mobile support is a question mark. The site says it depends on WebGL compatibility, and WebGL is the older browser graphics API that WebGPU is meant to succeed. This tells me the mobile experience might not be as smooth or universally supported as the desktop one. For now, I'd consider this a desktop-first tool.
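To make the hardware and download trade-offs concrete, here's a small back-of-the-envelope sketch. The 6GB and 3GB thresholds come from the site as quoted above. The function names, the 4 GB example file size, and the 100 Mbps connection are assumptions I picked purely for illustration.

```typescript
type ModelSize = "7B" | "3B";

// Thresholds quoted in the article: 6 GB of VRAM for 7B models, 3 GB for 3B models.
const MIN_VRAM_GB: Record<ModelSize, number> = { "7B": 6, "3B": 3 };

// Hypothetical helper: the largest model size that fits in the given VRAM, or null if none does.
function largestModelFor(vramGb: number): ModelSize | null {
  if (vramGb >= MIN_VRAM_GB["7B"]) return "7B";
  if (vramGb >= MIN_VRAM_GB["3B"]) return "3B";
  return null;
}

// Hypothetical helper: rough one-time download estimate in minutes.
function downloadMinutes(sizeGb: number, mbps: number): number {
  const megabits = sizeGb * 8 * 1000; // 1 GB = 8000 megabits (decimal units)
  return megabits / mbps / 60;
}

console.log(largestModelFor(8)); // prints: 7B
console.log(largestModelFor(4)); // prints: 3B
console.log(largestModelFor(2)); // prints: null
console.log(downloadMinutes(4, 100).toFixed(1)); // prints: 5.3
```

Real download times will vary with server speed and network conditions, so treat the last number as a best case.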

What's the Price of This Privacy?

This is the million-dollar question, isn't it? The website is a little quiet on pricing. There's no pricing page. However, the FAQ section has a very telling question: "Do I get access for this for free?" This suggests that, at least for now, Omnibot is free to use. My guess? It's a classic product-led growth strategy. Get the tech out there, build a community of users who love it, and maybe introduce premium features or paid, more powerful models down the line. For now, it seems you can get in on the ground floor without opening your wallet, which is pretty compelling.

I'd say jump on it while you can. It's a great way to experience the next wave of AI without a subscription fee.

Frequently Asked Questions about Omnibot

- Is my data really 100% private with Omnibot?

  Yes. The core principle of Omnibot is local processing. The AI models run directly in your browser on your computer. Your prompts, conversations, and data are never sent to any external server, ensuring complete privacy.

- How can it work without an internet connection?

  You need an internet connection for the one-time download of an AI model. Once that model is saved to your device, Omnibot can run it locally, allowing you to use the AI completely offline.

- What kind of computer do I need to run it?

  You'll need a fairly modern computer with a capable GPU (graphics card). For larger, more capable models (7B), you'll want at least 6GB of VRAM. For smaller models (3B), 3GB of VRAM should suffice. Check your system specs before you start.

- Is Omnibot completely free?

  Currently, it appears to be free to use. The platform doesn't list any pricing, and the FAQs imply free access. This could change in the future, but for now, you can use it without any cost.

- What AI models can I use?

  The site indicates it's built using technology from Meta and Microsoft, which strongly suggests you'll have access to popular open-source models like the Llama family and the smaller, efficient Phi models.

- Why is the initial model download so slow?

  Large Language Models are... well, large! A model file can be several gigabytes in size. The download speed depends entirely on your internet connection. It's a one-time wait for each model, so it's best to do it when you have a stable, fast connection.

My Final Take

So, is Omnibot the future? For a certain type of user, I think it absolutely is. It's not going to replace the big cloud players for the average consumer overnight. But for developers, writers, researchers, and die-hard privacy advocates, it's a massive step in the right direction. It's a tool that respects the user.

Omnibot is a practical, working example of a trend I hope we see more of: local-first software. It puts power and control back where they belong—with the user. It’s a bit more demanding on your hardware, sure, but the trade-off is privacy and autonomy. And in my book, that's a trade worth making any day of the week.

Reference and Sources

- The official tool website: Omnibot.dev

- An overview of the underlying tech: WebLLM Project

- Learn more about the browser API: WebGPU API on MDN