I’ve been in the SEO and digital marketing world for what feels like a lifetime. I've seen trends come and go, from the keyword stuffing dark ages to the rise of semantic search. But nothing, and I mean nothing, has shaken things up like the current AI explosion. Every other week, it seems, we get a new model that promises the world. GPT-4o. Claude 3 Opus. Gemini 1.5 Pro. It’s a full-time job just keeping the names straight.

But here’s the thing that’s been bugging me. All the demos are so... polished. Perfect, even. They show the AI writing a flawless poem or analyzing a pristine chart. My reality? I'm usually trying to get a model to accurately count the number of products in a grainy user-submitted photo or transcribe text from a crumpled receipt. That’s where the marketing hype meets reality. And for those kinds of multimodal tasks, the ones that involve seeing and reasoning, how do we really know which model is best?

It's a question I've been wrestling with for months. Then, I stumbled upon a tool with a rather poetic name: Non finito.

So, What on Earth is Non finito?

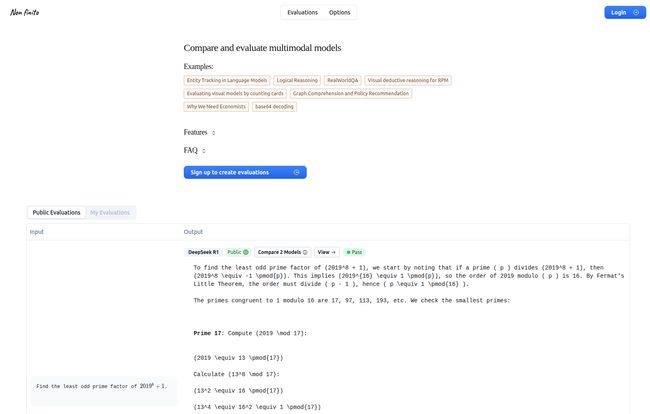

First off, the name. For those who didn’t ace Art History, “non finito” is an Italian term for a work of art that is left unfinished. Think of Michelangelo's Slaves, powerful figures still emerging from raw stone. It's a fitting name, because the platform itself is a work in progress, and its whole purpose is to test AIs on tasks that are often messy and complex: the unfinished business of AI development.

In simple terms, Non finito is a platform for evaluating and comparing multimodal AI models. It’s not another chatbot arena where you just judge conversational flow. No, this is a proving ground. It’s designed specifically to poke and prod at how well these AIs can 'see' and 'understand' the world through images, diagrams, and data. This focus on multimodal capabilities is, frankly, its killer feature. Most evaluation tools are still obsessed with LLMs (Large Language Models) and their text-only skills, which is so 2023. The future is visual, and Non finito gets that.


A Peek Under the Hood: The Experience

My first impression of the Non finito interface was, honestly, relief. It’s clean, developer-centric, and refreshingly free of clutter. It looks like it was built by people who actually do this stuff. The core workflow is straightforward: you set up an evaluation, which looks like it's done via a simple script or SDK. You feed it a prompt, often including an image, and define what a 'correct' answer looks like.

Then, you run it. Non finito throws your test case at a lineup of today’s heavy-hitters—GPT-4o, Claude 3 Opus, Gemini 1.5 Pro, you name it—and presents the results side-by-side. Each model's response is graded with a simple, satisfyingly clear green check for a pass or a red 'x' for a fail. No ambiguity, just a clear verdict.
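To make that workflow concrete, here is a minimal sketch of what such an evaluation could look like in Python. To be clear, this is not Non finito's SDK (I haven't seen its actual API). `EvalCase`, `run_eval`, `render`, and the two stub models are names I made up for illustration, and the stubs return canned answers instead of calling a real model.

```python
import re
from dataclasses import dataclass
from typing import Callable, Dict

# A "model" here is any function mapping (prompt, image_path) to a text answer.
# Real API clients would be wrapped to fit this hypothetical signature.
ModelFn = Callable[[str, str], str]


@dataclass
class EvalCase:
    prompt: str
    image_path: str                    # hypothetical path to the test image
    is_correct: Callable[[str], bool]  # what a 'correct' answer looks like


def run_eval(case: EvalCase, models: Dict[str, ModelFn]) -> Dict[str, bool]:
    """Send the same case to every model and grade each answer pass/fail."""
    return {
        name: case.is_correct(model(case.prompt, case.image_path))
        for name, model in models.items()
    }


def render(results: Dict[str, bool]) -> str:
    """Format the verdicts as a small side-by-side table."""
    return "\n".join(
        f"{name:<12} {'PASS' if ok else 'FAIL'}" for name, ok in results.items()
    )


# Stub models with canned answers, standing in for real vision models.
def model_a(prompt: str, image_path: str) -> str:
    return "There are 7 products."


def model_b(prompt: str, image_path: str) -> str:
    return "I count 6 items."


def exactly_seven(answer: str) -> bool:
    # Pass only if the single number mentioned in the answer is 7.
    return re.findall(r"\d+", answer) == ["7"]


case = EvalCase(
    prompt="How many products are in this photo?",
    image_path="shelf.jpg",
    is_correct=exactly_seven,
)
results = run_eval(case, {"model_a": model_a, "model_b": model_b})
print(render(results))
```

The point of the sketch is the shape: one test case, one grading rule, many models, and a verdict per model. Everything else (how images are uploaded, how the real models are called) is left out on purpose.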

The Multimodal Obstacle Course

This is where it gets really interesting. The public evaluations on their site give a taste of the kinds of gauntlets these AIs are being put through. We're talking about tasks like:

- Object Counting: "Count the faces and price factor in 2018" on a dense, chart-filled image. A surprisingly tricky task that many models still fumble.

- Mathematical Reasoning: Solving an algebraic equation presented in a blurry photograph of a whiteboard.

- Spatial Awareness: Looking at a seating chart diagram and figuring out who sits next to whom.

- Real-World OCR: Reading the text on a weathered, angled street sign.

These aren’t theoretical benchmarks. They're practical, sometimes frustratingly specific problems that developers and businesses face every single day. Seeing a powerful model like Claude 3 Opus fail to count objects correctly while Gemini 1.5 Pro nails it (or vice versa) is incredibly insightful. It's the kind of direct comparison that helps you make real-world decisions about which API to call for a specific feature in your app.
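Once you have a pile of these pass/fail verdicts, choosing an API per feature becomes a small tallying exercise. The sketch below assumes the results are available as simple (category, model, passed) records. That record format, the placeholder model names, and the values are all invented for illustration; they are not real results from Non finito or from any actual model.

```python
from collections import defaultdict

# Illustrative records only: (task_category, model_name, passed).
records = [
    ("object_counting", "model_a", False),
    ("object_counting", "model_b", True),
    ("object_counting", "model_a", True),
    ("object_counting", "model_b", True),
    ("ocr", "model_a", True),
    ("ocr", "model_b", False),
]


def pass_rates(rows):
    """Return {(category, model): fraction of runs that passed}."""
    totals = defaultdict(lambda: [0, 0])  # (category, model) -> [passes, runs]
    for category, model, passed in rows:
        totals[(category, model)][0] += int(passed)
        totals[(category, model)][1] += 1
    return {key: passes / runs for key, (passes, runs) in totals.items()}


def best_model_per_category(rates):
    """Pick the model with the highest pass rate in each category."""
    best = {}
    for (category, model), rate in rates.items():
        if category not in best or rate > best[category][1]:
            best[category] = (model, rate)
    return {category: model for category, (model, _) in best.items()}


rates = pass_rates(records)
winners = best_model_per_category(rates)
print(winners)
```

With only a handful of runs per category, a pass rate is a weak signal, so in practice you would want many more test cases before routing production traffic on the result.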

Why Public, Sharable Evaluations Are a Game Changer

One of the most powerful features here is the emphasis on sharing. The "Public Evaluations" section is more than just a gallery of examples; it's the beginning of a community-driven, transparent benchmarking movement. For too long, we've relied on academic benchmarks that don't always reflect real-world performance, or we've taken the AI labs' own marketing materials at face value. I'm sorry, but I'm not gonna base my multi-thousand-dollar API bill on a slick demo video.

Non finito allows anyone to create a test and share it. It’s like a GitHub for AI model testing. This creates a collective intelligence. Found a specific type of image that trips up GPT-4o's vision? You can create an evaluation, share it, and see if others can replicate your findings or if a new model update fixes the issue. This is how we move from hype to reproducible science.

The Good, The Bad, and The Unfinished

Like any tool, especially one that wears its 'in-development' status on its sleeve, Non finito has its highs and lows. Let's break it down.

The Strong Points

The biggest pro is its unapologetic focus on multimodal evaluation. It’s tackling the hardest part of the problem head-on. The side-by-side comparison is pure gold for anyone who’s ever had 15 browser tabs open trying to manually test the same prompt. And the public sharing feature fosters a sense of community and transparency that the AI space desperately needs. For a dev tool, it also looks surprisingly easy to pick up and start running tests.

Some Areas for Growth

The platform's name is a clue: it's not finished. And that's okay! I even hit a "Something went wrong" page while exploring, which I found oddly charming. It's a reminder this is built by humans, for humans. A more concrete point of feedback is that while the pass/fail system is clear, I'm curious about more nuanced evaluation metrics. How is correctness programmatically defined in the backend? For some tasks, a more granular score might be useful. But for now, the directness is a feature, not a bug.
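I don't know how Non finito grades answers internally, so treat the following as one possible shape for a more granular score, not a description of its backend. The function names, the relative-error formula for counting, and the use of Python's `difflib` for text similarity are my own assumptions. A strict pass/fail verdict then falls out as a threshold on the score.

```python
import re
from difflib import SequenceMatcher


def count_score(answer: str, expected: int) -> float:
    """Score a counting answer from 0.0 to 1.0 by relative error."""
    numbers = re.findall(r"\d+", answer)
    if not numbers:
        return 0.0  # no number in the answer at all
    predicted = int(numbers[0])  # assumption: the first number is the count
    return max(0.0, 1.0 - abs(predicted - expected) / max(expected, 1))


def _normalize(text: str) -> str:
    # Ignore case and collapse runs of whitespace before comparing.
    return " ".join(text.lower().split())


def text_score(answer: str, expected: str) -> float:
    """Score a transcription from 0.0 to 1.0 by string similarity."""
    return SequenceMatcher(None, _normalize(answer), _normalize(expected)).ratio()


def passed(score: float, threshold: float = 1.0) -> bool:
    """Collapse a granular score back into a pass/fail verdict."""
    return score >= threshold


near_miss = count_score("I see 9 items", expected=10)
sign_text = text_score("MAIN   st", expected="Main St")
print(near_miss, passed(near_miss), passed(near_miss, threshold=0.85))
print(sign_text, passed(sign_text))
```

A score like this would separate a model that says 9 when the answer is 10 from one that says 40, which a plain red 'x' cannot do. The trade-off is that you now have to pick a threshold, and that choice is itself a judgment call.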

What's the Price Tag?

Here’s the million-dollar question. As of my writing this, there's no pricing information available on the Non finito site. This is typical for a platform in its early stages. My educated guess? It’s likely free to use while it’s in this beta or development phase. The goal right now is probably to build a strong user base and gather feedback. I wouldn't be surprised to see a tiered or freemium model emerge down the line, perhaps with private evaluations or team features for paying customers. For now, it seems to be an open invitation to come in and start testing.

So, Who Is This For?

I see a few key groups getting a ton of value from Non finito:

- AI/ML Engineers: The primary audience. People building applications who need to know if Model A is better than Model B for their specific visual task.

- AI Researchers: A fantastic tool for quickly setting up experiments and gathering data on model capabilities for papers and studies.

- Product Managers & Decision-Makers: You don't have to be a coder to understand the results. This gives you concrete data to justify choosing one AI provider over another.

- The Deeply Curious: SEOs, marketers, and tech enthusiasts like me who want to look behind the curtain and see what these AIs are actually capable of.

Conclusion: An Unfinished Symphony Worth Hearing

In a world of overly polished AI demos and grandiose claims, a tool that calls itself "Unfinished" feels refreshingly honest. Non finito isn’t trying to be everything to everyone. It’s a focused, practical platform for the critical task of multimodal model evaluation. It provides clear, direct comparisons that can help developers build better products and help the community understand the true strengths and weaknesses of the models shaping our future.

It may be a work in progress, but so is the entire field of artificial intelligence. Sometimes, the most exciting things are the ones that are still being created. Non finito is definitely a project to watch, and for anyone serious about working with multimodal AI, it’s a tool worth trying out today.

Frequently Asked Questions (FAQ)

- What is Non finito?

- Non finito is a specialized platform for evaluating and comparing the performance of multimodal artificial intelligence models, such as GPT-4o, Claude 3, and Gemini, on tasks that involve both text and images.

- Is Non finito free to use?

- Currently, there is no public pricing information, suggesting it is likely free to use during its development phase. This may change in the future as more features are added.

- What kind of AI models can I test on Non finito?

- The platform focuses on multimodal models. The examples show popular models like OpenAI's GPT-4o, Anthropic's Claude 3 family (Opus, Sonnet), and Google's Gemini 1.5 Pro being tested.

- How does Non finito differ from other AI benchmark platforms?

- While many benchmarks focus on text-only (LLM) performance or academic metrics, Non finito emphasizes practical, real-world multimodal tasks and provides a simple, direct side-by-side comparison with a clear pass/fail outcome.

- Can I share my evaluation results publicly?

- Yes, a key feature of Non finito is the ability to create and share your evaluations publicly, contributing to a community-driven knowledge base of model performance.

- What does the name "Non finito" mean?

- "Non finito" is an Italian term used in art to describe a work that has been left intentionally unfinished. It reflects the platform's nature as a work-in-progress and its focus on testing the complex, "unfinished" challenges of AI.

Reference and Sources

- Non finito Official Website: https://nonfinito.ai/

- OpenAI's GPT-4o Introduction: https://openai.com/index/hello-gpt-4o/

- Anthropic's Claude 3 Family: https://www.anthropic.com/news/claude-3-family