For the past few years, I’ve seen a parade of AI video tools march across my screen. Each one promises the world (turn any image into a Hollywood-level scene!) and most of them deliver something… well, something more like a flickering, wobbly mess. You know what I'm talking about. The character's face melts and reforms every three frames, the background warps like it’s having a bad trip, and the whole thing just screams, “I was made by a robot.”

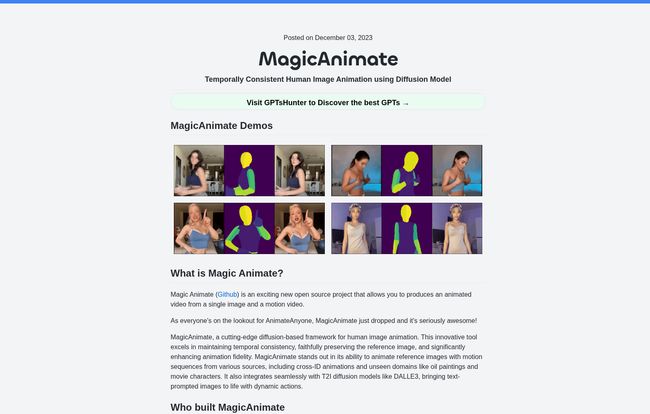

It's been a bit of a running joke in the community. So when I first heard about Magic Animate, I’ll admit, I was skeptical. Another one? But then I saw the demos. And then I saw who was behind it—a team from TikTok's parent company, ByteDance, and the National University of Singapore. Okay, now you have my attention.

This isn't just another flash in the pan. Magic Animate feels different. It’s an open-source project that takes a single still image and a motion video and mashes them together to create a surprisingly coherent animation. And its main claim to fame, the one thing that makes it stand out from the crowd, is temporal consistency. A fancy term, I know, but it just means the video it creates doesn’t flicker or morph from one frame to the next. The character actually looks like the same person from start to finish. A low bar, you’d think, but in the world of AI video, it’s a massive leap.

So What is This Magic Animate Thing, Really?

Think of it like a high-tech digital puppeteer. You give it a puppet (your reference image—say, a photo of an astronaut) and you show it a dance (your motion video—a clip of someone doing the Macarena). Magic Animate then makes the astronaut do the Macarena. It intelligently maps the motion onto the still image, preserving the original character’s appearance while applying the new movement.


Under the hood, it’s a “diffusion-based framework,” which is the same family of tech that powers image generators like DALL-E 3 and Midjourney. This allows it to be incredibly flexible. It’s not just for photorealistic people. You can feed it an oil painting, a cartoon character, or even a sketch, and it will do its level best to bring it to life. That’s where the real fun begins, in my opinion.

What Makes It a Potential Game Changer

I don't throw around terms like “game changer” lightly. I've been in the SEO and digital content game long enough to see trends come and go. But a few things about Magic Animate have me genuinely excited.

Its Uncanny Consistency

This is the big one. As I mentioned, the temporal consistency is its superpower. In a space where competitors often produce jittery results, Magic Animate’s output is remarkably stable. The identity of the subject is faithfully preserved. If you animate a photo of your friend, it will still look like your friend at the end of the video, not their melted-candle cousin. For anyone trying to create usable content, this is everything. It's the difference between a cool tech demo and a practical tool.
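If you want a rough feel for what "temporal consistency" looks like as a number, here is a minimal sketch in Python. It is my own toy proxy, not the metric the Magic Animate authors use: it averages how much each pixel changes between consecutive frames, so a clip that flickers scores higher than a stable one. The `flicker_score` name and the tiny 2x2 grayscale frames are made up purely for illustration.

```python
from typing import Sequence

# A grayscale frame: rows of pixel values in the range [0, 1].
Frame = Sequence[Sequence[float]]


def flicker_score(frames: Sequence[Frame]) -> float:
    """Mean absolute pixel change between consecutive frames (0.0 = no change)."""
    total = 0.0
    count = 0
    # Walk over each pair of neighbouring frames and compare pixel by pixel.
    for prev, curr in zip(frames, frames[1:]):
        for row_prev, row_curr in zip(prev, curr):
            for a, b in zip(row_prev, row_curr):
                total += abs(a - b)
                count += 1
    return total / count if count else 0.0


# Toy clips, three frames each.
stable = [[[0.5, 0.5], [0.5, 0.5]]] * 3
jittery = [
    [[0.0, 1.0], [1.0, 0.0]],
    [[1.0, 0.0], [0.0, 1.0]],
    [[0.0, 1.0], [1.0, 0.0]],
]

print(flicker_score(stable))   # 0.0
print(flicker_score(jittery))  # 1.0
```

Keep in mind that real motion changes pixels too, so a score like this only makes sense when you compare two renders of the same motion clip, not as an absolute quality grade.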

The Power of Open Source

Magic Animate isn’t some walled-off, expensive SaaS platform. It's open-source. This means the code is out there for anyone to see, use, and modify. For developers and creators, this is huge. It means you can integrate it into your own projects, fine-tune it for specific tasks, and you don't have to pay a monthly subscription for the privilege. It fosters a community of innovation that closed-source tools just can't match.

Incredible Versatility

This tool is a true chameleon. Because it can integrate with other popular models like Stable Diffusion, the possibilities are wild. You can generate an image from a text prompt using a model such as Stable Diffusion or DALL-E 3 and then animate it with Magic Animate. Want to see a cyberpunk Mona Lisa breakdancing? You can actually do that now. It works on photos, paintings, and character designs from domains the model has never even seen before, which is seriously impressive.

Let’s Be Real, It’s Not Perfect

Alright, time for a dose of reality. As promising as Magic Animate is, it’s still a new technology and has its quirks. I’m a big believer in transparency, so let’s talk about the areas where it stumbles.

- The Dreaded AI Hands and Faces: Yup, the classic curse of AI art strikes again. While the overall consistency is great, there can still be some weird distortion in the finer details, especially the hands and face. It's a common problem for diffusion models in general, so it's something to be aware of.

- Weird Style Shifts: I noticed that in its default mode, it sometimes takes an anime-style character and makes it look a bit more... realistic. This style drift can be a bit jarring if you’re trying to maintain a specific aesthetic.

- Anime Proportions Get Funky: On that note, when working with anime characters, which often have stylized body proportions, the model can sometimes struggle. It might try to apply realistic human skeletal motion, resulting in some slightly wonky-looking limbs.

These aren't deal-breakers, especially for an open-source project. They're just the current limitations of the tech, and I fully expect them to improve as the community tinkers with it.

How You Can Try Magic Animate Today

This is not a slick, one-click app for your phone... yet. It's a tool for tinkerers, developers, and the genuinely curious. But that doesn’t mean it’s inaccessible.

The easiest way to dip your toes in is through the online demos. There are free demos available on platforms like Hugging Face. You can also run it using cloud services like Replicate or a Google Colab notebook, which provide the necessary computing power without you needing a monster PC. For the more technically adventurous, you can clone the entire project from GitHub and run it on your own machine, giving you full control.
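For the Replicate route, the call from Python might look roughly like the sketch below. Treat it as a hedged outline rather than the official recipe: `replicate.run` is the Replicate Python client's entry point, but `MODEL_REF` is a placeholder you would replace with the identifier shown on the model's Replicate page, and the input names `image` and `video`, the accepted file extensions, and the helper names `check_inputs` and `animate` are all my assumptions. Check the model's input schema before relying on any of them.

```python
from pathlib import Path
from typing import Dict

# Placeholder, not a real identifier: copy the "owner/model:version"
# string from the Magic Animate page on Replicate.
MODEL_REF = "OWNER/MODEL:VERSION"

# Assumed file types, for illustration only.
IMAGE_TYPES = {".png", ".jpg", ".jpeg"}
VIDEO_TYPES = {".mp4"}


def check_inputs(image_path: str, motion_path: str) -> Dict[str, Path]:
    """Pair the two things Magic Animate needs: a still image and a motion video."""
    image, motion = Path(image_path), Path(motion_path)
    if image.suffix.lower() not in IMAGE_TYPES:
        raise ValueError(f"expected a still image, got {image.name}")
    if motion.suffix.lower() not in VIDEO_TYPES:
        raise ValueError(f"expected a motion video, got {motion.name}")
    # "image" and "video" are assumed input names, not confirmed ones.
    return {"image": image, "video": motion}


def animate(image_path: str, motion_path: str):
    """Send both files to the hosted model and return whatever it outputs."""
    import replicate  # third-party client: pip install replicate

    paths = check_inputs(image_path, motion_path)
    with open(paths["image"], "rb") as img, open(paths["video"], "rb") as vid:
        return replicate.run(MODEL_REF, input={"image": img, "video": vid})
```

With a `REPLICATE_API_TOKEN` set in your environment and a real model reference filled in, calling `animate("astronaut.png", "macarena.mp4")` would be the whole workflow. The validation step is split out so you can catch a swapped or mistyped file before paying for any compute.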

What About the Price Tag?

Here’s the best part. Because it's an open-source project, Magic Animate itself is free. Zero, zip, nada. Your only potential costs come from the computing power needed to run it. If you use a service like Replicate, you'll pay for the processing time, but it's a far cry from a hefty monthly software subscription.
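To put that in perspective, here is a quick back-of-the-envelope sketch in Python. It assumes the provider bills in proportion to processing time. The `estimate_cost` helper and every number in it are hypothetical, so plug in the actual rate from your provider's pricing page.

```python
def estimate_cost(run_seconds: float, price_per_second: float, runs: int = 1) -> float:
    """Estimated spend: seconds per run x price per second x number of runs."""
    if run_seconds < 0 or price_per_second < 0 or runs < 0:
        raise ValueError("inputs must be non-negative")
    # Round to four decimals to hide floating-point noise.
    return round(run_seconds * price_per_second * runs, 4)


# Hypothetical example: 10 animations, 120 s each, at 0.001 per second.
print(estimate_cost(run_seconds=120, price_per_second=0.001, runs=10))  # 1.2
```

The takeaway is that your bill scales with how many clips you render and how long each one takes, not with a flat monthly fee.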

My Final Thoughts on Magic Animate

So, is Magic Animate the holy grail of AI video? No, not yet. But it’s one of the most promising and practical steps forward I’ve seen in a long time. It takes direct aim at the single biggest problem plaguing AI animation: consistency. By making the code open-source, the creators have invited the entire world to help them build the future of this technology.

For content creators, animators, and developers, this is a tool to watch. It’s a peek at a future where bringing your wildest visual ideas to life is no longer a matter of budget or technical skill, but purely of imagination. It has its flaws, for sure, but its potential is undeniable. I, for one, am incredibly excited to see what people create with it.

Frequently Asked Questions

- What is Magic Animate used for?

- It's primarily used to animate a static human image using a motion sequence from a separate video. This allows you to make a character in a photo or painting appear to dance, run, or perform other actions.

- Is Magic Animate free to use?

- Yes, the project itself is open-source and free. You might incur costs if you use third-party cloud computing services like Replicate to run the model, but the software itself costs nothing.

- Can I use Magic Animate without knowing how to code?

- Absolutely. The easiest way for non-coders is to use the public demos available on platforms like Hugging Face. These web-based interfaces let you upload an image and a motion video to test it out.

- How is Magic Animate different from other AI video tools?

- Its key differentiator is temporal consistency. It does a much better job of keeping the character's appearance and identity stable throughout the video, avoiding the flickering and morphing common in other tools.

- Who created Magic Animate?

- It was developed through a research collaboration between ByteDance (the parent company of TikTok) and the National University of Singapore (NUS).

- Does Magic Animate have an API?

- Yes, you can access an API for Magic Animate through platforms like Replicate. This allows developers to integrate its animation capabilities into their own applications and workflows.

References and Sources

- Magic Animate on GitHub

- Magic Animate Demo on Hugging Face

- Magic Animate on Replicate

- Official Magic Animate Project Page