As someone who's been neck-deep in SEO and digital trends for years, I've seen the AI wave build from a ripple to a full-blown tsunami. We're all using it, from drafting emails to analyzing massive datasets. But there’s this nagging feeling in the back of my mind, and maybe in yours too: where is all our data going?

Every prompt we type into a cloud-based LLM, every document we upload for analysis... it's all being processed on someone else's servers. For most of us, that’s a fine trade-off for the convenience. But what if you're a lawyer handling sensitive case files? Or a financial analyst looking at confidential market data? Suddenly, the cloud feels less like a fluffy white productivity-booster and more like a... well, a storm cloud of risk.

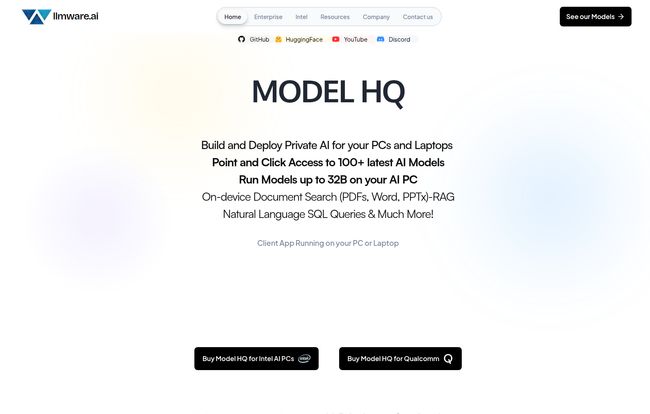

This is the exact problem that a fascinating company called LLMWare AI is trying to solve. They're championing a different path. A more private one. One that runs right on your own PC or laptop. Intrigued? I certainly was.

So, What Exactly is LLMWare AI? (And Why Should You Care?)

At its core, LLMWare is an end-to-end platform for building and deploying AI models that live on your own hardware. No constant phoning home to a massive data center. No sending your proprietary information across the internet. It's designed specifically for what they call "complex enterprises"—think finance, legal, and any other industry where compliance and data security aren't just best practices, they're the law.

Think of it this way: using a typical cloud AI is like hiring a world-famous chef who works in a bustling, public kitchen. You get amazing results, but you have to bring your secret family recipe out in the open. LLMWare is like having that same world-class chef come to your house and cook in your kitchen, using your ingredients, behind locked doors. The secret recipe never leaves the building.

The Big Deal About "Private AI" on Your Own Machine

The term "Private AI" gets thrown around a lot, but LLMWare seems to be taking it literally. By enabling models to run locally, they're tapping into a huge, and frankly, justified, anxiety in the corporate world.

Data Privacy That Isn't Just a Buzzword

This is the big one. When your AI workflows are running on-device, your data stays on-device. It’s that simple. For regulated industries, this is a game-changer. You're not just hoping you're compliant with GDPR or HIPAA; you're building a workflow that is inherently more secure. There's no risk of a data breach on a third-party server if the data never gets there in the first place. It fundamentally changes the security posture from 'trust us' to 'verify it yourself'.

Cutting the Cord (and the Cloud Bill)

Let's be real for a second: API calls to powerful cloud models can get expensive, fast. I've seen the monthly bills, and they can make your eyes water. By performing inference on local devices, you're essentially pre-paying for your compute with the cost of your hardware. This makes the cost of AI-driven tasks more predictable and, in many high-usage scenarios, significantly cheaper over the long run. It's a shift from a variable operational expense to a fixed capital one, which is music to any CFO's ears.
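To make the opex-versus-capex argument concrete, here is a rough back-of-the-envelope sketch. The function name and every dollar figure are my own hypothetical placeholders, not LLMWare pricing or real cloud rates; plug in your own numbers.

```python
import math


def break_even_months(hardware_cost: float,
                      monthly_cloud_cost: float,
                      monthly_local_cost: float = 0.0) -> float:
    """Months until a one-time hardware purchase is paid back by avoided cloud spend.

    All inputs are assumptions supplied by the caller. monthly_local_cost
    covers running costs of the local machine (power, maintenance).
    """
    monthly_savings = monthly_cloud_cost - monthly_local_cost
    if monthly_savings <= 0:
        # Local inference never pays for itself if it costs as much to run.
        return math.inf
    return hardware_cost / monthly_savings


# Hypothetical figures for illustration only.
months = break_even_months(hardware_cost=2400.0,
                           monthly_cloud_cost=300.0,
                           monthly_local_cost=20.0)
print(f"Break-even after roughly {months:.1f} months")
```

The point of the sketch is the shape of the trade-off: the heavier your monthly usage, the faster the hardware pays for itself, while light usage may never reach break-even.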

Key Features That Caught My Eye

So how do they actually pull this off? It’s not just vaporware; they have some genuinely interesting tech under the hood.

The Magic of On-Device RAG

You've probably heard of RAG, or Retrieval-Augmented Generation. It’s the tech that lets you “chat” with your documents. You feed an AI a library of your own information, and it uses that info to answer your questions. LLMWare allows this whole process to happen locally. You can point it at a folder of legal contracts, financial reports, or research papers on your hard drive, and start asking it complex questions in natural language—all without an internet connection. That's powerful stuff.
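If the idea still feels abstract, here is a minimal sketch of the retrieval half of RAG that runs entirely on your own machine. It is not LLMWare's API: the function names, the sample passages, and the simple word-overlap scoring are all my own assumptions, chosen so the example needs nothing beyond the Python standard library. A real system would use embeddings and would pass the final prompt to a locally running model.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def retrieve(question: str, passages: list[str], top_k: int = 2) -> list[str]:
    """Return the top_k passages most similar to the question."""
    q = Counter(tokenize(question))
    scored = [(cosine(q, Counter(tokenize(p))), p) for p in passages]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [p for score, p in scored[:top_k] if score > 0]


def build_prompt(question: str, passages: list[str]) -> str:
    """Assemble the prompt a local model would receive (the 'augmented' step)."""
    context = "\n".join(f"- {p}" for p in passages)
    return ("Answer using only the context below.\n\n"
            f"Context:\n{context}\n\nQuestion: {question}")


# Hypothetical passages standing in for files on your hard drive.
PASSAGES = [
    "The lease term is five years, starting on 1 March.",
    "Either party may terminate the lease with ninety days written notice.",
    "The quarterly report shows revenue growth in the retail segment.",
]

question = "How much notice is needed to terminate the lease?"
top = retrieve(question, PASSAGES, top_k=1)
prompt = build_prompt(question, top)
print(prompt)
```

Nothing in this flow touches the network: the documents, the scoring, and the assembled prompt all stay in local memory, which is the property that makes on-device RAG attractive.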

Built for the New Wave of AI PCs

This is where LLMWare is really skating to where the puck is going. With Intel, Qualcomm, and others launching new chips with dedicated Neural Processing Units (NPUs), we're at the dawn of the "AI PC" era. LLMWare is already building demos and optimizing its platform for this new hardware. This means their tools are designed to be incredibly efficient on the laptops and desktops that will become standard in the next few years. It's a smart, forward-thinking move.

Compliance and Audit Trails? They've Got You Covered.

This part is admittedly less sexy, but for their target audience, it's absolutely critical. The platform includes tools for AI explainability and safety, along with the ability to generate compliance-ready audit reports. When a regulator comes knocking and asks, "How did your AI arrive at this conclusion?" you can actually provide an answer. In high-stakes fields, that's not a nice-to-have; it's a necessity.
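I haven't seen what LLMWare's audit reports actually look like, so treat the following as a generic illustration of the idea rather than their format. The record layout, field names, and the `local-model-placeholder` model name are all hypothetical; the sketch simply shows how an answer can be tied back to the exact question and source passages that produced it.

```python
import hashlib
import json
from datetime import datetime, timezone


def audit_record(question: str, source_passages: list[str],
                 answer: str, model_name: str) -> dict:
    """Build one audit entry linking an answer to its inputs (hypothetical layout)."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model": model_name,
        "question": question,
        # Hashing each source lets a reviewer later confirm the text was not altered.
        "sources": [
            {"sha256": hashlib.sha256(p.encode("utf-8")).hexdigest(),
             "excerpt": p[:80]}
            for p in source_passages
        ],
        "answer": answer,
    }


record = audit_record(
    question="How much notice is needed to terminate the lease?",
    source_passages=["Either party may terminate the lease with ninety days written notice."],
    answer="Ninety days written notice.",
    model_name="local-model-placeholder",
)
line = json.dumps(record)  # one JSON line per answer, ready to append to a log file
print(line)
```

Because everything is logged locally alongside the model run, the evidence trail never has to leave the machine either.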

Let's Get Real: The Upsides and the Hurdles

No tool is perfect, and a balanced look is always best. I appreciate that LLMWare isn’t trying to be a one-size-fits-all solution. They have a specific mission, and with that comes a specific set of strengths and weaknesses.

The Good Stuff

The advantages are pretty clear. The biggest is data privacy: because prompts and documents are processed on your own machine, they never have to leave it. If your work involves sensitive information, this is probably the single biggest reason to look into their platform. The potential for cost-effective inferencing on local devices is another huge plus, moving AI from an unpredictable operational cost to a stable one. And for IT departments, the promise of simplified AI deployment without complex cloud configurations is a welcome relief. It's all about control, security, and predictability.

The Not-So-Good Stuff

Of course, there are trade-offs. Running things locally means you're responsible for the hardware. The initial setup requires downloading models and getting everything configured, which isn't as simple as just logging into a website. To get the best performance, you'll likely need modern AI PC hardware, so older machines might struggle. And because you’re not tapping into a city-sized data center, you're probably limited to smaller, more specialized models rather than the behemoth, do-everything models in the cloud. It's a classic power-vs-portability debate, just with AI.

Who is LLMWare AI Actually For?

After digging through their site and offerings, it's pretty clear this isn't for the hobbyist looking to create funny poems. LLMWare is a serious tool for serious business. It is aimed squarely at organizations like these:

- Law Firm handling confidential client information.

- Financial Institution analyzing proprietary market data.

- Healthcare Organization bound by strict patient privacy laws.

- Government Agency dealing with classified or sensitive documents.

What's the Damage? A Look at LLMWare's Pricing

And now for the question on everyone's mind: how much does it cost? Well, if you go looking for a pricing page on their website, you'll be met with a friendly 404 error. Don't worry, your browser isn't broken.

This is actually very common for enterprise-grade B2B software. Pricing is rarely a simple three-tiered SaaS model. It almost certainly depends on the scale of your deployment, the level of support required, and the specific models and tools you need. The bottom line is you'll have to contact their sales team for a custom quote. It’s not for the faint of heart, and it signals that they are focused on substantial enterprise contracts, not individual licenses.

My Final Take: Is LLMWare the Answer?

The push for everything to be in the cloud has been relentless for over a decade, but I think we're starting to see a gentle, intelligent swing back in the other direction. A hybrid approach, where sensitive workloads are brought back in-house, is starting to make a lot of sense. LLMWare is not just part of this trend; it's a pioneer of it.

Is it the right solution for everyone? Absolutely not. But for the organizations it targets, it's not just an interesting piece of tech—it's a potential solution to one of the biggest headaches of the modern digital age: balancing innovation with security. It’s a compelling proposition, and one I’ll be watching very closely.

Frequently Asked Questions about LLMWare AI

1. What is LLMWare AI in simple terms?

   LLMWare AI is a platform that lets businesses run powerful AI models directly on their own computers (PCs and laptops) instead of in the cloud. This is ideal for industries like finance and law that need maximum data privacy and security.

2. Do I need an internet connection to use LLMWare?

   You'll need an internet connection for the initial setup and to download the AI models. However, once that's done, many of its core functions, like searching your own documents with AI (RAG), can be performed completely offline.

3. What is on-device RAG?

   RAG (Retrieval-Augmented Generation) is a technique that lets you ask questions about your own documents. On-device RAG means the entire process (analyzing your documents and generating answers) happens locally on your machine, so your sensitive files never leave your control.

4. Will LLMWare work on my old computer?

   While it might work, it's optimized for modern computers, especially new "AI PCs" that have specialized hardware (NPUs) to run AI tasks efficiently. For the best performance, up-to-date hardware is recommended.

5. How much does LLMWare cost?

   LLMWare does not publish its pricing publicly. It's an enterprise solution, so you will need to contact their sales department directly to get a quote based on your organization's specific needs.

6. Is LLMWare a replacement for cloud AI like ChatGPT?

   Not exactly. It's more of a specialized alternative for specific use cases. While cloud AI is great for general-purpose tasks, LLMWare is designed for scenarios where data privacy, security, and compliance are the absolute top priorities.

Reference and Sources

- LLMWare AI Official Website: https://llmware.ai/