The AI space is getting a little... predictable. We’ve got the titans—OpenAI, Google, Anthropic—building ever-larger models behind their corporate walls. It’s powerful, it’s impressive, but it’s also a classic walled garden. You play by their rules, you pay their prices, and you cross your fingers they’re being good stewards of your data. It feels a bit like the early days of the internet, just before the open-source and decentralized movements really kicked in.

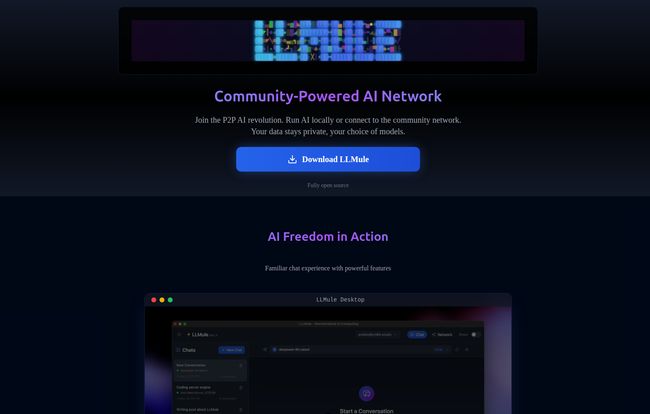

Every now and then, though, something pops up on my radar that feels different. Something that smells less like a boardroom and more like a garage. A few days ago, I stumbled upon a project called LLMule, and it definitely has that rebellious, garage-brewed feel. Its manifesto, plastered right on the homepage, says it all:

AI should be accessible to everyone, not controlled.

Now, I’ve been in the SEO and tech game long enough to be skeptical of grand manifestos. But I'm also a sucker for an underdog story. So, is LLMule the real deal? Is it the start of a peer-to-peer AI revolution, or just another cool idea that's destined to fade away? I had to find out.

So, What Exactly is LLMule?

Think of it like this: remember Napster or BitTorrent? How they turned every user's computer into a tiny piece of a massive, decentralized file-sharing network? LLMule is aiming for something similar, but for artificial intelligence. It's not just another app to chat with an AI; it’s an entire decentralized AI ecosystem.

At its core, LLMule lets you do two things. First, you can download and run AI models directly on your own machine, completely offline. No data sent to a server in California. Your prompts, your documents, your weird late-night conversations with a language model—it all stays with you. Second, and this is where it gets interesting, you can connect to a peer-to-peer (P2P) network of other LLMule users. This network lets you discover and download models shared by the community and, crucially, share your own computer's processing power (your GPU, mostly) to help others run their tasks.

The Big Promise of AI Freedom and Privacy

The biggest headline feature here is privacy. Full stop. In an age where we’re all becoming more conscious of our digital footprint, the idea of a “Privacy First” AI is incredibly appealing. I’ve had clients in sensitive fields like law and healthcare who are desperate to use AI tools but can’t risk sending confidential information to a third-party API. LLMule’s local-first approach is a direct answer to that fear.

It’s a fundamental shift in ownership. Instead of renting access to an AI, you’re bringing the AI into your own digital home. It's yours to run as you see fit. This concept of data sovereignty is more than just a buzzword; it's about control. And in the AI gold rush, control is something that feels increasingly rare.


How the LLMule Network Actually Functions

The process, as they lay it out, seems pretty straightforward. It’s a four-step cycle that powers the whole ecosystem. You start by Running Locally, which is the baseline for privacy. From there, you can choose to Share Models you have with the network, which is the community-building part. Then you can Discover Models from other users, which is how the library grows organically. All of this is wrapped in a blanket of Complete Privacy, since the core processing happens on individual user machines. It’s a clever, self-reinforcing loop. If it works, that is.

Getting Your Hands Dirty with LLMule

One of the smartest things I see here is that LLMule isn't trying to reinvent the wheel for local AI enthusiasts. It’s designed to work with the tools many of us are already using. The site explicitly mentions compatibility with Ollama, LM Studio, vLLM, and EXL2. This isn’t a new standard meant to replace everything else; it’s a layer that sits on top, connecting individual users into a cohesive network. That’s a huge plus. It means the barrier to entry for someone already tinkering with local models is practically zero. You download the app for your OS—they’ve got versions for Windows, macOS and Linux—and it should theoretically hook into your existing setup.

This interoperability is key. It shows they understand their audience: people who like to tinker, customize, and not be locked into one company's way of doing things.
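LLMule is pitched as a layer on top of tools like Ollama, so the base layer needs to work first. Before adding a network, it's worth confirming that your local setup really answers from your own machine. Ollama exposes a small HTTP API on localhost (port 11434 by default), and the sketch below sends one prompt to it using only Python's standard library. This is not LLMule code. It assumes Ollama is running and that you've already pulled a model; the model name you pass in is up to you.

```python
import json
import urllib.request

# Ollama's default local endpoint; the request never leaves your machine.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(model: str, prompt: str, url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a non-streaming /api/generate request for a local Ollama server."""
    payload = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt to the local server and return the generated text."""
    request = build_generate_request(model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.loads(response.read().decode("utf-8"))
    # With stream=False, Ollama replies with one JSON object whose "response" field holds the text.
    return body["response"]
```

With the server running, something like `generate("llama3.2", "What is a peer-to-peer network?")` should return the reply as a plain string. The model name there is just a placeholder for whatever you've pulled. LM Studio and vLLM can instead serve an OpenAI-compatible API, so their URL and payload shape differ.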

The Good, The Bad, and The Beta

Alright, let's get down to brass tacks. No platform is perfect, especially not a new, ambitious one. Based on what I've seen and the info available, here's my breakdown.

The Good Stuff

The upsides are pretty clear and compelling. Privacy is king, and your data stays on your hardware. You get access to a potentially vast, people-powered library of AI models, which could lead to discovering some really niche and interesting tools you wouldn't find on mainstream platforms. There’s also the incentive of earning “Community Credits” for sharing your compute power. I'm not entirely sure what these credits do yet, but the idea of a tokenized economy that rewards participation is a classic P2P motivator. It's like a modern version of SETI@home, but for running language models. And, of course, there's the philosophical win of supporting an open-source, decentralized alternative to Big Tech. It just feels good, dunnit?

The Potential Headaches

Now for the reality check. A community-powered network is only as strong as its community. If not enough people share models or compute power, the experience will be slow and the library barren. Performance is also going to be a mixed bag; it’s entirely dependent on your own hardware and network connection, not some hyper-optimized server farm. Some folks might find the setup and management a bit more complex than just opening a webpage and typing. And let's be real, this is new software. It's likely in beta or even alpha. You should expect bugs and instability. When I tried to find a pricing page, for example, I hit a 404. That’s a telltale sign of a project that's still under heavy construction. It’s a feature, not a bug… right?

What About the Cost? A Look at LLMule Pricing

So, how much will this AI revolution set you back? Here's the interesting part: right now, it seems to be free. The download is available for all major operating systems without a price tag. The 404 error on the pricing page suggests that either monetization isn't figured out yet, or they plan to keep the core service free and monetize in other ways later.

The whole “Community Credits” system is the real economy here. It appears to be a closed-loop system where you earn by contributing and spend by using network resources. This is a fantastic, non-financial way to encourage participation. My guess? They'll keep the base software free to grow the network and maybe introduce premium features or enterprise plans down the line. For now, the price of admission is simply your willingness to participate.
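LLMule hasn't documented how Community Credits are actually calculated. What follows is a toy model of the closed loop described above, not the project's implementation. Everything in it is hypothetical: the class names, the sign-up grant, and the one-credit-per-unit-of-work rate. In this model, peers earn credits when they donate compute and spend them when they use someone else's.

```python
class InsufficientCredits(Exception):
    """Raised when a peer tries to spend more credits than it holds."""


class CreditLedger:
    """Toy closed-loop ledger: jobs move credits between peers, so the total is conserved."""

    def __init__(self) -> None:
        self._balances: dict[str, int] = {}

    def join(self, peer: str, grant: int = 10) -> None:
        # Hypothetical sign-up grant so a brand-new peer can request its first job.
        if peer in self._balances:
            raise ValueError(f"{peer} has already joined")
        self._balances[peer] = grant

    def balance(self, peer: str) -> int:
        return self._balances[peer]

    def run_job(self, requester: str, provider: str, work_units: int) -> None:
        """Move work_units credits from the peer using compute to the peer donating it."""
        if work_units <= 0:
            raise ValueError("work_units must be positive")
        if requester == provider:
            raise ValueError("running your own job locally earns nothing")
        if self._balances[requester] < work_units:
            raise InsufficientCredits(
                f"{requester} has {self._balances[requester]} credits, needs {work_units}"
            )
        self._balances[requester] -= work_units
        self._balances[provider] += work_units

    def total(self) -> int:
        return sum(self._balances.values())
```

Take two peers, `alice` and `bob`, who each join with 10 credits. If `alice` runs a 4-unit job on `bob`'s GPU, she ends up with 6 and he ends up with 14. The total stays at 20. What makes this toy "closed-loop" is that `run_job` only moves credits around. The sign-up grant is the only place new credits appear.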

Who is LLMule Actually For?

This is not a tool for my dad who wants to ask an AI for a recipe for shepherd's pie. Not yet, anyway. LLMule is built for a specific tribe. It’s for the AI hobbyists, the tinkerers with a decent gaming PC sitting idle. It’s for the privacy advocates who shudder at the thought of their data being used to train models. It’s for the developers and researchers who want to experiment with a wide variety of models without needing a big budget or getting locked into a single API. If you already know what Ollama is and you get excited about the idea of a distributed, censorship-resistant AI network, then you are the target audience. It's for the pioneers on the AI frontier.

Frequently Asked Questions about LLMule

- Do I need a supercomputer to use LLMule?

- No, but it helps. You can run smaller models on modest hardware. However, to run larger models or earn significant credits by sharing, you'll want a modern computer with a powerful GPU (like an NVIDIA RTX series card).

- Is LLMule secure? What about my data?

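- The core promise is security through local processing. When you run a model locally, your data never leaves your computer. The P2P network is a more complex question: if a task runs on another user's GPU, your prompt is presumably processed on their machine, so the local-only guarantee doesn't automatically carry over. The project says its architecture is designed to prioritize user privacy.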

- Can I really earn money by sharing my computer?

- Right now, you earn “Community Credits,” not cash. What these credits can be used for within the ecosystem isn't fully detailed yet. It's more of an incentive system than a job. Think of it as building reputation or earning usage rights, not getting paid.

- What's the difference between LLMule and just using Ollama?

- Ollama is a fantastic tool for running models locally on your own machine. LLMule is a network that connects all those individual Ollama (and other platform) users together. It adds the P2P discovery, sharing, and distributed compute layers on top of what you already have.

- Is it legal to share and download AI models this way?

- This is a great question and falls into a legal gray area. It depends entirely on the license of each specific model. Some models are fully open-source for any use, while others (like Meta's Llama models) have more restrictive licenses. As a user, the responsibility is on you to respect the license of the models you use and share.
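Here are some rough numbers for the "Do I need a supercomputer?" answer above. A model's weights take about (number of parameters) × (bits per weight) / 8 bytes, so quantization matters as much as raw model size. The helper below is a back-of-envelope sketch of that formula only. It ignores the KV cache, activations, and runtime overhead, so real memory use will be noticeably higher. None of it is specific to LLMule.

```python
def weight_memory_gb(params_billions: float, bits_per_weight: float) -> float:
    """Approximate memory for model weights alone, in decimal gigabytes (1 GB = 1e9 bytes).

    (params_billions * 1e9) parameters * (bits_per_weight / 8) bytes each, divided by 1e9,
    so the 1e9 factors cancel out.
    """
    if params_billions <= 0 or bits_per_weight <= 0:
        raise ValueError("params_billions and bits_per_weight must be positive")
    return params_billions * bits_per_weight / 8


# Compare full 16-bit weights with a typical 4-bit quantization for a few common sizes.
for size in (7, 13, 70):
    print(f"{size}B params: ~{weight_memory_gb(size, 16):.1f} GB at 16-bit, "
          f"~{weight_memory_gb(size, 4):.1f} GB at 4-bit")
```

By this estimate, a 7B model needs about 14 GB at 16-bit but only about 3.5 GB at 4-bit. That's why a quantized small model runs fine on modest hardware. A 70B model still needs around 35 GB for its weights even at 4-bit, which is more than a single consumer graphics card holds.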

My Final Verdict on LLMule

I went into this with a healthy dose of cynicism, and I'm coming out... cautiously optimistic. LLMule is ambitious as hell. It's trying to build a digital commons for AI in a world that’s rushing to privatize it. It won’t be for everyone, and it’s going to face huge challenges in building a critical mass of users and navigating the choppy waters of model licensing.

But the spirit of it? I love it. It’s a bet on community, on openness, and on the idea that control over this transformative technology should rest with the many, not the few. It's messy, it's imperfect, and it’s probably a little bit broken right now. But it's also one of the most interesting projects I've seen in the AI space this year. I've already got it downloading. I'll see you on the network.
