The AI space right now feels a bit like the Wild West. Every week, a new model drops, claiming to be faster, smarter, and more capable than the last. You’ve got OpenAI, Anthropic, Google, Mistral, Cohere… the list goes on. It's exciting, absolutely. But it's also… a lot.

I’ve been in the SEO and traffic game for years, and I’ve seen hype cycles come and go. But this AI wave is different. It’s not just hype; it’s a fundamental shift in how we build products, create content, and process information. The problem? Every one of these powerful new tools comes with a price tag, usually measured in something called 'tokens.' And trying to compare them is a masterclass in tab-shuffling and spreadsheet headaches.

A few months ago, I found myself in that exact spreadsheet-fueled nightmare. I was speccing out a new content automation tool and needed to choose a foundational model. Should I go with the latest GPT-4 variant? Or was Claude 3 Opus a better fit? What about the more budget-friendly options like Haiku or Gemini Pro? The answer was buried in a dozen different pricing pages, each with its own weird nuances. I nearly threw my laptop out the window.

Then, I stumbled across a tool that genuinely changed my workflow. And no, this isn't a sponsored post. It's just me, sharing something that’s saved me a ton of time and, more importantly, a ton of money. It’s called LLM Pricing.

So, What Exactly is This LLM Pricing Tool?

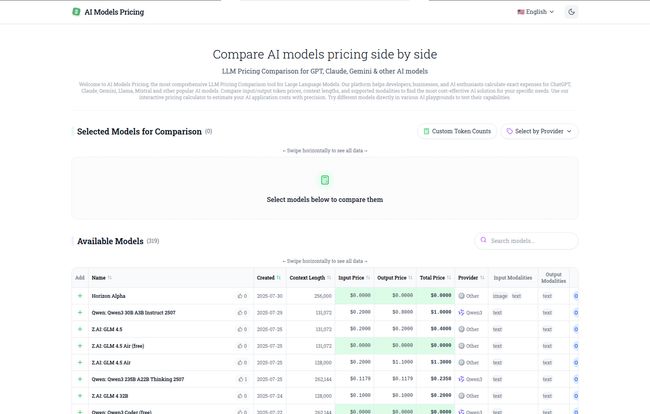

Think of it like Kayak or Google Flights, but for Large Language Models. In a nutshell, LLM Pricing is a simple, no-fluff website that aggregates the specs and, crucially, the cost of pretty much every major AI model on the market. It pulls data from the official providers and cloud vendors like AWS Bedrock or Google's Vertex AI and lays it all out in one massive, sortable table.

It’s a one-page command center for anyone who needs to make an informed decision about which AI model to use. No more hunting. No more spreadsheets. Just a clean, comprehensive overview. It feels like someone, in this case a developer named Claude Sonnet, finally got fed up with the chaos and decided to build the solution we all needed.


The Features That Actually Matter to Developers and Marketers

A tool can have a million features, but only a few ever really count. Here’s what makes LLM Pricing so darn useful in the real world.

The Big Board: Comparing Every Model Under the Sun

The first time you load the page, you might feel a little jolt. The list is… long. Like, really long. It's a phone book from the 90s, but for robots. You’ll see everything from the heavy hitters like GPT-4o and Claude 3 Sonnet to more specialized or older models.

But this is where the magic is. You can instantly compare not just the price, but the all-important context window. For those not in the weeds, the context window is basically the model's short-term memory: the maximum amount of text, measured in tokens, that it can take into account at once. A bigger window means you can feed it larger documents or maintain longer conversations without it forgetting what you talked about five minutes ago. Seeing this spec side by side with price makes the trade-off obvious at a glance. You can also see a model's modalities, meaning whether it handles just text or can process images (vision) too.
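To get a feel for whether a document will fit in a given context window, here is a minimal sketch. It assumes the common rule of thumb that one token is roughly 0.75 English words; real tokenizers vary by model and language, so treat the result as a ballpark. The function names and the `reserved_output_tokens` parameter are hypothetical, not part of LLM Pricing.

```python
import math

# Assumption: ~0.75 English words per token (a rough rule of thumb,
# not an exact figure for any specific model's tokenizer).
WORDS_PER_TOKEN = 0.75


def estimate_tokens(word_count: int) -> int:
    """Roughly convert a word count into a token count."""
    return math.ceil(word_count / WORDS_PER_TOKEN)


def fits_in_context(word_count: int, context_window: int,
                    reserved_output_tokens: int = 1_000) -> bool:
    """Check whether a document plus room for the reply fits in the window."""
    return estimate_tokens(word_count) + reserved_output_tokens <= context_window


doc_words = 10_000
print(estimate_tokens(doc_words))          # estimated input tokens
print(fits_in_context(doc_words, 32_000))  # a 32k-token window
print(fits_in_context(doc_words, 8_000))   # an 8k-token window
```

Leaving headroom for the output matters because, for most providers, the prompt and the response share the same window.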

The Magic Number: The Interactive Price Calculator

This is my favorite part. At the top of the page, there's a simple calculator. You plug in the number of input tokens and output tokens you expect to use, and it instantly calculates the estimated cost for every single model in the list. It's brilliant.

Why does this matter? Because AI pricing isn't straightforward. You're charged one rate for the information you send to the model (input) and a different rate for the information it sends back (output). For tasks like summarizing, your input is huge and your output is small. For content creation, it might be the other way around. This calculator does the math for you, saving you from costly miscalculations. A word of caution: it relies on your token estimation, which can be a bit of a guess. But for ballpark figures, it’s invaluable.
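The math behind a calculator like this is simple enough to sketch yourself. The snippet below is my own illustration, not the site's code, and it assumes prices are quoted in USD per 1 million tokens (a common convention). The rates used are made-up placeholders, so swap in real numbers from a provider's pricing page.

```python
def estimate_cost(input_tokens: int, output_tokens: int,
                  input_price_per_m: float, output_price_per_m: float) -> float:
    """Estimated USD cost of one request, with prices in USD per 1M tokens."""
    input_cost = input_tokens / 1_000_000 * input_price_per_m
    output_cost = output_tokens / 1_000_000 * output_price_per_m
    return input_cost + output_cost


# Hypothetical rates: $3.00 per 1M input tokens, $15.00 per 1M output tokens.
INPUT_RATE, OUTPUT_RATE = 3.00, 15.00

# Summarizing: large input, small output.
summarize = estimate_cost(15_000, 1_000, INPUT_RATE, OUTPUT_RATE)

# Content creation: small input, large output.
generate = estimate_cost(1_000, 15_000, INPUT_RATE, OUTPUT_RATE)

print(f"Summarizing: ${summarize:.4f} per request")
print(f"Generating:  ${generate:.4f} per request")
```

With these placeholder rates, the same 16,000 total tokens cost very different amounts depending on which side of the request they fall on, which is exactly why the input/output split is worth modeling.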

Try Before You Buy: Direct Links to Demos

Numbers on a screen are one thing, but the 'feel' of a model is another. Some models are more creative, others more factual and dry. LLM Pricing includes direct links to demo platforms or 'playgrounds' for many of the models. So, before you commit your precious API credits, you can click over and have a quick chat with the model to see if its personality and output style fit your project. It's a small touch that shows a deep understanding of what users actually need.

A Real-World Walkthrough: How I Use LLM Pricing

Let me make this concrete. Just last week, I was working on a project to analyze customer feedback from a bunch of long-form interviews. We’re talking dozens of documents, each about 10,000 words long.

Here was my process:

- Step 1: Check the Context Window. I immediately went to LLM Pricing and sorted the table by context length. I needed a model with a context window of at least 32k tokens so I could process each document whole instead of chunking it into tiny pieces. This narrowed my options down from 60+ models to about 15.

- Step 2: Compare Input Pricing. Since my task was mostly summarizing (lots of input, less output), I focused on the 'Input Price' column. I could see that some of the newer, more powerful models were actually cheaper on input than some older ones. For example, Claude 3 Sonnet offered a massive context window for a surprisingly competitive input cost.

- Step 3: Run the Numbers. I used the interactive calculator. I estimated an average of 15,000 input tokens and 1,000 output tokens per document. Plugging this in gave me a cost-per-document estimate for each of my top contenders. The differences were stark—some models were nearly twice as expensive for my specific use case.

- Step 4: Make the Call. Armed with this data, the decision was easy. I chose a model that was the perfect blend of capability (big context window) and cost-effectiveness for my specific task. The whole process took about 10 minutes. Before this tool, it would have been at least an hour of research.
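If you'd rather script the four steps above than eyeball a table, the process can be sketched roughly like this. Everything in the `MODELS` list is hypothetical: the model names, context windows, and prices are placeholders I invented for illustration, not real figures from LLM Pricing or any provider.

```python
from dataclasses import dataclass


@dataclass
class Model:
    name: str
    context_window: int   # in tokens
    input_price: float    # USD per 1M input tokens
    output_price: float   # USD per 1M output tokens


# Hypothetical catalog; replace with real specs and prices.
MODELS = [
    Model("model-a", 8_000, 0.50, 1.50),
    Model("model-b", 128_000, 5.00, 15.00),
    Model("model-c", 200_000, 3.00, 15.00),
    Model("model-d", 32_000, 10.00, 30.00),
]


def cost_per_document(model: Model, input_tokens: int, output_tokens: int) -> float:
    """Estimated USD cost of processing one document with the given model."""
    return (input_tokens * model.input_price
            + output_tokens * model.output_price) / 1_000_000


def shortlist(models, min_context, input_tokens, output_tokens):
    """Filter by context window, then rank by estimated cost per document."""
    eligible = [m for m in models if m.context_window >= min_context]
    ranked = sorted(
        eligible,
        key=lambda m: cost_per_document(m, input_tokens, output_tokens),
    )
    return [(m.name, cost_per_document(m, input_tokens, output_tokens))
            for m in ranked]


# Steps 1-3: 32k minimum context, 15,000 input and 1,000 output tokens per doc.
ranking = shortlist(MODELS, 32_000, 15_000, 1_000)
for name, cost in ranking:
    print(f"{name}: ${cost:.4f} per document")
```

Step 4 is still a judgment call: the cheapest eligible model isn't automatically the right one, so this only produces a shortlist to test against your actual task.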

The Good, The Bad, and The Honest Truth

No tool is perfect, right? While I obviously love this platform, it’s only fair to give you the full picture.

The biggest pro is the sheer amount of time and mental energy it saves. It turns a complex, multi-variable research problem into a quick scan-and-sort. The fact that it's regularly updated is a huge plus in this fast-moving industry.

On the flip side, the comprehensiveness can feel a bit overwhelming at first. Some might argue there are too many models listed. Also, since providers can change pricing on a whim, the data might occasionally have a slight lag. I’ve always found it to be accurate, but my advice is to always use it as your primary guide and then click through to the official source for a final confirmation before locking in a decision for a massive project. It's just good practice.

So, Is This Tool Right for You?

If you're a developer building an AI-powered app, a startup founder trying to manage burn rate, a product manager, or even an SEO like me experimenting with AI for content, then yes. Absolutely.

If you're just casually using ChatGPT for fun, you probably don't need it. But for anyone making professional decisions that involve an API key and a credit card, this tool is non-negotiable. It helps you move beyond just using what's popular and start using what's smart for your budget and your project's specific needs. It's about finding the best value, not just the lowest price.

Frequently Asked Questions about LLM Pricing

Is the LLM Pricing comparison tool free to use?

Yes, as of now, the website is completely free to use. It's a community resource created to help navigate the complex AI model market.

How often is the pricing information updated?

The platform is updated regularly to reflect new model releases and pricing changes from providers like OpenAI, Anthropic, and Google. However, it's always a good idea to cross-reference with the official source for mission-critical applications.

What's the difference between input and output token pricing?

Input tokens refer to the data you send to the model (your prompt, a document for summarization, etc.). Output tokens are the data the model generates for you (the answer, the summary). Most providers charge different rates for each, and the interactive calculator on the site helps you estimate this blended cost.

Why is the context window so important to compare?

The context window is a measure of how much information a model can 'hold in its memory' at one time. A model with a large context window can analyze very long documents or remember the details of a long conversation, which is critical for complex tasks like legal document analysis, detailed research, or multi-turn customer support bots.

Making Smarter AI Choices

In an industry that moves at lightning speed, having tools that provide clarity is a massive advantage. The LLM Pricing website isn't flashy. It doesn’t have a slick marketing campaign. It just does one thing, and it does it exceptionally well: it demystifies the cost of using artificial intelligence. It helps you make smarter, more informed, and more cost-effective decisions. And in this AI gold rush, being smart is how you find the real gold.