Building with generative AI is a wild ride. One minute you’re amazed by what your new RAG-powered chatbot can do, and the next you're pulling your hair out because it’s confidently making stuff up. Again. We’ve all been there, staring at a response and thinking, “Is this… good? Is it accurate? How do I even measure that?” For a long time, a lot of GenAI development has felt more like black magic than engineering. A pinch of prompt engineering, a dash of hope, and pray it doesn’t go off the rails in production.

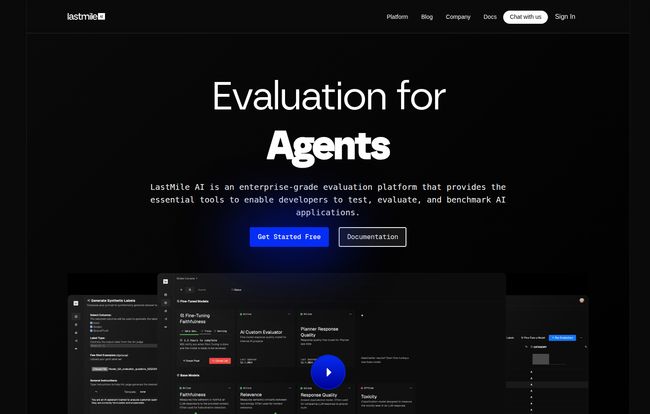

It’s this chaotic, “throw it at the wall and see what sticks” approach that has a lot of us in the dev world feeling a bit antsy. We need tools. We need metrics. We need to turn this art form back into a science. And that’s the promise of a platform I’ve been digging into lately: LastMile AI.

It claims to be a full-stack developer platform to debug, evaluate, and improve AI applications. Big words, I know. But after spending some time with it, I think they might actually be onto something.

So, What's the Big Deal with LastMile AI?

At its core, LastMile AI is a toolbox designed to stop you from guessing. It's built for developers who have moved past the initial “wow, it can write a poem!” phase and are now asking the hard questions. How do I know my AI is factually correct? How do I prevent it from spewing toxic nonsense? How can I run experiments and actually track if my changes are making things better or worse?

Instead of just giving you APIs to build things, LastMile gives you the instruments to measure what you've built. Think of it less as a box of LEGOs and more as a set of calipers, a voltmeter, and a high-speed camera for your AI projects. It's particularly focused on the tricky worlds of RAG (Retrieval-Augmented Generation) and multi-agent systems, which, as many of you know, are notoriously difficult to get right.

Why We Suddenly Need AI Evaluation Platforms

The honeymoon period with large language models is officially over. In 2023, just getting an LLM to work was enough to impress your boss. In 2024 and beyond, the game has changed. Now it's about reliability, accuracy, and safety. Businesses can’t run on models that hallucinate financial advice or create customer service nightmares.

This is where the whole concept of LLMOps is taking center stage. It’s the grown-up version of playing in the AI sandbox. And a huge piece of that puzzle is evaluation. You wouldn’t ship code without unit tests or performance monitoring, so why are we shipping AI with our fingers crossed? LastMile AI is stepping directly into this gap, offering a structured way to approach quality control for GenAI.

Breaking Down The Key Features

Alright, let's get into the meat and potatoes. What does LastMile AI actually do? It's not just one thing, but a collection of tools that work together.

AutoEval: Your AI's Report Card

This is the heart of the platform. AutoEval provides a suite of out-of-the-box metrics to score your AI's outputs. You get checks for things like:

- Faithfulness: Does the answer stick to the source material you gave it, or is it making things up? Crucial for RAG.

- Relevance: Is the answer actually, you know, relevant to the question asked?

- Toxicity: A check for harmful or inappropriate language.

- Correctness: Is the information factually accurate?

This isn't just a pass/fail. It gives you a score, so you can track improvements over time as you tweak your prompts, your model, or your data sources.
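
To make the "score, not pass/fail" idea concrete, here is a minimal sketch in plain Python. It does not use LastMile's API: `toy_faithfulness` is a hypothetical stand-in that just measures word overlap between an answer and its source context, which is far cruder than a real evaluator model. The point is only to show how a numeric score lets you compare two versions of a prompt.

```python
import re


def tokens(text: str) -> list[str]:
    """Lowercase a string and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def toy_faithfulness(answer: str, context: str) -> float:
    """Hypothetical stand-in metric: fraction of answer tokens found in the context."""
    answer_tokens = tokens(answer)
    if not answer_tokens:
        return 0.0
    context_vocab = set(tokens(context))
    supported = sum(1 for t in answer_tokens if t in context_vocab)
    return supported / len(answer_tokens)


def mean_score(answers: list[str], context: str) -> float:
    """Average the toy metric over a batch of answers."""
    return sum(toy_faithfulness(a, context) for a in answers) / len(answers)


# Made-up example data: one source passage, answers from two prompt versions.
context = "The refund window is 30 days from the delivery date."
baseline_answers = ["Refunds are accepted within 90 days of purchase."]
revised_answers = ["The refund window is 30 days from the delivery date."]

baseline = mean_score(baseline_answers, context)
revised = mean_score(revised_answers, context)
print(f"baseline={baseline:.2f} revised={revised:.2f}")
```

A higher number for the revised prompt is the kind of signal you would log per experiment, so a regression shows up as a drop rather than a hunch.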

Synthetic Data Generation: Say Goodbye to Manual Labeling

Anyone who's ever had to create a test dataset for an AI model knows the pain. It’s tedious, mind-numbing work. LastMile’s synthetic data generation tool is a godsend. You can give it your own documents and it will automatically create high-quality question-and-answer pairs to build a robust test set. This means you can build a comprehensive evaluation in a fraction of the time, which is a huge win for speeding up development cycles.

Fine-Tuning and Custom Evaluators for the Power Users

While the standard metrics are great, sometimes you have a very specific need. Maybe you're building a legal AI and need to check for a specific tone or the inclusion of certain clauses. LastMile allows you to fine-tune evaluator models on your own data. This is where it gets really powerful. You're not just using their ruler; you're building your own highly-specialized measuring stick, tailored perfectly to your application's needs.

Real-Time Guardrails and Monitoring

Testing in a lab is one thing. What happens when your AI is out in the wild, interacting with real users? LastMile offers online guardrails for continuous monitoring. This means you can get real-time alerts if your application starts to drift, produce low-quality responses, or exhibit risky behavior. It's the safety net that lets you sleep a little better at night after a big deployment.
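
For a feel of what a drift alert boils down to, here is a rough sketch, again in plain Python and not LastMile's actual guardrail API. The `RollingGuardrail` class, its threshold, and its window size are all assumptions made up for illustration: it keeps the last few evaluation scores and flags when their average falls below a cutoff.

```python
from collections import deque


class RollingGuardrail:
    """Hypothetical guardrail: alert when the rolling mean score drops too low."""

    def __init__(self, threshold: float, window: int = 5) -> None:
        if window < 1:
            raise ValueError("window must be at least 1")
        self.threshold = threshold
        # deque with maxlen silently discards the oldest score once full.
        self.scores: deque[float] = deque(maxlen=window)

    def observe(self, score: float) -> bool:
        """Record one score; return True if the rolling mean is below the threshold."""
        self.scores.append(score)
        rolling_mean = sum(self.scores) / len(self.scores)
        return rolling_mean < self.threshold


# Made-up stream of per-response quality scores from production traffic.
guardrail = RollingGuardrail(threshold=0.7, window=3)
alerts = [guardrail.observe(s) for s in [0.9, 0.9, 0.5, 0.3, 0.9]]
print(alerts)
```

Averaging over a window instead of alerting on every low score is a deliberate choice here: one bad response shouldn't page anyone, but a sustained dip should.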

The Good, The Bad, and The Nitty-Gritty

No tool is perfect, so let’s get real. Here’s my take after kicking the tires.

What I Genuinely Like

First off, the comprehensive nature of the toolkit is fantastic. It’s not just an eval library; it’s an entire workflow from synthetic data generation to experiment tracking and live monitoring. The focus on RAG and Agents is also a massive plus, since that's where so many of us are working right now. But the real showstopper? The Expert tier is completely free. And it's not a useless, stripped-down version either. You get a ton of functionality without paying a dime, which is incredible for individual developers, researchers, or small teams trying to build quality products on a budget.

Potential Hurdles to Consider

This isn't a tool for absolute beginners. The website shows code snippets right on the homepage, which tells you its audience. You’ll need some coding knowledge to integrate and make the most of it. Also, like any specialized platform, there’s a bit of a learning curve. If you’re not already familiar with concepts like AI evaluation, you’ll need to spend some time in their documentation. Finally, for large organizations that need the on-premise deployment and unlimited scale, the Enterprise pricing is “Contact Us,” which usually means it’s a significant investment.

Let's Talk Money: LastMile AI Pricing

The pricing structure is refreshingly simple and a huge part of the story here. There are basically two tiers.

| Tier | Cost | Key Features & Limits |
|---|---|---|
| Expert | Free | Cloud Deployment, 10 Model Fine-Tunes, 100 Evaluation Runs, 10,000 Rows of Synthetic Data. |
| Enterprise | Contact for Pricing | VPC & On-Prem Deployment, Unlimited Everything, White-Glove Onboarding, 24/7 Support. |

Honestly, that Expert tier is one of the most generous free tiers I’ve seen in the MLOps space. It gives you more than enough firepower to seriously evaluate and improve a real-world project.

So, Who Is This Actually For?

I wouldn't point a first-time coder to LastMile AI. This is for the developer or the team that has a GenAI application and is now facing the “what’s next?” problem. It’s for people who understand that a good demo isn't the same as a good product. If you're building with RAG, wrestling with agentic workflows, or are simply tired of subjectively judging your AI’s performance, then LastMile AI is built for you. It's for the engineers who want to bring rigor and data-driven decisions to their AI development process.

Frequently Asked Questions

- Is LastMile AI free to use?

- Yes! The Expert tier is free and offers a substantial set of features, including evaluation runs, model fine-tuning, and synthetic data generation.

- What kind of AI applications can I evaluate?

- It's versatile, but it really shines for complex applications like Retrieval-Augmented Generation (RAG) and multi-agent systems.

- Do I need to be a machine learning expert to use it?

- You need to be comfortable with code, but the platform is designed to streamline the evaluation process, not complicate it. The main learning curve is in understanding the concepts of AI evaluation itself.

- How is this different from a library like OpenAI Evals?

- OpenAI Evals is a library, a set of tools. LastMile AI is an end-to-end platform. It includes experiment tracking dashboards, synthetic data generation, real-time monitoring, and collaboration features all in one place.

- Can I use my own models with LastMile AI?

- Absolutely. A key feature is the ability to fine-tune and use your own custom evaluator models to measure what matters most to you.

- Is my data secure with LastMile AI?

- They offer a standard cloud deployment for the free tier, but the Enterprise plan provides options for Virtual Private Cloud (VPC) and on-premise deployment for maximum security and privacy.

My Final Take: Is LastMile AI Worth Your Time?

In a word, yes. The world of generative AI is moving incredibly fast, and the tools are struggling to keep up. For a long time, we've been missing this crucial layer of measurement and quality control. LastMile AI feels like one of the first platforms to seriously and comprehensively tackle this problem for the modern AI stack.

It’s not a magic button that will instantly fix your AI, but it is a powerful set of instruments that can help you understand it, measure it, and systematically improve it. By turning the vague feeling of “I think this response is better” into hard data, it helps push AI development out of the realm of guesswork and into the world of engineering. For any team serious about shipping production-grade GenAI, that’s a pretty big deal.