Building with Large Language Models is... chaotic. One minute you're marveling at the magic, the next you're staring at a nonsensical output, wondering which part of your beautifully crafted multi-step chain went completely off the rails. It’s like trying to debug a bowl of spaghetti that can talk back to you. We've all been there, lost in a sea of API calls, token counts, and inexplicable latency spikes. It’s the wild west of tech right now, and frankly, we need a better sheriff.

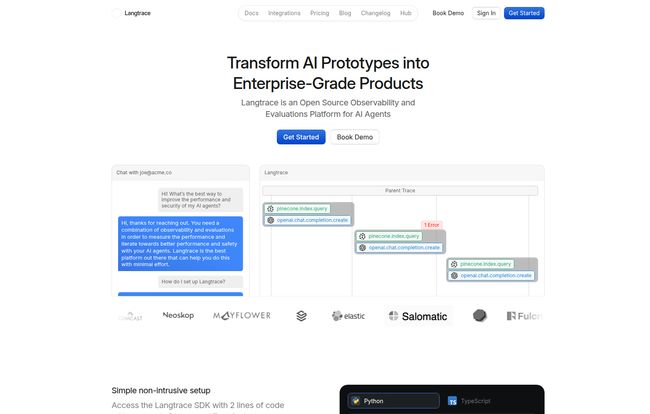

For weeks, I’ve been hunting for a tool that doesn’t just add another layer of complexity. I wanted something that would pull back the curtain, not just hang a new one. Something that gives me visibility without locking me into a proprietary ecosystem. And I think I've found it. It's called Langtrace AI, and it’s one of the most promising open-source LLM observability platforms I've seen in a long time.

So, What is Langtrace, Really?

Forget the marketing fluff for a moment. At its heart, Langtrace is a flight recorder for your AI applications. It’s an open-source tool designed to watch everything your LLM app does—from the initial prompt to the final output and every single step in between. It captures the data, organizes it, and presents it in a way that actually makes sense. You get end-to-end visibility into your entire GenAI stack.

Think about it. You're using LangChain or maybe CrewAI to build a complex agent. Langtrace automatically traces the entire flow, showing you how data moves, where the bottlenecks are, and how much each step is costing you. It supports a ridiculous number of integrations right out of the box: the major LLM providers, a bunch of vector DBs, and the most popular frameworks. It's just a breath of fresh air.


The Features That Actually Matter

A lot of platforms throw a kitchen sink of features at you. But with Langtrace, the tools feel deliberate and designed to solve the real, hair-pulling problems we face every day.

Seeing the Whole Picture with End-to-End Tracing

This is the core of it all. Langtrace gives you a trace for every single execution. You can see the parent-child relationships between different calls, check the exact inputs and outputs for each step, and immediately spot where an error occurred. No more `print()` statements littered throughout your code. This is a clean, surgical view of your app's inner workings. For anyone who has spent hours trying to figure out why their RAG pipeline is suddenly slow, this feels like a superpower.
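To make the parent-child idea concrete, here is a toy tracer in plain Python. It is not Langtrace's API, since the SDK instruments your libraries for you. `Tracer`, `Span`, and all the field names are hypothetical, chosen only to show what a trace records: a link to the parent step, inputs, outputs, timing, and any error.

```python
import time
import uuid
from contextlib import contextmanager
from dataclasses import dataclass
from typing import Any, Iterator, List, Optional


@dataclass
class Span:
    """One step in a trace. Field names are illustrative, not Langtrace's schema."""
    name: str
    span_id: str
    parent_id: Optional[str]
    inputs: Any = None
    outputs: Any = None
    error: Optional[str] = None
    duration_s: float = 0.0


class Tracer:
    """Toy tracer: records spans and links each one to the span open when it started."""

    def __init__(self) -> None:
        self.spans: List[Span] = []  # every span, in start order
        self._open: List[Span] = []  # stack of spans that have not finished yet

    @contextmanager
    def span(self, name: str, inputs: Any = None) -> Iterator[Span]:
        # The innermost open span (if any) becomes this span's parent.
        parent_id = self._open[-1].span_id if self._open else None
        s = Span(name=name, span_id=uuid.uuid4().hex[:8], parent_id=parent_id, inputs=inputs)
        self.spans.append(s)
        self._open.append(s)
        start = time.perf_counter()
        try:
            yield s
        except Exception as exc:
            # Record the failure on this span, then let it propagate to the parent.
            s.error = f"{type(exc).__name__}: {exc}"
            raise
        finally:
            s.duration_s = time.perf_counter() - start
            self._open.pop()


# A fake two-step RAG pipeline; the retrieval and LLM call are hard-coded stand-ins.
tracer = Tracer()
with tracer.span("rag_pipeline", inputs="What is Langtrace?") as root:
    with tracer.span("retrieve", inputs="What is Langtrace?") as retrieve:
        retrieve.outputs = ["doc-1", "doc-2"]
    with tracer.span("llm_call", inputs="question + 2 docs") as call:
        call.outputs = "An open-source LLM observability tool."
    root.outputs = call.outputs

for s in tracer.spans:
    indent = "  " if s.parent_id else ""
    print(f"{indent}{s.name} ({s.duration_s * 1000:.2f} ms) error={s.error}")
```

Because an exception is recorded on every span it passes through, a failure deep in the pipeline shows up on the failing step and on each of its ancestors, which is exactly what lets you walk down a trace to the culprit.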

Your Mission Control Dashboard

Data is useless if you can't understand it. Langtrace provides a set of dashboards that are genuinely useful. We're talking about vital metrics that directly impact your sanity and your wallet:

- Token Usage & Cost: See exactly which models and which parts of your application are burning through your OpenAI credits.

- Latency: Pinpoint performance bottlenecks. Is it the LLM call? The database query? The tool usage? Now you know.

- Evaluated Accuracies: Track how well your prompts and models are performing against your own standards.

This isn't just a vanity dashboard; it’s a command center for optimizing performance and cost before they spiral out of control.
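Under the hood, a cost and latency dashboard is mostly aggregation over recorded LLM spans. The sketch below shows that arithmetic in plain Python. The model names and per-token prices are made up (they are not real provider prices), and `LLMSpan` and `summarize` are hypothetical names, not part of Langtrace.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable

# Hypothetical prices in USD per 1,000 tokens. These are NOT real provider prices.
PRICE_PER_1K: Dict[str, Dict[str, float]] = {
    "model-a": {"input": 0.005, "output": 0.015},
    "model-b": {"input": 0.0005, "output": 0.0015},
}


@dataclass
class LLMSpan:
    step: str  # which part of the app made the call
    model: str
    input_tokens: int
    output_tokens: int
    latency_ms: float


def cost_usd(span: LLMSpan) -> float:
    price = PRICE_PER_1K[span.model]
    return (span.input_tokens * price["input"] + span.output_tokens * price["output"]) / 1000


def summarize(spans: Iterable[LLMSpan]) -> Dict[str, Dict[str, float]]:
    """Per-step totals: call count, tokens, cost, and average latency."""
    totals: Dict[str, Dict[str, float]] = defaultdict(
        lambda: {"calls": 0, "tokens": 0, "cost_usd": 0.0, "latency_ms": 0.0}
    )
    for s in spans:
        t = totals[s.step]
        t["calls"] += 1
        t["tokens"] += s.input_tokens + s.output_tokens
        t["cost_usd"] += cost_usd(s)
        t["latency_ms"] += s.latency_ms
    for t in totals.values():
        t["avg_latency_ms"] = t["latency_ms"] / t["calls"]
    return dict(totals)


spans = [
    LLMSpan("summarize", "model-a", 2000, 500, 1200.0),
    LLMSpan("classify", "model-b", 1000, 100, 300.0),
    LLMSpan("summarize", "model-a", 1000, 200, 900.0),
]
report = summarize(spans)
most_expensive = max(report, key=lambda step: report[step]["cost_usd"])
print(most_expensive, report[most_expensive])
```

Grouping by step rather than only by model is the useful part: it tells you which feature of your app is burning the credits, not just which provider is billing you.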

Beyond Watching: The Playground and Evaluations

Monitoring is great, but improving is better. This is where Langtrace really starts to shine. It has an integrated Playground where you can test different prompts against different models side-by-side. See how GPT-4o stacks up against Claude 3 Opus for your specific use case, right within the same interface. It even has prompt version control, so you can track your changes and revert if a new “brilliant” idea turns out to be… not so brilliant.
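Prompt version control boils down to keeping every revision and moving a pointer to the active one. Here is a minimal in-memory sketch of that idea; `PromptHistory` and its methods are hypothetical and say nothing about how Langtrace actually stores prompts.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class PromptHistory:
    """Hypothetical in-memory prompt history (illustration only)."""
    name: str
    versions: List[str] = field(default_factory=list)
    active: int = -1  # index into versions; -1 means nothing committed yet

    def commit(self, template: str) -> int:
        """Store a new revision, make it active, and return its 1-based version number."""
        self.versions.append(template)
        self.active = len(self.versions) - 1
        return self.active + 1

    def revert(self, version: int) -> str:
        """Point back at an earlier revision without deleting the newer ones."""
        if not 1 <= version <= len(self.versions):
            raise ValueError(f"no version {version} for prompt {self.name!r}")
        self.active = version - 1
        return self.versions[self.active]

    @property
    def current(self) -> str:
        if self.active < 0:
            raise LookupError("no versions committed")
        return self.versions[self.active]

    def render(self, **variables: str) -> str:
        return self.current.format(**variables)


summary = PromptHistory("summarize")
v1 = summary.commit("Summarize the following text:\n{text}")
v2 = summary.commit("You are a pirate. Summarize this, arr:\n{text}")  # the "brilliant" idea
summary.revert(v1)
print(summary.render(text="Langtrace traces LLM apps."))
```

Reverting only moves the pointer, so the pirate prompt stays in the history. That is the behavior you want when you later need to explain why a metric dipped for a week.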

On top of that, the Evaluations feature lets you measure the baseline performance of your app and curate datasets for automated checks. This is how you move from “I think it’s working” to “I can prove it’s working well.”
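The "prove it's working" loop looks roughly like this: score the app on a curated dataset, save that as the baseline, and fail any change that drops below it. This is a generic sketch, not Langtrace's evaluation API. The exact-match scoring, the `tolerance` value, and the keyword "app" standing in for an LLM call are all my own assumptions for illustration.

```python
from typing import Callable, Sequence, Tuple


def evaluate(app: Callable[[str], str], dataset: Sequence[Tuple[str, str]]) -> float:
    """Fraction of examples where the app's output matches the expected label (case-insensitive)."""
    if not dataset:
        raise ValueError("dataset is empty")
    hits = sum(
        1 for prompt, expected in dataset
        if app(prompt).strip().lower() == expected.strip().lower()
    )
    return hits / len(dataset)


def check_against_baseline(score: float, baseline: float, tolerance: float = 0.02) -> bool:
    """Pass if the new score has not dropped more than `tolerance` below the baseline."""
    return score >= baseline - tolerance


dataset = [
    ("I love this product", "positive"),
    ("Terrible support experience", "negative"),
    ("Works as advertised", "positive"),
    ("Broke after one day", "negative"),
]


def toy_app(text: str) -> str:
    # Stand-in for an LLM call: a naive keyword classifier.
    negative_words = ("terrible", "broke", "awful")
    return "negative" if any(w in text.lower() for w in negative_words) else "positive"


def always_positive(text: str) -> str:
    # A "new version" that regressed.
    return "positive"


baseline = evaluate(toy_app, dataset)
candidate_score = evaluate(always_positive, dataset)
passed = check_against_baseline(candidate_score, baseline)
print(f"baseline={baseline:.2f} candidate={candidate_score:.2f} passed={passed}")
```

Exact match only suits classification-style outputs; free-form answers usually need a softer metric, but the baseline-and-threshold structure stays the same.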

Why Open Source is a Game Changer Here

I have a strong bias, and I’ll admit it: I love open-source. In an industry moving so fast, with so many black-box APIs, trusting the tools you build on is paramount. Because Langtrace is proudly open source (check out their GitHub), you get a few massive advantages.

- Trust and Transparency: You can literally read the code. You know exactly what data is being collected and how it's being handled. No secrets.

- Customization: If Langtrace doesn’t do something you need, you can build it yourself. You have the freedom to extend and adapt the platform to your specific requirements.

- No Vendor Lock-In: You're not tied to a single company's roadmap or pricing whims. The community and the codebase are yours to use as you see fit.

Openness doesn't come at the expense of security, either: Langtrace advertises enterprise-grade security protocols and SOC 2 Type II certification. It’s the best of both worlds: community-driven transparency and corporate-level security.

How Easy is the Setup?

Their site claims a "simple non-intrusive setup" with just two lines of code. I'm always skeptical of claims like this, but in this case, it’s pretty darn close to the truth. For a basic Python setup, it really is as simple as importing the library and initializing it with your API key. It's designed to be low-friction, and it shows. You can have meaningful traces showing up in your dashboard within minutes, not hours.
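For reference, the quick start in Langtrace's Python README looks roughly like the two lines inside the wrapper below: install `langtrace-python-sdk`, import `langtrace` from `langtrace_python_sdk`, and call `langtrace.init(api_key=...)`. Treat it as a sketch and check the current docs, since SDK details change. The `init_langtrace` wrapper and the `LANGTRACE_API_KEY` variable name are my own additions, so the app still runs when tracing isn't configured.

```python
import os


def init_langtrace() -> bool:
    """Enable Langtrace if an API key is configured; return whether tracing was enabled."""
    api_key = os.environ.get("LANGTRACE_API_KEY")  # env var name is my own choice
    if not api_key:
        return False
    try:
        # The "two lines" from the quick start (pip install langtrace-python-sdk).
        from langtrace_python_sdk import langtrace
    except ImportError:
        return False
    langtrace.init(api_key=api_key)
    return True


# Call this early, before importing your LLM and framework libraries,
# so the SDK can instrument them.
tracing_enabled = init_langtrace()
print("Langtrace tracing enabled:", tracing_enabled)
```

The README places the import ahead of any LLM module imports, which matters because auto-instrumentation has to hook those libraries before your code starts using them.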

Let's Talk Money: The Langtrace Pricing

Ah, the question everyone's waiting for. For a while, their pricing was a bit of a mystery, but they've recently clarified it, and the structure is fantastic. I’ve laid it out in a simple table below.

| Plan | Price | Key Features |
|---|---|---|
| Free Forever | $0 | Up to 5k spans/month. Includes Tracing, Metrics, Annotations, and Evaluations. Perfect for solo devs and small projects. |
| Growth | $31/user/month | Up to 500k spans/month. Everything in Free, plus cloud evaluations (coming soon) and priority support. |
| Enterprise | Custom | For large orgs. Adds custom SLAs, custom data retention, and SOC 2 Type II compliance. |

In my opinion, this pricing is incredibly fair. The Free Forever tier is unbelievably generous and more than enough for any individual developer to get immense value. The Growth plan is reasonably priced for startups and small teams who are scaling up. It’s a pricing model that encourages adoption rather than creating a barrier.
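Because the tiers are metered in spans, the practical question is how many spans your app actually emits. Here is a back-of-the-envelope sketch using the limits and price from the table above. The 30-day month, the guess of 5 spans per request, and the assumption that the Growth limit applies per account rather than per user are all mine, not Langtrace's.

```python
from typing import List, Tuple

# Monthly span limits from the pricing table. Whether Growth's limit is per user or
# per account isn't stated there, so this sketch treats it as per account.
TIERS: List[Tuple[str, int]] = [
    ("Free Forever", 5_000),
    ("Growth", 500_000),
]
GROWTH_PRICE_PER_USER = 31  # USD per user per month


def monthly_spans(requests_per_day: int, spans_per_request: int, days: int = 30) -> int:
    """Rough volume estimate: each request produces a fixed number of spans."""
    return requests_per_day * spans_per_request * days


def smallest_tier(spans: int) -> str:
    for name, limit in TIERS:
        if spans <= limit:
            return name
    # No larger self-serve tier is listed, so assume an Enterprise conversation.
    return "Enterprise"


def growth_cost(users: int) -> int:
    return users * GROWTH_PRICE_PER_USER


# Hypothetical workloads, assuming ~5 spans per request (e.g. retrieval + LLM call + tools).
for per_day in (20, 1_000, 10_000):
    spans = monthly_spans(per_day, spans_per_request=5)
    print(f"{per_day:>6} req/day -> {spans:>9,} spans/month -> {smallest_tier(spans)}")
print("Growth for a 3-person team:", growth_cost(3), "USD/month")
```

A side project making 20 requests a day (about 3,000 spans a month) fits comfortably in the free tier, while 1,000 requests a day (about 150,000 spans) already needs Growth. Multi-step agents can emit far more than 5 spans per request, so it's worth measuring your own ratio from a few real traces.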

The Other Side of the Coin

No tool is perfect for everyone. The biggest potential hurdle with Langtrace is the same as its biggest strength: it's open-source. If you want to contribute to the project or do some really deep customization, you'll need some technical chops. This isn’t a drawback so much as a reality. It's not a completely hands-off, no-code platform. It's a powerful tool for developers, and with that power comes a slight learning curve for the most advanced use cases.

My Final Take on Langtrace AI

I’m genuinely excited about Langtrace. It strikes a rare balance between power and simplicity, transparency and security. It solves a real, frustrating problem for anyone building in the AI space. It helps you stop guessing and start knowing.

If you're a developer working with LLMs—whether you're a hobbyist, a startup founder, or part of an enterprise team—you owe it to yourself to give Langtrace a look. The free tier is a no-brainer. It might just be the tool that tames the beautiful chaos of AI development and lets you focus on what you do best: building amazing things.

Frequently Asked Questions

- Is Langtrace AI really free to use?

- Yes, Langtrace has a "Free Forever" plan that is quite generous, offering up to 5,000 spans per month along with core features like tracing, metrics, and evaluations. It's ideal for individual developers and small-scale projects.

- What frameworks and libraries does Langtrace support?

- It supports a wide range of popular tools right out of the box. This includes major frameworks like LangChain, LlamaIndex, CrewAI, and DSPy, as well as numerous LLM providers (OpenAI, Anthropic, etc.) and Vector Databases.

- Is Langtrace secure enough for enterprise applications?

- Absolutely. Security is a major focus. The platform is built with enterprise-grade security protocols, and the Enterprise plan offers SOC 2 Type II compliance, making it suitable for organizations with strict security and data handling requirements.

- How difficult is it to integrate Langtrace into an existing project?

- It’s surprisingly easy. The basic integration typically involves just a couple of lines of code to import and initialize the SDK in your Python or TypeScript project. You can start seeing data in your dashboard very quickly.

- Can I contribute to the Langtrace project?

- Yes! As an open-source project, community contributions are encouraged. You can contribute to the code, suggest features, or report bugs on their official GitHub repository.

- How is Langtrace different from other observability tools?

- Its main differentiators are being open-source, its developer-first approach with a very simple setup, and its comprehensive support for the entire GenAI stack. While other tools exist, Langtrace's combination of transparency, ease of use, and powerful features is pretty unique.