Every week, my inbox gets flooded with the “next big thing” in AI. Honestly, most of it is just noise. Another wrapper, another chatbot builder, another tool promising to revolutionize my workflow without actually understanding it. It gets tiring. But every now and then, something pops up on my radar that makes me lean in a little closer. This week, that something is called KitchenAI.

The name itself is brilliant, isn't it? It immediately conjures up images of experimentation, of mixing ingredients, of getting your hands dirty to create something amazing. It’s not called “AI Boardroom” or “Prompt Synergizer.” It’s a kitchen. A place for creation. And from what I’ve seen, that’s exactly what they’re building.

For years, we've had this frustrating gap in the AI development process. You can spend hours, even days, perfecting a prompt in a playground environment. You get the tone just right, the output is perfect, the logic is sound. And then... what? You’re left with a chunk of text and the messy, often complicated task of building a reliable, scalable API around it so your app can actually use it. It’s like crafting a Michelin-star recipe but having no way to actually serve it to your customers. KitchenAI seems built to solve exactly that problem.

So What Exactly Is Cooking at KitchenAI?

At its heart, KitchenAI is a workbench for AI developers. Think of it as your professional-grade chef's station. It’s designed to take you from a rough idea to a fully functional, production-ready AI solution. The platform seems to understand that AI development is a two-part process: the creative, experimental part (crafting the prompt) and the engineering part (deploying it as a service).

It’s a platform for AI developers to turn their carefully crafted code and prompts into APIs. But it’s also for app developers who just want to integrate powerful AI features without having to become prompt engineering wizards themselves. It’s the bridge between the AI lab and the real world.
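To make the "prompt into an API" idea concrete, here is a minimal sketch of what that wrapping involves if you do it by hand. It is not KitchenAI's code or API. The `DEPLOYED_PROMPT` dictionary, the `build_chat_request` helper, and the endpoint's JSON shape are all hypothetical. The message format mirrors OpenAI-style chat requests, and the sketch uses Python's standard-library WSGI interface so it runs without extra dependencies. The actual call to a model provider is deliberately left out.

```python
import json

# Hypothetical "deployed prompt": the system prompt and model settings live
# server-side, so app developers never have to see or edit them.
DEPLOYED_PROMPT = {
    "model": "gpt-4",
    "system": "You are a careful financial analyst. Answer concisely.",
    "temperature": 0.2,
}


def build_chat_request(user_message: str) -> dict:
    """Combine the stored prompt with the caller's input into a chat-style request body."""
    return {
        "model": DEPLOYED_PROMPT["model"],
        "temperature": DEPLOYED_PROMPT["temperature"],
        "messages": [
            {"role": "system", "content": DEPLOYED_PROMPT["system"]},
            {"role": "user", "content": user_message},
        ],
    }


def app(environ, start_response):
    """Minimal WSGI endpoint: POST {"input": "..."} and get back the request it would send.

    A real service would forward this body to the model provider and return the
    completion; that network call is intentionally omitted from this sketch.
    """
    if environ["REQUEST_METHOD"] != "POST":
        start_response("405 Method Not Allowed", [("Content-Type", "application/json")])
        return [b'{"error": "POST only"}']
    # Read exactly CONTENT_LENGTH bytes of JSON; treat an empty body as {}.
    length = int(environ.get("CONTENT_LENGTH") or 0)
    body = json.loads(environ["wsgi.input"].read(length) or b"{}")
    payload = build_chat_request(body.get("input", ""))
    start_response("200 OK", [("Content-Type", "application/json")])
    return [json.dumps(payload).encode("utf-8")]
```

To try it locally, run `from wsgiref.simple_server import make_server` and then `make_server("", 8000, app).serve_forever()`. The point of this design is that the system prompt and settings stay on the server, so the app developer only ever sends plain input.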

The Main Course: A Look at the Prompt Engineering Studio

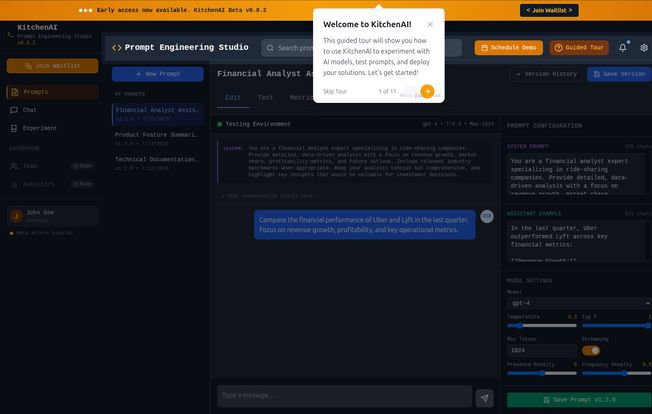

This is where the magic happens. The screenshot gives us a fantastic look into the Prompt Engineering Studio, and I have to say, it looks clean and incredibly functional. This isn't just a simple text box. It's a proper Integrated Development Environment (IDE) for prompts.

On the left, you've got your saved prompts, versioned and dated. I love that. How many times have you tweaked a prompt, lost the old version, and then realized the original was better? Version control for prompts is a simple idea, but a genius one.
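The idea behind prompt versioning is simple enough to sketch in a few lines. This is a hypothetical, in-memory illustration of the concept, not how KitchenAI actually stores prompts. Every save appends a numbered, timestamped version. Restoring an old version re-saves it as the newest one, so nothing is ever lost.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class PromptVersion:
    number: int
    text: str
    saved_at: datetime


@dataclass
class PromptHistory:
    """Append-only history for a single named prompt (hypothetical, for illustration)."""

    name: str
    versions: list[PromptVersion] = field(default_factory=list)

    def save(self, text: str) -> PromptVersion:
        # Every save creates a new numbered, timestamped version; nothing is overwritten.
        version = PromptVersion(len(self.versions) + 1, text, datetime.now(timezone.utc))
        self.versions.append(version)
        return version

    def latest(self) -> PromptVersion:
        return self.versions[-1]

    def get(self, number: int) -> PromptVersion:
        # Version numbers start at 1; reject anything outside the saved range.
        if not 1 <= number <= len(self.versions):
            raise IndexError(f"no version {number} for prompt {self.name!r}")
        return self.versions[number - 1]

    def restore(self, number: int) -> PromptVersion:
        # "Rolling back" re-saves an old version as the newest one, keeping history intact.
        return self.save(self.get(number).text)
```

For example, saving two drafts and then calling `restore(1)` leaves you with three versions, the newest of which contains the original text.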

The main window is split into a few key areas:

- The Testing Environment: This is your interactive chat interface. You can see the conversation flow and test how your AI assistant responds in real time.

- Prompt Configuration: This is the control panel. You’ve got your System Prompt, which is basically the AI's constitution or core instructions. In the example, it's a detailed persona for a financial analyst. This is the secret sauce that defines the AI's behavior.

- Model Settings: Here's where you get granular. You can choose your model (the screenshot shows gpt-4, which is great), tweak the 'temperature' for creativity, set max tokens to control cost and length, and adjust other parameters like the presence and frequency penalties. This is the kind of control serious developers need.
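If you haven't touched these knobs directly, the sketch below shows how the settings in that panel typically map onto an OpenAI-style Chat Completions request body. It assumes the Studio's fields correspond to OpenAI's `temperature`, `max_tokens`, `presence_penalty`, and `frequency_penalty` parameters. The `ModelSettings` class and the `chat_request` helper are hypothetical, and nothing here is sent over the network.

```python
from dataclasses import dataclass, asdict


@dataclass
class ModelSettings:
    model: str = "gpt-4"
    temperature: float = 0.7         # higher values give more varied output
    max_tokens: int = 512            # caps response length (and therefore cost)
    presence_penalty: float = 0.0    # positive values nudge the model toward new topics
    frequency_penalty: float = 0.0   # positive values discourage repeating the same tokens

    def validate(self) -> None:
        # Ranges follow OpenAI's documented bounds for these Chat Completions parameters.
        if not 0.0 <= self.temperature <= 2.0:
            raise ValueError("temperature must be between 0 and 2")
        if self.max_tokens < 1:
            raise ValueError("max_tokens must be positive")
        for name in ("presence_penalty", "frequency_penalty"):
            if not -2.0 <= getattr(self, name) <= 2.0:
                raise ValueError(f"{name} must be between -2 and 2")


def chat_request(system_prompt: str, user_message: str, settings: ModelSettings) -> dict:
    """Build a chat-style request body from a system prompt, user input, and settings."""
    settings.validate()
    return {
        **asdict(settings),
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_message},
        ],
    }
```

Keeping the settings in one validated object mirrors what the Studio panel seems to offer: you change one value, rebuild the request, and compare outputs side by side.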

It’s a comprehensive setup. You can literally see the cause and effect of your changes in one unified view. Tweak the system prompt, and immediately see how it alters the AI's response in the test environment. It’s the tight feedback loop we’ve been missing.


Not Just One Chef: Multi-Model Support is Key

One of the first things that stood out to me in the docs was the mention of multi-model support. The screenshot shows GPT-4, but the platform also supports models like GPT-3.5-Turbo and Llama-2. This is huge.

Why? Because getting locked into one ecosystem is a massive risk. What if OpenAI's prices go up? What if a new open-source model like Llama suddenly outperforms everything else for your specific task? A platform that lets you experiment and deploy with different foundation models is not just convenient; it's a future-proof strategy. It gives you flexibility: you can use the big, powerful (and expensive) model for complex tasks and a smaller, faster model for simpler ones, all within the same workflow. That's smart business.
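Here is a rough sketch of the "big model for hard tasks, small model for easy ones" pattern. The routing rule (prompt length plus an explicit `needs_reasoning` flag) is a deliberately crude, hypothetical heuristic, and `Route`, `ROUTES`, and `pick_model` are illustrative names. The model names are simply the two OpenAI models mentioned above. None of this reflects routing that KitchenAI actually performs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    model: str
    max_prompt_chars: int  # crude stand-in for "task complexity"


# Hypothetical routing table, ordered from cheapest/fastest to most capable.
ROUTES = [
    Route("gpt-3.5-turbo", max_prompt_chars=2_000),
    Route("gpt-4", max_prompt_chars=10**9),
]


def pick_model(prompt: str, needs_reasoning: bool = False) -> str:
    """Send short, simple requests to the small model and everything else to the large one."""
    if needs_reasoning:
        # Tasks flagged as complex skip straight to the most capable model.
        return ROUTES[-1].model
    for route in ROUTES:
        if len(prompt) <= route.max_prompt_chars:
            return route.model
    # Anything that fits no route falls back to the most capable model.
    return ROUTES[-1].model
```

In practice you'd replace the length check with something closer to your workload, such as a task label or a cheap classifier, but the shape stays the same: an ordered list of models from cheapest to most capable.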

Some Dishes Are Still in the Oven

Let's be real for a moment. KitchenAI is still in its early stages. The interface clearly states it's in Beta (v0.9.2 as of this writing). This means you're getting in on the ground floor, which is exciting, but you should also expect some rough edges.

The navigation shows features like 'Team' and 'Analytics' are marked as 'Soon'. These are pretty critical for any serious business use case. Team collaboration is a must, and you absolutely need analytics to monitor performance and costs. Seeing them on the roadmap is reassuring, but it's something to be aware of if you're looking to deploy for a large team tomorrow.

It’s the classic beta tradeoff: you get early access to cool new tech, but you have to be patient as it fully matures. For solo developers or small teams looking to experiment, this is probably not a deal-breaker. For large enterprises, it might be a 'wait and see' situation.

What's the Price of Admission?

Ah, the big question. What does it cost? Well, right now, that's a bit of a mystery. I went looking for the pricing page, and... well, it seems they're still cooking that up too. The link I had led to a 404 page (props to Netlify for the friendly error message!).

This is completely normal for a platform in beta. They're likely still figuring out their pricing model. Will it be a subscription? Usage-based? A mix of both? My guess is we'll see a tiered system, perhaps with a generous free tier for experimentation and paid plans based on API calls and team features.

For now, the only way to get in is to Join the Waitlist on their site. If you're intrigued by what you see, I'd say it's worth putting your name down. You'll be the first to know when it opens up and what the cost structure looks like.

So, Should You Step into the Kitchen?

Despite being in beta, KitchenAI feels like it has a very clear sense of purpose. It’s not trying to be everything to everyone. It's laser-focused on one of the most significant pain points in applied AI: operationalizing prompts. It's building the tools to bridge the gap from idea to production.

The thoughtful UI of the Prompt Engineering Studio, the multi-model support, and the clear path to API deployment are all incredibly promising signs. It’s a tool built by people who seem to genuinely understand the developer workflow.

Is it perfect? No, it’s a beta. But is it one of the most interesting platforms I've seen in the AI development space this year? Absolutely. If you're a developer who has felt that frustration of having a great prompt stuck in a playground, you should definitely keep an eye on KitchenAI. It might just be the professional-grade kitchen you've been waiting for.

Frequently Asked Questions About KitchenAI

- What is KitchenAI in simple terms?

- KitchenAI is an online platform that helps developers test, refine, and perfect their AI prompts (like instructions for ChatGPT) and then easily turn them into usable APIs for their applications.

- Which AI models does KitchenAI support?

- It supports several leading AI models, including OpenAI's GPT-4 and GPT-3.5-Turbo, and Meta's open-source Llama-2 model. This allows developers to choose the best model for their specific needs.

- Is KitchenAI free to use?

- Currently, KitchenAI is in an early access beta, and pricing information has not been publicly released. You can join a waitlist on their website to get access and be notified about future pricing plans.

- How is this different from the OpenAI Playground?

- While the OpenAI Playground is great for experimenting with prompts, KitchenAI is designed to take the next step. Its main advantage is the ability to directly deploy your finished, version-controlled prompt as a production-ready API, something that takes considerably more work to set up on your own.

- What is prompt engineering?

- Prompt engineering is the art and science of designing effective inputs (prompts) to get desired outputs from an AI model. It involves crafting clear instructions, providing context, and refining the prompt based on the AI's responses to achieve a specific goal.

- When will the full version with all features be available?

- There's no official release date for the full version. The current beta is v0.9.2, which hints that a 1.0 release could be on the horizon, though the team hasn't announced one. Features like Team management and Analytics are listed as 'Soon', suggesting they are a priority for the development team.

Reference and Sources

For further reading and to check out the technologies mentioned:

- Information on GPT-4 can be found at the OpenAI website.

- Details about the Llama family of models are available from Meta AI.

- Netlify's support guide explains how to troubleshoot "Page not found" (404) errors on Netlify-hosted sites.