We all know the feeling. You've just built this amazing AI-powered feature, you're watching the user numbers climb, and then you get the bill from OpenAI or Anthropic. Oof. That feeling in the pit of your stomach when you realize your genius idea is costing more than a small car to run. API bills are the silent killer of so many great AI projects, forcing developers to make tough choices between innovation and, well, solvency.

For months, the community has been buzzing about the need for smarter ways to manage these costs. We've been cobbling together our own solutions, writing complex logic to switch between `gpt-4-turbo` for heavy lifting and maybe a cheaper model for simple summarization. It’s a mess. So when a tool pops up that claims to solve this exact problem, my ears perk up. Enter Inferkit AI.

So, What Exactly is Inferkit AI Supposed to Be?

On paper, Inferkit AI is the answer to a developer's prayer. It’s an LLM (large language model) router. Think of it as an intelligent, cost-aware traffic cop for your AI requests. Instead of hitting the OpenAI API directly, you send your request to Inferkit. It then inspects the request and routes it to the most cost-effective model that can handle the job, whether that's an OpenAI model, an Anthropic model, Meta's Llama, or something else.

The idea is beautifully simple. One API endpoint to manage, potentially massive cost savings, and the ability to experiment with different models without rewriting your entire backend. It’s a powerful proposition, especially for startups and indie hackers who live and die by their burn rate.

The Big Idea: A Universal Remote for LLMs

The core promise here is all about efficiency and cost-effectiveness. In a world where a dozen different AI models all excel at slightly different things, being locked into one ecosystem feels… dated. Inferkit AI wants to be your universal remote.

Imagine your app needs to summarize an article. You don't necessarily need the horsepower (and price tag) of GPT-4 for that. A model like Claude 3 Haiku or Llama 3 8B might do it just as well for a fraction of the cost. Inferkit is designed to make that switch for you, automatically. And right now, during their beta phase, they're reportedly offering a hefty 50% discount. That’s not just a small saving; that’s a game-changer for budget-conscious development.

The best part? It's built to be a drop-in replacement for the OpenAI API. In theory, you just change the base URL, keep your existing code structure, and you're good to go. No steep learning curve. No weeks spent refactoring. That, my friends, is how you get developers to pay attention.
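
To make the "just change the base URL" idea concrete, here is a minimal sketch using only Python's standard library. It builds an OpenAI-style chat completion request without sending it. The router URL and both API keys are placeholders I made up for illustration; I couldn't confirm Inferkit's real endpoint, so treat `ROUTER_BASE_URL` as an assumption.

```python
import json
import urllib.request

OPENAI_BASE_URL = "https://api.openai.com/v1"
# Hypothetical placeholder: the real Inferkit endpoint is not known here.
ROUTER_BASE_URL = "https://router.example.com/v1"


def build_chat_request(base_url: str, api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Same payload, same code path; only the base URL and key differ.
direct = build_chat_request(OPENAI_BASE_URL, "sk-placeholder", "gpt-4-turbo", "Summarize this article.")
routed = build_chat_request(ROUTER_BASE_URL, "router-key-placeholder", "gpt-4-turbo", "Summarize this article.")
```

If the compatibility claim holds, the request body is identical in both cases, which is what would make the switch cheap to try and cheap to undo.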

Okay, A Quick Reality Check...

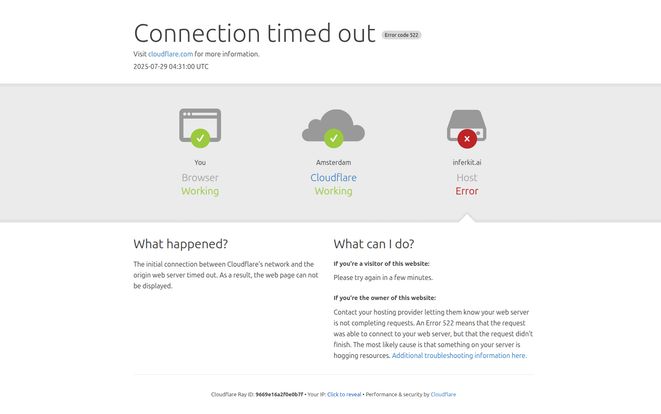

So, naturally, I was excited to get my hands on it. I rolled up my sleeves, fired up a new browser tab, and navigated to their site to sign up. And I was greeted by… well, this:

A Cloudflare 522 error. Connection timed out. For the non-technical folks, that means my browser reached Cloudflare (the service that sits in front of their website), but Cloudflare couldn't establish a connection to Inferkit's actual server before timing out. I tried again a few minutes later. Same thing.

Now, let's be fair. The platform is explicitly in beta. Hiccups, downtime, and bugs are part of the territory. It's the wild west. I've seen bigger companies have worse outages. But it does inject a healthy dose of caution into my initial excitement. An API router needs to be rock-solid. If it's down, your entire AI functionality is down with it. It's a stark reminder that while the idea is fantastic, the execution might still be a work in progress.

Assuming the Lights Are On: Key Features

Let's give them the benefit of the doubt and assume the 522 error was just a temporary blip. What does Inferkit AI bring to the table when it's up and running?

- Multi-LLM Support: This is the main event. Access to OpenAI, Anthropic, and Llama models through a single endpoint is a huge win for flexibility.

- Cost-Effective Routing: The platform's 'secret sauce' is its ability to route your requests to the cheapest model that can handle the task, drastically cutting down on your API spend.

- OpenAI API Compatibility: This is huge. The fact that it's designed to be a drop-in replacement means implementation should be incredibly simple for anyone already using OpenAI's tools. Big plus.

- Free Quota for New Users: They offer a free quota to let you kick the tires and see if it works for you before you commit. Always a welcome gesture.
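
I have no visibility into how Inferkit actually decides where a request goes, so the following is only a sketch of the general "cheapest model that can handle the task" idea. The capability scale, the task-to-capability mapping, and every price are invented for illustration and are not real quotes from any provider.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelOption:
    name: str
    capability: int           # 1 = simple tasks, 3 = hardest tasks (made-up scale)
    usd_per_1k_tokens: float  # illustrative price, not a real quote


# Hypothetical catalog: model names come from the article, numbers are placeholders.
CATALOG = [
    ModelOption("llama-3-8b", capability=1, usd_per_1k_tokens=0.0002),
    ModelOption("claude-3-haiku", capability=2, usd_per_1k_tokens=0.0005),
    ModelOption("gpt-4-turbo", capability=3, usd_per_1k_tokens=0.01),
]

# Hypothetical mapping from task type to the minimum capability it needs.
REQUIRED_CAPABILITY = {"summarize": 1, "extract": 2, "reason": 3}


def pick_model(task: str, catalog=CATALOG) -> ModelOption:
    """Return the cheapest model whose capability meets the task's requirement."""
    needed = REQUIRED_CAPABILITY.get(task, 3)  # unknown tasks go to the strongest tier
    eligible = [m for m in catalog if m.capability >= needed]
    if not eligible:
        raise ValueError(f"no model can handle task {task!r}")
    return min(eligible, key=lambda m: m.usd_per_1k_tokens)


print(pick_model("summarize").name)
print(pick_model("reason").name)
```

A real router would presumably classify the request itself rather than rely on a hand-supplied task label, but the selection step (filter by capability, then take the minimum price) is the part that saves money.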

The Good, The Bad, and The Beta

The Upside: Why I'm Still Watching

Despite the connection issue, I'm not writing Inferkit AI off. The core concept is just too strong. If they can nail the reliability, a service that simplifies multi-model AI development and slashes costs is something the entire industry is crying out for. For a small team or a solo developer, this could be the difference between a project launching or getting shelved due to budget concerns. The promise of a 50% beta discount is a massive carrot on a stick.

The Downsides: Potential Roadblocks

The biggest red flag is, obviously, reliability. The beta tag explains it, but doesn't entirely excuse it. I wouldn't bet my production application on it just yet. There's also the question of what happens after the beta. Will the pricing remain competitive? And because it's a middleman, you are introducing another point of failure and adding reliance on their engineering. It's a trade-off between convenience and control.
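
One way to soften the extra point of failure is to keep a direct path to a provider as a fallback. The sketch below shows the pattern with stand-in functions instead of real HTTP calls; the names `via_router` and `via_provider_directly` are hypothetical, and the simulated timeout simply mirrors the 522 I ran into.

```python
from typing import Callable, Sequence


class AllBackendsFailed(RuntimeError):
    """Raised when every backend in the chain has failed."""


def call_with_fallback(prompt: str, backends: Sequence[Callable[[str], str]]) -> str:
    """Try each backend in order and return the first successful response."""
    errors = []
    for backend in backends:
        try:
            return backend(prompt)
        except Exception as exc:  # in real code, catch only network/timeout errors
            errors.append(f"{getattr(backend, '__name__', repr(backend))}: {exc}")
    raise AllBackendsFailed("; ".join(errors))


# Stand-in backends for illustration; real ones would make HTTP calls.
def via_router(prompt: str) -> str:
    raise TimeoutError("522: connection timed out")


def via_provider_directly(prompt: str) -> str:
    return f"direct answer to: {prompt}"


result = call_with_fallback("ping", [via_router, via_provider_directly])
print(result)
```

The catch is that the fallback path bills at the provider's normal rate, so you give up the savings exactly when the router is down. That still beats your feature going dark.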

Who Should Give Inferkit AI a Try?

So who is this for, right now? I'd say it's perfect for a few groups:

- Indie Hackers & Solopreneurs: If you're building a project on your own dime, every penny counts. The potential savings here are enormous.

- Startups in Development: For teams building their MVP, this is a great way to keep development costs low before you have revenue coming in.

- Developers Experimenting with AI: If you just want to play with different models without signing up for five different services and managing a dozen API keys, this could be a fantastic sandbox.

I probably wouldn't recommend it for a mission-critical, enterprise-level application that requires 99.999% uptime. Not yet, anyway.

Frequently Asked Questions about Inferkit AI

- What is an LLM router?

- An LLM router is a service that acts as an intermediary for your AI requests. Instead of sending a request directly to a specific model (like GPT-4), you send it to the router, which then decides the best (often cheapest or fastest) model to forward the request to based on pre-defined rules or its own logic.

- Is Inferkit AI free to use?

- It appears to have a freemium model. They offer a free quota for new users to test the service. Beyond that, it's a paid service, but they are offering a 50% discount during their beta phase. Specific pricing details weren't available at the time of writing.

- What AI models does Inferkit AI support?

- According to their information, it supports major models from leading providers, including OpenAI (the GPT series), Anthropic (the Claude series), and open-weight models like Llama.

- What does a 'beta' status mean for a tool like this?

- Beta means the product is still in a testing phase. Users can expect to encounter bugs, performance issues, or even occasional downtime (like the 522 error I saw). It's a chance to try a new product early, often at a discount, but it comes with the understanding that it's not a fully polished, final product.

- Is the 'Connection timed out' error a deal-breaker?

- Not necessarily, but it is a major concern. For a hobby project, it's an annoyance. For a business, consistent uptime is non-negotiable. It's something to monitor closely if you decide to try the service.

My Final Take: Cautiously Optimistic

So, where do I land on Inferkit AI? I'm planting my flag firmly in the "cautiously optimistic" camp. The idea is a 10/10. It solves a real, expensive problem that I and many other developers face every single day. The approach, a simple drop-in API, is smart.

The execution, however, seems to have some early-stage turbulence. This isn't a condemnation, just an observation. Almost every great tool has a rocky start. If the team behind Inferkit AI can stabilize the platform and deliver on their promise of reliable, cost-effective LLM routing, they won't just have a good product; they'll have an indispensable tool for the modern AI developer. I’ll definitely be keeping an eye on this one. For now, maybe just use it for your side projects.

Reference and Sources

- Inferkit AI Official Website (Note: Website was experiencing connection issues at time of publication)

- Cloudflare Documentation on Error 522