Building applications on top of Large Language Models feels like the wild west all over again. One minute you’re a genius, your agent is spitting out pure gold. The next, it’s hallucinating like it just came back from a Grateful Dead concert, and you have absolutely no idea why. We're all building the plane while flying it, and the cockpit is just a mess of scattered tools, Google Docs for prompts, and a whole lot of prayer.

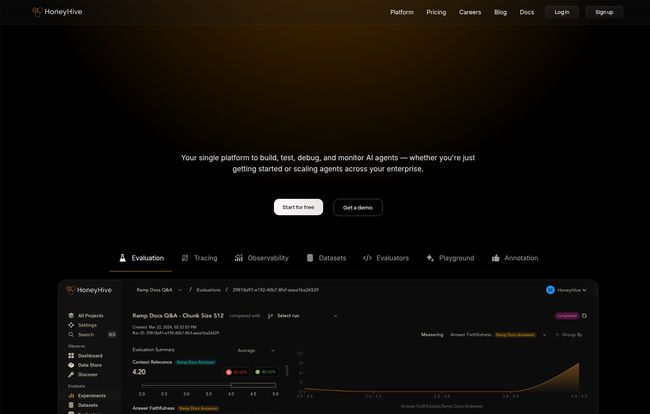

I’ve seen dozens of tools pop up claiming to be the “all-in-one” solution for this chaos. My skepticism meter is pretty well-calibrated by now. So when I came across HoneyHive, I was prepared for more of the same. But... I was pleasantly surprised. This one feels different. It feels like it was built by people who have actually been in the trenches, who've felt the pain of a production model going sideways at 3 AM.

So, What Problem is HoneyHive Actually Solving?

The core issue in LLM development isn't a lack of power; it's a lack of control and insight. In a word, it's an observability problem. We’ve got our code in Git, our infrastructure in Terraform, but our prompts? Our evaluation datasets? Our model performance history? It’s often a horrifying mix of spreadsheets, Slack messages, and someone's local Jupyter notebook. It’s not scalable, and it’s definitely not collaborative.

HoneyHive steps in as a sort of mission control for your AI applications. It’s an LLMOps platform that aims to unify the entire lifecycle: testing, debugging, monitoring, and optimizing. And crucially, it’s built for the whole team—not just the hardcore engineers. The goal is to get your product managers, domain experts, and engineers speaking the same language and working from the same dashboard. A noble goal, if you ask me.

A Closer Look at the HoneyHive Features

Okay, let's get into the meat and potatoes. What does it actually do? I spent some time poking around, and a few areas really stood out.

Beyond 'Does It Work?': Systematic AI Evaluation

"The new prompt feels better." How many times have we heard that? It's the bane of my existence. Gut feelings don't scale. HoneyHive tackles this head-on with a structured evaluation framework. You can build golden datasets (curated inputs paired with known-good outputs), run your agent against them, and systematically measure quality. This means you can actually do regression testing for your AI. Think about that. You change a prompt, and you can see, with data, if it broke something else downstream. It’s like switching from just tasting your food to having a full nutritional analysis done. Suddenly, your choices are informed, not just instinctual.

Finding the Ghost in the Machine with Tracing

When an LLM call fails, trying to figure out why can feel impossible. Was it the prompt? The context? The model itself having a bad day? HoneyHive’s distributed tracing gives you an end-to-end view of your agent’s request. It's like a black box flight recorder for your AI. You can see the full context, the chain of thought, the tool usage, and exactly where things went wrong. For any developer who has spent hours just trying to reproduce a single weird output, this is an absolute godsend.
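
If you've never worked with tracing, the core idea fits in a few lines: every step of a request records a "span", and each span remembers its parent. The sketch below uses only the Python standard library. The `Tracer` class and its field names are hypothetical, made up for this example, and have nothing to do with HoneyHive's actual API or any real tracing library.

```python
import time
import uuid
from contextlib import contextmanager


class Tracer:
    """Collects spans for one request so a failure can be located afterwards."""

    def __init__(self) -> None:
        self.trace_id = uuid.uuid4().hex
        self.spans: list[dict] = []   # finished spans, in completion order
        self._stack: list[str] = []   # ids of the spans that are currently open

    @contextmanager
    def span(self, name: str, **attributes):
        span = {
            "trace_id": self.trace_id,
            "span_id": uuid.uuid4().hex[:8],
            "parent_id": self._stack[-1] if self._stack else None,
            "name": name,
            "attributes": attributes,
            "status": "ok",
            "error": None,
        }
        self._stack.append(span["span_id"])
        start = time.perf_counter()
        try:
            yield span
        except Exception as exc:
            # Record the failure on this span, then let it propagate to the parent.
            span["status"] = "error"
            span["error"] = repr(exc)
            raise
        finally:
            span["duration_ms"] = (time.perf_counter() - start) * 1000
            self._stack.pop()
            self.spans.append(span)


def failing_tool(query: str) -> str:
    # Stand-in for a tool call that breaks in production.
    raise TimeoutError("search backend timed out")


tracer = Tracer()
try:
    with tracer.span("agent_request", user_query="weather in Oslo"):
        with tracer.span("retrieve_context"):
            pass  # a retrieval step would go here
        with tracer.span("tool_call", tool="search"):
            failing_tool("weather in Oslo")
except TimeoutError:
    pass

failed = [s["name"] for s in tracer.spans if s["status"] == "error"]
print("failed spans, innermost first:", failed)
```

Because spans finish innermost first, the first failed span in the list is the step that actually broke (here, the tool call), while the outer request span just inherits the error.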

Keeping an Eye on the Bottom Line: Cost and Performance Monitoring

Let's not forget, these models cost money. A rogue agent making too many calls or using an expensive model can burn through your budget with terrifying speed. HoneyHive provides a dashboard to monitor cost, latency, and token usage. But it goes deeper, also tracking critical quality metrics in production, like whether your model's outputs are grounded in the provided context or if it's generating toxic content. This isn't just about whether the app is up; it's about whether the app is good and safe and not bankrupting you.
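
The arithmetic behind a cost dashboard is simple enough to sketch. Everything below is an assumption for illustration: the model names, the per-token prices, and the `CallRecord` and `summarize` helpers are invented, not taken from HoneyHive or any provider's price list.

```python
from dataclasses import dataclass

# Hypothetical prices in USD per 1,000 tokens. Real prices differ by provider and model.
PRICES_PER_1K = {
    "small-model": {"input": 0.0005, "output": 0.0015},
    "large-model": {"input": 0.01, "output": 0.03},
}


@dataclass
class CallRecord:
    model: str
    input_tokens: int
    output_tokens: int
    latency_ms: float


def call_cost(record: CallRecord) -> float:
    """Cost of one call: tokens / 1000 * price, summed over input and output."""
    price = PRICES_PER_1K[record.model]
    return (
        record.input_tokens / 1000 * price["input"]
        + record.output_tokens / 1000 * price["output"]
    )


def summarize(records: list[CallRecord], budget_usd: float) -> dict:
    """Aggregate cost, token usage, and latency, and flag a blown budget."""
    total_cost = sum(call_cost(r) for r in records)
    return {
        "total_cost_usd": total_cost,
        "total_tokens": sum(r.input_tokens + r.output_tokens for r in records),
        "avg_latency_ms": (
            sum(r.latency_ms for r in records) / len(records) if records else 0.0
        ),
        "over_budget": total_cost > budget_usd,
    }


calls = [
    CallRecord("small-model", input_tokens=2000, output_tokens=1000, latency_ms=400.0),
    CallRecord("large-model", input_tokens=1000, output_tokens=1000, latency_ms=1200.0),
]
report = summarize(calls, budget_usd=0.03)
print(report)
```

With these made-up prices, the single large-model call costs sixteen times as much as the small-model call despite using fewer tokens, which is exactly the kind of thing that's invisible until you track it per call.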

The Collaborative Core: Prompts and People

This might be my favorite part. The days of the 'prompt engineer' being a lone wizard are over. Building great AI products is a team sport. HoneyHive provides a centralized prompt management studio. Your PM can go in and tweak the wording of a prompt, a domain expert can refine the examples, and the engineer can hook it into the production system—all in one place. It gets the prompts out of spreadsheets and puts them into a version-controlled, collaborative environment. The amount of friction this removes is hard to overstate.
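
For a feel of what "version-controlled prompts" means in practice, here's a minimal in-memory sketch. The `PromptRegistry` class, its methods, and the prompt text are hypothetical and only illustrate the concept; HoneyHive's studio is a hosted product with its own interface.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class PromptVersion:
    version: int
    template: str
    author: str
    note: str


class PromptRegistry:
    """In-memory store that keeps every version of every named prompt."""

    def __init__(self) -> None:
        self._history: dict[str, list[PromptVersion]] = {}

    def commit(self, name: str, template: str, author: str, note: str = "") -> PromptVersion:
        """Save a new version; earlier versions are never overwritten."""
        versions = self._history.setdefault(name, [])
        saved = PromptVersion(len(versions) + 1, template, author, note)
        versions.append(saved)
        return saved

    def get(self, name: str, version: Optional[int] = None) -> PromptVersion:
        """Fetch a specific version (1-based), or the latest when none is given."""
        versions = self._history[name]
        if version is None:
            return versions[-1]
        if not 1 <= version <= len(versions):
            raise KeyError(f"{name} has no version {version}")
        return versions[version - 1]


registry = PromptRegistry()
registry.commit(
    "support_reply",
    "Answer the customer: {question}",
    author="engineer",
    note="initial version",
)
registry.commit(
    "support_reply",
    "You are a friendly support agent. Answer briefly: {question}",
    author="pm",
    note="warmer tone",
)

# The application always renders the latest version...
latest = registry.get("support_reply")
rendered = latest.template.format(question="Where is my order?")
print(f"v{latest.version} by {latest.author}: {rendered}")

# ...but any earlier version stays available for comparison or rollback.
previous = registry.get("support_reply", version=1)
```

The point is the append-only history: the PM's edit becomes version 2 rather than silently replacing version 1, so you always know who changed what and can roll back.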

The Big Question: HoneyHive Pricing and Plans

Alright, so how much does this mission control cost? The pricing structure is refreshingly straightforward, which I appreciate.

| Plan | Best For | Cost |
|---|---|---|
| Developer | Individuals and small projects | Free (no credit card required) |
| Enterprise | Scaling teams and large organizations | Custom (Contact Sales) |

The Free Developer plan is genuinely useful. It’s not just a demo. You can get started and do real work, which is fantastic for indie developers or small teams trying to get an MVP off the ground. Yes, there are usage limits, but that's to be expected. The fact that you don't need a credit card is a huge green flag for me. The Enterprise plan is the classic "let's chat" model, which makes sense for larger teams that need things like dedicated cloud hosting, self-hosting options, premium support, and custom integrations.

The Good, The Bad, and The Realistic

No tool is perfect. In my experience, the biggest win for HoneyHive is its unified platform. Not having to stitch together five different services is a massive productivity boost. The flexibility is also a huge plus—supporting any model, framework, or architecture means you aren’t locked into a specific ecosystem.

On the flip side, a platform this comprehensive will have a learning curve. The docs mention some initial setup and integration effort, and that’s just being honest. You don't just flip a switch and have perfect observability. You need to invest a little time to get it wired up correctly. Also, as with any freemium model, some of the more advanced features are reserved for the Enterprise plan. That's the business model, and it's a fair one, but something to be aware of as you scale.

Who Should Actually Use HoneyHive?

I see a few clear groups that would benefit here:

- Scaling Startups: If you've moved past the prototype stage and are serious about building a reliable AI product, this is for you. This is the operational rigor you need.

- Large Enterprises: The security, collaboration, and flexible hosting options (especially self-hosting) are exactly what corporate IT and security teams look for.

- Indie Hackers & Solo Devs: The free tier is your best friend. It gives you enterprise-grade monitoring and evaluation for your passion project without the enterprise price tag.

Frequently Asked Questions about HoneyHive

- What is LLMOps and why do I need it?

- LLMOps is like DevOps but for Large Language Models. It’s a set of practices for managing the entire lifecycle of an AI application, from development and testing to deployment and monitoring. You need it to build reliable, scalable, and controllable AI products instead of just unpredictable prototypes.

- How does HoneyHive compare to open-source tools?

- Open-source tools are powerful but often require you to piece together and maintain a complex stack. HoneyHive provides a single, managed platform where evaluation, observability, and prompt management are already integrated. It's a trade-off between customization/cost and convenience/speed.

- Can I use HoneyHive with any LLM?

- Yes. The platform is designed to be model-agnostic, meaning you can use it with models from OpenAI, Anthropic, Google, open-source models like Llama, or even your own fine-tuned models.

- Is HoneyHive difficult to set up?

- There's an initial setup phase, as with any observability tool. However, it integrates with standards like OpenTelemetry and provides a REST API, which should make it familiar for many engineering teams. It's not a zero-click setup, but it’s a well-trodden path.

- How does the collaboration feature work?

- It provides a shared workspace where different team members can log in. For example, a product manager can edit prompts in the Prompt Studio, an engineer can analyze traces from a production failure, and a data scientist can curate evaluation sets—all within the same platform.

Final Thoughts

Look, the era of 'winging it' with LLMs is coming to a close. To build real, lasting products, we need real, professional tools. HoneyHive is a serious contender for being a core part of that modern AI stack. It brings a much-needed layer of structure, collaboration, and insight to a field that can often feel like pure alchemy.

It’s not a magic wand, but it is a very, very good map and compass. For teams struggling to get a handle on their AI development lifecycle, I think it’s absolutely worth a look. I'm excited to see how platforms like this one continue to mature and shape the future of applied AI. Things are about to get a lot more stable, and for that, I am truly grateful.