How many of you currently have at least three browser tabs open, each with a different AI chatbot? One for ChatGPT, one for Gemini, maybe one for Claude just to keep things spicy. It's the modern-day version of channel surfing, a constant toggle-of-war to see who gives the best answer. I do it. You probably do it. It’s how we find the gold in this generative AI gold rush.

My workflow is a chaotic dance. I'll throw a prompt for a blog outline into ChatGPT (running GPT-4), then toss the same prompt to Gemini to see if it catches a different angle. Sometimes I feel like a mad scientist, or maybe just a really inefficient project manager. “What if,” I’ve often thought, “there was just one screen? One prompt box that sent my request into a digital Colosseum and let the AI titans fight it out, side-by-side?”

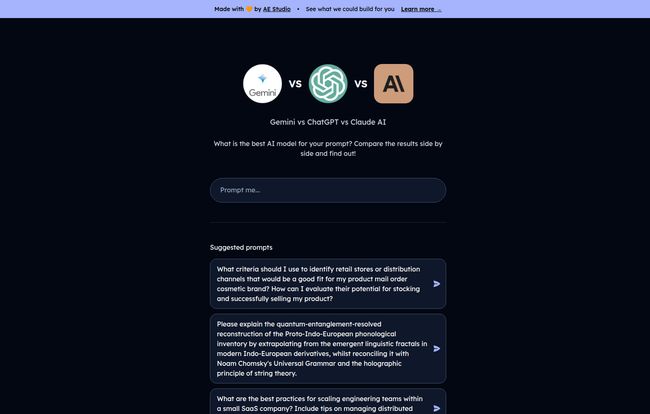

Well, a little while ago, I stumbled upon exactly that. A mirage in the desert? Maybe. A tool that promised to do the one thing I, and probably many of you, desperately wanted: a direct, no-nonsense comparison of the big AI models. Specifically, it was billed as a Gemini vs ChatGPT vs Claude arena. A simple idea, but a brilliant one.

The AI Arms Race and Why We Need a Referee

It feels like every week there's a new update, a new model, a new claim to the throne. OpenAI drops a new GPT version, and the internet goes wild. Then Google fires back with a souped-up Gemini that’s integrated into everything from search to your toaster. And let's not forget Anthropic’s Claude, the thoughtful, poetic one in the corner that often drops surprising bombshells of quality.

For us in the trenches—the SEOs, the content creators, the developers, the marketers—this isn't just academic. It's practical. The choice of model can be the difference between generic, soulless copy and a piece of content that actually connects with a human. It's the difference between buggy code and a clean script. Each model has its own… personality. Its own quirks. Its own strengths. Trying to keep track of it all is a full-time job.

This is precisely the problem a tool like the one I found, apparently built by a team at AE Studio, aimed to solve. No more copying and pasting. No more tab-flipping. Just one prompt, multiple simultaneous answers. The dream.

A Glimpse Into an AI Comparator

The interface I saw was beautifully simple. A clean, dark-mode layout with the logos of the contenders lined up: Gemini, ChatGPT, Claude. Below them, a single prompt box and a few intriguing suggestions, like:

“What are the best practices for coding engineering teams within a small SaaS company? Include tips on managing distributed teams, ensuring code quality, and fostering innovation.”

That’s a meaty, real-world prompt. The kind of thing you’d actually ask. The promise was that once you hit enter, you’d get three distinct responses, one from each AI, laid out for easy comparison. It’s not just about picking a “winner,” but about seeing the different paths each AI takes to get to an answer.

The Main Players in the Arena

To appreciate a tool like this, you have to know the fighters. Google's Gemini Pro is often seen as the data-crunching champion, with its direct line to the internet and Google's massive index. It's fantastic for timely topics and factual queries. Then there's OpenAI's ChatGPT, the model that started this whole craze. I've always found it to be the more creative wordsmith, a bit of a storyteller. And in the other corner, Anthropic's Claude AI, which prides itself on being more cautious and thoughtful, often producing longer, more structured, and arguably safer responses. Seeing them all tackle the same task is an education in itself.

What Makes a Good AI Comparison Anyway?

The tool I saw mentioned “performance metrics,” which got me thinking. What metrics even matter? Sure, response time is one thing. But who cares if an AI gives you a bad answer really fast? It’s the quality that counts. I’ve always believed the human element is the most important metric.

If I were building my own ideal comparison tool, here’s what I’d want to see beyond the raw text output:

| Metric | Why It Matters |
|---|---|
| Word/Token Count | Shows the verbosity of each model. Is it concise or does it ramble? |
| Readability Score | A Flesch-Kincaid or similar score would tell you how complex the language is. Crucial for SEO content. |
| Keyword Density | For SEO prompts, seeing which model naturally incorporates keywords better would be a game-changer. |
| Fact-Check Flags | A dream feature! Imagine a little flag if the AI mentions a stat that can't be verified. A guy can wish. |
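
Three of those metrics are easy enough to approximate yourself. Here's a rough Python sketch of how I'd do it. To be clear, this is not how the tool worked (I never saw its internals). The names `count_syllables` and `text_metrics` are my own inventions, the syllable counter is a crude vowel-group heuristic, and I'm counting plain words rather than model-specific tokens, so treat the numbers as ballpark figures.

```python
import re


def count_syllables(word: str) -> int:
    """Estimate syllables by counting vowel groups (a heuristic, not a dictionary lookup)."""
    lowered = word.lower()
    count = len(re.findall(r"[aeiouy]+", lowered))
    # Treat a trailing "e" as silent ("make" -> 1), except for "-le" endings ("table" -> 2).
    if lowered.endswith("e") and not lowered.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)


def text_metrics(text: str, keyword: str) -> dict:
    """Return word count, sentence count, Flesch-Kincaid grade, and keyword density (%)."""
    words = re.findall(r"[A-Za-z']+", text)
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    n_words = len(words)
    if n_words == 0:
        return {"word_count": 0, "sentence_count": 0,
                "fk_grade": 0.0, "keyword_density": 0.0}

    n_sentences = max(len(sentences), 1)
    n_syllables = sum(count_syllables(w) for w in words)

    # Flesch-Kincaid grade level formula.
    fk_grade = 0.39 * (n_words / n_sentences) + 11.8 * (n_syllables / n_words) - 15.59

    # Keyword density here means: phrase occurrences per 100 words (one common definition).
    density = 0.0
    if keyword.strip():
        pattern = r"\b" + re.escape(keyword.lower()) + r"\b"
        hits = len(re.findall(pattern, text.lower()))
        density = 100.0 * hits / n_words

    return {"word_count": n_words, "sentence_count": n_sentences,
            "fk_grade": fk_grade, "keyword_density": density}


if __name__ == "__main__":
    sample = "AI models differ. Compare AI models side by side."
    print(text_metrics(sample, "AI models"))
```

Run it once per model response and you have a small table of your own. The fact-check flag, sadly, stays on the wish list.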

But even with all that data, the final call comes down to you. Does the response feel right? Does it match the tone you were aiming for? That’s something no metric can fully capture.

The Curious Case of the 404 Error

Here’s the twist in the tale. When I went to find the tool again to write this, I was greeted by a dreaded 404 page. “This page could not be found.” A digital ghost. A fantastic idea that, for now, seems to have vanished.

Does this mean it was a failure? I don’t think so. In the hyper-speed world of AI development, projects pop up and disappear all the time. Sometimes they are proofs-of-concept. Sometimes they get acquired and integrated into something bigger. Sometimes the servers just go down. My hope is that it’s being retooled, perhaps to add even more models or features. Or maybe it served its purpose and its creators moved on to the next big thing. It's a stark reminder of how fluid this space is. What’s here today might be a 404 tomorrow.

How to Compare AI Models Without a Magic Tool

So, with our magic comparison tool potentially out of commission, are we back to the stone age of tab-flipping? Pretty much, but we can be smart about it. The concept is more important than the specific platform.

The best way is still the most straightforward: open two windows side-by-side, put the same prompt into both, and critically analyze the results. It's not sexy, but it works. For those a bit more technical, you can use API playgrounds from OpenAI and Google to do more structured tests. And of course, platforms like Poe allow you to talk to different bots in one interface, which is a step in the right direction.
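
If you're comfortable with a little scripting, the "one prompt, several answers" idea can be sketched in a few lines of Python. This is only a skeleton under my own assumptions: `compare`, `fake_model_a`, and `fake_model_b` are hypothetical names, and the two fake models are stand-ins that don't call any real service. In practice each function would wrap whichever vendor SDK or API you actually use.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict

# A "model" here is just any function that takes a prompt and returns text.
ModelFn = Callable[[str], str]


def compare(prompt: str, models: Dict[str, ModelFn]) -> Dict[str, str]:
    """Send the same prompt to every model concurrently and collect replies by name."""
    results: Dict[str, str] = {}
    with ThreadPoolExecutor(max_workers=max(len(models), 1)) as pool:
        futures = {name: pool.submit(fn, prompt) for name, fn in models.items()}
        for name, future in futures.items():
            try:
                results[name] = future.result()
            except Exception as exc:
                # One failing model should not sink the whole comparison.
                results[name] = f"[error: {exc}]"
    return results


# Hypothetical stand-ins; replace these with real API wrappers of your own.
def fake_model_a(prompt: str) -> str:
    return f"A says: {prompt.upper()}"


def fake_model_b(prompt: str) -> str:
    return f"B says: {prompt[::-1]}"


if __name__ == "__main__":
    answers = compare("Outline a blog post about AI tools",
                      {"model_a": fake_model_a, "model_b": fake_model_b})
    for name, text in answers.items():
        print(f"--- {name} ---\n{text}\n")
```

Treating each model as a plain function keeps the comparison loop independent of any one provider, so adding a fourth contender is just one more dictionary entry.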

A Quick and Dirty Comparison Framework

When you're doing your manual comparisons, don't just skim. Look for specific things. Assess for clarity and coherence; does the output actually make sense and flow logically? Check for factual accuracy, because we all know how prone these things are to making up facts, or “hallucinating.” Consider the tone and style. If you asked for a witty and sarcastic response, did you get it, or did you get a dry, academic paragraph? Finally, check for completeness and originality. Did it answer every part of your prompt, and did it offer a unique take or just spit back the top 10 search results in paragraph form?
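
If you want to keep yourself honest across a batch of prompts, you can turn that checklist into a tiny scoring sheet. The sketch below is just one way to do it. The five criteria come straight from the paragraph above, but the 1-to-5 scale, the equal default weights, and the names `rubric_score` and `rank_responses` are my own assumptions. You still supply the scores by reading the outputs yourself.

```python
from typing import Dict, List, Optional, Tuple

CRITERIA = ("clarity", "accuracy", "tone", "completeness", "originality")


def rubric_score(scores: Dict[str, int],
                 weights: Optional[Dict[str, float]] = None) -> float:
    """Weighted average of human-assigned 1-5 scores across the five criteria."""
    weights = weights or {c: 1.0 for c in CRITERIA}
    for criterion in CRITERIA:
        if criterion not in scores:
            raise ValueError(f"missing score for {criterion!r}")
        if not 1 <= scores[criterion] <= 5:
            raise ValueError(f"{criterion!r} must be between 1 and 5")
    total_weight = sum(weights.get(c, 1.0) for c in CRITERIA)
    weighted_sum = sum(scores[c] * weights.get(c, 1.0) for c in CRITERIA)
    return weighted_sum / total_weight


def rank_responses(sheets: Dict[str, Dict[str, int]],
                   weights: Optional[Dict[str, float]] = None) -> List[Tuple[str, float]]:
    """Return (model name, score) pairs, best first."""
    scored = [(name, rubric_score(s, weights)) for name, s in sheets.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    # Example scores are made up purely to show the shape of the input.
    sheets = {
        "model_a": {"clarity": 4, "accuracy": 3, "tone": 5,
                    "completeness": 4, "originality": 3},
        "model_b": {"clarity": 5, "accuracy": 4, "tone": 3,
                    "completeness": 4, "originality": 4},
    }
    # Weighting accuracy double is a personal preference, not a standard.
    print(rank_responses(sheets, weights={"accuracy": 2.0}))
```

The number it spits out is only as good as your reading of each response, which is rather the point.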

Frequently Asked Questions about AI Model Comparison

- What is the best AI for SEO content?

- It depends! I've found Gemini is great for keyword research and topical outlines because of its connection to Google Search. ChatGPT often excels at drafting the actual prose and creative meta descriptions. The best approach is to use them together.

- Is Gemini better than ChatGPT?

- That's the million-dollar question. “Better” is subjective. Gemini is often superior for real-time information and data analysis. ChatGPT, particularly GPT-4, often has a slight edge in nuanced, creative, and long-form writing. Your best bet is to test them on prompts relevant to your work.

- Why would a tool compare different AI models?

- Efficiency and quality. It saves you the time of toggling between windows and allows for an immediate, side-by-side analysis of tone, accuracy, and style. This helps you pick the best possible output for your specific task.

- Are these AI comparison tools free?

- The one I found appeared to be free, with no pricing information available. Many tools in the AI space start as free betas to attract users. This can change, but for now, many foundational tools can be used at no cost.

- What is prompt engineering?

- Prompt engineering is the art and science of crafting the perfect input (prompt) to get the desired output from an AI. It involves being specific, providing context, defining the desired format, and iterating. Better prompts lead to better comparisons.

- Can I trust the accuracy of AI models?

- No, not 100%. Always, always, always fact-check any critical information, data, or statistics provided by an AI. They are known to hallucinate, so treat them as a highly skilled but sometimes unreliable assistant.

Final Thoughts on the AI Gauntlet

Whether this specific comparison tool ever comes back or not, it represents a necessary evolution in how we interact with AI. The future isn't about picking one single “best” model. It’s about having a toolkit and knowing which tool to use for which job. The mindset of constant comparison and critical evaluation is far more valuable than any single piece of software.

So keep your tabs open. Keep experimenting. The AI arms race is chaotic, but it’s also pushing innovation at a dizzying pace. And for those of us who make our living in the digital world, that's an incredibly exciting thing to be a part of. Now if you'll excuse me, I have a prompt I need to test in a few different windows.