Another week, another AI tool drops on Product Hunt promising to revolutionize how we work. I know, I know. My inbox is flooded with them too. It’s easy to get cynical. Most are just thin wrappers around the same big APIs, offering little more than a slick UI. But every now and then, something pops up that makes my SEO-blogger Spidey-sense tingle. Something that isn't just shouting into the void but seems to be quietly answering the questions serious dev teams are actually asking.

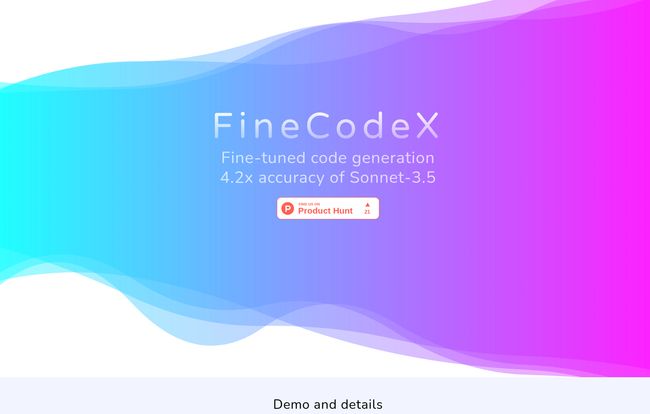

This week, that tool is FineCodeX. And honestly, its landing page made some pretty bold claims. We're talking accuracy that supposedly blows mainstream models out of the water, costs that seem almost too good to be true, and a privacy promise that every CTO dreams of. So, is it just marketing fluff, or is there some real fire behind this smoke? Let's get into it.

So, What Is FineCodeX, Really?

At its core, FineCodeX isn’t trying to be another general-purpose chatbot that can write you a poem and then try to debug your Kubernetes config. Instead, its entire focus is on one thing: fine-tuning a powerful Large Language Model (LLM) on your specific, private codebase.

Think about it like this. Using a generic model like GPT-4 or Sonnet for your company's code is like buying a suit off the rack. It might be a nice suit, but it's not going to fit you perfectly. There will be weird pulling at the shoulders, the sleeves might be a tad too long, and it just won’t feel like yours. FineCodeX is the master tailor. It takes a high-quality material—in this case, Meta's Llama-3-70B model—and custom-fits it to the unique contours of your code repository. It learns your libraries, your coding style, your internal APIs, your architectural patterns. The result? An AI assistant that speaks your team's native language, not just a generic programming tongue.
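
FineCodeX doesn't publish how it prepares a repository for fine-tuning, so the following is only a minimal sketch of the kind of data preparation such a service might involve: walking a repo, collecting source files, and splitting them into JSON Lines training records. Everything here (the function names, the file extensions, the chunk size, and the record shape) is a hypothetical assumption for illustration, not FineCodeX's actual pipeline.

```python
import json
from pathlib import Path

# Hypothetical settings: which files count as "code" and roughly how large
# each training record may be. Neither value comes from FineCodeX.
CODE_EXTENSIONS = {".py", ".rb", ".js", ".ts", ".go"}
MAX_CHARS = 4000


def iter_source_files(repo_root: Path):
    """Yield source files under repo_root, skipping any dot-prefixed path (such as .git)."""
    for path in sorted(repo_root.rglob("*")):
        rel = path.relative_to(repo_root)
        if any(part.startswith(".") for part in rel.parts):
            continue
        if path.is_file() and path.suffix in CODE_EXTENSIONS:
            yield path


def chunk_text(text: str, max_chars: int = MAX_CHARS):
    """Group whole lines into chunks of about max_chars (an overlong line becomes its own chunk)."""
    chunk, size = [], 0
    for line in text.splitlines(keepends=True):
        if chunk and size + len(line) > max_chars:
            yield "".join(chunk)
            chunk, size = [], 0
        chunk.append(line)
        size += len(line)
    if chunk:
        yield "".join(chunk)


def build_dataset(repo_root: str, out_file: str) -> int:
    """Write one JSON record per chunk to out_file (JSON Lines) and return the record count."""
    root = Path(repo_root)
    count = 0
    with open(out_file, "w", encoding="utf-8") as out:
        for path in iter_source_files(root):
            text = path.read_text(encoding="utf-8", errors="replace")
            for chunk in chunk_text(text):
                record = {"path": str(path.relative_to(root)), "text": chunk}
                out.write(json.dumps(record) + "\n")
                count += 1
    return count
```

Calling `build_dataset("path/to/repo", "train.jsonl")` would produce one record per chunk. Splitting on line boundaries is a deliberate simplification; a production pipeline might also deduplicate files, scrub secrets, and respect `.gitignore`.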

The Dream Team Behind the Code

Before we even get to the performance claims, let's tackle the first question anyone should ask: who is building this? In the AI gold rush, credibility is everything. And this is where FineCodeX immediately stands out. Their team isn't a group of fresh-faced grads; it’s a roster of seasoned pros, including former research scientists and AI engineers from OpenAI, Anthropic, and Asana.

This, for me, is a massive trust signal. These aren't people chasing a trend. Some of them have been on the front lines, building the foundation models we all use. They've seen the limitations firsthand and have clearly set out to solve them. It suggests a deep, nuanced understanding of the problem space, not just a surface-level application.

Breaking Down Those Big, Bold Claims

Okay, impressive team, cool concept. But what about the results? FineCodeX puts three huge numbers front and center on their site. Let’s pick them apart.

That 4.2x Accuracy Claim

This is the headline grabber: "4.2x more accurate" than Anthropic's latest, Sonnet 3.5. That's... a lot. How? By fine-tuning. When a model is trained on your specific codebase, it's far less likely to hallucinate or suggest code that uses outdated patterns your team has deprecated. It generates code with your codebase as context.

Now, for a dose of professional skepticism. As noted in their own data, this accuracy claim is based on testing against a specific, large repository (the Discourse repo, for those curious). Does this mean you’ll see an exact 4.2x improvement on your codebase? Maybe, maybe not. Results will vary. But it’s a powerful proof of concept that demonstrates the immense potential of fine-tuning over generic prompting. It shows that specialization leads to higher quality outputs, which makes a ton of sense.

Can It Really Be 9x Cheaper?

My wallet perked up at this one. AI token costs can get scary, fast, especially for automated workflows that run constantly. FineCodeX claims they can deliver their dedicated model at a cost of around $0.70 per 1 million tokens. For anyone who has seen an OpenAI or Anthropic bill after a month of heavy usage, that number is incredibly attractive.

By offering a dedicated, optimized model, they cut out a lot of the overhead associated with the massive, serve-everyone APIs. High usage becomes a benefit that drives down cost, not a liability that bloats your budget. For teams looking to integrate AI code generation deeply into their CI/CD pipelines or developer workflows, this economic shift is a game-changer.
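
To make the pricing claim concrete, here's a back-of-the-envelope sketch in Python. The $0.70 per million tokens figure is FineCodeX's quoted price. The comparison price is not a published rate for any specific API; it's simply the number implied by taking their "9x cheaper" claim at face value (0.70 × 9 = 6.30). The monthly token volume is likewise a hypothetical.

```python
# FineCodeX's quoted price: about $0.70 per 1M tokens.
FINECODEX_PER_MILLION = 0.70
# Hypothetical baseline implied by the "9x cheaper" claim (0.70 * 9 = 6.30).
# This is NOT a published price for any specific API.
IMPLIED_BASELINE_PER_MILLION = FINECODEX_PER_MILLION * 9


def monthly_cost(tokens_per_month: int, price_per_million: float) -> float:
    """Dollar cost of processing tokens_per_month at a flat per-million-token price."""
    return tokens_per_month / 1_000_000 * price_per_million


if __name__ == "__main__":
    # Hypothetical volume: 2B tokens/month from an always-on CI workflow.
    volume = 2_000_000_000
    ours = monthly_cost(volume, FINECODEX_PER_MILLION)
    theirs = monthly_cost(volume, IMPLIED_BASELINE_PER_MILLION)
    print(f"FineCodeX:        ${ours:,.2f}")
    print(f"Implied baseline: ${theirs:,.2f}")
    print(f"Savings:          ${theirs - ours:,.2f} per month")
```

At a hypothetical 2 billion tokens a month, that works out to roughly $1,400 versus $12,600. Real bills will depend on your input/output token mix and whatever pricing terms FineCodeX actually quotes you.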

Your Code Stays Your Code: The 100% Privacy Promise

For me, this is the real showstopper. The biggest blocker for AI adoption in many large companies isn't capability; it’s security. No CISO wants to hear that their proprietary source code is being sent to a third-party API for processing. It’s a non-starter.

FineCodeX addresses this head-on. They offer two deployment options: either you get the fine-tuned model weights to run on your own infrastructure, or they set up a dedicated, VPC-peered instance of the model on their infrastructure. In plain English? You get a private, sealed-off version of the AI. Your data isn’t used to train models for other customers. No cross-contamination. No prying eyes. It's your AI, in your castle, protected by your moat. For many enterprises, this is the only way AI for code can work at all.

A Healthy Dose of Reality

Alright, it sounds pretty great. But nothing is perfect, right? A real review has to look at the potential downsides. From my digging, there are a few things to be aware of. First, their web app requires JavaScript to run, a minor technical note but worth knowing. Second, while they tout the results of their fine-tuning, the exact “secret sauce” of that process isn’t detailed publicly. This is understandable (it's their IP, after all), but it does mean you’re placing trust in their expertise. Finally, as mentioned before, the headline accuracy claim is based on a single public repository. It's a strong benchmark, but your mileage may vary.

So, What's the Price Tag?

This is the part where I went to click their pricing page and... hit a 404. Whoops. A classic sign of a startup moving fast and maybe breaking a few small things. But it also tells a story. FineCodeX isn’t selling a $20/month plan for individual hobbyists. The whole setup (dedicated instances, VPC peering, booking a call via Calendly to get started) screams enterprise and high-growth startups.

The pricing is almost certainly quote-based, tailored to the size of your codebase and your usage needs. This isn’t a flaw; it’s just the nature of a B2B service this specialized. The call to action is clear: if you're a serious team feeling the pain of inaccurate, expensive, or insecure AI, you book a chat with them.

Frequently Asked Questions about FineCodeX

- What exactly is FineCodeX?

  FineCodeX is a service that takes powerful LLMs like Llama-3 and fine-tunes them on your company's private codebase. This creates a custom AI code generation tool that is highly accurate, cost-effective, and secure.

- Who should use FineCodeX?

  It's designed for tech companies, from high-growth startups to large enterprises, who need a secure, accurate, and budget-friendly AI code assistant for their development teams. If you're worried about sending your proprietary code to public APIs, this is for you.

- How does FineCodeX ensure data privacy?

  It offers two solutions: you can either receive the fine-tuned model weights to run entirely on your own servers, or they can provide a dedicated instance in a Virtual Private Cloud (VPC) that is peered only with your network. Your code is never mixed with other customers' data.

- Is it really more accurate than Sonnet 3.5?

  Their internal benchmarks, run on the public Discourse code repository, showed their fine-tuned model to be 4.2 times more accurate at generating correct code changes. While individual results can vary, it shows the power of specialization.

- How much does FineCodeX cost?

  Pricing isn't publicly listed. Given their enterprise focus, it's likely a custom quote-based model. You can get in touch with them directly through their website to discuss your team's specific needs.

My Final Verdict on FineCodeX

So, do I think FineCodeX is just more hype? No, I don't. I think it’s a smart, targeted solution to real problems. The AI industry is currently in its “brute force” era, throwing massive, generalized models at everything. FineCodeX represents the next logical step: the “specialization” era. It’s a move toward smaller, more efficient, and more effective tools that are custom-built for a specific job.

The combination of a world-class team, a smart technical approach, and a business model that prioritizes privacy and cost-effectiveness is a potent one. If you're a CTO or an engineering lead tired of the trade-offs with generic AI tools, I'd say FineCodeX is absolutely worth a look. It might just be the custom-fit suit your dev team has been looking for.

References and Sources

- FineCodeX Official Website

- FineCodeX Calendly Booking

- Discourse GitHub Repository (used in benchmarking)