You and me, developer to developer. We’ve all been there. You get a brilliant idea for a new feature. It involves a sprinkle of AI—maybe some text classification, or finding similar items for recommendations. You think, "I'll just whip up a quick serverless function. It'll be cheap, scalable, perfect!"

And then reality hits you like a ton of bricks. Or, more accurately, like a 5-second cold start on your API endpoint.

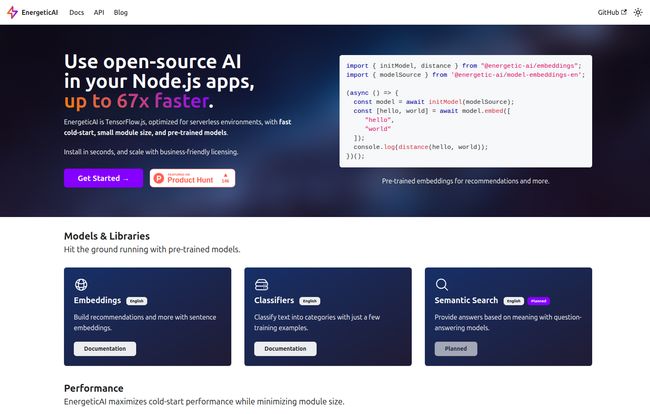

The dream of nimble, on-demand AI gets bogged down by clunky model loading times. Your users are staring at a spinner, your costs are... not as low as you'd hoped, and you start wondering if you need a dedicated, always-on server just for this one little feature. It’s a classic serverless headache. I was scrolling through my feed the other day, procrastinating on some CPC reports, when I stumbled upon a tool that made a pretty bold claim: “Use open-source AI in your Node.js apps, up to 67x faster.”

My skepticism alarm went off immediately. I’ve seen my fair share of over-the-top marketing claims. But this one, from a platform called EnergeticAI, had some numbers to back it up. So, I decided to take a closer look.

So What Is EnergeticAI, Actually?

First off, let’s be clear. EnergeticAI isn't a new, from-scratch AI model or a competitor to TensorFlow or PyTorch. That would be a monumental task. Instead, it’s much cleverer. Think of it as a high-performance tuning kit for a specific engine. In this case, the engine is TensorFlow.js, and the car is your Node.js serverless function.

It takes the power of open-source TensorFlow.js models and repackages them to be ridiculously efficient in environments like AWS Lambda, Vercel Functions, or Google Cloud Functions. It focuses on solving the most painful problems of running AI in these places: slow initial load times (cold starts) and chunky module sizes.

The Cold Start Problem: Serverless AI's Kryptonite

If you've worked with serverless, you know the term "cold start." It's the delay when the cloud provider has to spin up a new instance of your function from scratch. For a simple function that just returns some JSON, it’s a minor annoyance. But when that function needs to load a hefty AI model… yikes.
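The usual way to soften this is to load the model once, at module scope, and reuse it across warm invocations, so only the cold start pays the loading cost. Here is a minimal sketch of that pattern. It assumes a hypothetical `loadModel()` that just sleeps to simulate a slow load, and a generic handler shape rather than any particular provider's API.

```typescript
// Hypothetical model interface; a real library's model object will differ.
interface Model {
  classify(text: string): string;
}

let loadCount = 0; // how many times the expensive load actually ran

// Hypothetical stand-in for an expensive model load (weights, graph init).
async function loadModel(): Promise<Model> {
  loadCount += 1;
  await new Promise((resolve) => setTimeout(resolve, 50)); // simulate slow init
  return {
    classify: (text: string) => (text.includes("!") ? "excited" : "neutral"),
  };
}

// Module-scope cache: survives for as long as this function instance stays warm.
let modelPromise: Promise<Model> | null = null;

function getModel(): Promise<Model> {
  // Cache the promise (not the resolved model) so concurrent requests
  // arriving during a cold start share one load instead of racing.
  if (modelPromise === null) {
    modelPromise = loadModel();
  }
  return modelPromise;
}

// Generic serverless-style handler: only the first call on an instance waits.
async function handler(event: { text: string }): Promise<{ label: string }> {
  const model = await getModel();
  return { label: model.classify(event.text) };
}
```

This only helps warm invocations, though. The very first request on every new instance still pays the full load, which is exactly the cost a faster-loading model attacks.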

I’ve seen cold starts for Node.js AI functions stretch into multiple seconds. That's an eternity in web time. It's the difference between a happy user and a bounced user. This is the beast that EnergeticAI claims to have slain. And their performance graphs are, frankly, a little bit jaw-dropping.

The Performance Claims Under the Microscope

Okay, let's get to the juicy part. The numbers. According to their own benchmarks, the difference is stark.

Cold Starts Decimated

This is the headline act. The main event. The benchmark shows a standard TensorFlow.js implementation taking around 2,239 milliseconds for a cold start. That's over two seconds. Not great. EnergeticAI with a bundled model? 53 milliseconds.

Read that again. 53ms. That's not just better; it's a completely different class of performance. It turns a sluggish, barely usable endpoint into something that feels instantaneous. This is the kind of optimization that can make or break a project. It’s like putting a finely tuned F1 engine into your serverless function, instead of a tractor motor that takes forever to get going.
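For a sense of scale, the two cold-start figures quoted above work out to roughly a 42x speedup on their own:

```latex
\frac{2239\ \text{ms}}{53\ \text{ms}} \approx 42.2
```

That suggests the "up to 67x" headline is measured on a different comparison than this particular cold-start pair.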


Warm Starts and Module Size

The improvements don't stop there. Even for warm starts (when the function is already running), it's faster. And it promises a smaller bundle size, which is a huge deal for deployment overhead and, you guessed it, faster cold starts. The headline claim of "up to 67x faster" seems to be referring to the overall inference speed in a serverless context, which is a combination of all these factors. Is it always 67x faster? Probably not. But even if it's 20x or 30x faster in a real-world scenario, that’s still a massive win.
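If you want to see how much of the speedup survives on your own workload, the simplest check is to time the first call separately from the warm calls that follow. Below is a rough harness sketch. All names are my own, and `makeLazyWorkload` is a hypothetical stand-in for lazy model loading. Within a single process this only captures lazy initialization, not the provider spinning up a fresh instance, so a real cold-start test means measuring across newly deployed or recycled functions.

```typescript
// Median of a non-empty list of numbers.
function median(values: number[]): number {
  const sorted = [...values].sort((a, b) => a - b);
  const mid = Math.floor(sorted.length / 2);
  return sorted.length % 2 === 0 ? (sorted[mid - 1] + sorted[mid]) / 2 : sorted[mid];
}

// Times the first call of `fn` (cold: includes any lazy init) separately
// from `warmRuns` subsequent calls (warm).
async function measureColdWarm(
  fn: () => Promise<unknown>,
  warmRuns = 10,
): Promise<{ coldMs: number; warmMedianMs: number }> {
  let start = performance.now();
  await fn();
  const coldMs = performance.now() - start;

  const warm: number[] = [];
  for (let i = 0; i < warmRuns; i++) {
    start = performance.now();
    await fn();
    warm.push(performance.now() - start);
  }
  return { coldMs, warmMedianMs: median(warm) };
}

// How many times faster the candidate is than the baseline.
function speedup(baselineMs: number, candidateMs: number): number {
  return baselineMs / candidateMs;
}

// Hypothetical workload: sleeps `initMs` on the first call only,
// mimicking a model that loads lazily.
function makeLazyWorkload(initMs: number): () => Promise<void> {
  let loaded = false;
  return async () => {
    if (!loaded) {
      await new Promise((resolve) => setTimeout(resolve, initMs));
      loaded = true;
    }
  };
}
```

For example, `measureColdWarm(makeLazyWorkload(100), 5)` should report a cold time of roughly 100 ms and a warm median near zero. Run the same harness against a plain TensorFlow.js setup and an optimized one, then feed the numbers to `speedup`.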

Getting Your Hands Dirty with EnergeticAI

So, is it a pain to set up? I was expecting some complex configuration, some weird build steps. Nope. It’s literally:

```
npm install @energetic-ai/core
```

That’s it. This simplicity is a major selling point for me. As someone who manages a lot of different projects, I don't have time for a week-long setup process. The less friction, the better.

Out of the box, it comes with a few pre-trained model libraries ready to go:

- Embeddings: Perfect for creating vector representations of text. Think recommendation engines, finding similar documents, or clustering content.

- Classifiers: Great for tasks like sentiment analysis, topic categorization, or spam detection using just a few examples (few-shot learning).
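The two bullets fit together, because a few-shot classifier can be built directly on top of embeddings. Here is a library-agnostic sketch of one common approach, nearest-centroid classification. You average the embeddings of a few labeled examples per class, then assign new text to the class whose average is most similar by cosine similarity. This is not necessarily how EnergeticAI's classifier works internally, and it deliberately avoids calling its API. The 3-dimensional vectors are made up; a real embedding model produces hundreds of dimensions.

```typescript
type Vector = number[];

// Cosine similarity: dot(a, b) / (|a| * |b|), in [-1, 1] for non-zero vectors.
function cosineSimilarity(a: Vector, b: Vector): number {
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Element-wise mean of several equal-length vectors.
function centroid(vectors: Vector[]): Vector {
  const sum = vectors[0].map(() => 0);
  for (const v of vectors) {
    v.forEach((x, i) => (sum[i] += x));
  }
  return sum.map((x) => x / vectors.length);
}

// "Training" is just one centroid per label, from a few labeled embeddings.
function trainFewShot(examples: Record<string, Vector[]>): Record<string, Vector> {
  const centroids: Record<string, Vector> = {};
  for (const label of Object.keys(examples)) {
    centroids[label] = centroid(examples[label]);
  }
  return centroids;
}

// Predict the label whose centroid is most similar to the query embedding.
function classify(centroids: Record<string, Vector>, query: Vector): string {
  let bestLabel = "";
  let bestScore = -Infinity;
  for (const label of Object.keys(centroids)) {
    const score = cosineSimilarity(query, centroids[label]);
    if (score > bestScore) {
      bestScore = score;
      bestLabel = label;
    }
  }
  return bestLabel;
}

// Made-up "embeddings" for illustration only.
const classifier = trainFewShot({
  positive: [[0.9, 0.1, 0.0], [0.8, 0.2, 0.1]],
  negative: [[0.1, 0.9, 0.0], [0.2, 0.8, 0.1]],
});
console.log(classify(classifier, [0.85, 0.15, 0.05])); // prints: positive
```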

They also have Semantic Search listed as 'Planned', which gets me pretty excited. The ability to perform search based on meaning rather than just keywords, with this kind of performance, could be a game-changer for so many applications.
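At its core, semantic search means ranking stored documents by how close their embeddings are to the query's embedding. Since the feature is only planned, here is a generic sketch of that ranking step. It assumes you already have embeddings for your documents and the query; the document IDs and vectors below are made up. It is a brute-force scan, which is fine for small collections; large ones usually call for an approximate-nearest-neighbor index.

```typescript
type Vector = number[];

interface Doc {
  id: string;
  embedding: Vector;
}

// Cosine similarity: dot(a, b) / (|a| * |b|).
function cosineSimilarity(a: Vector, b: Vector): number {
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Score every document against the query and return the k best matches.
function topK(query: Vector, docs: Doc[], k: number): { id: string; score: number }[] {
  return docs
    .map((d) => ({ id: d.id, score: cosineSimilarity(query, d.embedding) }))
    .sort((a, b) => b.score - a.score)
    .slice(0, k);
}

// Made-up document embeddings for illustration only.
const docs: Doc[] = [
  { id: "refund-policy", embedding: [0.9, 0.1, 0.0] },
  { id: "shipping-times", embedding: [0.1, 0.9, 0.1] },
  { id: "returns-faq", embedding: [0.8, 0.3, 0.1] },
];
const query: Vector = [1.0, 0.2, 0.0];

// Expected order: refund-policy, then returns-faq.
console.log(topK(query, docs, 2).map((r) => r.id));
```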

The Good, The Bad, and The Node.js Version

No tool is perfect, right? A balanced view is always needed. I've been doing this long enough to know there are always trade-offs.

On one hand, the advantages are clear and compelling. The speed is phenomenal, the small module size is a godsend for serverless deployments, and the installation is a breeze. The fact that it's released under an Apache 2.0 license is a huge plus for businesses that are often wary of more restrictive open-source licenses. It's 'business-friendly'.

However, there are a few caveats to keep in mind. First, it requires Node 18+. That might be a non-starter for legacy projects stuck on older runtimes. Second, you're limited to the models that are based on TensorFlow.js. If your heart is set on a specific PyTorch model, you're out of luck here. Finally, while the core package is Apache 2.0, they wisely point out that the dependencies may have their own licenses. As always, do your due diligence!
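If some of your deployments still run older runtimes, it is better to fail loudly at startup than mysteriously inside a dependency. Here is a minimal sketch of such a guard. The function names are my own, and in a real app you would pass in `process.versions.node`. Declaring `"engines": { "node": ">=18" }` in `package.json` also documents the requirement, though npm only warns about a mismatch unless `engine-strict` is enabled.

```typescript
// Hypothetical helper: true if a Node.js version string such as "18.17.1"
// or "v20.5.0" has a major version of at least `minMajor`.
function meetsNodeMajor(version: string, minMajor = 18): boolean {
  const major = parseInt(version.replace(/^v/, "").split(".")[0], 10);
  return !isNaN(major) && major >= minMajor;
}

// Fail fast at startup. In Node.js: assertSupportedNode(process.versions.node)
function assertSupportedNode(version: string): void {
  if (!meetsNodeMajor(version)) {
    throw new Error(`Node.js 18+ is required, but this runtime is ${version}`);
  }
}
```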

So, Who Is This For?

I see a pretty clear sweet spot for EnergeticAI. If you're a Node.js developer, especially one building on platforms like Vercel or AWS Lambda, and you want to add AI features without adding a ton of complexity or performance overhead, this is for you. It's for the startup that wants to build an AI-powered MVP without hiring a dedicated MLOps team. It's for the full-stack dev who just wants to import a package and get back to building the product.

It's not for the data scientist who needs to build and train custom, cutting-edge models from the ground up on massive datasets. This is a tool for application, not for fundamental research.

What's the Price Tag?

From everything I can see, EnergeticAI appears to be free and open-source. The `npm install` and the Apache 2.0 license strongly suggest this. There’s no pricing page, no mention of tiers or enterprise plans. This lowers the barrier to entry to basically zero. You can just try it out on your next project without any financial commitment, which is exactly how powerful developer tools should be introduced, in my opinion.

Frequently Asked Questions

- What is EnergeticAI in simple terms?

- It's an optimization toolkit for TensorFlow.js that makes it run incredibly fast in Node.js serverless environments by dramatically reducing cold start times and module size.

- How does EnergeticAI speed things up so much?

- As far as I can tell, it pre-compiles and bundles the models in a way that's optimized for quick loading in serverless runtimes, bypassing many of the slow initialization steps of standard TensorFlow.js.

- Is EnergeticAI free to use for commercial projects?

- Yes, the core library is licensed under the business-friendly Apache 2.0 license. However, you should always verify the licenses of the underlying dependencies for your specific use case.

- What are the main limitations of EnergeticAI?

- It's limited to the TensorFlow.js ecosystem, so you can't use PyTorch models. It also requires a modern version of Node.js (18 or higher), which might not be suitable for older applications.

- Is EnergeticAI a replacement for TensorFlow?

- No. It's a companion and optimizer for TensorFlow.js. It uses TFJS under the hood but makes it practical for performance-sensitive serverless applications.

- What can I build with EnergeticAI right now?

- You can immediately start building features that require text embeddings (like recommendations or similarity search) and text classification (like sentiment or topic analysis).

My Final Thoughts

I'm genuinely impressed. EnergeticAI isn't trying to boil the ocean. It’s a focused tool that solves a very specific, very painful problem for a growing community of developers. It takes the promise of easy, scalable serverless AI and actually makes it practical. That performance jump isn't just an incremental improvement; it's a fundamental change that opens up new possibilities.

For anyone in the Node.js world who has looked at AI and thought, "it's just too slow for serverless," I think EnergeticAI might just be the tool that changes your mind. I know I’ll be giving it a shot on a side-project very soon.
