We're all in this constant, caffeine-fueled rat race for the next big thing in AI. Every week there’s a new model, a new platform, a new “game-changer” that promises to revolutionize how we work. And as someone who lives and breathes this stuff, my wallet has been feeling the burn. The cost of API calls these days can make you seriously reconsider whether you really need that AI-powered cat meme generator for your side project.

So when I stumbled upon DeepSeek R1, I was intrigued but, admittedly, a bit skeptical. Another model in a sea of models? But then I saw two words that always get my attention: open-source. And then I saw the pricing, and well… we’ll get to that. It’s a story in itself.

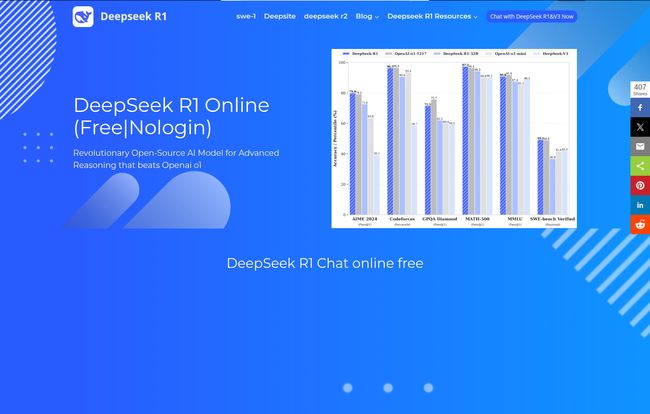

What Exactly is DeepSeek R1 Anyway?

Okay, so DeepSeek R1 isn't just another ChatGPT clone. It's positioned as a specialist in advanced reasoning. Think of it as the AI you'd want on your team for the heavy lifting: complex math problems, production-grade coding, and untangling knotty logical puzzles. It's built on a Mixture of Experts (MoE) architecture, which is a fancy way of saying it's not one single brain trying to do everything. Instead, it's more like a panel of consultants, where a small learned router picks a handful of experts for each token and the rest sit that one out. The experts don't map neatly onto human job titles like "coding" or "creative writing"; whatever specialties they have emerge during training. The payoff is efficiency: only a fraction of the model's parameters do any work on a given token, so you get the capacity of a very large model at something closer to the compute cost of a much smaller one.
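If the routing idea feels abstract, here's a toy sketch of top-k expert selection for a single token. To be clear, this is not DeepSeek's implementation: the four "experts" are made-up scaling functions, the gate scores are hard-coded rather than learned, and `k=2` is an arbitrary choice for illustration.

```python
import math

def softmax(scores):
    """Turn raw gate scores into probabilities."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_token(gate_scores, k=2):
    """Pick the top-k experts for one token and renormalize their weights."""
    probs = softmax(gate_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return {i: probs[i] / norm for i in top}

def moe_layer(x, experts, gate_scores, k=2):
    """Weighted sum of the chosen experts' outputs; unchosen experts never run."""
    weights = route_token(gate_scores, k)
    return sum(w * experts[i](x) for i, w in weights.items())

# Hypothetical experts: each one just scales its input by a different factor.
experts = [lambda x, s=s: s * x for s in (1.0, 2.0, 3.0, 4.0)]

# Hypothetical gate scores for one token (a real router computes these).
gate_scores = [0.1, 2.0, 0.3, 1.5]

chosen = route_token(gate_scores, k=2)
output = moe_layer(1.0, experts, gate_scores, k=2)
print(sorted(chosen), round(output, 3))
```

Only two of the four experts are evaluated for this token, which is the whole point: total capacity grows with the number of experts, while per-token compute grows with `k`.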

And the best part? It’s released under an MIT license. For my fellow developers out there, you know what a big deal this is. It basically means you can use it, modify it, and build on it with very few restrictions, even for commercial projects. That kind of freedom is becoming a rare commodity in the increasingly walled-garden world of AI.

Putting Its Performance to the Test

Talk is cheap, right? So I took it for a spin. The online platform, which is a fan-made project (an important detail!), gives you a pretty straightforward way to interact with the model. My first impression? It's snappy. For coding tasks, it felt less like a generalist trying to remember Python syntax and more like a seasoned partner. It generated clean, well-commented code that, for the most part, ran on the first try. That’s more than I can say for some of my own code, if I'm being honest.

One feature I found particularly cool for us geeks is the Chain-of-Thought visualization. You can actually see the model's reasoning process, step by step. It's not just a black box spitting out answers. You can follow its logic, which is invaluable for debugging and understanding why it arrived at a certain conclusion. And don't even get me started on the long-context capabilities. We're talking up to 128K tokens. That's like feeding the AI an entire novel and asking about a minor character from chapter two... and it actually remembers. This is huge for tasks like summarizing long documents or maintaining context in extended conversations.
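If you're consuming raw model output yourself rather than using a chat UI, you'll probably want to separate the reasoning from the final answer. Here's a minimal sketch that assumes the reasoning arrives wrapped in `<think>...</think>` tags inside the completion text. That format is an assumption on my part; some endpoints hand back the reasoning in a separate field instead, so check what yours actually returns. The sample string is invented for the demo.

```python
import re

# Assumed format: reasoning wrapped in <think> tags ahead of the final answer.
THINK_RE = re.compile(r"<think>(.*?)</think>", re.DOTALL)

def split_reasoning(text):
    """Return (reasoning, answer) from a raw completion string."""
    match = THINK_RE.search(text)
    if match is None:
        # No reasoning block found: treat the whole thing as the answer.
        return "", text.strip()
    reasoning = match.group(1).strip()
    answer = (text[:match.start()] + text[match.end():]).strip()
    return reasoning, answer

# Made-up completion, for illustration only.
raw = "<think>17 is odd. Not divisible by 3 or 5.</think>\n17 is prime."
reasoning, answer = split_reasoning(raw)
print("Reasoning:", reasoning)
print("Answer:", answer)
```

Keeping the two apart lets you log the reasoning for debugging while showing users only the answer.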

Let's Talk About the Elephant in the Room: The Price

Alright, this is the part where things get a little crazy. I spend a lot of time analyzing CPC, API costs, and a project's financial viability. Usually, high performance comes with a high price tag. It's just the law of the land. DeepSeek R1 seems to have not gotten the memo.

I pulled the pricing details and put them side-by-side with a well-known premium model, OpenAI's o1. Brace yourself.

| Model | Input Tokens (per 1M) | Output Tokens (per 1M) |
|---|---|---|
| DeepSeek R1 | $0.14 | $0.28 |
| OpenAI o1 | $5.00 | $15.00 |

A Direct Comparison That Boggles the Mind

No, that is not a typo. You're looking at input costs of $0.14 per million tokens versus $5.00. For output, it's $0.28 versus a staggering $15.00. By my math, that makes DeepSeek R1 about 97% cheaper on input and about 98% cheaper on output than one of the industry's top-tier models, at least at the rates listed above. Let that sink in for a moment. For developers and businesses running millions of tokens a day, this isn't just a cost saving; it's a fundamental shift in what's possible. Projects that were once financially unfeasible are suddenly on the table. It completely changes the economic equation of building with AI.
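If you want to check the math or plug in your own numbers, here's a quick sketch. The prices are the ones from the table above; the monthly workload (100M input tokens, 20M output tokens) is a made-up example, so swap in whatever your project actually burns through.

```python
# Prices in USD per 1M tokens, copied from the comparison table.
PRICES = {
    "DeepSeek R1": {"input": 0.14, "output": 0.28},
    "OpenAI o1": {"input": 5.00, "output": 15.00},
}

def monthly_cost(model, input_tokens, output_tokens):
    """Total cost in USD for a given number of input and output tokens."""
    p = PRICES[model]
    return (input_tokens * p["input"] + output_tokens * p["output"]) / 1_000_000

def percent_cheaper(cheap, expensive):
    """How much cheaper `cheap` is than `expensive`, as a percentage."""
    return (1 - cheap / expensive) * 100

input_saving = percent_cheaper(0.14, 5.00)
output_saving = percent_cheaper(0.28, 15.00)

# Hypothetical workload: 100M input tokens and 20M output tokens per month.
r1_cost = monthly_cost("DeepSeek R1", 100_000_000, 20_000_000)
o1_cost = monthly_cost("OpenAI o1", 100_000_000, 20_000_000)

print(f"Input saving:  {input_saving:.1f}%")
print(f"Output saving: {output_saving:.1f}%")
print(f"Monthly cost:  ${r1_cost:.2f} vs ${o1_cost:.2f}")
```

For that workload the bill comes to roughly $19.60 versus $800.00, which is the kind of gap that decides whether a side project ships at all.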

Getting Your Hands on DeepSeek R1

So, you're sold on the price and performance. How do you actually use it? You have a couple of options, depending on your comfort level with code.

The Easy Way: The Online Platform

As I mentioned, there's an online portal that provides free, no-login-required access to try out the model. It's a great way to kick the tires and see if it fits your needs. This site also provides an OpenAI-compatible API endpoint, which is a genius move. It means that for many existing applications, you could potentially point your code at this endpoint instead of your current one with minimal changes. Just be aware, the site clearly states it's a fan-made project and not officially affiliated with the DeepSeek team.
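To give a feel for what "OpenAI-compatible" means in practice, here's a sketch that builds a chat completion request using only the standard library. The base URL, API key, and model name below are placeholders I made up, not the platform's real values; look those up in the platform's own docs. The request shape (a POST to `/chat/completions` with a `model` and a `messages` list, plus a bearer token) follows the OpenAI chat API convention.

```python
import json
import urllib.request

def build_chat_request(base_url, api_key, model, prompt):
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )

# Placeholder values: replace with the real endpoint, key, and model name.
req = build_chat_request(
    base_url="https://example.invalid/v1",
    api_key="YOUR_API_KEY",
    model="deepseek-r1",
    prompt="Reverse a linked list in Python.",
)
print(req.get_method(), req.full_url)

# To actually send it:
# with urllib.request.urlopen(req) as resp:
#     body = json.load(resp)
```

Because only `base_url`, `api_key`, and `model` change, switching an existing integration over is mostly a configuration change rather than a rewrite.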

The DIY Route: Local Deployment

For those who want full control, privacy, and don't mind getting their hands dirty, you can deploy DeepSeek R1 locally. Because it's open-source, you can download the model and run it on your own hardware. This is the ultimate in flexibility. Of course, this path isn't for everyone. It requires a certain amount of technical know-how to set up the environment, manage dependencies, and get everything running smoothly. But for a startup or a researcher, the power of a private, locally-hosted reasoning engine is immense.
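Before you go down this road, it's worth a back-of-the-envelope check on whether your hardware can even hold the weights. The sketch below estimates memory for the weights alone (parameters times bits per parameter); it ignores the KV cache, activations, and runtime overhead, so real requirements will be higher. The 7B and 70B sizes are illustrative stand-ins for smaller variants, and 671B is the commonly cited total parameter count for the full model.

```python
def weight_memory_gb(params_billions, bits_per_param):
    """Approximate memory for the weights alone, in GB (10^9 bytes)."""
    return params_billions * bits_per_param / 8

# Illustrative sizes; check the model card for the variant you plan to run.
variants = [
    ("small variant (7B, illustrative)", 7),
    ("large variant (70B, illustrative)", 70),
    ("full model (671B total parameters)", 671),
]

for name, params in variants:
    fp16 = weight_memory_gb(params, 16)  # 16-bit weights
    int4 = weight_memory_gb(params, 4)   # 4-bit quantized weights
    print(f"{name}: ~{fp16:.1f} GB at 16-bit, ~{int4:.1f} GB at 4-bit")
```

The takeaway is that a small variant fits on a single consumer GPU, while the full model is firmly multi-GPU server territory even when quantized.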

The Good, The Bad, and The Code-y

No tool is perfect. In my experience, it’s about finding the right tool for the job. DeepSeek R1 has some incredible strengths, but it's important to be aware of its limitations. The cost, as we've covered, is just fantastic. The open-source nature gives you unparalleled freedom, and its performance in its specialized areas of coding and reasoning is truly top-notch. That 128K context window is another massive win.

On the flip side, the DIY deployment can be a hurdle for non-technical users. And since performance can vary a bit between the different model variants, you might need to experiment to find the perfect one for your use case. It’s not quite the plug-and-play experience some other platforms offer, but then again, it's not trying to be.

Frequently Asked Questions About DeepSeek R1

Is DeepSeek R1 really open-source?

Yes, it absolutely is. It's available under the MIT License, which is one of the most permissive open-source licenses out there. This allows for broad use, including in commercial applications.

Can I run DeepSeek R1 on my own machine?

You sure can. If you have the technical chops and the right hardware, you can deploy it locally for maximum control and privacy. The models are available for download.

How does the API integration work?

The online platform provides an OpenAI-compatible API. This is a huge convenience, as it allows developers to integrate DeepSeek into existing projects with minimal changes to their code, often just by swapping out the API key and base URL.

Is the online platform the official website?

This is a critical point: No, it is not. The website providing the online chat and API is a fan-made project. While it’s a fantastic resource for testing, always be mindful of this distinction, especially for production workloads.

What is DeepSeek R1 best at?

It shines in tasks that require deep reasoning. This includes complex problem-solving, high-quality code generation across various languages, and mathematical challenges. It's less of a generalist chatbot and more of a specialist tool.

My Final Verdict on DeepSeek R1

So, is DeepSeek R1 the ultimate OpenAI killer? Maybe not for everyone, not today. It doesn't have the same polished, consumer-facing packaging. But that's not its goal. For developers, startups, researchers, and anyone running AI at scale, DeepSeek R1 feels like a revolution. It’s a powerful, highly capable model that democratizes access to top-tier AI by crashing through the cost barrier.

It represents a different philosophy—an open, community-driven approach that puts powerful tools directly into the hands of creators. I'm genuinely excited to see what people build with this. It's a reminder that some of the most exciting innovations in tech don't come from the biggest names, but from the open-source community. Go give it a try. You, and your wallet, might be pleasantly surprised.

References and Sources

- For technical details and access to the models, the official DeepSeek-AI GitHub is the primary source.

- To understand the license, you can read the full MIT License text.

- The fan-made platform offering online access is available at deepseek-r1.online.