I’ve been in the digital marketing and tech space for a while now. I’ve seen trends come and go, and I've seen more self-proclaimed “game-changers” than I can count. Most of the time, it's just marketing fluff. A fresh coat of paint on an old idea. But every so often, something pops up on my radar that genuinely makes me lean in closer to my monitor. That’s what happened when I first stumbled upon DeepSeek V3.

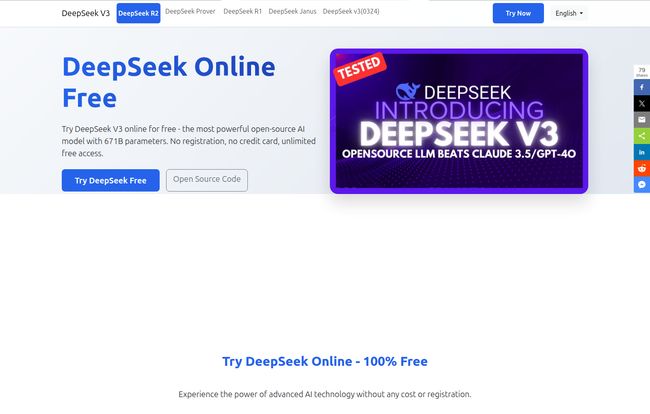

The claims are bold. A massive 671 billion parameter model. Beats giants like Claude 3.5 and GPT-4o on benchmarks. And the real kicker? It’s free. Not “free trial” free. Not “freemium with the good stuff locked away” free. Genuinely, no-strings-attached, commercially-usable free. My inner skeptic immediately went on high alert. So, naturally, I had to see for myself what was going on.

So What Exactly Is This DeepSeek V3 Thing?

At its core, DeepSeek V3 is a monster of a Large Language Model (LLM) created by the folks at DeepSeek AI. When we talk about parameters in AI models, you can think of them as the knobs and dials the AI uses to think: the more you have, generally, the more nuanced and complex its reasoning can be. For context, models considered state-of-the-art have parameter counts in the hundreds of billions. DeepSeek V3 clocks in at a staggering 671 billion parameters in total. It uses a Mixture-of-Experts design, so only about 37 billion of them are active for any given token, but all 671 billion still have to be stored and loaded. That’s not just knocking on the door of the big leagues; that’s kicking it clean off its hinges.

What makes this so different from the usual suspects in the AI arms race (looking at you, OpenAI and Google) is its philosophy. It's open-source. This means the code and the model weights are out there for anyone to see, use, and build upon. That’s a huge deal in a world of increasingly closed-off, proprietary AI systems.

The Instant Gratification of the Online Demo

My first stop was the online demo. I was braced for the usual signup form, the email verification, the “give us your credit card just in case” dance. But there was… nothing. Just a clean interface and a blinking cursor, ready to go. I can't overstate how refreshing this is. No barriers. Just pure, instant access to a top-tier AI. Major points for that.

I threw a few test prompts at it. First, a classic SEO task:

"Generate five catchy H1 titles for a blog post about the benefits of intermittent fasting for busy professionals. The tone should be encouraging but realistic."

The results were solid. Better than many paid tools I've used, honestly. Then I tried something more complex, a coding problem that had been a minor headache for me last week. It spat out clean, well-commented Python code that, with a minor tweak, worked perfectly. The speed was impressive, and the reasoning seemed sound. So far, so good.

Going Rogue: The Power and Pain of Local Installation

The online demo is great for a test drive, but the real power of an open-source model is running it on your own hardware. This is where you get total privacy, no rate limits, and the ability to fine-tune the model on your own data. DeepSeek provides everything you need to do this over on their GitHub page.

But here’s the reality check. Running a 671B parameter model isn't something you do on your 2019 MacBook Pro. This is where we separate the hobbyists from the serious operators. To run this beast locally, you need a server rig with some serious GPU firepower. We’re talking about needing multiple high-end GPUs like NVIDIA's A100s or H100s. It’s like being handed the keys to a Bugatti Veyron; sure, it's free, but do you have a private racetrack and a team of mechanics to run it? For most individuals, the answer is a resounding no.

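Luckily, DeepSeek also offers a more manageable 67B parameter base model. As far as I can tell, it comes from the company's earlier DeepSeek LLM line rather than being a slimmed-down V3, so don't expect identical results. It's still incredibly potent but has a much more… realistic hardware footprint for small businesses or dedicated enthusiasts. This is a smart move, providing an accessible entry point into their ecosystem.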
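To put rough numbers on that hardware gap, here's a back-of-the-envelope sketch in Python. It only counts the memory needed to hold the weights, and it rests on my own assumptions rather than anything from DeepSeek's documentation: 80 GB per GPU (the size of the larger A100 and H100 cards), a few common bytes-per-parameter precisions, and no allowance for the KV cache, activations, or framework overhead. The function names are mine, and real deployments need more headroom than this.

```python
import math

# Assumed storage cost per parameter for a few common precisions.
BYTES_PER_PARAM = {"fp16/bf16": 2.0, "fp8/int8": 1.0, "int4": 0.5}


def weight_memory_gb(params_billion: float, bytes_per_param: float) -> float:
    """Memory for the weights alone, in GB (1 GB = 1e9 bytes).

    Billions of parameters times bytes per parameter gives gigabytes directly.
    """
    return params_billion * bytes_per_param


def min_gpus(params_billion: float, bytes_per_param: float,
             gpu_memory_gb: float = 80.0) -> int:
    """Lower bound on GPU count: weights only, no cache or overhead."""
    total_gb = weight_memory_gb(params_billion, bytes_per_param)
    return math.ceil(total_gb / gpu_memory_gb)


for label, size in [("671B", 671), ("67B", 67)]:
    for precision, nbytes in BYTES_PER_PARAM.items():
        gb = weight_memory_gb(size, nbytes)
        gpus = min_gpus(size, nbytes)
        print(f"{label} @ {precision}: ~{gb:.0f} GB of weights, "
              f"at least {gpus} x 80 GB GPU(s)")
```

By this estimate the full model needs at least nine 80 GB cards at one byte per parameter just to load, while the 67B model at 16-bit precision squeezes onto two.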

Breaking Down the Key Features

Let's get into the nitty-gritty of what makes DeepSeek V3 stand out.

Truly Open Source for Commercial Use

This is probably the most important feature. The model is released under an open-source license that explicitly allows for commercial use. You can build a product on top of this model and sell it without paying DeepSeek a dime in licensing fees. For startups and developers trying to compete, this is an absolute gift. It lowers the barrier to entry for creating powerful AI applications, which can only be a good thing for innovation.

That Massive 128k Context Window

A model's "context window" is like its short-term memory. It determines how much information it can hold and consider at once. At 128,000 tokens, DeepSeek V3 has a very competitive memory. This means you can feed it entire documents, long codebases, or complex conversational histories, and it won't lose the plot. While some newer models are pushing even larger windows, 128k is more than enough for the vast majority of professional use cases.
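If you want a quick sanity check on whether a document is likely to fit, a rough sketch like the one below can help. It leans on a common rule of thumb of about four characters per token for English text, which is my assumption and not DeepSeek's actual tokenizer, so treat the result as an estimate. The `reserved_for_output` budget and the function names are also hypothetical.

```python
import math

CONTEXT_WINDOW_TOKENS = 128_000  # the window size quoted above
CHARS_PER_TOKEN = 4              # rough heuristic, not a real tokenizer


def estimate_tokens(text: str) -> int:
    """Approximate token count from character length."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def fits_in_context(text: str, reserved_for_output: int = 4_000) -> bool:
    """True if the prompt plus a reply budget fits inside the window."""
    return estimate_tokens(text) + reserved_for_output <= CONTEXT_WINDOW_TOKENS


report = "word " * 50_000  # 250,000 characters of stand-in text
print(estimate_tokens(report), fits_in_context(report))
```

For anything close to the limit, count tokens with the model's real tokenizer instead of trusting the heuristic.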

DeepSeek V3 vs. The Heavyweights

The website boldly claims it beats models like GPT-4o and Claude 3.5. Does it? Well, it's complicated. AI benchmarks can be gamed, and raw performance doesn't always translate to a better user experience.

From my own testing and reading through community feedback, here's my take:

- For Coding and Logic: DeepSeek V3 is an absolute powerhouse. Its strong performance in math and coding benchmarks seems to hold up in real-world use. It's a fantastic copilot for developers.

- For Creative Writing: This is more subjective. I found it to be very good, but perhaps without some of the creative flair or 'personality' that models like Claude 3.5 Sonnet can sometimes exhibit. Your mileage may vary.

- For The Price: This isn't even a competition. DeepSeek V3 is free if you have the hardware or just use the demo, while the others come with API costs that can quickly add up. For many, that fact alone makes DeepSeek V3 the winner.

It's not so much a “killer” as it is a powerful, legitimate alternative. It forces the big players to justify their price tags, and that competition benefits all of us.

| Feature | DeepSeek V3 Online | DeepSeek V3 Local Install |
|---|---|---|
| Cost | Free | Free (but requires significant hardware investment) |
| Registration | None needed | N/A |
| Use Case | Testing, casual use, quick tasks | Commercial apps, fine-tuning, privacy-focused tasks |
| Accessibility | Extremely high | Low (requires technical expertise and hardware) |

So, Who Is This For?

After playing with it for a bit, I see a few clear winners here.

Developers and Startups are the biggest beneficiaries. Having a commercially viable, open-source model of this caliber without the API costs of a giant like OpenAI is a revolution. It allows for experimentation and product development on a budget that was previously impossible.

AI Enthusiasts and Researchers get a new state-of-the-art playground. Being able to peek under the hood, run tests, and fine-tune the model is invaluable for pushing the boundaries of what's possible.

The Curious Public gets a no-risk way to experience top-tier AI. The barrier to entry is literally zero. If you've been curious about what these advanced AIs can do, there's no easier way to find out.

Frequently Asked Questions about DeepSeek V3

- 1. Is DeepSeek V3 really free to use?

- Yes, the online demo is 100% free with no registration. The model itself is also free to download and use, even for commercial purposes. The only "cost" is the powerful hardware needed to run it locally.

- 2. Can I use DeepSeek V3 for my business or a commercial product?

- Absolutely. It's released under a permissive open-source license that allows for commercial use, which is one of its biggest advantages.

- 3. What are the system requirements for a local installation?

- They are substantial. For the full 671B model, you'll need a server with multiple high-VRAM GPUs. The 67B base model is more manageable but still requires a powerful, dedicated machine with a high-end GPU. This isn't for your average desktop computer.

- 4. How does DeepSeek V3 compare to other AI models like GPT-4o?

- According to their published benchmarks, it's highly competitive and even superior in areas like coding and mathematics. In practice, it's a very strong alternative, though the "best" model often depends on the specific task.

- 5. Who is the company behind DeepSeek?

- DeepSeek V3 is developed by DeepSeek AI, a research-focused company. Making their models open-source is a strategy to build community and drive innovation in the field.

- 6. Can I integrate DeepSeek V3 into my existing applications?

- Yes. You can interact with a locally hosted model via an API, similar to how you would with other major AI services. The GitHub repository provides documentation on how to set this up.
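To make that last answer a bit more concrete, here's a minimal sketch of what calling a locally hosted model might look like. It assumes your local server exposes an OpenAI-style `/v1/chat/completions` endpoint, which many open-source inference servers do, but check your own setup. The URL, port, model name, and function names below are placeholders I made up for illustration, not values from DeepSeek's documentation.

```python
import json
import urllib.request

BASE_URL = "http://localhost:8000/v1"  # hypothetical local server address
MODEL_NAME = "deepseek-v3"             # hypothetical name your server registers


def build_chat_request(prompt: str, base_url: str = BASE_URL,
                       model: str = MODEL_NAME,
                       temperature: float = 0.7) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str) -> str:
    """Send the request and return the first reply's text.

    Assumes the response follows the OpenAI-style schema.
    """
    request = build_chat_request(prompt)
    with urllib.request.urlopen(request, timeout=120) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


# Example, once a compatible server is running locally:
# print(ask("Generate five catchy H1 titles about intermittent fasting."))
```

Keeping the request builder separate from the network call makes it easy to inspect the payload before pointing it at a real server.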

My Final Thoughts

DeepSeek V3 feels like a significant moment in the AI space. It's a direct challenge to the idea that cutting-edge AI must live in a walled garden with a price tag. It democratizes access to a ridiculously powerful tool, even if only the most well-equipped can run it at full throttle on their own turf.

Is it going to put OpenAI out of business tomorrow? No. But it doesn't have to. By simply existing, it puts pressure on the entire market, fosters competition, and empowers a new wave of builders and creators. And for me, that's more exciting than any single benchmark score. Go give the online demo a try. You've got nothing to lose and a whole lot of AI power to gain.
