If you’ve spent any time in the trenches trying to get an AI or machine learning feature off the ground, you know the pain. It starts with a cool idea, maybe from a new paper on arXiv, and then reality hits. The cost of renting a powerful GPU, the nightmare of managing dependencies, the cold sweat of wondering if your setup can handle more than five users at once... it's a lot. For years, the choice has been either a wallet-crushing managed service or a descent into DevOps madness.

I've been there. I've spent more nights than I'd like to admit wrestling with CUDA drivers and trying to figure out why my brilliant new model runs slower than a dial-up modem. So when a platform like Deep Infra comes along, promising fast, scalable, production-ready models with a simple API and pay-per-use pricing, my inner skeptic raises an eyebrow. Is it really that simple? Or is this just another pretty landing page with a complicated reality hiding underneath?

I decided to get my hands dirty and find out. This is my take on what Deep Infra gets right, where it stumbles, and who should seriously consider making it their go-to for AI inference.

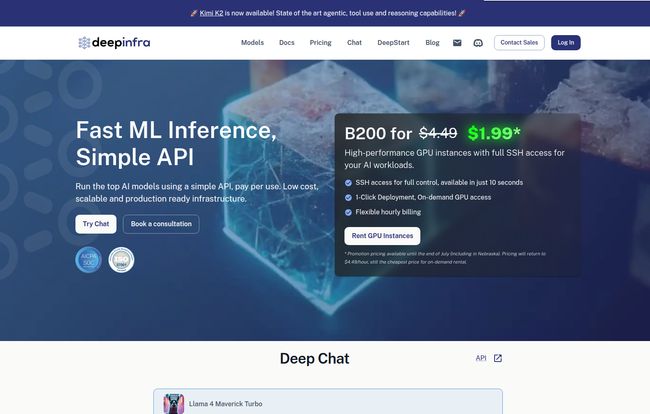

So, What Exactly Is Deep Infra?

Think of Deep Infra as a specialized tool that does one thing incredibly well: it runs popular machine learning models for you. That's it. You don't have to worry about the servers, the GPUs, or the software. You just send an API request with your input (like a text prompt), and it sends you back the output (like a generated story or a text-to-speech audio file).

It's kind of like a vending machine for AI. Instead of wrestling with a cow to get a glass of milk, you just put your money in and get what you need. It’s built for developers who want to add capabilities from models like Llama 3, Stable Diffusion, or Mistral to their own applications without becoming full-time MLOps engineers. They handle the scaling, the speed, and the infrastructure. You handle the cool ideas.


The Good Stuff: Why I’m Genuinely Impressed

I’m naturally cynical about new platforms, but Deep Infra has a few features that made me sit up and pay attention. It’s not just hype; there’s some serious substance here.

The Pricing Model is a Breath of Fresh Air

Let's be honest, pricing in the AI world can be opaque and terrifying. You often have to commit to renting an expensive GPU instance by the hour, and you pay for the full hour whether you use it for one minute or all sixty. Deep Infra's pay-per-use model flips that script. You’re billed based on the number of tokens you process (for language models) or the inference time (for image models). If your app has zero traffic at 3 AM, you pay zero dollars. This is huge for startups, indie developers, or anyone prototyping an idea without a venture-capital-sized budget. You're paying for what you actually use, which feels… fair. Revolutionary, I know.

Getting Started is Almost Too Easy

This is where I was most skeptical, and where I was most pleasantly surprised. The process is dead simple. You pick a model from their extensive library, and they give you a cURL command or a Python snippet. You drop in your API key, and it just works. There’s no complex configuration, no YAML files to fight with, no containers to build. This low barrier to entry means you can go from idea to a working prototype in minutes, not days. For a fast-moving project, that speed is invaluable.
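For readers who want to see what that looks like, here is a minimal Python sketch using only the standard library. It assumes Deep Infra's OpenAI-compatible chat endpoint at `https://api.deepinfra.com/v1/openai/chat/completions`, a bearer-token API key read from a `DEEPINFRA_API_KEY` environment variable (a name I picked for this example), and the Llama 3 8B model ID used elsewhere in this article. Check the model's page for the exact ID and the snippet the dashboard actually gives you.

```python
import json
import os
import urllib.request

# Assumed endpoint: Deep Infra's OpenAI-compatible chat completions route.
# Verify against the official docs before relying on it.
API_URL = "https://api.deepinfra.com/v1/openai/chat/completions"


def build_request(prompt: str, api_key: str,
                  model: str = "meta-llama/Llama-3-8B-Instruct",
                  max_tokens: int = 256) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,  # model ID as listed in this article; confirm on the model page
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def ask(prompt: str) -> str:
    """Send the request and return the generated text."""
    # DEEPINFRA_API_KEY is a hypothetical variable name chosen for this example.
    req = build_request(prompt, os.environ["DEEPINFRA_API_KEY"])
    with urllib.request.urlopen(req, timeout=60) as resp:
        body = json.load(resp)
    # OpenAI-style response shape: choices[0].message.content
    return body["choices"][0]["message"]["content"]
```

With the key exported, calling `ask("Summarize pay-per-use inference in one sentence.")` should return the model's reply. Splitting request construction from sending keeps the payload easy to inspect before you spend any tokens.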

It’s Built for The Real World: Speed and Scale

A cool demo is one thing; a production-ready service is another. Deep Infra seems to understand this distinction. Their big promise is low-latency inference, and in my tests, it's pretty snappy. More importantly, they have auto-scaling built in. This means if your blog post suddenly goes viral and your AI-powered summarizer gets hit with thousands of requests, Deep Infra automatically spins up more resources to handle the load. You don't have to do a thing. It’s the kind of peace-of-mind feature that lets you sleep at night.

Let's Talk Turkey: The Deep Infra Pricing Breakdown

Okay, “pay-per-use” sounds great, but what does it actually cost? The pricing is granular, varying from model to model. They bill per million tokens, which can seem a bit abstract at first. A token is roughly ¾ of a word. So let’s make it concrete.

Here’s a quick look at some popular models (pricing can change, so always check their site for the latest):

| Model | Type | Price per 1M Input Tokens | Price per 1M Output Tokens |
|---|---|---|---|
| meta-llama/Llama-3-8B-Instruct | Text Generation | $0.07 | $0.27 |
| mistralai/Mistral-7B-Instruct-v0.1 | Text Generation | $0.07 | $0.27 |
| microsoft/phi-2 | Text Generation | $0.07 | $0.27 |
| BAAI/bge-large-en-v1.5 | Embeddings | $0.02 | N/A |
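To make the per-million-token pricing concrete, here is a back-of-the-envelope estimator that uses the rates from the table above and the rough ¾-word-per-token rule of thumb. The workload numbers are made up for illustration, and real token counts depend on each model's tokenizer.

```python
# Prices in USD per 1M tokens, copied from the table above (check the site for current rates).
PRICES = {
    "meta-llama/Llama-3-8B-Instruct": {"input": 0.07, "output": 0.27},
    "BAAI/bge-large-en-v1.5": {"input": 0.02, "output": 0.0},  # embeddings: no output tokens
}

WORDS_PER_TOKEN = 0.75  # rough rule of thumb for English text


def words_to_tokens(words: float) -> float:
    """Approximate token count from a word count."""
    return words / WORDS_PER_TOKEN


def estimate_cost(model: str, input_tokens: float, output_tokens: float) -> float:
    """Estimated USD cost for the given input and output token counts."""
    rates = PRICES[model]
    return (input_tokens * rates["input"] + output_tokens * rates["output"]) / 1_000_000


# Hypothetical workload: 1,000 requests, ~375 words in and ~225 words out each.
num_requests = 1_000
input_tokens = num_requests * words_to_tokens(375)   # about 500 tokens per request
output_tokens = num_requests * words_to_tokens(225)  # about 300 tokens per request
cost = estimate_cost("meta-llama/Llama-3-8B-Instruct", input_tokens, output_tokens)
print(f"${cost:.3f}")
```

For that hypothetical workload the math is 0.5M input tokens at $0.07 plus 0.3M output tokens at $0.27, or roughly $0.12 for a thousand requests.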

For those with more specific needs, you can even rent dedicated GPUs for running custom fine-tuned LLMs. This is a great hybrid option: you get dedicated hardware without the physical maintenance, while still using their infrastructure. It's a nice escape hatch if the shared model pool doesn't quite fit your use case.

The Not-So-Perfect Parts: A Few Caveats

No platform is perfect, and it would be dishonest to pretend otherwise. There are a few things about Deep Infra that might give you pause.

The Upfront Commitment

While the pricing model is pay-as-you-go, you can't just start playing around without putting a credit card on file or pre-paying. This is a standard practice to prevent abuse, but it does create a small barrier for students or hobbyists who just want to experiment without any financial commitment whatsoever. A small, free trial tier would be a welcome addition here.

Mind Your Limits

Out of the box, accounts are limited to 200 concurrent requests. For most applications, this is more than enough. But if you’re building the next massive-scale AI sensation, this is a number you'll need to be aware of. It's a ceiling you might hit sooner than you think if you run a very spiky, high-traffic service. I imagine they have enterprise plans to raise it, but it’s something to note for the standard tiers.
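If you expect bursts near that ceiling, one common client-side approach is to cap in-flight requests yourself so that excess work queues locally instead of piling onto the API. Below is a minimal asyncio sketch; `fake_inference_call` is a hypothetical stand-in for a real async HTTP call, and the default limit of 200 simply mirrors the account limit above (you may want some headroom below it).

```python
import asyncio

MAX_CONCURRENT = 200  # account-level limit described above; consider staying a bit under it


async def fake_inference_call(i: int) -> str:
    """Hypothetical stand-in for a real async API request."""
    await asyncio.sleep(0.01)
    return f"result-{i}"


async def run_all(n_requests: int, limit: int = MAX_CONCURRENT):
    """Run n_requests calls with at most `limit` in flight; return results and peak concurrency."""
    sem = asyncio.Semaphore(limit)
    in_flight = 0
    peak = 0

    async def guarded(i: int) -> str:
        nonlocal in_flight, peak
        async with sem:  # waits here once `limit` calls are already running
            in_flight += 1
            peak = max(peak, in_flight)
            try:
                return await fake_inference_call(i)
            finally:
                in_flight -= 1

    # gather() returns results in the same order the tasks were created
    results = await asyncio.gather(*(guarded(i) for i in range(n_requests)))
    return results, peak
```

Running `asyncio.run(run_all(1000))` queues all 1,000 calls but never lets more than 200 run at once. A semaphore is the simplest fit here because the limit is on concurrency, not on requests per second.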

Navigating Billing Tiers

The billing system has usage tiers. As your spending increases, you move into higher tiers which might have different invoicing thresholds. It's not overly complex, but it is another thing to keep track of. You need to monitor your usage to avoid any surprises. This is pretty typical for cloud services, but worth mentioning for those new to this kind of model.
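One lightweight way to keep an eye on spend between dashboard checks is to tally the token counts each response reports. The sketch below assumes OpenAI-style responses that include a `usage` object with `prompt_tokens` and `completion_tokens`; the `UsageTracker` class and its budget threshold are hypothetical, not a Deep Infra feature, and the billing dashboard remains the authoritative record.

```python
from dataclasses import dataclass


@dataclass
class UsageTracker:
    """Hypothetical client-side running tally of token spend."""
    input_price_per_m: float   # USD per 1M input tokens
    output_price_per_m: float  # USD per 1M output tokens
    budget_usd: float          # alert threshold you choose
    spent_usd: float = 0.0

    def record(self, response: dict) -> bool:
        """Add one response's usage; return True once spend reaches the budget."""
        usage = response.get("usage", {})  # missing usage counts as zero tokens
        cost = (usage.get("prompt_tokens", 0) * self.input_price_per_m
                + usage.get("completion_tokens", 0) * self.output_price_per_m) / 1_000_000
        self.spent_usd += cost
        return self.spent_usd >= self.budget_usd
```

Pass each parsed response body to `record()` and act on a `True` result however suits your app: log it, alert someone, or pause non-essential traffic. It does not model the tier thresholds themselves, only your own running total.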

Deep Infra in the Wild: How it Stacks Up

So where does Deep Infra fit into the broader ecosystem? It's competing in a crowded space with players like Replicate, Anyscale, and even the big cloud providers' own AI services. In my opinion, Deep Infra's strength is its narrow focus on being a fast, cheap, and reliable inference utility. It's less of an all-in-one MLOps platform and more of a specialized API for getting model outputs.

If other platforms are a Swiss Army Knife with a bunch of tools, Deep Infra is a perfectly weighted, razor-sharp chef’s knife. It does one job, and it does it exceptionally well.

If you need extensive tools for fine-tuning, dataset management, and a fancy UI, you might look elsewhere. But if your goal is simply to call a powerful AI model from your code as efficiently as possible, Deep Infra is a very, very compelling option.

Frequently Asked Questions about Deep Infra

- Is Deep Infra good for beginners?
  - Yes and no. If you're a developer who is new to AI but comfortable with APIs, it's fantastic. The easy integration is a huge plus. If you're a complete non-coder, it's probably not the right tool for you, as it requires some programming to use.

- How does Deep Infra's pricing really work?
  - For most language models, you pay based on the amount of text you process, measured in tokens (both input and output). For other models, like image generators, you might be billed per second of inference time. It’s a usage-based model, so you only pay for the computation you actually use.

- Can I run my own custom model on Deep Infra?
  - Yes! This is a powerful feature. You can deploy custom Large Language Models (LLMs) on dedicated GPU instances. This gives you the performance of your own hardware with the convenience of Deep Infra's infrastructure.

- What kinds of AI models can I find on Deep Infra?
  - A whole bunch. Their library includes models for text generation (like Llama and Mistral), text-to-image (like Stable Diffusion), text-to-speech, and automatic speech recognition (ASR). They tend to feature popular and state-of-the-art open-source models.

- Is Deep Infra scalable enough for a real business?
  - Absolutely. With features like auto-scaling, it's designed for production workloads. It can handle traffic spikes automatically, which is a major benefit for any growing application or business.

The Final Verdict: Is Deep Infra Worth It?

After spending some quality time with it, my answer is a resounding yes, at least for the right person.

Deep Infra is a fantastic tool for developers, startups, and small to medium-sized businesses who want to leverage the power of AI without the astronomical cost and complexity of building their own infrastructure. The pay-per-use pricing is fair, the performance is solid, and the ease of use is genuinely best-in-class. It democratizes access to incredibly powerful technology.

It might not be the perfect fit for a massive enterprise with a deeply entrenched, custom MLOps pipeline, or for someone who needs a completely codeless interface. But for the vast majority of us building on the modern web, it hits a sweet spot that has been sorely needed for a long time. It lets you focus on what matters: building amazing things.

References and Sources

- Deep Infra official website

- Deep Infra pricing page

- Replicate, a similar platform for AI model deployment