I spend my days eyeballs-deep in analytics, trends, and the latest marketing tech. Let's be honest: the AI hype train has been chugging along at full steam for a while now. We've all seen what large language models can do with text. We’ve been wowed by image generators. But I’ve always felt a piece was missing. A disconnect. AI is incredibly book-smart, but it’s rarely… well, street-smart. It lives in the cloud, detached from my immediate reality.

I can ask GPT-4 about the history of the Eiffel Tower, but I can't ask it, "Hey, based on this gloomy sky and the current humidity, should I bring an umbrella for my walk to that specific coffee shop over there?" The AI lacks my context. My senses. It's like having a conversation with a genius who's locked in a soundproof room.

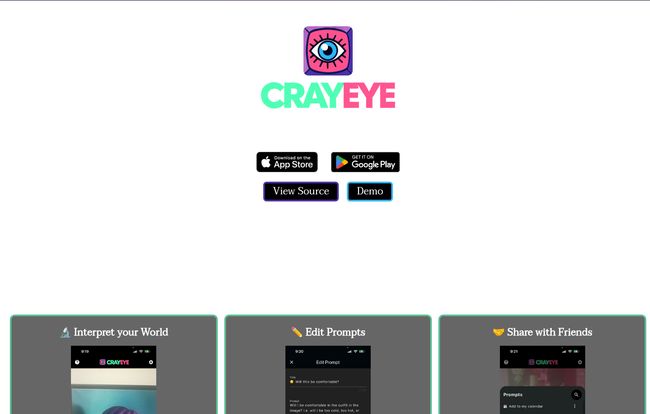

Then I stumbled upon a curious little tool called CrayEye. And I have to say, it’s one of the first things I've seen that genuinely tries to bridge that gap. It’s not just another AI chatbot; it's an attempt to give AI a nervous system, to plug it directly into the real world through the one thing we all carry: our smartphone.

So, What on Earth is CrayEye?

Alright, let's cut through the jargon. CrayEye is a free, open-source “multimodal multitool.” Fancy words, I know. In simple terms, it lets you create and share AI prompts that combine what your phone’s camera sees with data from its other sensors and APIs. Think location, weather, time of day, you name it.

Instead of just showing the AI a picture and asking “What is this?”, you can build a much richer query. It’s the difference between showing a botanist a photo of a leaf versus bringing them the whole plant, soil and all, and telling them exactly where and when you found it. The context changes everything.

And here’s the kicker, the part that made me raise an eyebrow: the website says CrayEye was “written by A.I.” We’re officially at the point where AI is building the tools to make itself more powerful. A little meta, a little unnerving, but absolutely fascinating.


Giving AI Your Senses

What really makes CrayEye tick isn't just one single thing, but the way it blends a few cool ideas together. It’s a bit of a Frankenstein’s monster, in the best possible way.

More Than Just a Picture

The term “multimodal” gets thrown around a lot in AI circles. It just means the model can understand and process different types of information at once: text, images, sound, and so on. We’ve seen this with powerful models like OpenAI’s GPT-4V. CrayEye takes this concept and puts it into a practical, pocket-sized application. You’re not just sending an image; you’re sending an entire data packet describing your immediate environment. That makes room for layered, specific prompts that have been clunky or impossible until now.
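To make the “data packet” idea a little more concrete, here is a minimal Python sketch of bundling a camera frame, a prompt, and a few sensor readings into a single JSON payload. Treat it as an illustration under assumptions, not CrayEye’s actual code: the `SensorContext` fields, the `build_packet` helper, and the payload keys are all hypothetical, and every real multimodal API defines its own request format.

```python
import base64
import json
from dataclasses import dataclass, asdict


@dataclass
class SensorContext:
    # Hypothetical sensor fields; CrayEye's real set of sensors and APIs may differ.
    latitude: float
    longitude: float
    local_time: str
    temperature_c: float


def build_packet(image_bytes: bytes, prompt: str, context: SensorContext) -> str:
    """Bundle an image, a text prompt, and sensor readings into one JSON string.

    The payload layout is a hypothetical example, not a documented CrayEye format.
    """
    packet = {
        "prompt": prompt,
        # Binary image data is base64-encoded so it can travel inside JSON.
        "image_base64": base64.b64encode(image_bytes).decode("ascii"),
        # Sensor readings ride along as plain key/value context.
        "context": asdict(context),
    }
    return json.dumps(packet)


if __name__ == "__main__":
    ctx = SensorContext(latitude=40.7128, longitude=-74.0060,
                        local_time="18:30", temperature_c=22.5)
    payload = build_packet(b"placeholder image bytes", "What is this plant?", ctx)
    print(payload[:80])
```

The point is simply that the model receives the picture and its surroundings together, rather than the picture alone.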

The Secret Sauce: Sensor and API Integration

This is the core of its potential. By letting you customize prompts with sensor data, CrayEye gives the AI real-time, hyperlocal information. Imagine pointing your camera at your sad-looking tomato plant and crafting a prompt like:

"Analyze this plant's health. It's currently {time_of_day} in {city}, the temperature is {weather_temp}, and it hasn't rained in {days_since_rain} days. Based on the leaf discoloration and these conditions, what's the most likely issue and what should I do?"

Suddenly, the AI isn’t just guessing from a generic picture of a plant. It’s more like a consulting agronomist working with real-time field data. That’s a massive leap.
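Under the hood, a prompt like the tomato-plant example is essentially a template whose placeholders get filled from live readings. The Python sketch below shows one way that could work, using the standard `str.format_map` method. The placeholder names come from the example prompt, but the `fill_prompt` helper, the `KeepMissing` class, and the sample readings are hypothetical. CrayEye’s own substitution logic may well differ.

```python
class KeepMissing(dict):
    """Mapping that leaves unknown placeholders untouched instead of raising KeyError."""

    def __missing__(self, key: str) -> str:
        return "{" + key + "}"


# Template mirroring the example prompt; placeholder names are illustrative only.
TEMPLATE = (
    "Analyze this plant's health. It's currently {time_of_day} in {city}, "
    "the temperature is {weather_temp}, and it hasn't rained in {days_since_rain} days. "
    "Based on the leaf discoloration and these conditions, "
    "what's the most likely issue and what should I do?"
)


def fill_prompt(template: str, readings: dict) -> str:
    """Substitute sensor/API readings into {placeholder} slots (hypothetical helper)."""
    return template.format_map(KeepMissing(readings))


if __name__ == "__main__":
    # Hypothetical readings standing in for clock, GPS, and weather data.
    readings = {
        "time_of_day": "afternoon",
        "city": "Austin",
        "weather_temp": "95°F",
        "days_since_rain": 9,
    }
    print(fill_prompt(TEMPLATE, readings))
```

Leaving unknown placeholders in place, rather than raising an error, is a deliberate choice in this sketch. If a sensor is unavailable (say, that wonky GPS), the model at least sees that the value is missing instead of the whole prompt failing.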

The Open-Source Spirit

I have a soft spot for open-source projects. It means transparency. It means community. The fact that CrayEye is open-source (you can literally hit “View Source” and peek under the hood) is a huge plus. It invites developers to tinker with, improve, and adapt the tool. It also builds trust, which is desperately needed in an industry that can often feel like a black box. You’re not just using a product; you’re engaging with an idea that a community can build upon. It also makes sneaky subscription fees popping up down the line much less likely.

The Good, The Quirky, and The Maybe-Not-So-Good

No tool is perfect, right? After playing around with the concept and looking at what it offers, here’s my honest take. It’s not a formal review, just what I’m seeing from my little corner of the internet.

What I love is obvious: it’s free, it’s open-source, and it’s pushing a genuinely innovative idea. The ability to craft and share these hyper-contextual prompts is a playground for creativity. I can see this being used for everything from education (imagine a museum tour guide app) to DIY home repair. The potential is immense.

However, there are some things that give me pause. The effectiveness of CrayEye is completely chained to the quality and availability of your device’s sensors. Got an old phone with a wonky GPS? Your results might suffer. It also seems like it would require a bit of technical know-how to really unlock its full power. You have to think like a programmer, crafting prompts with variables. This isn’t your grandma’s point-and-shoot AI app. Not yet, anyway.

There's also a bit of a question mark around the specific AI models it uses and their full capabilities. But for a free, experimental tool, that's kind of expected. You’re on the cutting edge, and the cutting edge is never perfectly polished.

What’s the Price of Admission?

This part is easy. It’s free. As in, zero dollars. Zilch. In an age of endless SaaS subscriptions and freemium models designed to upsell you, “free and open-source” is a breath of fresh air. This lowers the barrier to entry so anyone with a smartphone and a bit of curiosity can start experimenting.

Who Is This Really For?

So, should you rush to download it? Well, it depends on who you are.

- Developers & Tinkerers: Absolutely. This is a sandbox for you. The open-source nature means you can dig in, contribute, and maybe even build your own applications on top of its ideas.

- AI Enthusiasts: For sure. If you want to see where AI is heading beyond simple text prompts, this is a fascinating glimpse into the future of context-aware interaction.

- Educators: I see huge potential here for creating interactive learning experiences. Science classes, history lessons, art analysis... the list goes on.

- The Everyday User: Maybe wait a little bit. While you could definitely have some fun with it, the need to manually craft prompts might feel a bit clunky if you’re just looking for a simple answer. But if you’re curious, why not? It's free.

The Bigger Picture for Marketers and SEOs

Okay, putting my SEO hat back on. Why does a tool like CrayEye matter to us? Because it’s a sign of what’s to come. We’re moving away from generic search queries and toward highly specific, contextual, and conversational interactions. This technology could have wild implications for things like local SEO, where proximity and real-time conditions are everything. Imagine a user asking an AI, "Where can I get the best iced coffee near me right now, considering it's 90 degrees out and I want a place with outdoor seating in the shade?" That’s a CrayEye-style query. The businesses that can provide that level of detailed, structured data are the ones that will win in the AI-driven future.

Final Thoughts: A Gimmick or a Glimpse of the Future?

So, is CrayEye the final frontier? Probably not. But it's an important and exciting step. It’s a clever solution to AI’s “locked room” problem. By giving models access to our real-world context, it makes them far more practical and, dare I say, more human. It’s messy, a bit nerdy, and probably not for everyone just yet. But the best ideas usually start that way. I, for one, am excited to see where the community takes it.

Frequently Asked Questions About CrayEye

- 1. What is CrayEye in simple terms?

- CrayEye is a free mobile app that lets you combine pictures from your camera with data like your location or the current weather to ask AI more detailed questions about the world around you.

- 2. Is CrayEye free to use?

- Yes, CrayEye is completely free and open-source. No hidden costs or subscriptions are mentioned.

- 3. Do I need to be a programmer to use it?

- While anyone can use it, you'll get the most out of CrayEye if you're comfortable thinking logically and crafting detailed prompts. Some technical curiosity is definitely a plus.

- 4. What does "multimodal" mean?

- Multimodal AI refers to artificial intelligence that can process and understand multiple types of data at once. For CrayEye, this means it uses both image data (from the camera) and text/sensor data (like GPS coordinates) in a single prompt.

- 5. Is CrayEye available on both iOS and Android?

- Yes. The app's website shows download links for both the Apple App Store and the Google Play Store.

- 6. Where can I see the source code?

- The app's website provides a "View Source" button, which typically leads to a code repository like GitHub where you can view and contribute to the project.

Reference and Sources

- OpenAI's Research on GPT-4V (Vision) - For background on multimodal AI capabilities.

- GitHub - As a general reference for open-source projects. A specific link for CrayEye would be found on their official site.

- Hugging Face - A central hub for the open-source AI community.