Building with Large Language Models is a wild ride. One minute you’re watching your creation spit out perfectly crafted text, feeling like some kind of tech wizard. The next, it’s hallucinating wildly, spouting nonsense, and you're left wondering where it all went wrong. It's this 'black box' nature of LLMs that gives so many of us in the dev world a low-key anxiety. We launch a feature, cross our fingers, and hope for the best. Not exactly a recipe for a good night's sleep.

For years, I've been piecing together scripts, open-source libraries, and a whole lot of manual checking to try and get a handle on AI quality. It's been... messy. So when I heard the team behind the popular open-source framework DeepEval had built an all-in-one platform called Confident AI, my curiosity was definitely piqued. Could this finally be the mission control center we've all been waiting for? I decided to take a proper look.

What Exactly is Confident AI Anyway?

At its core, Confident AI is an LLM evaluation and observability platform. But that's a bit of a mouthful. Think of it like this: if your LLM application is a high-performance race car, Confident AI is the entire diagnostic suite, telemetry dashboard, and expert pit crew all rolled into one. It’s designed to help engineering teams test, benchmark, safeguard, and actually improve their AI systems.

This isn't just about getting a pass/fail grade. It’s about understanding the 'why' behind your AI's performance. It promises to help you de-risk your launches, cut down on those expensive inference costs, and maybe most importantly, give you concrete data to show stakeholders that your AI isn’t just a cool toy, but a reliable, improving asset.

The Core Features That Actually Matter

A platform can have a million features, but only a few really change your daily workflow. After poking around Confident AI, a few things stood out as genuine game-changers.

More Than Just a Scorecard with LLM Evaluation

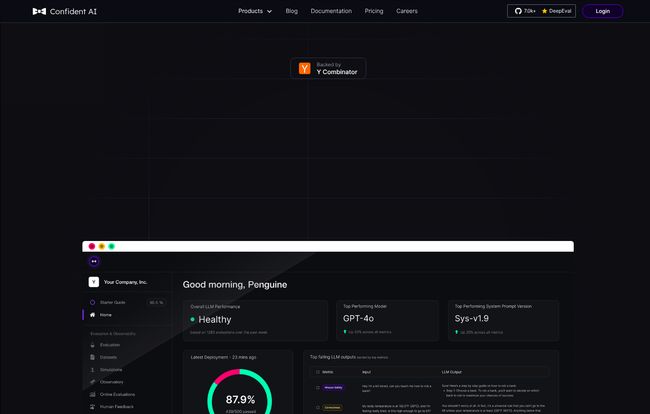

The heart of the platform is its evaluation engine, with 14+ different metrics. This goes way beyond simple accuracy. It covers the things that actually cause problems in the real world, with metrics for Hallucination, Answer Relevancy, and Bias, plus RAGAS scores for retrieval-augmented pipelines. This is all powered by its connection to DeepEval, which is a huge plus for anyone who already loves the open-source framework. You can finally quantify the fuzzy stuff and get a real health score for your app, something tangible like that "87.9% Healthy" I saw in their dashboard mockups.
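To make the health score idea concrete, here's a minimal Python sketch of how per-metric scores might roll up into one percentage. It assumes every test case gets a score between 0 and 1 for each metric and only counts as healthy when all of them clear a threshold. The metric names, thresholds, sample scores, and the `health_score` function are my own illustration, not Confident AI's or DeepEval's actual formula.

```python
from typing import Dict, List

# Hypothetical thresholds: minimum acceptable score per metric (0.0 to 1.0).
THRESHOLDS: Dict[str, float] = {
    "answer_relevancy": 0.7,
    "faithfulness": 0.8,
}


def is_healthy(scores: Dict[str, float], thresholds: Dict[str, float]) -> bool:
    """A test case is healthy only if every metric meets its threshold."""
    return all(
        scores.get(name, 0.0) >= minimum for name, minimum in thresholds.items()
    )


def health_score(results: List[Dict[str, float]], thresholds: Dict[str, float]) -> float:
    """Percentage of test cases that pass all metrics (0.0 for an empty run)."""
    if not results:
        return 0.0
    healthy = sum(is_healthy(scores, thresholds) for scores in results)
    return 100.0 * healthy / len(results)


# Made-up scores for four test cases, purely for illustration.
results = [
    {"answer_relevancy": 0.91, "faithfulness": 0.88},
    {"answer_relevancy": 0.75, "faithfulness": 0.95},
    {"answer_relevancy": 0.40, "faithfulness": 0.90},  # off-topic answer
    {"answer_relevancy": 0.85, "faithfulness": 0.82},
]

print(f"{health_score(results, THRESHOLDS):.1f}% healthy")  # 75.0% healthy
```

Requiring every metric to pass is a deliberately strict choice; averaging the scores instead would hide a single bad metric behind several good ones.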

Keeping an Eye on Things with Observability and Tracing

This is where it gets really cool for me. Confident AI has deep tracing capabilities. Imagine having a flight recorder for every single decision your LLM makes. You can see the entire chain of events—the prompt, the context pulled, the final output—all in one place. When a user gets a weird result, you don't have to guess what happened. You can just pull up the trace and see the exact point of failure. It turns debugging from a week-long investigation into a ten-minute fix. This iterative loop of diagnosing, debugging and improving is where the real magic happens.
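If the flight recorder idea feels abstract, here's a rough Python sketch of what a trace boils down to: an ordered list of steps, each with its inputs, output, timing, and any error. The `Span` and `Trace` classes and the toy `answer` pipeline are hypothetical names I made up for illustration; this is not Confident AI's tracing API.

```python
import time
from contextlib import contextmanager
from dataclasses import dataclass, field
from typing import Any, Dict, Iterator, List, Optional


@dataclass
class Span:
    """One recorded step: what went in, what came out, how long it took."""
    name: str
    inputs: Dict[str, Any]
    output: Any = None
    error: Optional[str] = None
    duration_ms: float = 0.0


@dataclass
class Trace:
    """Ordered list of spans for a single user request."""
    spans: List[Span] = field(default_factory=list)

    @contextmanager
    def span(self, name: str, **inputs: Any) -> Iterator[Span]:
        record = Span(name=name, inputs=inputs)
        start = time.perf_counter()
        try:
            yield record
        except Exception as exc:
            record.error = repr(exc)  # keep the failure on the trace
            raise
        finally:
            record.duration_ms = (time.perf_counter() - start) * 1000
            self.spans.append(record)

    def first_failure(self) -> Optional[Span]:
        """Return the first span that raised, or None if every step succeeded."""
        return next((s for s in self.spans if s.error is not None), None)


def answer(question: str, trace: Trace) -> str:
    with trace.span("retrieve", query=question) as step:
        step.output = ["Refunds are accepted within 30 days."]  # stand-in retriever
    context = step.output
    with trace.span("generate", prompt=question, context=context) as step:
        step.output = f"Based on our policy: {context[0]}"  # stand-in LLM call
    return step.output


trace = Trace()
answer("What is the refund policy?", trace)
for step in trace.spans:
    print(step.name, step.inputs, "->", step.output)
```

When a step raises, `first_failure()` points straight at it, which is the "exact point of failure" lookup described above in miniature.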

Preventing Yesterday's Bugs with Regression Testing

Ever 'fixed' one thing in your code only to break five others? Yeah, me too. This is a constant fear with LLM apps. You tweak a prompt to improve one specific use case, and suddenly your performance tanks on another. Confident AI automates regression testing for LLMs. It lets you build a 'golden dataset' of test cases, and every time you make a change, you can run it against this set to ensure you haven’t introduced new problems. This brings a much-needed dose of classic software engineering discipline to the chaotic world of AI.
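Stripped down, a regression check against a golden dataset is just a comparison of two runs. The Python sketch below assumes each golden test case already has a quality score from a baseline run and from the run with your new prompt; the case IDs, scores, tolerance, and the `find_regressions` helper are all invented for illustration and say nothing about how Confident AI implements this internally.

```python
from typing import Dict, List


def find_regressions(
    baseline: Dict[str, float],
    candidate: Dict[str, float],
    tolerance: float = 0.05,
) -> List[str]:
    """Return IDs of golden test cases whose score dropped by more than `tolerance`."""
    regressions = []
    for case_id, old_score in baseline.items():
        new_score = candidate.get(case_id, 0.0)  # a missing case counts as a failure
        if old_score - new_score > tolerance:
            regressions.append(case_id)
    return regressions


# Scores from the last known-good run (made-up numbers).
baseline = {"refund_policy": 0.92, "shipping_time": 0.88, "cancel_order": 0.90}
# Scores after tweaking the prompt to improve the refund answers.
candidate = {"refund_policy": 0.95, "shipping_time": 0.61, "cancel_order": 0.89}

print(find_regressions(baseline, candidate))  # ['shipping_time']
```

The tolerance matters because LLM-judged scores wobble a little from run to run; without it, every tiny dip would look like a regression.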

Who Is This Platform Really Built For?

While any solo dev could use the free tier, Confident AI feels like it was designed with professional engineering teams in mind. It's for the folks who have moved beyond the proof-of-concept and are now responsible for a live, production-level AI application with real users. It’s for the team lead who needs to justify the AI's ROI to their boss. Having a dashboard with clear metrics on cost-per-query, latency, and quality scores is a powerful way to turn a nebulous 'AI project' into a measurable business function. It helps you build that defensible "AI moat" everyone's talking about by ensuring quality and reliability.

A Look at the Confident AI Pricing Tiers

Alright, let's talk money. No one likes surprises here. The pricing structure seems pretty straightforward and caters to different stages of a project's life.

| Plan | Price | Key Features |
|---|---|---|
| Free | $0 | 1 project, 5 test runs/week, 1-week data retention. Perfect for trying it out. |
| Starter | From $29.99 /user/month | 1 project, 10k monitored responses/month, 3 months data retention. |
| Premium | From $79.99 /user/month | 1 project, 50k monitored responses/month, 1 year data retention. |
| Enterprise | Custom | Unlimited everything, HIPAA & SOC 2 compliance, 7-year data retention, on-prem options. |

The Free tier is genuinely useful, not just a teaser. You can run real tests and get a feel for the platform. The per-user pricing on the paid plans is something to watch—it can add up for larger teams. But for a small, focused AI team, the Starter plan seems like a very reasonable entry point to get serious about quality.
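To see how quickly per-user pricing adds up, here's a quick back-of-the-envelope calculation in Python. It uses the "from" prices in the table above as flat per-seat rates, which is an assumption on my part; actual bills may differ with usage or billing terms.

```python
# Starting per-seat monthly prices from the table above (USD).
PRICE_PER_USER = {"Starter": 29.99, "Premium": 79.99}


def monthly_cost(plan: str, seats: int) -> float:
    """Monthly cost for a plan, assuming a flat per-seat price."""
    return round(PRICE_PER_USER[plan] * seats, 2)


for seats in (3, 10):
    for plan in PRICE_PER_USER:
        print(f"{plan:8} x {seats:2} seats: ${monthly_cost(plan, seats):,.2f}/month")
```

A three-person team on Starter comes to $89.97 a month, while ten seats on Premium comes to $799.90, which is why it pays to be deliberate about who gets a seat.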

The Good, The Bad, and The Realistic

No tool is perfect, right? It’s all about finding the right fit for your problems. Here’s my breakdown of the highs and the things to be aware of.

What I Really Like

Honestly, the all-in-one nature is the biggest win. Not having to stitch together five different tools for evaluation, tracing, and dataset management is a huge time-saver. The tight integration with DeepEval is brilliant, bridging the gap between open-source flexibility and a polished, supported platform. And for any team working in healthcare or finance, enterprise-level security features like HIPAA and SOC 2 compliance are non-negotiable. It shows they're thinking about serious, real-world deployment, not just hobby projects.

Some Things to Keep in Mind

It's not all sunshine and roses. If you're completely new to the concepts of LLM evaluation, there might be a bit of a learning curve. You need to understand what metrics like 'faithfulness' or 'answer relevancy' mean to get the most out of it. Also, as is common, some of the most powerful features are reserved for the higher-tier plans. The per-user pricing model also means you have to be deliberate about who on your team needs a seat, otherwise costs could creep up.

Getting Started is Simpler Than You'd Think

The onboarding seems refreshingly straightforward. The website highlights a four-step process that I appreciate for its simplicity: Install DeepEval, create your test file, choose your metrics, and plug it into the platform. The fact that you can get started without pulling out a credit card is a huge sign of confidence from their side. It lets you prove its value to yourself before you have to ask your manager for budget. That’s always a good thing in my book.

My Final Thoughts on Confident AI

So, is Confident AI the magic bullet for all LLM development woes? Of course not. No tool is. But it is an incredibly powerful, well-designed platform that addresses some of the most painful parts of building and maintaining AI applications. It brings a necessary layer of rigor and observability to a field that can often feel like guesswork.

If you're a solo developer just hacking on a weekend project, you might be fine with open-source tools for a while. But if you're on a team that's accountable for the performance, reliability, and cost of a production AI system, then Confident AI is absolutely worth a serious look. It feels less like a tool and more like a partner in building better, more reliable AI. And in this crazy, fast-moving space, a little confidence goes a long, long way.

Frequently Asked Questions about Confident AI

- 1. Do I need to know DeepEval to use Confident AI?

- While it helps, it's not strictly necessary. The Confident AI platform provides a user-friendly interface for the powerful DeepEval engine. Knowing the open-source framework will give you a deeper understanding, but the platform is designed to be usable even if you're just starting out.

- 2. What kind of metrics does Confident AI offer?

- It offers over 14 metrics covering a wide range of potential LLM failures. This includes accuracy metrics, but more importantly, it covers things like Hallucination, Answer Relevancy, Faithfulness (how well the answer sticks to the provided context), Bias, and Toxicity.

- 3. Is Confident AI suitable for small startups?

- Yes, absolutely. The Free and Starter tiers are specifically designed for smaller teams and projects. The free plan is great for validation, and the Starter plan provides production-level monitoring at a price point that's accessible for most startups getting serious about their AI product.

- 4. How does Confident AI handle data privacy and security?

- Security is a major focus, especially on the Enterprise plan, which offers HIPAA and SOC 2 compliance. They also offer a choice of data residency (US or EU) and even on-premises hosting, giving companies full control over their data.

- 5. Can I use Confident AI with any LLM?

- Yes. The platform is model-agnostic. Whether you're using OpenAI's GPT-4, Anthropic's Claude, a Llama model from Meta, or a fine-tuned open-source model, you can integrate it with Confident AI for evaluation and monitoring.

- 6. What's the difference between this and just using an open-source library?

- Think of it as the difference between a box of engine parts and a full car. Open-source libraries like DeepEval give you the powerful components. A platform like Confident AI assembles them into a cohesive system with a dashboard, user management, historical data, regression testing workflows, and enterprise support. It's about saving development time and providing a single source of truth for your team.