We’re all on the same hamster wheel, aren’t we? The content treadmill. Churning out blog posts, newsletters, and of course, video. Endless, endless video. You spend days, maybe even weeks, scripting, shooting, and editing a fantastic hour-long webinar or a deep-dive podcast episode. You hit publish. And then... crickets. Or at least, not the standing ovation you were hoping for.

The problem is that the beautiful long-form piece you created needs to be sliced and diced for the hyper-speed world of TikTok, Instagram Reels, and YouTube Shorts. People want the highlights, the golden nuggets, the zingers. And who has the time to manually scrub through an hour of footage to find them? I know I don’t. I've spent more hours than I care to admit hunched over Adobe Premiere, my eyes glazing over, looking for that one perfect 30-second clip. It’s soul-crushing work.

So, when I stumbled across a tool called Clips AI, my ears perked up. The promise? Automating the creation of social media clips from long-form videos. For free. Sounds too good to be true, right? Well, it kinda is. And it kinda isn’t.

So, What on Earth is Clips AI?

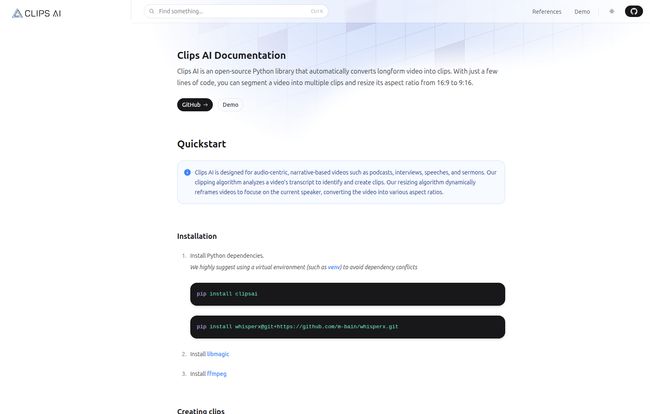

Let's get one thing straight right away: this isn't some slick, drag-and-drop web application where you upload a video and a magic unicorn spits out perfect clips. Nope. Clips AI is an open-source Python library. If that last sentence made you break out in a cold sweat, this might not be the tool for you, and that’s okay. But if you’re a bit of a tinkerer, or you have a developer on your team, stick with me.

It's designed specifically for what the creators call “audio-centric, narrative-based videos.” Think podcasts, interviews, keynote speeches, sermons, or educational webinars. Basically, any content where the words are the star of the show. It’s not built for highlight reels of your latest vacation or a montage of epic drone shots. Its brain is all about language.

How It Actually Works (The Techy Bit)

The magic behind Clips AI is a two-part process. It’s less like a magician pulling a rabbit out of a hat and more like a very, very smart and efficient robot assistant. It first finds the good parts, and then it makes them look good.

Finding the Gold with Transcript Analysis

First, Clips AI doesn’t watch your video; it reads it. It uses an open-source transcription model (the documentation points to a powerful one called WhisperX) to create a full transcript of your video. This is the foundation for everything. The clipping algorithm then analyzes this text, looking for the most compelling, self-contained segments: the bits that make sense on their own, the juicy quotes and the powerful statements. This transcript-based approach is much smarter than randomly chopping a video into 60-second chunks. It's a more intelligent way to find the actual value.
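To make the idea concrete, here is a toy sketch of the first thing any transcript-based clipper needs: candidate clips that start and end on sentence boundaries and fit a target length. This is not the algorithm Clips AI uses. The `Sentence` class, the `candidate_windows` function, and the 15 to 60 second limits are hypothetical choices for illustration. A real tool would go on to score these candidates and keep only the best ones.

```python
from dataclasses import dataclass


@dataclass
class Sentence:
    text: str
    start: float  # seconds from the start of the video
    end: float    # seconds from the start of the video


def candidate_windows(sentences, min_len=15.0, max_len=60.0):
    """Return (start, end, text) windows that begin and end on sentence
    boundaries and last between min_len and max_len seconds.

    Hypothetical helper: assumes sentences are sorted by time.
    """
    windows = []
    for i in range(len(sentences)):
        for j in range(i, len(sentences)):
            start, end = sentences[i].start, sentences[j].end
            duration = end - start
            if duration > max_len:
                break  # adding more sentences only makes the window longer
            if duration >= min_len:
                text = " ".join(s.text for s in sentences[i:j + 1])
                windows.append((start, end, text))
    return windows


if __name__ == "__main__":
    transcript = [
        Sentence("A.", 0.0, 10.0),
        Sentence("B.", 10.0, 25.0),
        Sentence("C.", 25.0, 70.0),
    ]
    for window in candidate_windows(transcript):
        print(window)
```

Unlike fixed 60-second chunks, no window here ever cuts a speaker off mid-sentence.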

The Magic of Dynamic Reframing

Once it has a clip, it tackles the next big problem: aspect ratios. Your 16:9 webinar recording looks awful on a vertical phone screen. Clips AI has a resizing algorithm that dynamically reframes the video to focus on who’s talking. It uses a technique called speaker diarization (with help from another tool, Pyannote) to identify “who spoke when.” So if you have two people in an interview, the frame will automatically shift to the person who is currently speaking. No more awkward empty space or manually keyframing the position. Pretty slick.
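Here is a simplified sketch of how "who spoke when" can drive a vertical crop. It assumes you already have speaker turns (the kind of output a diarization model such as Pyannote produces) and a known horizontal position for each speaker in a 1920x1080 frame; in practice that position would come from something like face detection. The `Turn` class, the `crop_window` function, and the speaker positions are hypothetical, and this is not Clips AI's actual resizing code.

```python
from dataclasses import dataclass


@dataclass
class Turn:
    speaker: str
    start: float  # seconds
    end: float    # seconds


def active_speaker(turns, t):
    """Return the speaker talking at time t, or None during silence."""
    for turn in turns:
        if turn.start <= t < turn.end:
            return turn.speaker
    return None


def crop_window(t, turns, speaker_x, frame_w=1920, frame_h=1080, aspect=(9, 16)):
    """Return (left, width) of a full-height crop centered on the active speaker."""
    crop_w = int(frame_h * aspect[0] / aspect[1])
    crop_w -= crop_w % 2  # keep the width even; many encoders require it
    speaker = active_speaker(turns, t)
    # Fall back to the frame center when nobody is speaking or the position is unknown
    center = speaker_x.get(speaker, frame_w / 2) if speaker else frame_w / 2
    left = int(center - crop_w / 2)
    # Clamp so the crop never extends past the frame edges
    return max(0, min(left, frame_w - crop_w)), crop_w


if __name__ == "__main__":
    turns = [Turn("host", 0.0, 5.0), Turn("guest", 5.0, 10.0)]
    positions = {"host": 480, "guest": 1440}  # hypothetical x-coordinates in pixels
    for t in (2.0, 7.0, 12.0):
        print(t, crop_window(t, turns, positions))
```

A production version would also smooth the crop between speaker changes so the frame doesn't jump abruptly.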


The Good, The Bad, and The Code-y

Alright, so we've established it's a powerful tool with some serious brains. But as with any tool, especially an open-source one, there are trade-offs. Let's break down what I love and what gives me pause.

Why I'm Genuinely Excited

The biggest pro is staring us right in the face: it’s free. In a world of ever-increasing SaaS subscriptions, a powerful, free library is a breath of fresh air. For agencies or marketing teams looking to build an in-house, automated content repurposing workflow, this is a phenomenal starting point. You can save a ridiculous amount of time and money that would otherwise be spent on manual editing or expensive software.

Automating this process frees up your team to focus on strategy and creativity instead of mind-numbing, repetitive tasks. Think about it: you finish a podcast recording, run a script, and an hour later you have 10-15 potential social clips ready for review. That’s a massive win for social media engagement and traffic generation.
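The last mile of that workflow is cutting the chosen segments out of the source file. Below is a minimal sketch that turns a list of (start, end) timestamps into `ffmpeg` commands, assuming the timestamps came from a clip-finding step. The file names and the `ffmpeg_cut_command` and `batch_commands` helpers are hypothetical. Re-encoding with `libx264` and `aac` is one choice among several: it gives frame-accurate cuts at the cost of speed.

```python
import shlex


def ffmpeg_cut_command(src, start, end, out):
    """Build an ffmpeg argument list that extracts [start, end) seconds from src."""
    duration = end - start
    if duration <= 0:
        raise ValueError("end must be after start")
    return [
        "ffmpeg", "-y",
        "-ss", f"{start:.3f}",   # seek in the input before decoding
        "-i", src,
        "-t", f"{duration:.3f}",  # length of the output clip
        "-c:v", "libx264", "-c:a", "aac",  # re-encode for accurate cut points
        out,
    ]


def batch_commands(src, clips, prefix="clip"):
    """One command per (start, end) pair, numbered clip_01.mp4, clip_02.mp4, ..."""
    return [
        ffmpeg_cut_command(src, start, end, f"{prefix}_{i:02d}.mp4")
        for i, (start, end) in enumerate(clips, 1)
    ]


if __name__ == "__main__":
    for cmd in batch_commands("podcast.mp4", [(12.5, 48.0), (300.0, 355.25)]):
        # Review first; run with subprocess.run(cmd, check=True) when ready
        print(shlex.join(cmd))
```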

The Reality Check

Now for the other side of the coin. The main hurdle is obvious: you need to be comfortable with Python and the command line. The documentation shows you need to run `pip install` commands and install dependencies like `ffmpeg`. This isn't for the technically faint of heart. It’s not an app; it's a toolkit.

You also need a Hugging Face access token to use the resizing feature. It’s free, but it's another step in the setup process. And the quality of your clips depends entirely on the quality of your transcription. If your audio is poor or the transcription model messes up, the clipping algorithm will be flying blind. Garbage in, garbage out, as they say.
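Because setup is where most people get stuck, a small preflight check can save some head-scratching before a long job. This sketch assumes you keep your Hugging Face token in an environment variable named `HF_TOKEN` (a common convention, not necessarily how Clips AI expects to receive it). The module names `clipsai` and `whisperx` follow the package names mentioned in the documentation; the `missing_prerequisites` function itself is hypothetical.

```python
import importlib.util
import os
import shutil


def missing_prerequisites(env=None, which=shutil.which, find_spec=importlib.util.find_spec):
    """Return a list of human-readable problems; an empty list means ready to go.

    `which` and `find_spec` are injectable so the check can be tested offline.
    """
    env = os.environ if env is None else env
    missing = []
    if which("ffmpeg") is None:
        missing.append("ffmpeg not found on PATH")
    for module in ("clipsai", "whisperx"):
        if find_spec(module) is None:
            missing.append(f"Python package '{module}' is not installed")
    # Assumption: the token lives in HF_TOKEN; adjust to however you pass it in
    if not env.get("HF_TOKEN"):
        missing.append("HF_TOKEN is not set (needed for resizing)")
    return missing


if __name__ == "__main__":
    problems = missing_prerequisites()
    print("Ready." if not problems else "\n".join(problems))
```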

So Who Is Clips AI Actually For?

This is the million-dollar question. Clips AI is NOT for the solo entrepreneur who just wants to click a button and get clips. For that, you’re better off with services like Opus Clip or Descript. They have a monthly fee, but you’re paying for the user-friendly interface.

Clips AI is for a different breed. It’s for:

- Tech-Savvy Content Creators: The YouTuber or podcaster who isn't afraid to get their hands dirty with some code to build a custom solution.

- Marketing Teams with Dev Resources: If you have a developer on your team, you could task them with setting up a script that your content marketers can then run easily. It's the best of both worlds: automation without every team member needing to be a coder.

- Agencies and Startups: Anyone looking to build a scalable, low-cost video repurposing pipeline. You can integrate this library into your own internal tools.

The Cost of Clips AI (Spoiler: It's Your Time)

I went looking for a pricing page on their site, just to be sure. I hit a 404 “Page not found” error. For now, it seems the library is completely free to use. The real “price” is the investment in technical expertise and the time required for setup and maintenance. You’re not paying with your credit card; you’re paying with your (or your developer’s) time and brainpower. For many, that's a bargain. For others, it's a deal-breaker.

Final Thoughts: A Powerful Tool for the Right Hands

Look, Clips AI is not a magic bullet. It’s a box of very powerful, very sharp tools. In the hands of a skilled craftsman (or coder, in this case), it can build something amazing and efficient. In the hands of someone who doesn't know what they're doing, it's just a confusing pile of parts.

I’m genuinely impressed with what Clips AI offers. The combination of transcript-based clip finding and dynamic speaker-focused reframing is a killer feature set for an open-source project. It represents a shift towards more accessible, customizable AI tools for marketers. It won't replace the big SaaS players for everyone, but for the right user, it's a powerful and—most importantly—free alternative that puts you in complete control of your content workflow.

Frequently Asked Questions about Clips AI

- What is Clips AI?

- Clips AI is a free, open-source Python library that automatically creates short, social media-friendly clips from long-form, narrative-based videos like podcasts, interviews, and webinars. It uses AI to analyze the video's transcript and find the best segments.

- Is Clips AI really free to use?

- Yes, the Python library itself is free to use. The “cost” is the technical knowledge required to install, configure, and run it. You're trading money for technical effort.

- Do I need to be a programmer to use Clips AI?

- Pretty much, yes. You need to be comfortable working with Python, installing packages using pip, and working from a command line. It is not a user-friendly application for non-technical users.

- What kind of videos work best with Clips AI?

- It's optimized for audio-centric and narrative-driven content. Think podcasts with video, interviews, speeches, sermons, and educational content where the spoken word is the main focus.

- What do I need to install to use it?

- According to the documentation, you'll need Python, the Clips AI library itself (`clipsai`), a transcription library like `WhisperX`, and the `ffmpeg` multimedia framework. You will also need a Hugging Face token for the automatic resizing feature.

- How does it compare to other tools like Opus Clip?

- Think of Clips AI as the engine, while tools like Opus Clip are the whole car. Opus Clip provides a simple web interface for a monthly fee. Clips AI is a free, powerful library that gives you more control and customization, but you have to build the car (or at least, the workflow) yourself.

Reference and Sources

- Clips AI Documentation: the primary source for this review.

- WhisperX GitHub Repository: https://github.com/m-bain/whisperx

- Pyannote on Hugging Face: https://huggingface.co/pyannote/speaker-diarization-3.1