The world of Large Language Models feels a bit like the wild west right now. One day you’re all in on OpenAI's GPT-4, the next day Anthropic's Claude 3 Opus drops a masterpiece, and then Google's Gemini family comes knocking. Every single one has its own API, its own quirks, its own way of doing things. For a developer, it's… a lot. You end up writing spaghetti code full of `if-else` statements just to switch between models. It’s a pain.

I’ve spent years neck-deep in the ever-shifting sands of SEO and digital trends, and I've seen this pattern before. A new tech explodes, creating chaos and fragmentation, and then, slowly, tools emerge to bring order. I’ve been keeping an eye out for the tool that would do this for the LLM space. And I think I might have found a serious contender in LiteLLM.

It popped up on my radar after I saw it was backed by Y Combinator and had some serious love on GitHub. So, I decided to do a deep dive. Is this the universal translator for LLMs we've all been secretly wishing for? Let's find out.

What Exactly is LiteLLM? The 30,000-Foot View

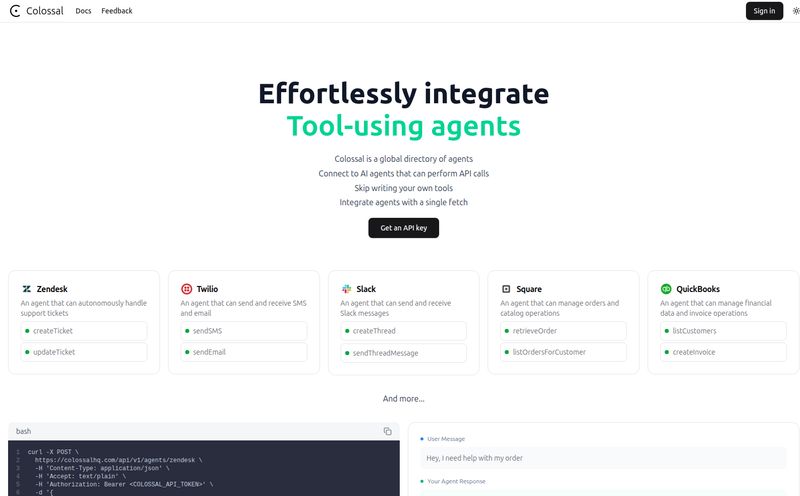

At its heart, LiteLLM is an LLM Gateway. Think of it like a universal power adapter you'd take on an international trip. You have one plug (your code) and it can connect to any outlet (any LLM), whether it’s in Europe, Asia, or North America. You don't need a different plug for every country. LiteLLM does the same thing for code. It provides a single, consistent way to call over 100 different LLMs.

This isn't just a toy for solo developers tinkering on a weekend project (though you could certainly use it for that). This is built for platform teams: the unsung heroes inside companies who have to provide stable, reliable AI infrastructure to their developers. It’s designed to take the messy, multi-provider reality of enterprise AI and make it manageable.


The Killer Features That Actually Matter

A tool can have a million features, but only a few usually solve the real, hair-pulling problems. From what I’ve seen, LiteLLM focuses on the stuff that gives engineering managers and budget holders nightmares.

A Unified API That Speaks "OpenAI"

This is the big one. The absolute game-changer. LiteLLM lets you call any supported model (Claude, models hosted on Bedrock, Cohere, you name it) using the same request and response format as the OpenAI API. Why is this such a big deal? Because it means developers can write their code once. They can switch the underlying model from `gpt-4-turbo` to `claude-3-opus` by changing a single string, not by rewriting a chunk of their application.

Imagine the engineering hours saved. No more context-switching, no more reading through five different API documentations. It standardizes the chaos. That alone is worth its weight in gold.
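To make the "change a single string" idea concrete, here is a minimal Python sketch. It assumes the `litellm` package is installed and that the relevant provider API keys are set in your environment. The helper names `build_request` and `ask` are my own, not part of LiteLLM, and the model strings are the ones used above; in practice a provider may expect a more specific identifier.

```python
def build_request(model: str, prompt: str) -> dict:
    """Build an OpenAI-style chat request; only `model` changes per provider."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }


def ask(model: str, prompt: str) -> str:
    """Send the request through LiteLLM and return the reply text.

    Assumes `pip install litellm` and provider keys (for example
    OPENAI_API_KEY or ANTHROPIC_API_KEY) in the environment.
    """
    import litellm  # imported lazily so the sketch loads without the package

    response = litellm.completion(**build_request(model, prompt))
    # The response object follows the OpenAI response shape.
    return response.choices[0].message.content
```

Calling `ask("gpt-4-turbo", "Say hello")` and `ask("claude-3-opus", "Say hello")` goes through the same code path. The only thing that differs between the two requests is the value of `model`.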

Finally, Sane Cost Management and Spend Tracking

I've seen CPC campaigns spiral out of control. A misplaced setting, an unexpected surge in traffic, and suddenly you're looking at a bill that makes your eyes water. AI spending is even scarier. It’s a black box for many organizations. Who's spending what? Which project is burning through the budget? LiteLLM shines a massive spotlight into that black box.

It allows you to accurately attribute cost per request to a specific user, team, or project key. You can see your spend broken down by model—how much did you spend on OpenAI versus Azure versus Anthropic this month? You can even track spending on your own fine-tuned models hosted on Hugging Face. For anyone responsible for a P&L, this isn't a nice-to-have; it's a must-have.
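If you want a feel for what per-request cost attribution involves, here is a rough Python sketch of the idea. This is not LiteLLM's implementation: the `Usage` record, the `spend_by` helper, and the prices in `PRICE_PER_1K_TOKENS` are all assumptions made up for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical USD prices per 1,000 tokens, for illustration only.
PRICE_PER_1K_TOKENS = {
    "gpt-4-turbo": {"input": 0.01, "output": 0.03},
    "claude-3-opus": {"input": 0.015, "output": 0.075},
}


@dataclass
class Usage:
    """One logged request, tagged with the team that made it."""
    team: str
    model: str
    input_tokens: int
    output_tokens: int


def request_cost(usage: Usage) -> float:
    """Cost of a single request in USD, based on the price table above."""
    price = PRICE_PER_1K_TOKENS[usage.model]
    total = usage.input_tokens * price["input"] + usage.output_tokens * price["output"]
    return total / 1000


def spend_by(records: list, field: str) -> dict:
    """Sum request costs grouped by a Usage attribute such as 'team' or 'model'."""
    totals = defaultdict(float)
    for record in records:
        totals[getattr(record, field)] += request_cost(record)
    return dict(totals)
```

Because every request carries both a team and a model, the same log answers both questions: `spend_by(records, "team")` for "who is spending?" and `spend_by(records, "model")` for "on what?".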

Smart Routing with Fallbacks and Budgets

What happens when the OpenAI API has a wobble? It happens. If your entire product relies on it, your product goes down too. LiteLLM has a brilliant solution: LLM Fallbacks. You can set it up to automatically reroute a failed request to a different provider. If GPT-4 is down, the request seamlessly goes to Claude 3, and your user never even knows there was a problem. That’s how you build resilient AI applications.
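The fallback pattern itself is simple enough to sketch in a few lines of Python. To be clear, this illustrates the general technique and is not LiteLLM's own code. `complete_with_fallbacks` and `AllProvidersFailed` are hypothetical names, and each provider is represented by a plain function that takes a prompt and returns text.

```python
from typing import Callable, Sequence, Tuple


class AllProvidersFailed(RuntimeError):
    """Raised when every provider in the chain has failed."""


def complete_with_fallbacks(
    prompt: str,
    providers: Sequence[Tuple[str, Callable[[str], str]]],
) -> Tuple[str, str]:
    """Try each (name, call) pair in order; return the first success.

    Returns the name of the provider that answered and its reply.
    """
    errors = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except Exception as exc:  # any failure moves on to the next provider
            errors.append(f"{name}: {exc}")
    raise AllProvidersFailed("; ".join(errors))
```

The order of the list is the priority order. If the first entry raises (a timeout, a 5xx, a rate limit), the caller simply gets the answer from the next one and never sees the error.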

And to prevent that rogue script from causing financial ruin, you can set hard budgets and rate limits. It's the set of guardrails that lets you innovate without fear. It’s the difference between a controlled experiment and a costly mistake.
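Here is a small Python sketch of what a hard budget plus a rate limit can look like in principle. It is an assumption-laden illustration, not how LiteLLM enforces limits: the `Guardrail` class, its parameters, and the 60-second sliding window are all my own choices.

```python
import time
from collections import deque


class BudgetExceeded(Exception):
    """The key has spent its whole budget."""


class RateLimited(Exception):
    """Too many requests in the last 60 seconds."""


class Guardrail:
    """Hypothetical per-key guard: a hard USD budget and a requests-per-minute cap."""

    def __init__(self, max_budget_usd, max_requests_per_minute, clock=time.monotonic):
        self.max_budget_usd = max_budget_usd
        self.max_rpm = max_requests_per_minute
        self.clock = clock  # injectable so the window can be tested without sleeping
        self.spent = 0.0
        self._timestamps = deque()

    def check(self):
        """Call before each request; raises if the request must be blocked."""
        now = self.clock()
        # Forget requests that have left the 60-second window.
        while self._timestamps and now - self._timestamps[0] >= 60:
            self._timestamps.popleft()
        if self.spent >= self.max_budget_usd:
            raise BudgetExceeded(f"spent ${self.spent:.2f} of ${self.max_budget_usd:.2f}")
        if len(self._timestamps) >= self.max_rpm:
            raise RateLimited(f"more than {self.max_rpm} requests in the last minute")
        self._timestamps.append(now)

    def record_cost(self, cost_usd):
        """Call after each request with its actual cost."""
        self.spent += cost_usd
```

The point of checking before the call rather than after is that a runaway loop gets stopped at the gate, instead of showing up on next month's invoice.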

Who is This Really For? The Ideal User Profile

Look, if you're a student just playing with a single free API, LiteLLM is probably overkill. But if you're part of a team or an organization building real products with AI, this is aimed squarely at you. Startups, scale-ups, and massive enterprises are the sweet spot.

But don't just take my word for it. The social proof here is pretty compelling.

"LiteLLM has let our team provide the latest LLM models to our developers while being provider agnostic... [it] has saved us months of work."

- David Leon, AI Infra @ Netflix

"LiteLLM is the simplest way to add support for multiple LLM models."

- Mark Kotliar, Head of AI/ML @ Replit

When teams at Netflix and Replit are saying it saved them months of work, you tend to listen. These are companies operating at a scale most of us can only dream of, and they're using this to manage their complex AI needs.

The Elephant in the Room: What's the Catch?

No tool is perfect, right? So, what are the potential downsides? The website itself doesn't list any cons, but based on my experience with similar gateway tools, I can speculate.

First, you're introducing another layer into your stack. This could, potentially, add a millisecond or two of latency. For most applications, this is completely negligible, but for high-frequency trading or real-time bidding, it might be a consideration. Second, there’s the dependency issue. By routing everything through LiteLLM, you become reliant on it. The good news? It's open-source with a very active GitHub (we're talking thousands of stars and frequent commits), so you're not locked into a proprietary black box. You can see the code and even contribute.

As for pricing, this is where I hit a small snag. The homepage has a pricing link, but when I clicked it, I got a 404 error. It happens to the best of us! Based on its open-source nature, you can definitely self-host it for free. They almost certainly have a paid Enterprise or cloud-hosted version for teams that don't want the hassle of managing the infrastructure themselves.

My Final Verdict: Is LiteLLM Worth Your Time?

Absolutely, yes. In my opinion, LiteLLM isn’t just a helpful utility; it's a strategic piece of infrastructure for any company that's serious about AI. It addresses the three biggest headaches in the current LLM ecosystem: developer friction, cost control, and reliability.

It turns the chaotic, multi-provider landscape into a simple, standardized resource. It gives you the freedom to experiment with the best model for the job without being penalized with a mountain of custom integration work. It’s a tool for building, but more importantly, it's a tool for scaling.

Frequently Asked Questions about LiteLLM

Is LiteLLM a free tool?

Yes, LiteLLM is an open-source project. You can access the code on GitHub, self-host it, and use it for free. They also appear to have an Enterprise offering for larger companies that would likely include dedicated support and managed hosting.

What language models does LiteLLM support?

LiteLLM supports a huge range of over 100 LLMs. This includes all the major players like OpenAI (GPT-4, etc.), Anthropic (Claude), Google (Gemini), Mistral, Cohere, and providers like Azure, Bedrock, and Vertex AI. It also supports open-source models hosted on platforms like Hugging Face.

How does LiteLLM improve the reliability of AI apps?

The key feature here is "LLM Fallbacks." You can configure a primary model (e.g., OpenAI) and a secondary model (e.g., Anthropic). If the primary API fails or is too slow, LiteLLM automatically retries the request with the fallback model, making your application much more robust and resilient to provider outages.

Can I use LiteLLM to track costs for my own fine-tuned models?

Yes. The platform explicitly mentions the ability to get spend data for fine-tuned or Hugging Face (HF) models. This allows you to maintain consistent cost tracking across both commercial APIs and your own custom models.

Is LiteLLM only for big companies like Netflix?

While it's incredibly valuable for large enterprises, any team or even a small startup that uses more than one LLM provider can benefit immensely. The standardization and cost controls it offers can save significant time and money, regardless of company size.

Wrapping It All Up

The AI space isn't going to get any simpler. We're going to see more models, more providers, and more specialization. Trying to manage that fragmentation manually is a recipe for disaster. Tools like LiteLLM are no longer just a convenience; they’re becoming the essential plumbing that makes this new technological era possible. It’s a smart solution to a messy problem, and in my book, that’s always a win.