If you've spent any time building an application that talks to an LLM like GPT-4 or Gemini, you know the drill. You get this brilliant idea, you stitch together a slick frontend, and then you hit the wall. The big, scary wall of... the backend. Specifically, how in the world do you let your users interact with your fancy AI model without just plastering your secret API keys all over your client-side code? That’s a rookie mistake that’ll get your keys stolen and your credit card maxed out faster than you can say “prompt injection.”

For years, the answer was always the same: build a server. Set up an endpoint. Manage authentication. Handle proxying requests. It's a whole song and dance, and frankly, it’s a drag. It’s the boilerplate that kills the creative buzz. I’ve been there more times than I can count, and I’ve always thought, “There has to be a better way.”

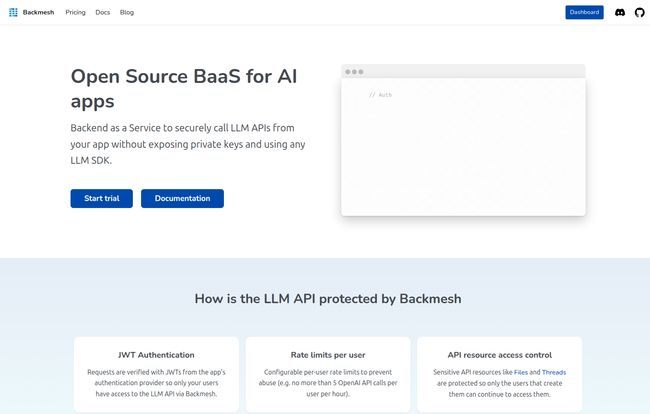

Well, I think I might have just stumbled upon it. It's called Backmesh, and it’s been making some quiet waves. It bills itself as an “Open Source BaaS for AI apps,” which is a fancy way of saying it wants to be the middleman that handles all that annoying backend security stuff for you.

So, What is Backmesh, Really?

Think of Backmesh as a highly specialized bouncer for your app’s connection to Large Language Models. Your frontend app doesn't talk directly to OpenAI or Anthropic. No way. Instead, it talks to Backmesh. Backmesh, standing at the door, checks your user's ID (authentication), makes sure they aren't causing trouble (rate limiting), and then, and only then, goes and gets the data from the LLM for them. Crucially, your precious, secret API keys stay safely tucked away with the bouncer, never to be seen by the public.

It’s a Backend-as-a-Service (BaaS), a category made famous by tools like Firebase and Supabase. But while those are general-purpose tools, Backmesh is purpose-built for one thing: making AI app development less of a pain. It's not trying to be your entire backend; it's trying to be the best possible API gatekeeper for AI.


The Core Features That Actually Matter

A lot of platforms throw a kitchen sink of features at you. I’m more interested in the ones that solve real problems. Here’s what caught my eye with Backmesh.

Fort Knox for Your API Keys

This is the main event. Because Backmesh acts as a proxy, your frontend never needs to hold a private LLM key. Your app makes a request to your Backmesh instance, and Backmesh forwards it to the appropriate LLM with the correct private key. It’s simple, but it’s the foundational piece that makes everything else possible. No more waking up in a cold sweat wondering if you accidentally committed a .env file to a public GitHub repo.
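To make the key-swapping idea concrete, here's a minimal sketch of what any proxy of this kind has to do before forwarding a request. This is not Backmesh's actual code; the function name, the header layout, and the key value are all my own assumptions for illustration.

```python
# Hypothetical sketch of the key-swapping step in an LLM proxy.
# None of these names come from Backmesh; they are illustrative only.


def build_upstream_headers(client_headers: dict, private_key: str) -> dict:
    """Return headers for the LLM provider, replacing the user's token with the private key."""
    # Drop the client's Authorization header: it carries the user's JWT, not an API key.
    upstream = {k: v for k, v in client_headers.items() if k.lower() != "authorization"}
    # Attach the secret key, which lives only on the proxy and never reaches the browser.
    upstream["Authorization"] = f"Bearer {private_key}"
    return upstream


client = {"Authorization": "Bearer user.jwt.token", "Content-Type": "application/json"}
upstream = build_upstream_headers(client, private_key="sk-secret-held-by-proxy")
print(upstream)
```

The point is simply that the user's token goes in one side and the private key only ever appears on the other.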

Who Goes There? JWT Authentication

Backmesh doesn't reinvent the wheel for user management. Smart. It hooks into your existing authentication provider using JWTs (JSON Web Tokens). So if your users already log in via Supabase, Firebase Auth, or any other service that spits out a JWT, you're pretty much good to go. You just configure Backmesh to verify those tokens. It ensures that only your legitimate, signed-in users can make calls to the LLM. It’s a clean way to protect your resources.
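If you've never looked at what "verifying a JWT" actually involves, here's a rough, standard-library-only sketch. I'm assuming an HS256 (shared secret) token to keep it short; providers like Firebase typically sign with public/private key pairs instead, and in real code you'd use a maintained JWT library. The function names and the demo secret are made up for this example and say nothing about how Backmesh implements the check.

```python
import base64
import hashlib
import hmac
import json
import time


def b64url_decode(segment: str) -> bytes:
    # JWT segments omit base64 padding, so restore it before decoding.
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def b64url_encode(raw: bytes) -> str:
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode()


def sign_hs256(payload: dict, secret: bytes) -> str:
    """Create a token, only so this example has something to verify."""
    header = b64url_encode(json.dumps({"alg": "HS256", "typ": "JWT"}).encode())
    body = b64url_encode(json.dumps(payload).encode())
    sig = hmac.new(secret, f"{header}.{body}".encode(), hashlib.sha256).digest()
    return f"{header}.{body}.{b64url_encode(sig)}"


def verify_hs256(token: str, secret: bytes, now=None) -> dict:
    """Return the claims if signature and expiry check out, else raise ValueError."""
    parts = token.split(".")
    if len(parts) != 3:
        raise ValueError("malformed token")
    header_b64, body_b64, sig_b64 = parts
    header = json.loads(b64url_decode(header_b64))
    if header.get("alg") != "HS256":
        raise ValueError("unexpected algorithm")
    expected = hmac.new(secret, f"{header_b64}.{body_b64}".encode(), hashlib.sha256).digest()
    # Constant-time comparison avoids leaking how much of the signature matched.
    if not hmac.compare_digest(expected, b64url_decode(sig_b64)):
        raise ValueError("bad signature")
    claims = json.loads(b64url_decode(body_b64))
    current = time.time() if now is None else now
    if claims.get("exp", 0) <= current:
        raise ValueError("token expired")
    return claims


secret = b"demo-secret"  # assumption: a shared secret known to the auth provider and the proxy
token = sign_hs256({"sub": "user-123", "exp": 2000}, secret)
print(verify_hs256(token, secret, now=1000)["sub"])
```

A token signed with a different secret, or one whose `exp` has passed, raises `ValueError` instead of returning claims.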

Putting a Cap on It: Rate Limiting and Access Control

This is where things get interesting for anyone who's ever worried about a runaway script or a malicious user racking up a massive OpenAI bill. Backmesh lets you set configurable rate limits per user. Want to make sure no single user can send more than 50 requests an hour? Done. This is huge for managing costs and ensuring fair usage, especially on a free or trial tier of your own product. You can also control which resources users can access, adding another layer of security. Maybe only paid users can access your GPT-4o-powered feature, while free users are limited to a less expensive model. That kind of granular control is gold.
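For a feel of what per-user limits and plan-based access look like in code, here's a small sketch of a fixed-window limiter using the 50-requests-an-hour example from above. To be clear, this is my own illustration and not how Backmesh is implemented; the class, the plan names, and the `small-model` placeholder are all assumptions.

```python
from collections import defaultdict


class FixedWindowLimiter:
    """Allow at most max_requests per user in each fixed time window."""

    def __init__(self, max_requests: int, window_seconds: int):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        # Maps (user_id, window index) -> requests seen in that window.
        # A real service would also expire old windows; this sketch does not.
        self._counts = defaultdict(int)

    def allow(self, user_id: str, now: float) -> bool:
        window = int(now // self.window_seconds)
        key = (user_id, window)
        if self._counts[key] >= self.max_requests:
            return False
        self._counts[key] += 1
        return True


# Hypothetical plan-to-model allowlist; "small-model" is a placeholder name.
ALLOWED_MODELS = {"free": {"small-model"}, "paid": {"small-model", "gpt-4o"}}


def can_use_model(plan: str, model: str) -> bool:
    return model in ALLOWED_MODELS.get(plan, set())


limiter = FixedWindowLimiter(max_requests=50, window_seconds=3600)
results = [limiter.allow("alice", now=0) for _ in range(51)]
print(results.count(True), results[-1])
print(can_use_model("free", "gpt-4o"), can_use_model("paid", "gpt-4o"))
```

A fixed window is the simplest scheme to reason about. It does allow a burst right at a window boundary, which is why production limiters often use sliding windows or token buckets instead.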

Knowing Your Users: The Magic of LLM Analytics

Okay, this is the feature that made me sit up straight. Backmesh includes LLM user analytics without needing to install some other complicated product analytics package. You can see which users are making requests, how often, and identify patterns. This is more than just a vanity metric. Are certain users getting more value than others? Is a new feature seeing adoption? Are API costs for a specific user segment spiraling out of control? This data helps you move from guessing to knowing, which is how you build a better product (and keep your accountant happy).
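To show the kind of question this data can answer, here's a tiny sketch that rolls a request log up per user. The log format and the numbers are invented sample data, and using token counts as a stand-in for cost is my own assumption; Backmesh's dashboard may expose something quite different.

```python
from collections import Counter

# Made-up sample log entries: (user_id, model, tokens_used).
request_log = [
    ("alice", "gpt-4o", 1200),
    ("bob", "gpt-4o", 300),
    ("alice", "gpt-4o", 800),
    ("carol", "gpt-4o", 150),
    ("alice", "gpt-4o", 500),
]

# How often is each user calling the LLM?
requests_per_user = Counter(user for user, _, _ in request_log)

# How many tokens (a rough proxy for cost) is each user consuming?
tokens_per_user = Counter()
for user, _, tokens in request_log:
    tokens_per_user[user] += tokens

top_user, top_requests = requests_per_user.most_common(1)[0]
print(top_user, top_requests, tokens_per_user[top_user])
```

Even a rollup this crude tells you who your heaviest user is and roughly what they cost you.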

The Open Source Advantage and Self-Hosting

One of the best things about Backmesh is that it's open source. This isn’t just a philosophical win; it has practical benefits. You can see exactly how it works under the hood. There's no black box. If you're a bit of a control freak like me, that's incredibly reassuring. And because open source projects tend to gather a community on platforms like GitHub and Discord, you're not screaming into the void when you hit a snag.

The biggest perk, of course, is the ability to self-host. If you don't want to use their managed service, you can just grab the code and run it on your own infrastructure. This gives you maximum control and can be more cost-effective at scale. Now, the flip side is that this requires some technical chops. You'll need to know your way around servers and deployments. It’s not a one-click install for your grandma, but for a seasoned developer, it’s a powerful option to have.

Let's Talk Money: Backmesh Pricing

So, what does this all cost? Their pricing model is refreshingly straightforward, which I appreciate. You can start with a 15-day free trial to kick the tires.

| Plan | Price | Key Features |
|---|---|---|
| Starter | $10 / month | 500k included requests, 50k included Monthly Active Users (MAUs), unlimited users and gatekeepers. |
| Pro | Contact for Pricing | 2M included requests, 100k included MAUs, with overage fees ($1 per 1M requests, $0.003 per MAU). |
| Enterprise | Contact for Pricing | Unlimited everything. For the big players. |

Honestly, that $10 Starter plan seems like an absolute steal for indie developers or small startups. 500,000 requests is a very generous starting point. You can build and launch a real product on that. The dreaded “Contact us for pricing” on the higher tiers is a classic enterprise sales move, but for most people reading this, the Starter plan is likely the perfect fit.
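If you do outgrow Starter, the Pro overage fees in the table are easy to estimate. Here's a quick back-of-the-envelope sketch using only the listed numbers. It leaves out the Pro base price (which isn't published), and it assumes overage is billed proportionally rather than in whole blocks of a million requests, which is my guess and not something the pricing table states.

```python
# Figures taken from the Pro row of the pricing table.
PRO_INCLUDED_REQUESTS = 2_000_000
PRO_INCLUDED_MAUS = 100_000
OVERAGE_PER_MILLION_REQUESTS = 1.00  # dollars
OVERAGE_PER_MAU = 0.003  # dollars


def pro_overage(requests: int, maus: int) -> float:
    """Estimate monthly overage in dollars, excluding the unpublished base price."""
    extra_requests = max(0, requests - PRO_INCLUDED_REQUESTS)
    extra_maus = max(0, maus - PRO_INCLUDED_MAUS)
    # Assumption: overage is prorated linearly, not rounded up to whole millions.
    total = extra_requests / 1_000_000 * OVERAGE_PER_MILLION_REQUESTS + extra_maus * OVERAGE_PER_MAU
    return round(total, 2)


# Example: 5M requests and 120k MAUs in a month.
print(pro_overage(5_000_000, 120_000))
```

In that example the extra 3M requests cost about $3, while the extra 20k MAUs cost about $60, so under these assumptions active users dominate the overage, not request volume.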

My Honest Take: Who Is This For?

I’ve seen a lot of developer tools come and go. Many are solutions in search of a problem. Backmesh isn't one of them. It tackles a very real, very annoying issue head-on.

This is a fantastic tool for:

- Indie hackers and solopreneurs building AI micro-SaaS products.

- Small to medium-sized teams who want to add AI features to an existing app without spinning up a whole new backend team.

- Frontend developers who want to build full-featured AI apps without getting bogged down in server-side code.

It might not be the best fit for:

- Massive enterprises that already have deeply entrenched, custom-built API gateway and security infrastructure.

- Absolute beginners who have never worked with the concept of authentication before, as there's a slight learning curve with JWTs.

The main downside, if you can call it that, is that it’s another piece of your stack to manage. And if you're integrating with a less-common auth provider, you might have a bit of configuration work to do. But in my opinion, the security and cost-control benefits far outweigh that minor setup cost.

Frequently Asked Questions

Can I use Backmesh with any LLM provider?

Yes, that's the idea. It's designed to be a universal gatekeeper. The documentation shows examples with OpenAI, Anthropic, and Gemini, but it should work with any LLM that has a REST API.

Is Backmesh really free to self-host?

The software itself is free because it's open source. You'll still have to pay for the server or cloud service you run it on, of course. There’s no free lunch in cloud hosting!

How difficult is it to set up?

The managed cloud version is quite straightforward, especially if you're already using a common JWT provider. Self-hosting requires more technical knowledge of things like Docker and server administration.

What is a 'BaaS' again?

It stands for Backend-as-a-Service. It’s a platform that handles server-side logic and functionality for you, so you can focus on building the front end. Think of it as outsourcing your backend headaches.

Can I really integrate my existing user base?

Yes, as long as your current system uses JWT for authentication, you can wire it up to Backmesh to secure your API calls for your existing users.

So, What's the Verdict?

I'm genuinely excited about tools like Backmesh. We're in the middle of an explosion in AI development, but a lot of the tooling still feels clunky and stuck in the past. We need more platforms that streamline the boring stuff so we can focus on building cool, innovative products.

Backmesh feels like a step in the right direction. It's a focused, well-designed solution to a specific, painful problem. It's not trying to do everything, and that's its strength. By providing a secure, manageable, and insightful gateway to LLMs, it empowers a whole new wave of developers to build the next generation of AI applications. And for just 10 bucks a month to get started? That's a pretty compelling offer.