We’re all riding this insane AI wave. For SEOs, content creators, and developers, it's been a whirlwind of new tools, APIs, and workflows. I’ve personally spent more on API credits over the past year than I have on good coffee, and that’s saying something. There's always this nagging feeling in the back of my mind, though. This reliance on Big Tech's cloud. The privacy questions. The ever-changing pricing models. What happens when the service you rely on goes down, or they decide to triple the cost overnight?

It’s why the local LLM movement has me so excited. The idea of running a powerful language model right here, on my own machine, offline? That’s the dream. Total privacy. No per-token fees. Just pure, unadulterated AI power. But getting it all set up... well, that can be a journey into a dark forest of command lines, dependencies, and cryptic error messages. I've been there.

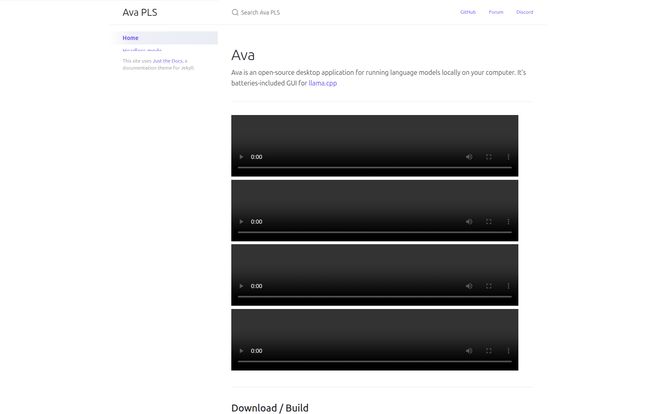

And that’s where a neat little tool called Ava PLS caught my eye. It promises to be the friendly face for a notoriously powerful, but tricky, piece of kit.

So, What Exactly is This Ava PLS Thing?

In the simplest terms, Ava PLS is a free, open-source desktop app that puts a graphical user interface (GUI) on top of llama.cpp. If you're not in the loop, llama.cpp is a legendary C/C++ inference engine. It was originally written to run Meta's LLaMA models and can now run many other open models too. It's the engine that allows regular folks with decent computers (especially Macs with Apple Silicon) to run some seriously impressive language models without needing a data center in their basement. It's incredibly efficient.

But it's also a command-line tool. For many, that's a non-starter. Ava PLS changes that. It’s like having a high-performance V8 engine sitting on your garage floor – all the power is there, but it's a mess of wires and requires expert knowledge to even start. Ava PLS is the beautiful, custom-built hot rod chassis you drop it into, complete with a dashboard, a steering wheel, and a simple 'on' button. No more typing long, complicated commands into a black terminal window. Just point, click, and chat with your local AI.


Why Running AI on Your Own Machine is a Game Changer

Okay, so it makes a command-line tool easier to use. Cool. But why should you care? Oh, let me count the ways. I've become a huge advocate for local-first tech, and this is a prime example of why.

Your Data Stays YOURS. Period.

This is the big one for me. When you use a cloud-based AI service, you're sending your data (your prompts, your documents, your sensitive business ideas) to a third-party server. You're trusting that they're handling it securely and not using it to train their next model. With a local setup like Ava PLS, the model runs entirely on your computer, so your prompts and documents never have to leave your machine. You can work with confidential information without wondering what happens to it on someone else's server. For anyone working with proprietary data, this isn’t just a feature; it's a necessity.

Cutting the Cord (and the Bill)

API calls add up. Fast. That experimental script you're running, or that long-form content you're generating… it all costs money. With a local model, the main cost is up front: the hardware you're running it on. After that, apart from electricity, you can use it as much as you want, 24/7, with no per-token fees. No subscriptions, no usage tiers. Just pure, unmetered access. Plus, once you've downloaded the app and a model, you don’t even need an internet connection to use it. Working from a cabin in the woods or on a spotty Wi-Fi connection? No problem.
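Whether going local actually saves you money comes down to simple arithmetic. Here's a minimal Python sketch of the break-even point. Every figure in it (the hardware price, the monthly API spend, the extra electricity) is a hypothetical placeholder, so swap in your own numbers.

```python
def break_even_months(hardware_cost: float, monthly_api_spend: float,
                      monthly_power_cost: float = 0.0) -> float:
    """Months until a one-off hardware purchase pays for itself.

    Compares the API bill you'd stop paying against the extra electricity
    the local machine uses. All inputs share one (hypothetical) currency.
    """
    monthly_savings = monthly_api_spend - monthly_power_cost
    if monthly_savings <= 0:
        # Running locally never pays off at these numbers.
        return float("inf")
    return hardware_cost / monthly_savings


if __name__ == "__main__":
    # Hypothetical figures: an $1,800 machine, $150/month in API credits,
    # and about $10/month of extra electricity for local inference.
    months = break_even_months(1800, 150, 10)
    print(f"Break-even after about {months:.1f} months")  # prints 12.9
```

This assumes you're buying the hardware just for local AI. If you already own a capable machine, the break-even point is effectively day one.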

How to Get Started with Ava PLS

Getting your hands on Ava PLS is straightforward, but it comes with a small fork in the road. According to their GitHub page, you have two options:

- Download the Artifacts: The easiest way is to grab the pre-built application directly from their GitHub Actions page. You'll need to find the latest successful run and download the build artifact for your operating system.

- Build it Yourself: For the more technically inclined, you can build it from the source code. You'll need to have Zig installed, but then it's a matter of running a command like `zig build run`. They even have a headless mode for running it without the GUI.
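If you'd rather script the build, here's a minimal Python sketch that checks Zig is on your PATH and then runs `zig build run` from a local checkout. It assumes you've already cloned the repository. `build_and_run` is my own hypothetical helper, not part of the project. The project may also expect a specific Zig version, so check its README before building.

```python
import shutil
import subprocess
from pathlib import Path


def build_and_run(repo_dir: str) -> int:
    """Run `zig build run` inside an already-cloned source checkout.

    Hypothetical helper: raises if Zig or the build script is missing,
    otherwise returns Zig's exit code.
    """
    zig = shutil.which("zig")  # full path to the zig binary, or None
    if zig is None:
        raise RuntimeError("Zig not found on PATH; install it first")
    checkout = Path(repo_dir)
    # `zig build` needs a build.zig file at the project root.
    if not (checkout / "build.zig").is_file():
        raise FileNotFoundError(f"no build.zig in {checkout}")
    # The same build command mentioned above, run from the checkout.
    result = subprocess.run([zig, "build", "run"], cwd=checkout)
    return result.returncode
```

Call it with the path to your checkout. The two up-front checks turn the most common failures into clear error messages instead of a wall of build output.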

This is probably the biggest hurdle for a non-developer. It's not a simple one-click `.exe` or `.dmg` download from a fancy website. When I was poking around, I even hit a classic GitHub Pages 404 error on one of the links. And you know what? It felt… authentic. This isn't some mega-corp project with a 99.999% uptime SLA. It's a real tool built by real people, and that has a certain charm.

A Quick Look Under the Hood

For my fellow nerds out there, the tech stack is pretty interesting. It's built with Zig, C++ (for llama.cpp), SQLite, Preact, Preact Signals, and Twind. Zig is a young systems language whose build system can also compile C and C++ code, which makes it a natural fit for wrapping a C++ project like llama.cpp. Preact is a lightweight (roughly 3 kB) alternative to React, which keeps the front end snappy and small. It's a solid, no-fluff stack built for performance.

The Good, The Bad, and The... Local

No tool is perfect, right? Let's break down the realities of using Ava PLS, based on what we know.

The Upsides I Really Like

The benefits are clear and compelling. First, it’s open-source under an MIT license. That means it’s free to use, inspect, and modify. I love that transparency. The ability to run offline is a huge plus, offering both privacy and convenience. And the core promise—providing an easy-to-use GUI for a powerful backend—is its main selling point. It democratizes access to powerful tech. It even supports a headless mode, which is great for automation and server-side tasks for you power users out there.

Potential Hiccups to Expect

The main drawback is the setup. You have to be comfortable either downloading files from GitHub Actions (which isn't as scary as it sounds) or potentially building the application from its source code. This will definitely be a barrier for some. Another major consideration is that the performance is entirely dependent on your local computer's resources. If you have an old laptop, you're going to have a bad time. You need a machine with a decent processor, plenty of RAM, and preferably a good GPU to get smooth, fast responses from larger models. This isn't a fault of Ava PLS itself, but a reality of running local AI.
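Whether a model will fit on your machine is mostly arithmetic: parameter count times bits per weight. Here's a minimal Python sketch, assuming the weights dominate memory use. The bits-per-weight values are for a few of llama.cpp's weight formats. The 2 GB headroom for the context cache, the app, and the OS is my own hypothetical allowance, not a figure from Ava PLS or llama.cpp.

```python
# Approximate bits per weight for a few llama.cpp weight formats.
# Q4_0 and Q8_0 store blocks of 32 weights plus one 16-bit scale.
BITS_PER_WEIGHT = {"F16": 16.0, "Q8_0": 8.5, "Q4_0": 4.5}


def estimate_weights_gb(n_params: float, fmt: str) -> float:
    """Rough size of the model weights in gigabytes (10**9 bytes)."""
    return n_params * BITS_PER_WEIGHT[fmt] / 8 / 1e9


def fits_in_memory(n_params: float, fmt: str, ram_gb: float,
                   headroom_gb: float = 2.0) -> bool:
    """True if the weights plus a hypothetical headroom allowance fit.

    headroom_gb is an assumption covering the context (KV) cache, the app
    and the operating system; real needs vary with context length.
    """
    return estimate_weights_gb(n_params, fmt) + headroom_gb <= ram_gb


if __name__ == "__main__":
    for fmt in ("F16", "Q8_0", "Q4_0"):
        size = estimate_weights_gb(7e9, fmt)
        print(f"7B model, {fmt}: ~{size:.1f} GB, "
              f"fits in 8 GB: {fits_in_memory(7e9, fmt, 8)}")
```

On a hypothetical 8 GB laptop, only the 4-bit 7B model (about 3.9 GB of weights) clears the bar. That's why quantized models are the usual starting point for modest hardware.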

So, Who is Ava PLS Actually For?

I see a few key groups getting a ton of value from this. Developers who want a quick way to test models without spinning up a complex environment. AI hobbyists and tinkerers who love to experiment. Privacy advocates who want to cut ties with big cloud providers. And any SEO or content creator who is technically curious and wants to build custom, private workflows without breaking the bank on API calls.

If you're someone who just wants a polished app that works out of the box with zero effort, this might not be for you just yet. But if you're willing to do a tiny bit of setup, the rewards are immense.

Frequently Asked Questions

- Is Ava PLS free to use?

- Yes, absolutely. It's an open-source project released under the MIT License, which is very permissive. You can use it for personal and even commercial projects.

- What is llama.cpp and why does it matter?

- Think of llama.cpp as the 'engine'. It's a highly optimized program that makes it possible to run large language models on consumer-grade hardware (like your Mac or PC) instead of requiring massive, expensive servers.

- Do I need a really powerful computer to run this?

- It helps. A lot. The performance of the AI models will depend directly on your computer's CPU, the amount of RAM you have, and your GPU. A newer computer, especially one with a good graphics card or an Apple Silicon chip, will provide a much better experience.

- Is it difficult to install Ava PLS?

- It can be if you're not used to tools like GitHub. The easiest path is downloading the completed 'build artifact' from the GitHub Actions tab. If you're comfortable with the command line, building from source is also an option. It's not a one-click installer, so expect a little bit of a learning curve.

- Where can I find support if I run into trouble?

- The project's GitHub page is the best place to start. The homepage image also mentions a Forum and a Discord server, which are likely the best places for community-based support and discussion.

My Final Take on Ava PLS

I'm genuinely excited about projects like Ava PLS. They represent a crucial counter-movement to the centralization of AI. It's about empowerment, privacy, and ownership. While it's still a bit rough around the edges—more of a tool for the enthusiast than the absolute beginner—it solves a real problem. It lowers the barrier to entry for running private, local AI.

If you've been curious about local LLMs but have been intimidated by the command line, this is your sign. Give Ava PLS a look. It might just be the tool that finally convinces you to bring your AI workflow home, right where it belongs: on your own machine.

Reference and Sources

- Ava PLS GitHub Repository: https://github.com/cztomsik/ava

- llama.cpp Official GitHub Repository: https://github.com/ggerganov/llama.cpp