The last couple of years in the tech world have felt like a wild, chaotic gold rush. Every week there’s a new Large Language Model (LLM) that promises to change everything. You’ve got your OpenAI keys, your Anthropic credentials, a handful from Google, maybe you're even dabbling with some open-source models from Hugging Face. It’s chaos. Pure, unadulterated API key chaos.

And if you’re a developer or a product manager trying to build on top of this new generative AI wave, you know exactly what I'm talking about. Juggling different endpoints, tracking costs across ten different dashboards, ensuring security... it’s a full-time job. It's like trying to conduct an orchestra where every musician is playing a different tune in a different language. A total headache.

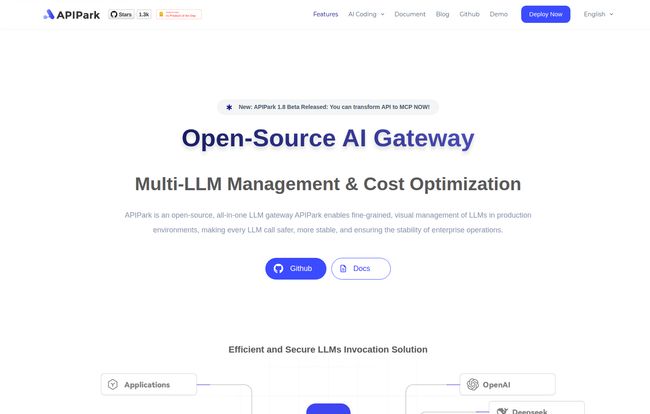

This is precisely the problem that AI Gateways are built to solve. And today, I want to talk about one that's been making some noise: APIPark.

So, What Exactly is an AI Gateway?

Before we get into the nitty-gritty of APIPark, let's level-set. Think of an AI Gateway as a master traffic controller for all your AI services. Instead of your applications having to connect directly to OpenAI, then to Cohere, then to some other service, they all talk to one single point: the gateway.

This gateway then intelligently routes the requests to the right model, handles authentication, logs everything, enforces security rules, and can even do clever things like cache results to save you money. It turns your tangled mess of API connections into a clean, manageable, single stream. It’s the sanity layer on top of the AI madness. For anyone in the DevOps or MLOps space, this idea probably sounds familiar: it borrows from battle-tested API gateways like Kong or Tyk, but adds a specific, much-needed AI focus.
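To make that concrete, here's a minimal Python sketch of the request path: one entry point that authenticates the caller, routes by model alias, and logs the call. The backends are stubs standing in for real provider SDKs, and names like `Gateway`, `chat-default`, and `team-a-key` are hypothetical. This illustrates the pattern, not how APIPark is implemented internally.

```python
from dataclasses import dataclass, field
from typing import Callable

# A backend turns a prompt into a completion. Real ones would call OpenAI,
# Anthropic, etc.; these are hypothetical stubs so the sketch runs offline.
Backend = Callable[[str], str]


@dataclass
class Gateway:
    api_keys: set[str]                 # keys the gateway accepts
    routes: dict[str, Backend]         # model alias -> backend
    log: list[dict] = field(default_factory=list)

    def handle(self, api_key: str, model: str, prompt: str) -> str:
        # 1. Authentication happens once, at the gateway.
        if api_key not in self.api_keys:
            raise PermissionError("unknown API key")
        # 2. Routing: the app names an alias, the gateway picks the backend.
        backend = self.routes.get(model)
        if backend is None:
            raise KeyError(f"no route for model {model!r}")
        reply = backend(prompt)
        # 3. Central logging of who called what.
        self.log.append({"key": api_key, "model": model, "prompt_chars": len(prompt)})
        return reply


gw = Gateway(
    api_keys={"team-a-key"},
    routes={
        "chat-default": lambda p: f"[stub-openai] {p}",
        "chat-long-context": lambda p: f"[stub-anthropic] {p}",
    },
)
print(gw.handle("team-a-key", "chat-default", "Hello"))  # [stub-openai] Hello
```

Every app talks to `gw.handle`, so adding a provider or rotating a key touches the gateway's configuration, not each application.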


Enter APIPark: The Open-Source Contender

APIPark steps onto the scene as an open-source AI Gateway and Developer Portal. The "open-source" part is what first caught my eye. In a world of proprietary, locked-down platforms, having an open-source option is a huge breath of fresh air. It means transparency, community, and the ability to get under the hood and customize things yourself.

It bills itself as a one-stop shop to manage, integrate, and deploy AI services. And looking at its backers—we’re talking big names like Sequoia—it's clear this isn't just a weekend project. This is a serious platform with serious investment behind it.

The Features That Actually Matter

A feature list is just a list. What I care about is what problems it solves. So let's break down APIPark's capabilities by the pain points they address.

Taming the LLM Zoo: Multi-Model Management

The platform claims to connect with over 200 LLMs. This is the core of its appeal. With a Unified API Signature, your developers can write code once and APIPark handles the translation to whatever model is on the backend. Want to switch from GPT-4 to Claude 3 Opus for a specific task? You don’t need to rewrite your application code. You just flip a switch in the gateway.
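Here's roughly what a unified signature means in practice, as a hedged sketch. The app always builds the same request shape, and per-provider adapters translate it into something close to the public OpenAI Chat Completions and Anthropic Messages request bodies (system prompt inside `messages` versus a top-level `system` field plus a required `max_tokens`). The `ROUTE_CONFIG` table, the task name, and the model names are illustrative placeholders, not APIPark's configuration format.

```python
from typing import Any


def to_openai(req: dict[str, Any]) -> dict[str, Any]:
    # Chat Completions style: the system prompt travels inside `messages`.
    msgs = [{"role": "system", "content": req["system"]}] if req.get("system") else []
    return {"model": req["model"], "messages": msgs + req["messages"]}


def to_anthropic(req: dict[str, Any]) -> dict[str, Any]:
    # Messages API style: `system` is top-level and `max_tokens` is required.
    body: dict[str, Any] = {
        "model": req["model"],
        "max_tokens": req.get("max_tokens", 1024),
        "messages": req["messages"],
    }
    if req.get("system"):
        body["system"] = req["system"]
    return body


ADAPTERS = {"openai": to_openai, "anthropic": to_anthropic}

# Hypothetical gateway-side config: the only thing you edit to switch models.
ROUTE_CONFIG = {"summarize": {"provider": "anthropic", "model": "claude-3-opus"}}


def build_upstream_request(task: str, system: str, user_text: str) -> tuple[str, dict[str, Any]]:
    route = ROUTE_CONFIG[task]
    # The application-facing request never changes shape.
    unified = {
        "model": route["model"],
        "system": system,
        "messages": [{"role": "user", "content": user_text}],
    }
    return route["provider"], ADAPTERS[route["provider"]](unified)


provider, body = build_upstream_request("summarize", "Be brief.", "Summarize this memo.")
print(provider, body["system"])  # anthropic Be brief.
```

Pointing `"summarize"` at `{"provider": "openai", "model": "gpt-4"}` changes the upstream payload without touching the code that calls `build_upstream_request`.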

It even includes a Load Balancer for LLMs. This is brilliant. It lets you distribute traffic across multiple models or deployments, ensuring high availability and preventing you from hitting rate limits on a single provider. It’s like having a universal remote for your entire AI stack.
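One common way to spread traffic across deployments is smooth weighted round-robin, the variant nginx uses for weighted upstreams. The sketch below assumes that algorithm (APIPark may well use something different) and skips any deployment flagged unhealthy, for example after it starts returning rate-limit errors. The deployment names and weights are made up.

```python
from dataclasses import dataclass


@dataclass
class Deployment:
    name: str
    weight: int
    healthy: bool = True
    current: int = 0  # running score used by smooth weighted round-robin


class WeightedBalancer:
    def __init__(self, deployments: list[Deployment]):
        self.deployments = deployments

    def pick(self) -> Deployment:
        # Only healthy deployments take part in this round.
        live = [d for d in self.deployments if d.healthy]
        if not live:
            raise RuntimeError("no healthy deployments")
        total = sum(d.weight for d in live)
        for d in live:
            d.current += d.weight              # everyone earns their weight
        best = max(live, key=lambda d: d.current)
        best.current -= total                  # the winner pays back the total
        return best


lb = WeightedBalancer([
    Deployment("openai-east", weight=3),
    Deployment("azure-west", weight=1),
])
print([lb.pick().name for _ in range(4)])
# ['openai-east', 'openai-east', 'azure-west', 'openai-east']
```

The "smooth" part is why the lighter deployment is interleaved rather than served in a burst: picks follow the 3:1 weights without sending three requests in a row to the same provider every cycle.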

Keeping Your Wallet Happy: Cost Control and Optimization

Let’s talk about the elephant in the room: cost. LLM APIs can get expensive, fast. An unmonitored script can accidentally rack up thousands of dollars in bills overnight. APIPark addresses this head-on with some really smart features.

You get fine-grained traffic control and quota management. You can set hard limits on a per-user or per-key basis to prevent overuse. The real-time traffic monitoring gives you a clear dashboard of who is using what, and how much it's costing you.
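Under the hood, a per-key quota comes down to counting usage per key per time window and refusing requests that would push past the limit. This is a minimal fixed-window token-budget sketch; the `TokenQuota` class, the one-day window, and the key name are assumptions for illustration. A real gateway would keep these counters in shared storage rather than in one process's memory.

```python
import time
from collections import defaultdict
from typing import Optional


class TokenQuota:
    def __init__(self, limits: dict[str, int], window_seconds: int = 86_400):
        self.limits = limits                 # api key -> tokens allowed per window
        self.window = window_seconds
        self.used = defaultdict(int)         # (key, window index) -> tokens used

    def try_consume(self, api_key: str, tokens: int, now: Optional[float] = None) -> bool:
        now = time.time() if now is None else now
        bucket = (api_key, int(now // self.window))   # fixed window, e.g. one day
        limit = self.limits.get(api_key, 0)           # unknown keys get no budget
        if self.used[bucket] + tokens > limit:
            return False                              # reject: would exceed quota
        self.used[bucket] += tokens
        return True


quota = TokenQuota({"intern-script": 10_000})
print(quota.try_consume("intern-script", 8_000, now=0))   # True
print(quota.try_consume("intern-script", 5_000, now=10))  # False: 13,000 > 10,000
```

The check runs before the request is forwarded, which is the point: the runaway overnight script gets rejections instead of a bill.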

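But my favorite feature here is the caching strategy. It can use semantic caching to store the results of common prompts. Unlike a plain cache, which only helps when the prompt text matches exactly, a semantic cache matches prompts by meaning, so slightly different phrasings of the same question can still hit it. If five different users ask the same complex question, the model only has to run once, and the gateway serves the cached result to the other four. This alone could lead to massive cost savings and speed improvements.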
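The difference from an ordinary cache is the lookup: instead of requiring an exact string match, a semantic cache compares prompt vectors and reuses an answer when they're similar enough. In this sketch a toy bag-of-words vector stands in for a real embedding model so it runs with no dependencies; the `SemanticCache` class and the 0.8 cosine threshold are assumptions, not APIPark settings.

```python
import math
import re
from collections import Counter
from typing import Callable, Optional


def embed(text: str) -> Counter:
    # Toy stand-in for an embedding model: lowercase word counts.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class SemanticCache:
    def __init__(self, call_model: Callable[[str], str], threshold: float = 0.8):
        self.call_model = call_model
        self.threshold = threshold           # how similar counts as "the same question"
        self.entries: list[tuple[Counter, str]] = []
        self.model_calls = 0

    def ask(self, prompt: str) -> str:
        vec = embed(prompt)
        # Find the most similar cached prompt above the threshold.
        best: Optional[tuple[float, str]] = None
        for cached_vec, answer in self.entries:
            score = cosine(vec, cached_vec)
            if score >= self.threshold and (best is None or score > best[0]):
                best = (score, answer)
        if best is not None:
            return best[1]
        # Cache miss: pay for one model call and remember the result.
        self.model_calls += 1
        answer = self.call_model(prompt)
        self.entries.append((vec, answer))
        return answer


cache = SemanticCache(lambda p: f"answer to: {p}")
for q in [
    "What is our refund policy for annual plans?",
    "what is our refund policy for annual plans",
    "What is our refund policy for annual plans?",
    "What's our refund policy for annual plans?",
    "What is the refund policy for annual plans?",
]:
    cache.ask(q)
print(cache.model_calls)  # 1
```

The threshold is the key design choice: set it too low and a user can get a cached answer to a different question; set it too high and rephrased questions miss the cache.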

Fort Knox for Your APIs: Security and Governance

When you're piping sensitive company data through third-party models, security is not optional. APIPark provides a centralized point for access control and security. A standout feature is Data Masking, which can automatically identify and redact sensitive information like personally identifiable information (PII) before it ever leaves your infrastructure. This is a huge deal for compliance in industries like healthcare or finance.
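In its simplest form, masking is a set of detectors run over the prompt before it's forwarded. This sketch uses regular expressions for email addresses, US Social Security numbers, and phone numbers. The patterns are my own illustrative assumptions, not APIPark's rules, and regexes only catch well-formed cases, so real deployments typically pair them with smarter entity detection.

```python
import re

# Illustrative patterns only; applied in order, each match replaced by a label.
PII_PATTERNS = [
    ("EMAIL", re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")),
    ("SSN", re.compile(r"\b\d{3}-\d{2}-\d{4}\b")),  # US Social Security number
    ("PHONE", re.compile(r"(?<!\d)(?:\+\d{1,3}[ .-]?)?\(?\d{3}\)?[ .-]?\d{3}[ .-]?\d{4}(?!\d)")),
]


def mask_pii(text: str) -> str:
    # Redact before the prompt leaves your network.
    for label, pattern in PII_PATTERNS:
        text = pattern.sub(f"[{label}]", text)
    return text


print(mask_pii("Contact jane.doe@example.com or +1 555-123-4567; SSN 123-45-6789."))
# Contact [EMAIL] or [PHONE]; SSN [SSN].
```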

Not Just a Gateway, but a Full-Blown Developer Portal

This is where APIPark goes a step further. It isn't just a backend tool. It provides an API Open Portal, which is essentially a storefront for your internal or external APIs. You can document them, provide access, and even set up API Billing. This transforms the gateway from a simple utility into a platform for creating and monetizing your own AI-powered services.
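Because every call already flows through the gateway, API billing is mostly metering: attribute tokens to each subscriber and multiply by a price. The price list, API names, and per-1K-token pricing below are hypothetical, not APIPark's billing model; `Decimal` is used so money amounts don't pick up floating-point rounding errors.

```python
from collections import defaultdict
from decimal import Decimal

# Hypothetical price list: USD per 1,000 tokens for each published API.
PRICE_PER_1K = {"summarize-api": Decimal("0.020"), "translate-api": Decimal("0.005")}

usage = defaultdict(int)  # (subscriber, api) -> tokens consumed


def record_call(subscriber: str, api: str, tokens: int) -> None:
    # The gateway would call this for every proxied request.
    usage[(subscriber, api)] += tokens


def invoice(subscriber: str) -> Decimal:
    total = Decimal("0")
    for (sub, api), tokens in usage.items():
        if sub == subscriber:
            total += PRICE_PER_1K[api] * tokens / 1000
    return total.quantize(Decimal("0.01"))  # round to cents


record_call("acme", "summarize-api", 150_000)
record_call("acme", "translate-api", 40_000)
print(invoice("acme"))  # 3.20
```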

The Two Flavors: Community vs. Enterprise

Of course, there's always a catch with open-source, right? APIPark comes in two editions: Community and Enterprise. It's important to understand the difference.

- The Community Edition: This is the free, open-source version. It's described as fast, lightweight, and suitable for SMEs with internal APIs. It gives you the basic gateway functionality, user management, and the portal. It's a fantastic way to get your feet wet and manage a smaller-scale operation.

- The Enterprise Edition: This is the paid, commercially supported version for larger companies. This is where you get all the advanced goodies: plugin extensions, comprehensive data statistics, advanced security and governance features, and, of course, professional support.

Here’s a quick breakdown of what you get—and what you don't get—with the Community Edition:

| Feature Group | Available in Community Edition? | Notes |
|---|---|---|
| Service Publishing & Subscription | ✔️ | Core functionality is there. |
| User, Permission, and Role Management | ✔️ | You can manage who has access. |
| Plugin Extensions | ❌ | This is a big one. Extensibility is Enterprise-only. |
| Advanced API Governance | ❌ | Full data processing and stats are in the paid tier. |
| Support and Services | ❌ | Community support only, no official SLA. |

My Unfiltered Thoughts: The Good, The Bad, and The "It Depends"

Alright, let's get real. On paper, APIPark looks fantastic. The open-source foundation is a massive win in my book. The multi-LLM management and cost-control features are solving a very real, very current problem that a lot of companies are struggling with right now.

However, you have to go in with your eyes open. The Community Edition is, to be blunt, a bit of a teaser. It's functional, yes, but many of the most powerful features (plugin extensions and advanced governance, the ones that truly make a gateway a strategic asset) are locked behind the Enterprise paywall. This is a classic open-core model, and there's nothing wrong with it, but you need to be aware that for serious, production-grade, customer-facing systems, you're probably going to be talking to their sales team.

There's also the question of technical expertise. While they claim a "5-minute" setup with a single command line (likely a Docker Compose or similar script), customizing and maintaining any piece of infrastructure requires some technical know-how. This isn't a plug-and-play SaaS for a non-technical marketing team; it's a powerful tool for developers and operations teams.

The Bottom Line: Is APIPark a Game-Changer?

So, is APIPark the solution to all our AI integration woes? I think it's one of the most promising ones I've seen in a while, especially in the open-source space. It understands the core problems: the chaos of multiple models, the terror of runaway costs, and the need for tight security.

It provides a robust, well-thought-out platform for solving those problems. For a startup or an SME looking to bring some order to their internal AI experiments, the Community Edition is a no-brainer to try out. For a larger enterprise that sees AI as a strategic part of its future, the Enterprise Edition looks like a very serious contender for a central piece of its infrastructure.

It's not a magic wand, but it’s a damn good map and compass for navigating the wild west of generative AI. And right now, that's incredibly valuable.

Frequently Asked Questions about APIPark

1. What is an AI Gateway, really?

Think of it as a single front door for all your AI models. Instead of connecting to dozens of different services, your apps connect to one place. The gateway handles routing, security, caching, and logging, which simplifies development and cuts costs.

2. Is APIPark completely free?

APIPark has a free, open-source Community Edition with a solid set of core features. However, for more advanced capabilities like plugin extensions, comprehensive analytics, and enterprise-grade support, you'll need the paid Enterprise Edition.

3. Who should use the Enterprise Edition?

Large and medium-sized businesses that rely heavily on APIs, need advanced security and compliance features, require detailed data processing and statistics, and want dedicated technical support would be the ideal customers for the Enterprise Edition.

4. Can I connect my own fine-tuned or custom LLMs?

Yes, one of the major advantages of a gateway like APIPark is its flexibility. You can integrate connections to major providers as well as your own internally hosted or custom models, putting everything under one management umbrella.

5. How exactly does APIPark help me save money?

It helps in several ways: 1) Caching common requests so you don't pay for the same API call multiple times. 2) Setting quotas and rate limits to prevent accidental overspending. 3) Providing detailed analytics so you can see which models provide the best cost-to-performance ratio and optimize your usage.

6. Is APIPark difficult to set up?

The platform promotes a very simple, one-command-line deployment, likely using containers like Docker. While this initial setup is easy for someone with technical skills, deeper customization and ongoing maintenance will require some dev or DevOps knowledge. It’s not a no-code tool.

Final Thoughts

The move toward AI gateways is a natural and necessary maturation of the AI development market. APIPark is a strong, credible player in this space. If you're feeling the pain of LLM API management, it's definitely worth spinning up the Community Edition and taking it for a test drive. You might just find the sanity you’ve been looking for.

References and Sources

- APIPark Official Website: https://apipark.com

- APIPark Installation & Editions: https://apipark.com/install