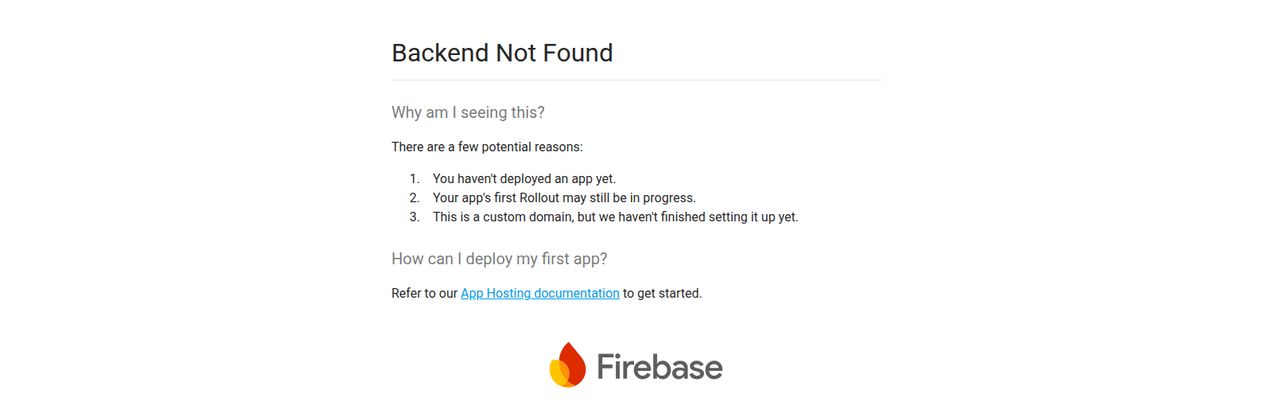

We’ve all been there. That sinking feeling. You’ve been coding for hours, you push your new app, and you’re greeted with… nothing. Or worse, that dreaded “Backend Not Found” screen. It’s the digital equivalent of a dial tone, a silent scream into the void of failed deployments. It's a universal pain for anyone who's ever tried to get a project off the ground.

Now, imagine that frustration multiplied by about a thousand. That’s what it can feel like trying to deploy and manage your own Large Language Models (LLMs). The setup, the GPU management, the scaling issues—it's a massive undertaking. A real monster of a task that can stop even the most exciting AI projects dead in their tracks.

For years, the solution was to just plug into one of the big players, like OpenAI. And don't get me wrong, that's fine. But what if you want more flexibility? What if you want to play with Anthropic's latest Claude model, or Meta's shiny new Llama 3.2, without signing your life away or building a server farm in your garage? That's exactly the problem I stumbled on a solution for, and it's called AMOD (AI Models On Demand).


So, What Exactly is AMOD?

Let's cut through the marketing fluff. AMOD is like a master key for a whole building of different LLMs. It’s a platform that lets you pick from a list of top-tier AI models, press a button (okay, it’s a bit more than one button), and get a live, working API endpoint for it within minutes. No wrangling servers. No complex configuration files that make you want to tear your hair out.

It takes the gnarliest part of building with AI—the infrastructure—and just handles it. You get to focus on the fun stuff: actually building your application. AMOD supports some of the best models out there right now, including titans from Meta (Llama), Anthropic (Claude), Mistral, and even Amazon's own Titan models. You choose the model, it spins up an endpoint that scales automatically, and you start making calls. Simple as that.
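
To make "start making calls" a bit more concrete, here is a minimal Python sketch of what a call to a deployed endpoint might look like. It assumes, without confirmation from AMOD's documentation, that the endpoint accepts an OpenAI-style chat completion payload authenticated with a bearer token. The URL, key, and model ID are placeholders, and `build_chat_request` and `extract_reply` are hypothetical helper names. It sticks to the standard library and builds the request without sending it.

```python
import json
import urllib.request

# Placeholders only: swap in the endpoint URL, key, and model ID from your
# own deployment. None of these values come from AMOD's documentation.
ENDPOINT_URL = "https://example-endpoint.invalid/v1/chat/completions"
API_KEY = "YOUR_API_KEY"
MODEL_ID = "llama-3.2"


def build_chat_request(url: str, api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def extract_reply(response_body: bytes) -> str:
    """Pull the assistant's text out of an OpenAI-style JSON response."""
    data = json.loads(response_body)
    return data["choices"][0]["message"]["content"]


if __name__ == "__main__":
    req = build_chat_request(ENDPOINT_URL, API_KEY, MODEL_ID, "Say hello in five words.")
    # Actually sending it needs a real endpoint and key:
    # with urllib.request.urlopen(req, timeout=30) as resp:
    #     print(extract_reply(resp.read()))
    print(req.get_method(), req.full_url)
```

Keeping request building separate from sending makes the payload easy to inspect and test before any tokens are spent.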

The Features That Actually Matter

I’ve seen a lot of these “AI made easy” platforms pop up over the last year. Some are great, others are… less so. Here’s what made me take a closer look at AMOD and why I think it stands out from the crowd.

An AI Buffet: Pick Your Flavor

My biggest gripe with getting locked into a single AI ecosystem is the lack of choice. Sometimes, you need a model that's a creative writing genius, like Claude 3.5 Sonnet. Other times, you need a lean, fast coding assistant. With AMOD, you aren't forced to choose one. It’s like an all-you-can-eat buffet of state-of-the-art models. This flexibility is, in my opinion, its killer feature. You can test different models for your specific use case without a massive commitment, finding the one that gives you the best bang for your buck.
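
If you want to run that kind of side-by-side test, a tiny harness helps. The sketch below is hypothetical: `send` stands in for whatever function calls your deployed endpoint and is assumed to return the reply text and a total token count, and the model IDs in the demo are placeholders. The cost column simply applies the Standard plan's flat $0.005 per 1,000 tokens from the pricing section.

```python
import time
from typing import Callable, Dict, List, NamedTuple

# Standard (pay-as-you-go) rate from the pricing table: USD per 1,000 tokens.
PRICE_PER_1K_TOKENS = 0.005


class Trial(NamedTuple):
    model: str
    reply: str
    tokens: int
    seconds: float
    cost_usd: float


def compare_models(
    prompt: str,
    models: List[str],
    send: Callable[[str, str], Dict],
) -> List[Trial]:
    """Send one prompt to each model and record reply, tokens, latency, and cost.

    `send(model, prompt)` is a hypothetical stand-in for your own API call; it
    must return a dict like {"reply": str, "total_tokens": int}.
    """
    trials = []
    for model in models:
        start = time.perf_counter()
        result = send(model, prompt)
        elapsed = time.perf_counter() - start
        tokens = result["total_tokens"]
        cost = tokens / 1000 * PRICE_PER_1K_TOKENS
        trials.append(Trial(model, result["reply"], tokens, elapsed, cost))
    # Cheapest first, so the cost trade-off is easy to eyeball.
    return sorted(trials, key=lambda t: t.cost_usd)


if __name__ == "__main__":
    # Hypothetical model IDs and a fake sender, so the sketch runs offline.
    def fake_send(model: str, prompt: str) -> Dict:
        return {"reply": f"[{model}] ok", "total_tokens": 100 * len(model)}

    for t in compare_models("Summarize this ticket.", ["llama-3.2", "claude-3.5-sonnet"], fake_send):
        print(f"{t.model:20} {t.tokens:6d} tokens  ${t.cost_usd:.5f}  {t.seconds * 1000:.2f} ms")
```

Cost is only one axis, of course; you would still read the replies themselves to judge quality for your use case.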

Deployment That Doesn't Involve Crying

I once spent a solid week trying to get a moderately complex open-source model running on my own cloud instance. It was a nightmare of dependency conflicts and cryptic error messages. AMOD turns that week of pain into about five minutes of clicking. This is a game-changer. It means small teams and even solo developers can now wield the same AI power as a massive corporation, without needing a dedicated DevOps team on payroll. The speed from idea to a working AI integration is just… chef’s kiss.

The OpenAI Escape Hatch

This one is huge. A lot of developers have built their entire stack on OpenAI's API structure. Moving away from it can feel like a monumental task of refactoring code. AMOD seems to get this. They've designed their platform to make migrating from OpenAI as painless as possible. This lowers the barrier to entry for trying new models and gives developers an 'out' if they want to diversify their AI toolkit. It's a smart, user-focused decision.
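
If AMOD's endpoints are OpenAI-compatible (the migration story above suggests something like that, but the exact API format is an assumption here), switching providers can come down to configuration rather than refactoring. In this sketch the OpenAI base URL is OpenAI's public one; the AMOD base URL, the `AMOD_API_KEY` and `LLM_PROVIDER` environment variable names, and the AMOD model ID are placeholders.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class ProviderConfig:
    base_url: str
    api_key_env: str  # name of the environment variable holding the key
    model: str


# The AMOD entry is hypothetical: substitute the base URL and model ID
# your own deployment gives you.
PROVIDERS = {
    "openai": ProviderConfig("https://api.openai.com/v1", "OPENAI_API_KEY", "gpt-4o-mini"),
    "amod": ProviderConfig("https://your-amod-endpoint.invalid/v1", "AMOD_API_KEY", "llama-3.2"),
}


def chat_completions_url(cfg: ProviderConfig) -> str:
    """OpenAI-style APIs expose chat completions at <base_url>/chat/completions."""
    return cfg.base_url.rstrip("/") + "/chat/completions"


def active_provider() -> ProviderConfig:
    """Pick the provider from an environment variable so switching needs no code change."""
    return PROVIDERS[os.environ.get("LLM_PROVIDER", "openai")]


if __name__ == "__main__":
    cfg = active_provider()
    print(cfg.model, chat_completions_url(cfg))
```

With the official `openai` Python package, the same idea is passing `base_url=` and `api_key=` when constructing the `OpenAI` client, provided the target endpoint really speaks the same protocol.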

Let's Talk Money: A Look at AMOD's Pricing

Alright, this is the part everyone always scrolls to first. How much is this magic going to cost? The pricing structure is actually pretty straightforward and caters to a few different types of users. It's a breath of fresh air compared to some of the confusing credit-based systems I've seen.

Here's a quick breakdown as I see it:

| Plan | Price | Who It's For |
|---|---|---|
| Hobbyist | $19.99 / month | This is your entry point. Perfect for students, indie hackers, or if you're just messing around with a personal project. You get access to a solid set of models like Llama 3 and Claude Haiku. |
| Pro | $49.99 / month | This feels like the sweet spot for small businesses, freelancers, and startups. You get everything in Hobbyist plus the latest and greatest models like Llama 3.2 and Claude 3.5 Sonnet, plus unlimited model deployments. A pretty solid deal. |
| Standard | $0.005 / 1000 tokens | The pay-as-you-go option. This is fantastic if your usage is unpredictable. You only pay for what you actually use, which can be much more cost-effective than a fixed monthly fee if your traffic comes in bursts. The rate is competitive too. |
| Enterprise | Custom Pricing | For the big guns. If you need custom models, dedicated support, and fixed billing, this is your tier. You'll have to talk to their sales team for this one. |
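
To see when pay-as-you-go beats a flat fee, the arithmetic is simple. At $0.005 per 1,000 tokens, the $19.99 Hobbyist fee buys the same as roughly 4 million tokens a month, and the $49.99 Pro fee roughly 10 million. A single request with 1,200 input tokens and 300 output tokens costs 1,500 × $0.005 / 1,000 = $0.0075. The sketch below just encodes that math; it compares price only and ignores the fact that the plans differ in which models they include.

```python
# Figures from the pricing table above (USD).
STANDARD_RATE_PER_1K = 0.005  # per 1,000 tokens, input and output combined
HOBBYIST_MONTHLY = 19.99
PRO_MONTHLY = 49.99


def standard_cost(input_tokens: int, output_tokens: int) -> float:
    """Pay-as-you-go cost of one request; input and output tokens both count."""
    return (input_tokens + output_tokens) / 1000 * STANDARD_RATE_PER_1K


def break_even_tokens(monthly_fee: float) -> float:
    """Monthly token volume at which pay-as-you-go equals a flat monthly fee."""
    return monthly_fee / STANDARD_RATE_PER_1K * 1000


if __name__ == "__main__":
    print(f"one request: ${standard_cost(1200, 300):.4f}")
    for name, fee in [("Hobbyist", HOBBYIST_MONTHLY), ("Pro", PRO_MONTHLY)]:
        print(f"{name}: break-even at about {break_even_tokens(fee):,.0f} tokens/month")
```

If your expected monthly volume sits well below the break-even figure, the Standard plan is likely the cheaper option on price alone.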

Both the Hobbyist and Pro plans come with a 14-day free trial, so you can kick the tires before you commit. I always appreciate a try-before-you-buy approach.

The Not-So-Shiny Bits (Because Nothing's Perfect)

I wouldn’t be doing my job if I didn't point out a few things to keep in mind. AMOD is fantastic, but it's not a magical unicorn.

First, while it simplifies deployment, you still need some basic technical chops to integrate an API into your application. If you don't know what an API call is, you'll have a bit of a learning curve. Second, the pay-as-you-go plan is great, but you need to monitor your usage. A runaway script could lead to a surprise bill, so be mindful. Finally, if you're dreaming of running your own fine-tuned, custom model, you'll need to be on the Enterprise plan. That's pretty standard, but it's good to know upfront.
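
One way to keep a runaway script from producing that surprise bill is a client-side spend cap. This is a hypothetical local safeguard, not an AMOD feature: `SpendGuard` checks a worst-case token estimate before each request and records the actual count afterwards, using the $0.005 per 1,000 tokens Standard rate from the pricing table.

```python
class BudgetExceeded(RuntimeError):
    """Raised when a request could push spend past the cap."""


class SpendGuard:
    """Hypothetical client-side spend cap for pay-as-you-go usage.

    A local safety net you would wire around your own API calls;
    it is not an AMOD feature.
    """

    def __init__(self, cap_usd: float, rate_per_1k: float = 0.005) -> None:
        self.cap_usd = cap_usd
        self.rate_per_1k = rate_per_1k  # Standard plan rate, USD per 1,000 tokens
        self.spent_usd = 0.0

    def cost(self, tokens: int) -> float:
        return tokens / 1000 * self.rate_per_1k

    def check(self, max_tokens: int) -> None:
        """Call before a request with an upper bound (prompt + max output tokens)."""
        projected = self.spent_usd + self.cost(max_tokens)
        if projected > self.cap_usd:
            raise BudgetExceeded(f"projected ${projected:.4f} exceeds cap ${self.cap_usd:.2f}")

    def record(self, tokens_used: int) -> None:
        """Call after a request with the token count the API reports."""
        self.spent_usd += self.cost(tokens_used)


if __name__ == "__main__":
    guard = SpendGuard(cap_usd=0.012)
    for call in range(1, 10):
        try:
            guard.check(1_000)  # worst case for this request
        except BudgetExceeded as err:
            print(f"stopping before call {call}: {err}")
            break
        guard.record(1_000)  # in real code: the usage reported by the API
```

Checking before the call matters because once a request is sent, its tokens are already billed; recording afterwards keeps the running total accurate.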

So, Is AMOD Right For You?

If you're a developer, a startup, or a business that wants to infuse AI into your products without the massive overhead of traditional LLM deployment, then yeah, I think AMOD is absolutely worth a look. It strikes a fantastic balance between power, flexibility, and ease of use.

It’s for the builders who would rather spend their time creating amazing user experiences than debugging server configurations. It’s for the businesses that want to experiment with different AI models to find the perfect fit for their customers. It democratizes access to some seriously powerful tech, and that's something I can always get behind.

Final Thoughts

In a world where AI is moving at a breakneck pace, tools like AMOD are what keep the field accessible and exciting. They remove the boring, frustrating barriers and let more people participate in the revolution. Instead of seeing that soul-crushing 'Backend Not Found' error, you get to see your AI-powered idea come to life. And honestly, what’s more satisfying than that?

Frequently Asked Questions about AMOD

- 1. Can I try AMOD for free?

- Yes! Both the Hobbyist and Pro plans offer a 14-day free trial, giving you plenty of time to test out the platform and see if it fits your needs before spending any money.

- 2. What Large Language Models does AMOD support?

- AMOD supports a great range of models from leading providers. This includes Meta's Llama series (like Llama 3 and 3.2), Anthropic's Claude models (like Claude Haiku and Claude 3.5 Sonnet), Mistral, and Amazon Titan.

- 3. Is AMOD a good alternative to using the OpenAI API directly?

- It can be, especially if you want flexibility. AMOD allows you to easily switch between different models from different providers, which you can't do with OpenAI alone. They also simplify the migration process, making it a strong contender if you're looking to diversify.

- 4. How does the pay-as-you-go 'Standard' plan work?

- The Standard plan is usage-based. You pay a set rate of $0.005 for every 1,000 tokens (both input and output) that you process through the API. This is ideal for applications with variable or unpredictable traffic.

- 5. Do I need to be an expert developer to use AMOD?

- Not an expert, but some basic knowledge is helpful. You should be comfortable with the concept of APIs and know how to make API calls from your chosen programming language. The platform handles the difficult server-side deployment for you.

- 6. Can I deploy my own custom-trained models on AMOD?

- Yes, but this feature is reserved for the Enterprise plan. If you have a custom or fine-tuned model you need to deploy, you would need to contact their sales team to discuss an enterprise solution.